a883fe94dfafffd359b121a8628ab4db.ppt

- Number of slides: 107

9.94 The cognitive science of intuitive theories J. Tenenbaum, T. Lombrozo, L. Schulz, R. Saxe

Plan for the class • Goal: – Introduce you to an exciting field of research unfolding at MIT and in the broader world.


Plan for the class • An integrated series of talks and discussions – Today: Josh Tenenbaum (MIT), “Computational models of theory-based inductive inference” – Tuesday: Tania Lombrozo (Harvard/Berkeley) “Explanation in intuitive theories” – Wednesday: Rebecca Saxe (Harvard/MIT), “Understanding other minds” – Thursday: Laura Schulz (MIT), “Theories and evidence” – Friday: Special Mystery Guest, “When theories fail”


Plan for the class • Requirements for credit (pass/fail, 3 units) – Attend the classes – Participate in discussions – Take-home quiz: • Emailed to you this weekend (after the class) • Due back to me by email on Wednesday, Feb. 1. If you are not registered on the list below, make sure to register and send me an email message at: jbt@mit.edu

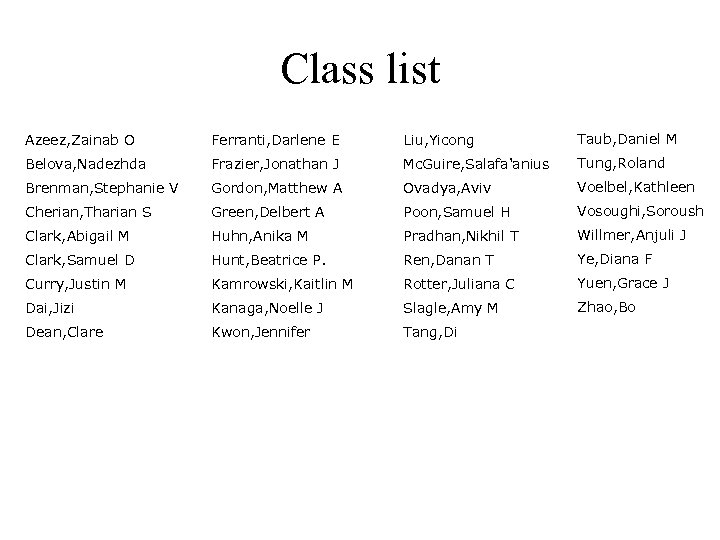

Class list Azeez, Zainab O Ferranti, Darlene E Liu, Yicong Taub, Daniel M Belova, Nadezhda Frazier, Jonathan J McGuire, Salafa'anius Tung, Roland Brenman, Stephanie V Gordon, Matthew A Ovadya, Aviv Voelbel, Kathleen Cherian, Tharian S Green, Delbert A Poon, Samuel H Vosoughi, Soroush Clark, Abigail M Huhn, Anika M Pradhan, Nikhil T Willmer, Anjuli J Clark, Samuel D Hunt, Beatrice P. Ren, Danan T Ye, Diana F Curry, Justin M Kamrowski, Kaitlin M Rotter, Juliana C Yuen, Grace J Dai, Jizi Kanaga, Noelle J Slagle, Amy M Zhao, Bo Dean, Clare Kwon, Jennifer Tang, Di

Scheduling • Today: – Go to 4:30 (with a break in the middle)? • Friday: – An hour earlier: 1:00 – 3:00?

The big problem of intelligence How does the mind get so much out of so little?


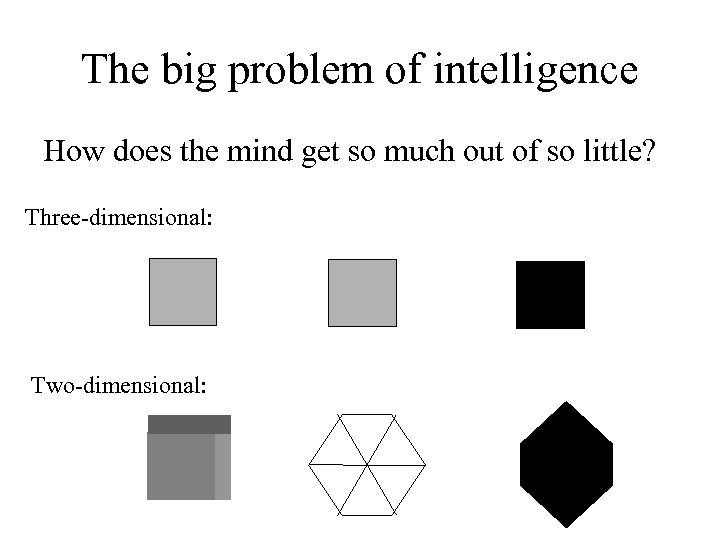

The big problem of intelligence How does the mind get so much out of so little? Three-dimensional: Two-dimensional:


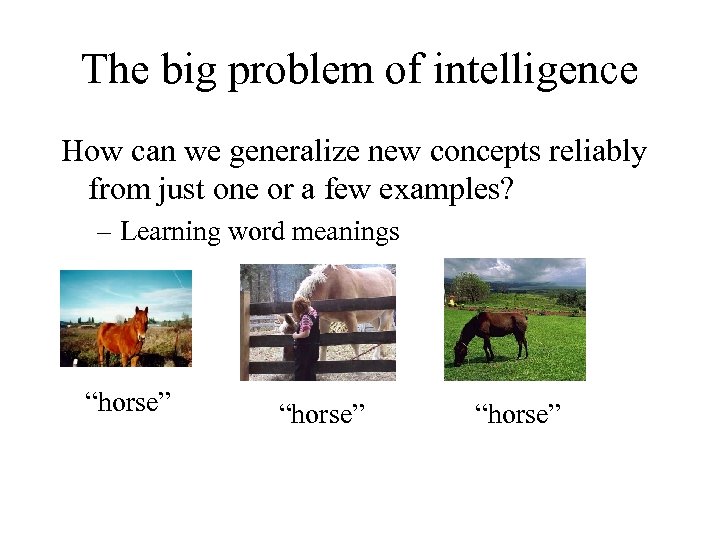

The big problem of intelligence How can we generalize new concepts reliably from just one or a few examples? – Learning word meanings “horse”


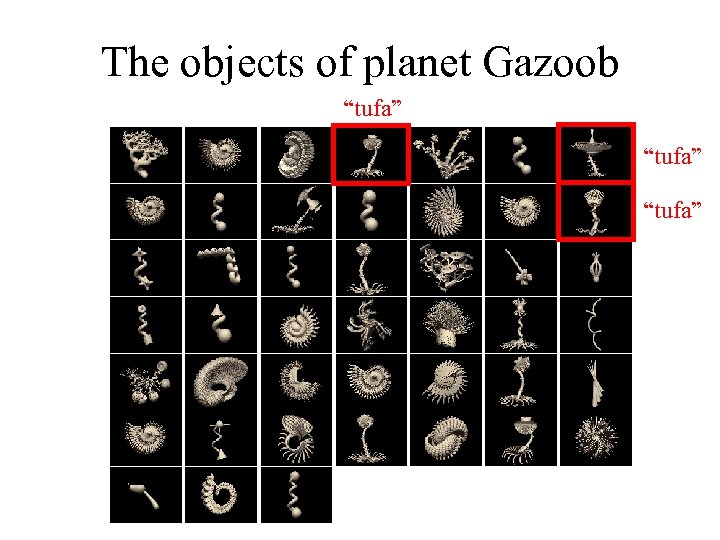

The objects of planet Gazoob “tufa”


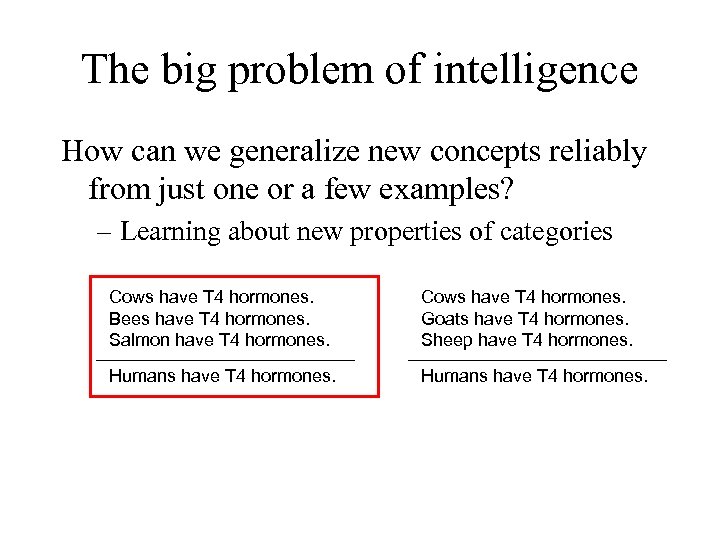

The big problem of intelligence How can we generalize new concepts reliably from just one or a few examples? – Learning about new properties of categories Cows have T4 hormones. Bees have T4 hormones. Salmon have T4 hormones. Cows have T4 hormones. Goats have T4 hormones. Sheep have T4 hormones. Humans have T4 hormones.

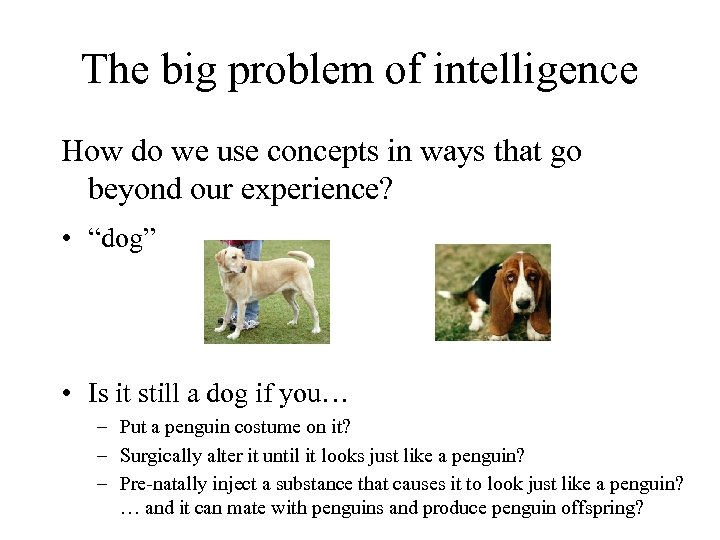

The big problem of intelligence How do we use concepts in ways that go beyond our experience? • “dog” • Is it still a dog if you… – Put a penguin costume on it? – Surgically alter it until it looks just like a penguin? – Pre-natally inject a substance that causes it to look just like a penguin? … and it can mate with penguins and produce penguin offspring?


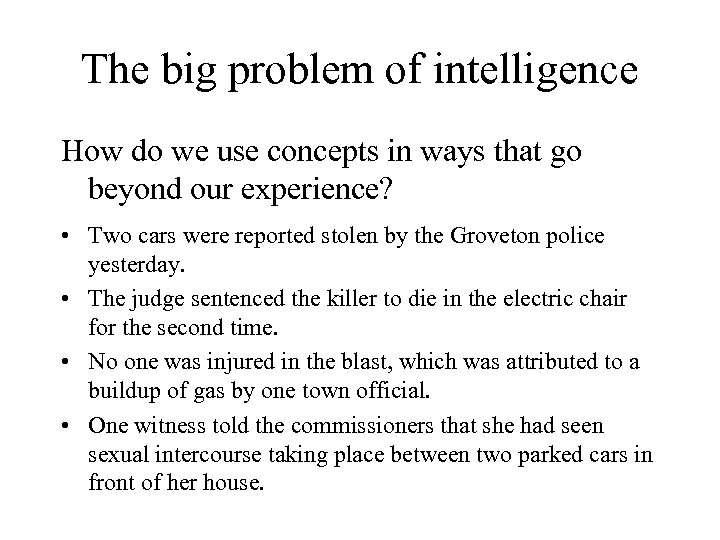

The big problem of intelligence How do we use concepts in ways that go beyond our experience? • Two cars were reported stolen by the Groveton police yesterday. • The judge sentenced the killer to die in the electric chair for the second time. • No one was injured in the blast, which was attributed to a buildup of gas by one town official. • One witness told the commissioners that she had seen sexual intercourse taking place between two parked cars in front of her house.


The big problem of intelligence How do we use concepts in ways that go beyond our experience? Consider a man named Boris. – Is the mother of Boris’s father his grandmother? – Is the mother of Boris’s sister his mother? – Is the son of Boris’s sister his son? (Note: Boris and his family were stranded on a desert island when he was a young boy.)

What makes us so smart? • Memory? • Logical inference?


What makes us so smart? • Memory? No. – The difference between a test that you can pass on rote memory and a test that shows whether you “actually learned something”. • Logical inference? No. – The difference between deductive inference and inductive inference.


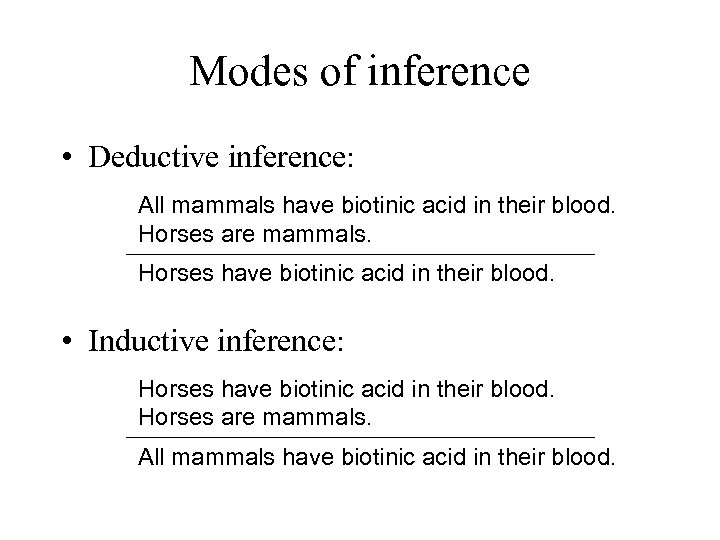

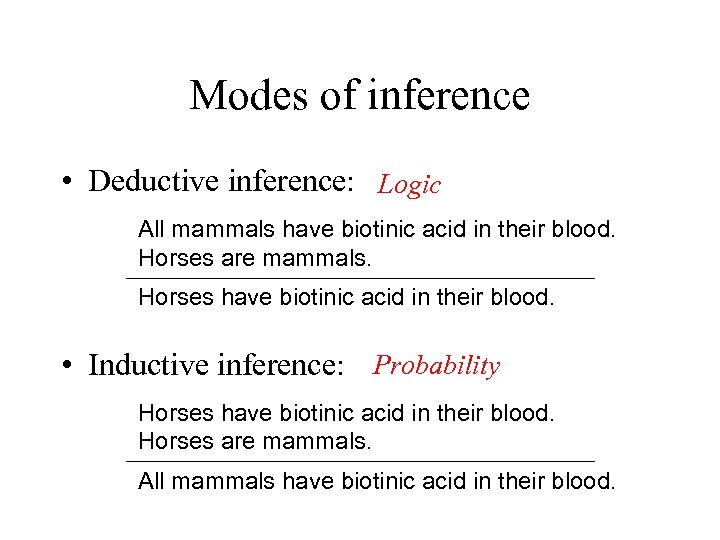

Modes of inference • Deductive inference: Premises: All mammals have biotinic acid in their blood. Horses are mammals. Conclusion: Horses have biotinic acid in their blood. • Inductive inference: Premises: Horses have biotinic acid in their blood. Horses are mammals. Conclusion: All mammals have biotinic acid in their blood.

What makes us so smart? • Intuitive theories – Systems of concepts that are in some important respects like scientific theories. – Abstract knowledge that supports prediction, explanation, exploration, and decision-making for an infinite range of situations that we have not previously encountered.


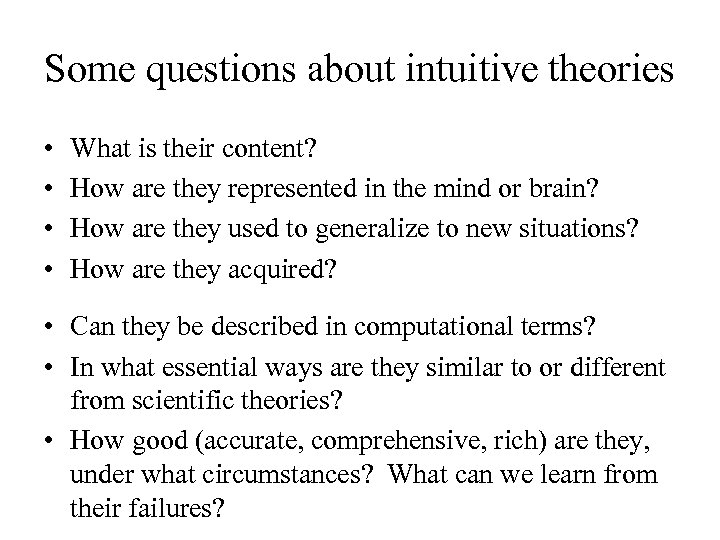

Some questions about intuitive theories • What is their content? • How are they represented in the mind or brain? • How are they used to generalize to new situations? • How are they acquired?

Some questions about intuitive theories • What is their content? • How are they represented in the mind or brain? • How are they used to generalize to new situations? • How are they acquired? • Can they be described in computational terms? • In what essential ways are they similar to or different from scientific theories? • How good (accurate, comprehensive, rich) are they, and under what circumstances? What can we learn from their failures?

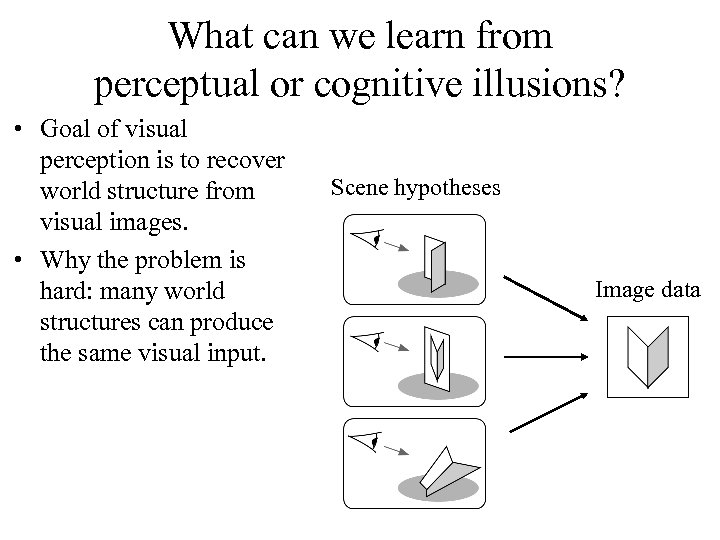

What can we learn from perceptual or cognitive illusions? • Goal of visual perception is to recover world structure from visual images. • Why the problem is hard: many world structures can produce the same visual input. [Diagram labels: scene hypotheses; image data]

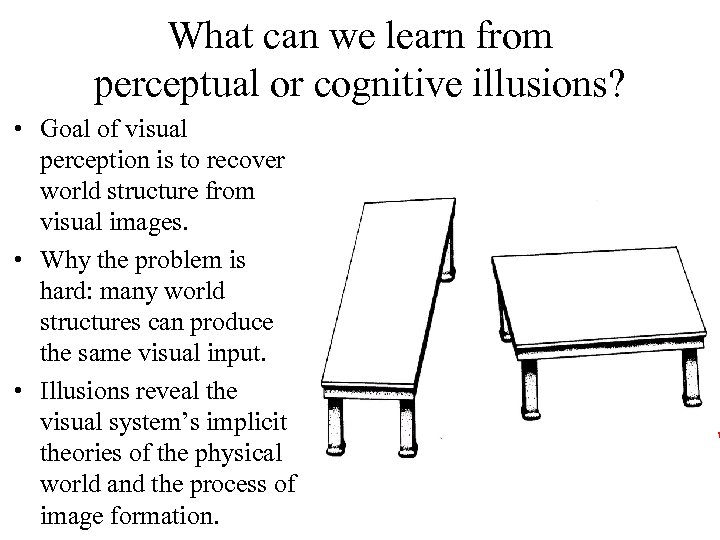

What can we learn from perceptual or cognitive illusions? • Goal of visual perception is to recover world structure from visual images. • Why the problem is hard: many world structures can produce the same visual input. • Illusions reveal the visual system’s implicit theories of the physical world and the process of image formation.


Computational models of theory-based inductive inference Josh Tenenbaum, Department of Brain and Cognitive Sciences, Computer Science and Artificial Intelligence Laboratory, MIT

Plan for today • A general framework for solving underconstrained inference problems – Bayesian inference • Applications in perception and cognition – lightness perception – predicting the future (with Tom Griffiths) – learning about properties of natural species (with Charles Kemp)


Modes of inference • Deductive inference (logic): Premises: All mammals have biotinic acid in their blood. Horses are mammals. Conclusion: Horses have biotinic acid in their blood. • Inductive inference (probability): Premises: Horses have biotinic acid in their blood. Horses are mammals. Conclusion: All mammals have biotinic acid in their blood.

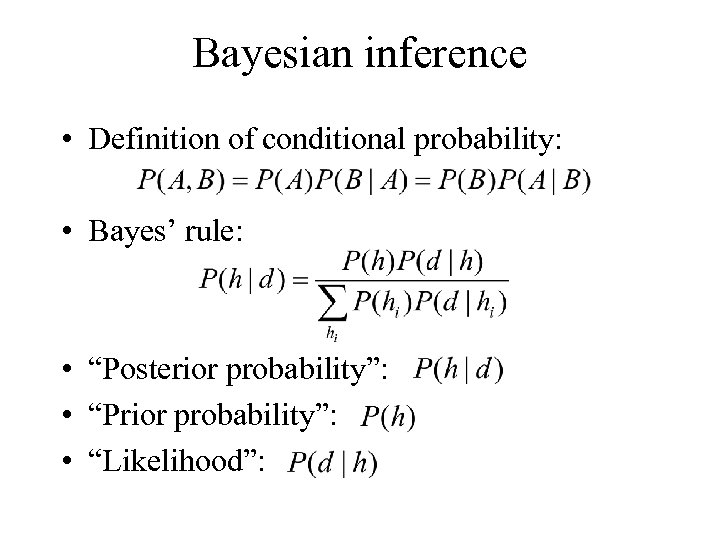

Bayesian inference • Definition of conditional probability: P(A|B) = P(A, B) / P(B) • Bayes’ rule: P(h|d) = P(d|h) P(h) / P(d) • “Posterior probability”: P(h|d) • “Prior probability”: P(h) • “Likelihood”: P(d|h)

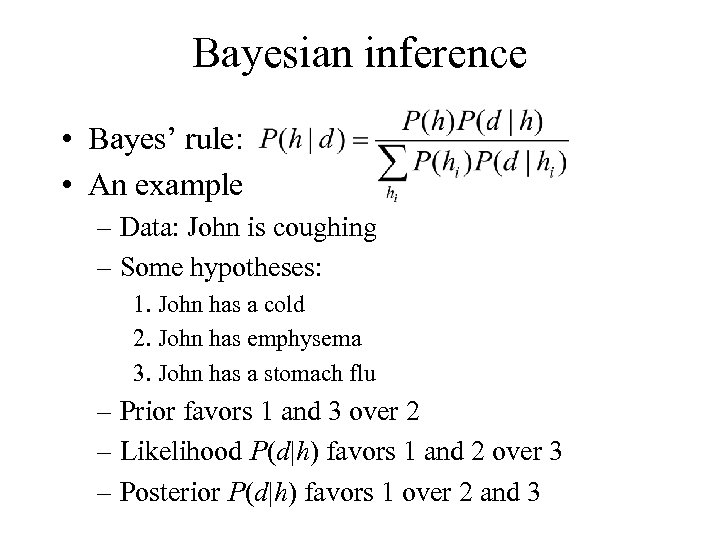

Bayesian inference • Bayes’ rule: P(h|d) = P(d|h) P(h) / P(d) • An example – Data: John is coughing – Some hypotheses: 1. John has a cold 2. John has emphysema 3. John has a stomach flu – Prior P(h) favors 1 and 3 over 2 – Likelihood P(d|h) favors 1 and 2 over 3 – Posterior P(h|d) favors 1 over 2 and 3
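The coughing example can be sketched numerically. The slide gives only the ordering of the priors and likelihoods, so every number below is an illustrative assumption, and the three hypotheses are treated as mutually exclusive and exhaustive purely to keep the normalization simple.

```python
def posterior(priors, likelihoods):
    """Bayes' rule: P(h|d) = P(d|h) P(h) / P(d), with P(d) summed over all h."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(unnormalized.values())  # P(d)
    return {h: value / evidence for h, value in unnormalized.items()}

# Illustrative numbers only (assumptions, not values from the lecture).
# Prior favors "cold" and "stomach flu" over "emphysema".
priors = {"cold": 0.45, "emphysema": 0.10, "stomach flu": 0.45}
# Likelihood P(coughing | h) favors "cold" and "emphysema" over "stomach flu".
likelihoods = {"cold": 0.8, "emphysema": 0.8, "stomach flu": 0.1}

post = posterior(priors, likelihoods)
for hypothesis, probability in sorted(post.items(), key=lambda kv: -kv[1]):
    print(f"{hypothesis}: {probability:.3f}")
```

Only "cold" scores well on both the prior and the likelihood, so it ends up with the highest posterior, matching the ordering on the slide.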

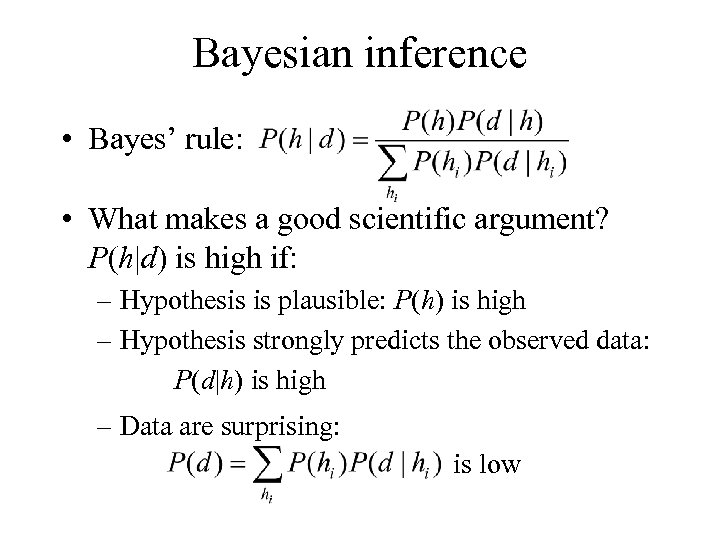

Bayesian inference • Bayes’ rule: P(h|d) = P(d|h) P(h) / P(d) • What makes a good scientific argument? P(h|d) is high if: – Hypothesis is plausible: P(h) is high – Hypothesis strongly predicts the observed data: P(d|h) is high – Data are surprising: P(d) is low

Coin flipping HHTHT HHHHH What process produced these sequences?


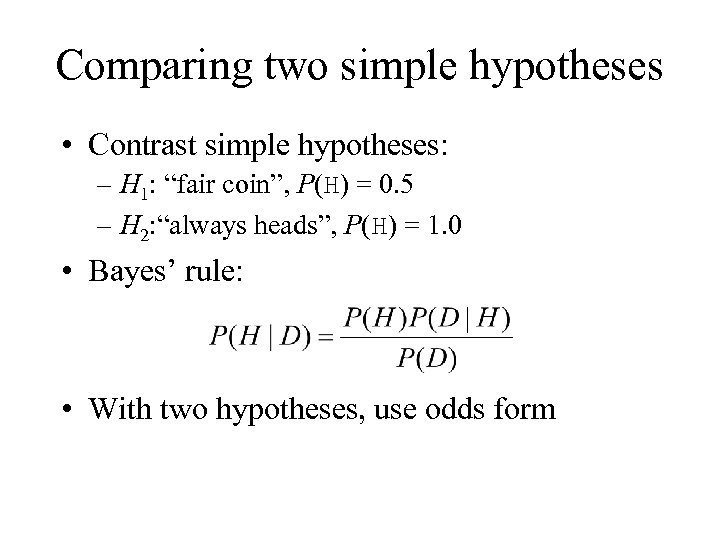

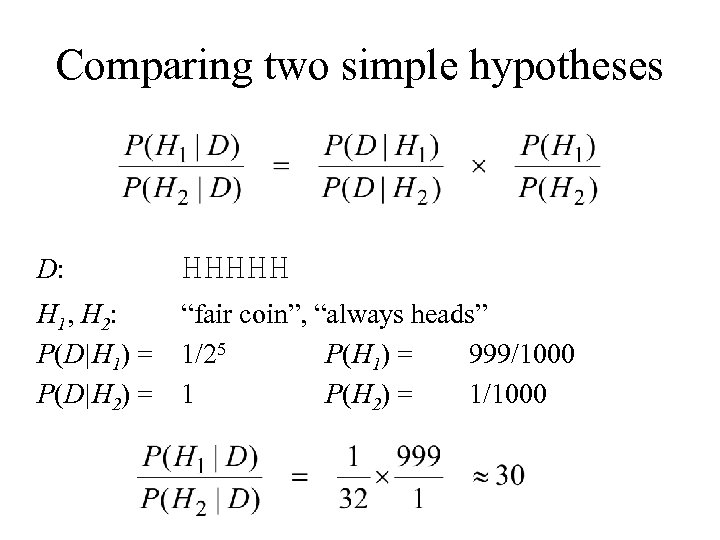

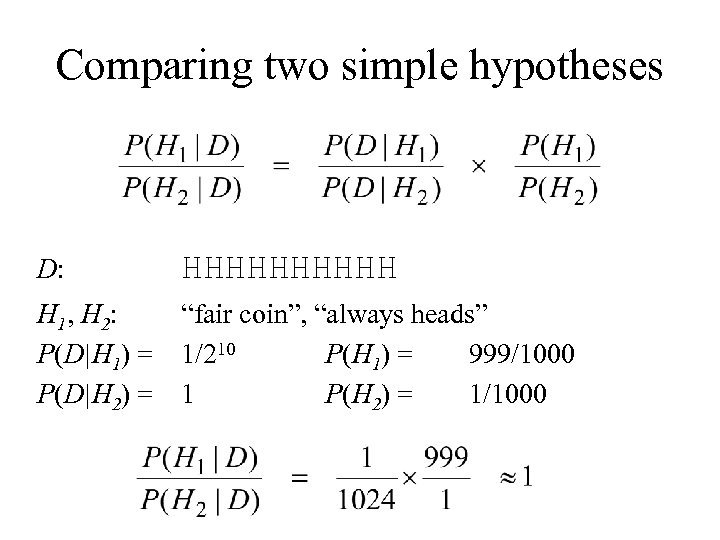

Comparing two simple hypotheses • Contrast simple hypotheses: – H1: “fair coin”, P(H) = 0.5 – H2: “always heads”, P(H) = 1.0 • Bayes’ rule: P(H|D) = P(D|H) P(H) / P(D) • With two hypotheses, use odds form: P(H1|D) / P(H2|D) = [P(D|H1) / P(D|H2)] × [P(H1) / P(H2)]

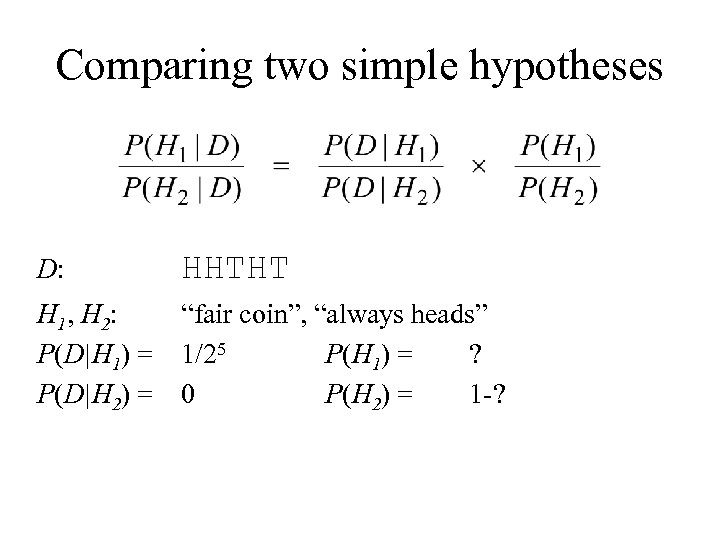

Comparing two simple hypotheses • D: HHTHT • H1: “fair coin”, H2: “always heads” • P(D|H1) = 1/2^5, P(D|H2) = 0 • P(H1) = ?, P(H2) = 1 − ?

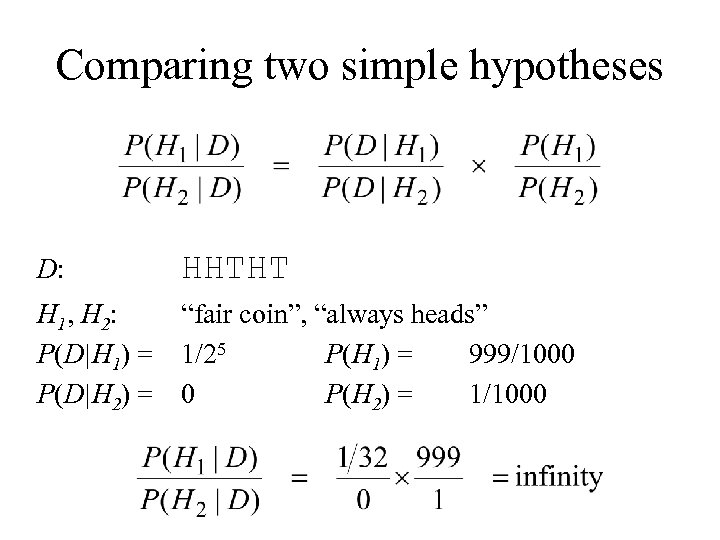

Comparing two simple hypotheses • D: HHTHT • H1: “fair coin”, H2: “always heads” • P(D|H1) = 1/2^5, P(D|H2) = 0 • P(H1) = 999/1000, P(H2) = 1/1000

Comparing two simple hypotheses • D: HHHHH • H1: “fair coin”, H2: “always heads” • P(D|H1) = 1/2^5, P(D|H2) = 1 • P(H1) = 999/1000, P(H2) = 1/1000

Comparing two simple hypotheses • D: HHHHHHHHHH • H1: “fair coin”, H2: “always heads” • P(D|H1) = 1/2^10, P(D|H2) = 1 • P(H1) = 999/1000, P(H2) = 1/1000
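The odds-form comparison can be worked through in a few lines. This is a minimal sketch that uses the 999/1000 prior from the slides; the function name `posterior_odds` is a hypothetical label introduced here.

```python
from fractions import Fraction

def posterior_odds(data, prior_h1=Fraction(999, 1000)):
    """Posterior odds P(H1|D) / P(H2|D) for H1 = fair coin, H2 = always heads."""
    # Fair coin: every sequence of n flips has probability (1/2)^n.
    like_h1 = Fraction(1, 2) ** len(data)
    # Always heads: probability 1 if the data contain only H, otherwise 0.
    like_h2 = Fraction(1 if set(data) <= {"H"} else 0)
    prior_h2 = 1 - prior_h1
    if like_h2 == 0:
        return float("inf")  # the data rule out H2 entirely
    return (like_h1 / like_h2) * (prior_h1 / prior_h2)

for sequence in ["HHTHT", "HHHHH", "H" * 10]:
    print(sequence, posterior_odds(sequence))
```

Under these numbers, five heads still leave the fair coin favored (odds 999/32, about 31 to 1), while ten heads bring the odds to 999/1024, just below even.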

The role of intuitive theories The fact that HHTHT looks representative of a fair coin and HHHHH does not reflects our implicit theories of how the world works. – Easy to imagine how a trick all-heads coin could work: high prior probability. – Hard to imagine how a trick “HHTHT” coin could work: low prior probability.


Plan for today • A general framework for solving underconstrained inference problems – Bayesian inference • Applications in perception and cognition – lightness perception – predicting the future (with Tom Griffiths) – learning about properties of natural species (with Charles Kemp)


Gelb / Gilchrist demo


Explaining the illusion • The problem of lightness constancy – Separating the intrinsic reflectance (“color”) of a surface from the intensity of the illumination. • Anchoring heuristic: – Assume that the brightest patch in each scene is white. • Questions: – Is this really right? – Why (and when) is it a good solution to the problem of lightness constancy?


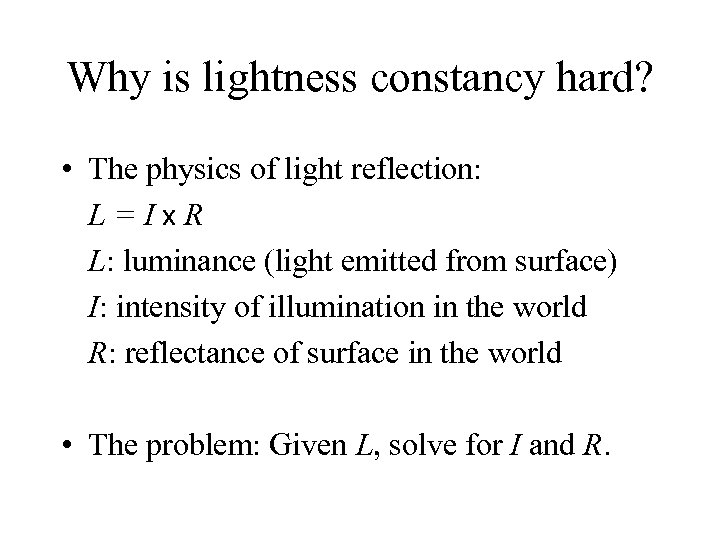

Why is lightness constancy hard? • The physics of light reflection: L = I × R, where L: luminance (light emitted from surface), I: intensity of illumination in the world, R: reflectance of surface in the world • The problem: Given L, solve for I and R.

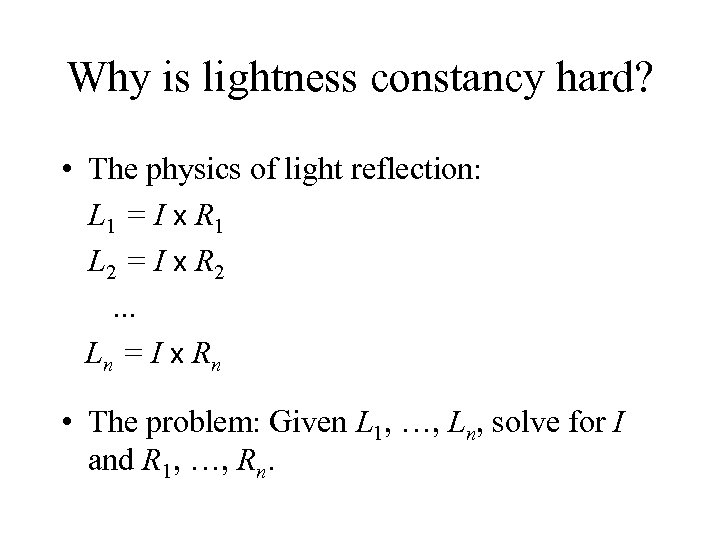

Why is lightness constancy hard? • The physics of light reflection: L1 = I × R1, L2 = I × R2, ..., Ln = I × Rn • The problem: Given L1, …, Ln, solve for I and R1, …, Rn.

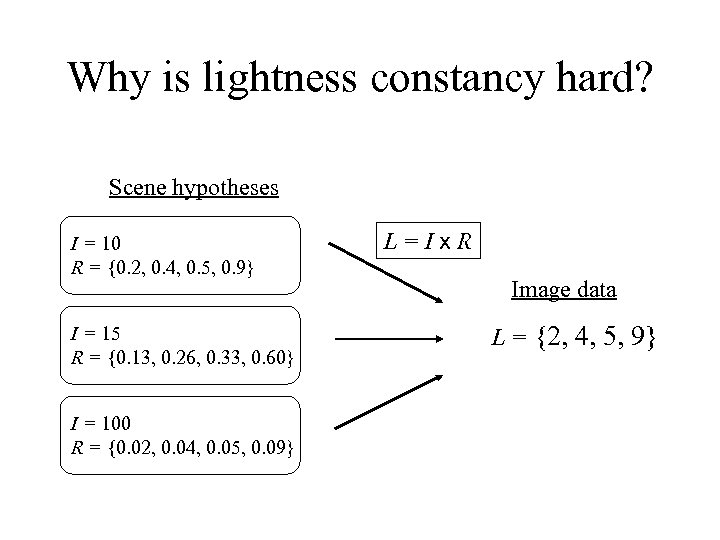

Why is lightness constancy hard? • Scene hypotheses: – I = 10, R = {0.2, 0.4, 0.5, 0.9} – I = 15, R = {0.13, 0.26, 0.33, 0.60} – I = 100, R = {0.02, 0.04, 0.05, 0.09} • L = I × R • Image data: L = {2, 4, 5, 9}
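A short sketch makes the ambiguity concrete: under L = I × R, each scene hypothesis on the slide reproduces the same image data, up to the rounding of the listed reflectances. The helper names and the extra illuminant value 20 are assumptions introduced for illustration.

```python
# Scene hypotheses from the slide: illuminant intensity I -> surface reflectances R.
scene_hypotheses = {
    10: [0.2, 0.4, 0.5, 0.9],
    15: [0.13, 0.26, 0.33, 0.60],
    100: [0.02, 0.04, 0.05, 0.09],
}

def luminances(illuminant, reflectances):
    """Forward model: L_i = I * R_i for every surface patch."""
    return [illuminant * r for r in reflectances]

def reflectances_for(illuminant, observed):
    """Invert the forward model for an assumed illuminant: R_i = L_i / I."""
    return [l / illuminant for l in observed]

observed = [2, 4, 5, 9]
predicted = {I: luminances(I, R) for I, R in scene_hypotheses.items()}
for I, L in predicted.items():
    print(f"I = {I}: L = {[round(l, 2) for l in L]}")

# Any illuminant at least as bright as the brightest patch yields
# reflectances in [0, 1], so the image alone cannot pick out I.
alternative = reflectances_for(20, observed)
print("I = 20:", alternative)
```

With n observed patches there are n equations and n + 1 unknowns, which is why extra assumptions (priors) are needed to choose among the hypotheses.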

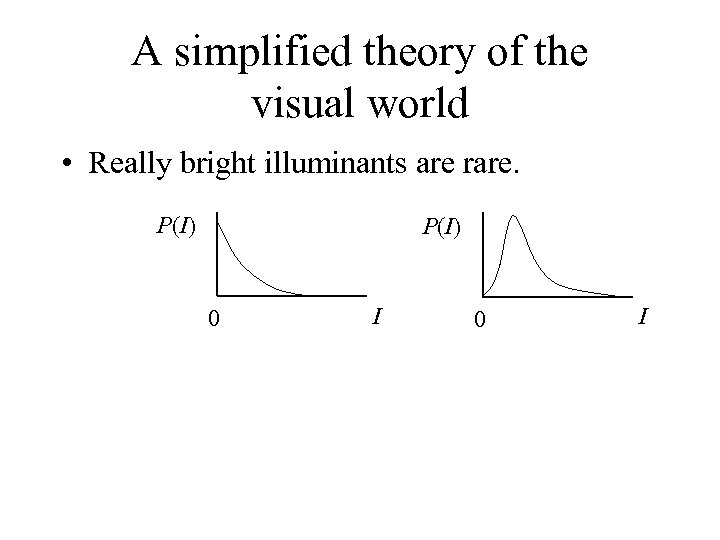

A simplified theory of the visual world • Really bright illuminants are rare. [Plot: P(I) as a function of I]

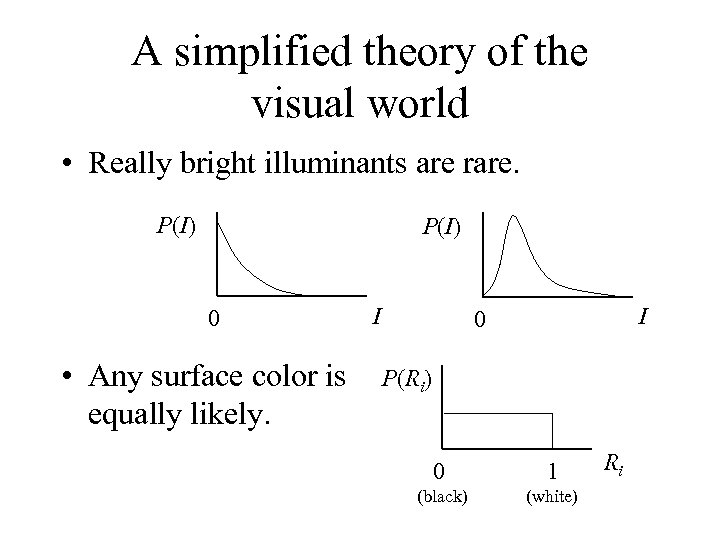

A simplified theory of the visual world • Really bright illuminants are rare. [Plot: P(I) as a function of I] • Any surface color is equally likely. [Plot: P(Ri) as a function of Ri, from 0 (black) to 1 (white)]

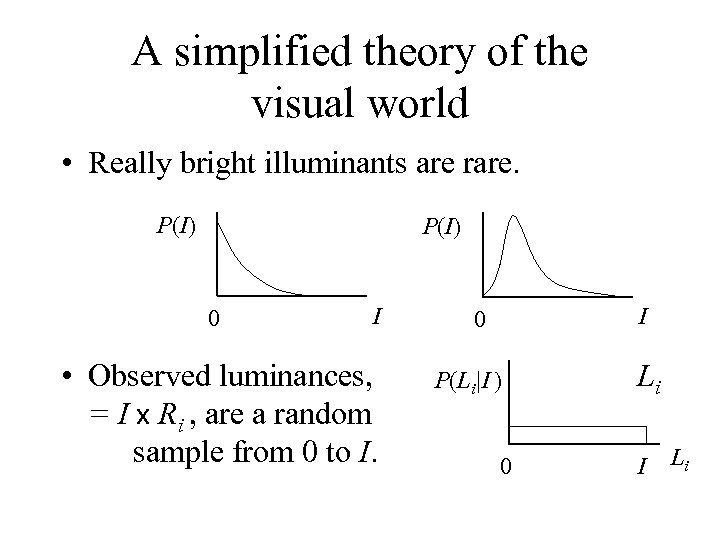

A simplified theory of the visual world • Really bright illuminants are rare. [Plot: P(I) as a function of I] • Observed luminances, Li = I × Ri, are a random sample from 0 to I. [Plot: P(Li|I) as a function of Li, from 0 to I]

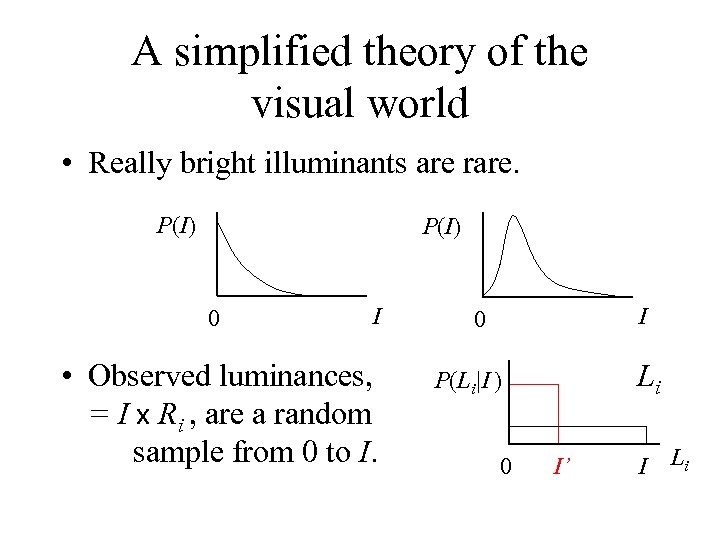

A simplified theory of the visual world • Really bright illuminants are rare. [Plot: P(I) as a function of I] • Observed luminances, Li = I × Ri, are a random sample from 0 to I. [Plot: P(Li|I) as a function of Li, with illuminant levels I′ and I marked on the Li axis]

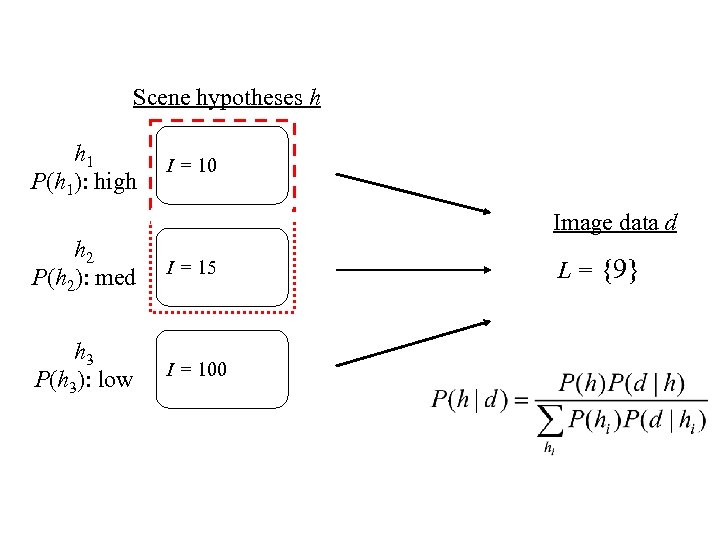

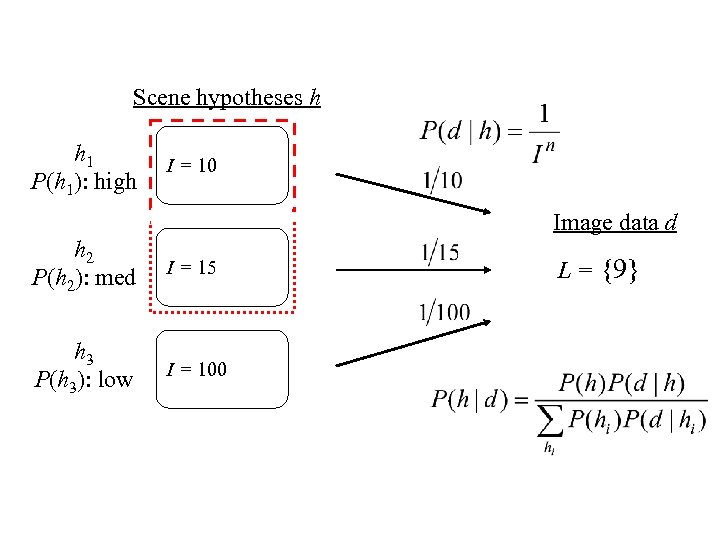

Scene hypotheses h and image data d • h1: I = 10, P(h1): high • h2: I = 15, P(h2): med • h3: I = 100, P(h3): low • Image data d: L = {9}

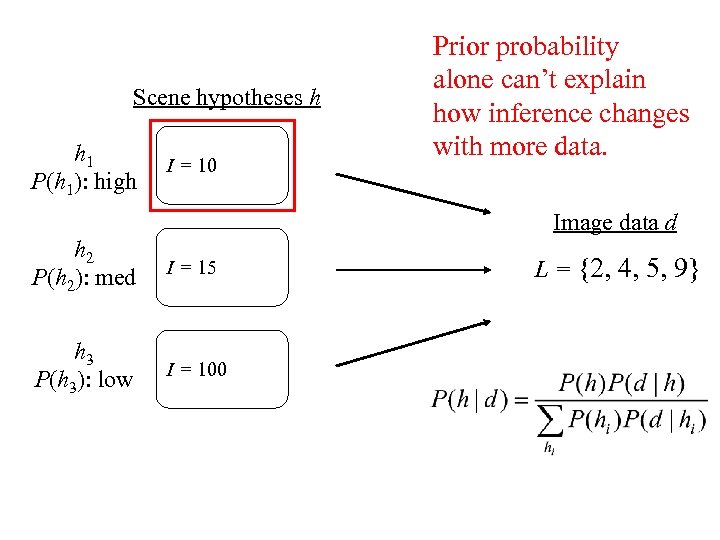

Scene hypotheses h and image data d • h1: I = 10, P(h1): high • h2: I = 15, P(h2): med • h3: I = 100, P(h3): low • Image data d: L = {2, 4, 5, 9} • Prior probability alone can’t explain how inference changes with more data.

Scene hypotheses h and image data d • h1: I = 10, P(h1): high • h2: I = 15, P(h2): med • h3: I = 100, P(h3): low • Image data d: L = {9}

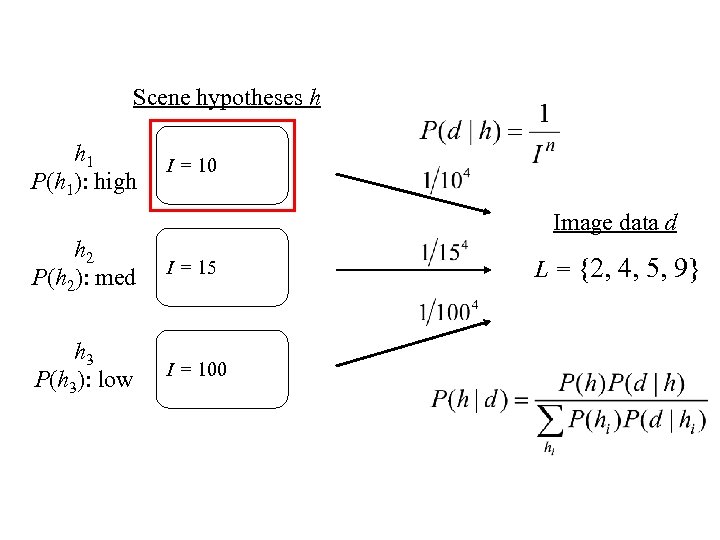

Scene hypotheses h and image data d • h1: I = 10, P(h1): high • h2: I = 15, P(h2): med • h3: I = 100, P(h3): low • Image data d: L = {2, 4, 5, 9}

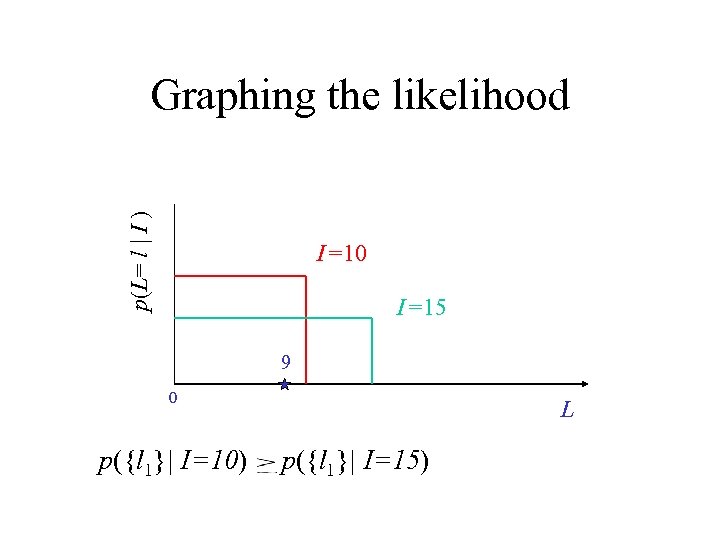

Graphing the likelihood p(L = l | I) [Plot: likelihood curves for I = 10 and I = 15 along the L axis, with the observed luminance 9 marked] p({l1} | I = 10) > p({l1} | I = 15)

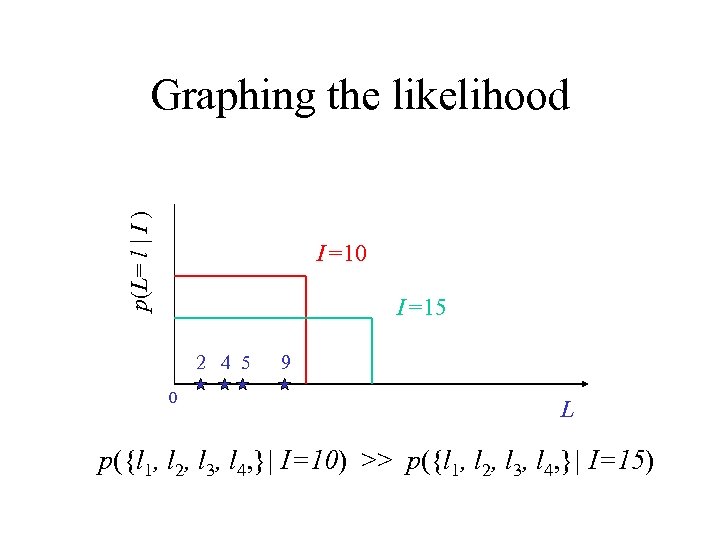

Graphing the likelihood p(L = l | I) [Plot: likelihood curves for I = 10 and I = 15 along the L axis, with the observed luminances 2, 4, 5, and 9 marked] p({l1, l2, l3, l4} | I = 10) >> p({l1, l2, l3, l4} | I = 15)
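The growth of the likelihood ratio with more samples can be checked directly. The sketch assumes the simplified theory stated earlier: each luminance is an independent uniform sample from 0 to I, so n samples have likelihood (1/I)^n whenever none exceeds I. The function name is hypothetical.

```python
def likelihood(luminances, illuminant):
    """p(L | I) when each luminance is drawn uniformly from [0, I]."""
    if max(luminances) > illuminant:
        return 0.0  # a patch cannot be brighter than a white surface under I
    return (1.0 / illuminant) ** len(luminances)

# One sample: the dimmer illuminant is only mildly favored.
ratio_one = likelihood([9], 10) / likelihood([9], 15)
# Four samples: the same preference is compounded four times.
ratio_four = likelihood([2, 4, 5, 9], 10) / likelihood([2, 4, 5, 9], 15)

print(f"one sample:   {ratio_one:.2f}")    # 1.5
print(f"four samples: {ratio_four:.2f}")   # 1.5 ** 4, about 5.06
```

The ratio grows as (15/10)^n, which is one way to see why confidence in the lowest illuminant consistent with the data increases as more patches are observed.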

Explaining lightness constancy • Anchoring heuristic: Assume that the brightest patch in each scene is white. – Is this really right? – Why (and when) is it a good solution to the problem? • Bayesian analysis – Explains the computational basis for inference. – Explains why confidence in “brightest = white” increases as more samples are observed.

Applications to cognition • Predicting the future (with Tom Griffiths) • Learning about properties of natural species (with Charles Kemp)


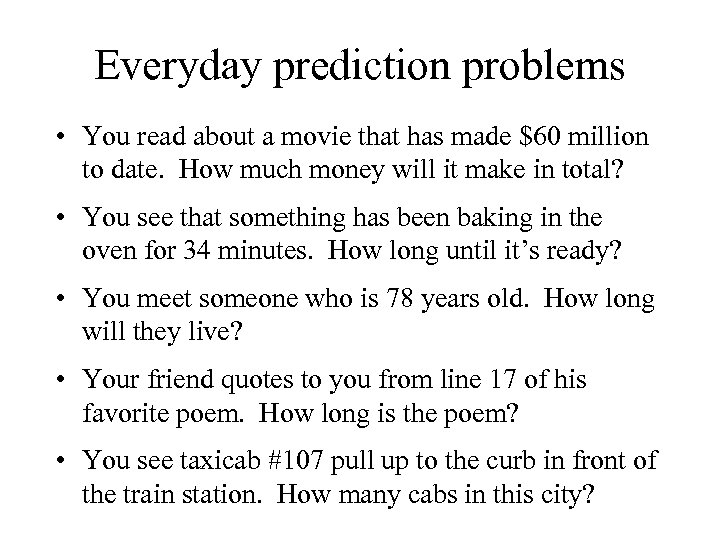

Everyday prediction problems • You read about a movie that has made $60 million to date. How much money will it make in total? • You see that something has been baking in the oven for 34 minutes. How long until it’s ready? • You meet someone who is 78 years old. How long will they live? • Your friend quotes to you from line 17 of his favorite poem. How long is the poem? • You see taxicab #107 pull up to the curb in front of the train station. How many cabs in this city?


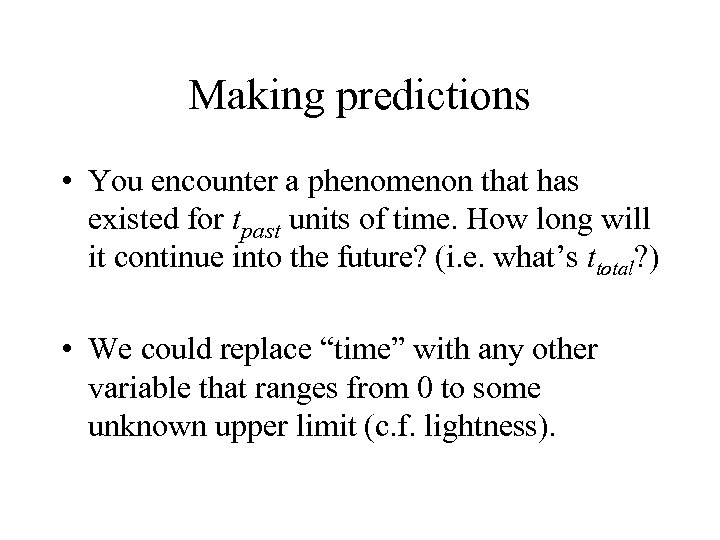

Making predictions • You encounter a phenomenon that has existed for t_past units of time. How long will it continue into the future? (i.e., what’s t_total?) • We could replace “time” with any other variable that ranges from 0 to some unknown upper limit (cf. lightness).

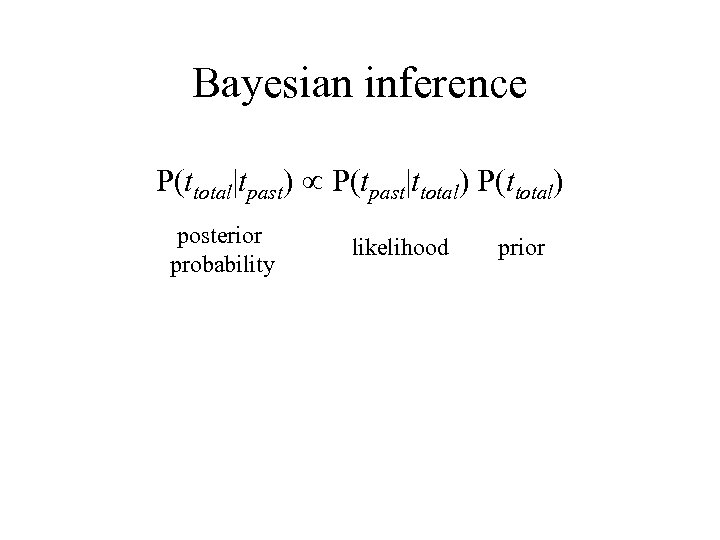

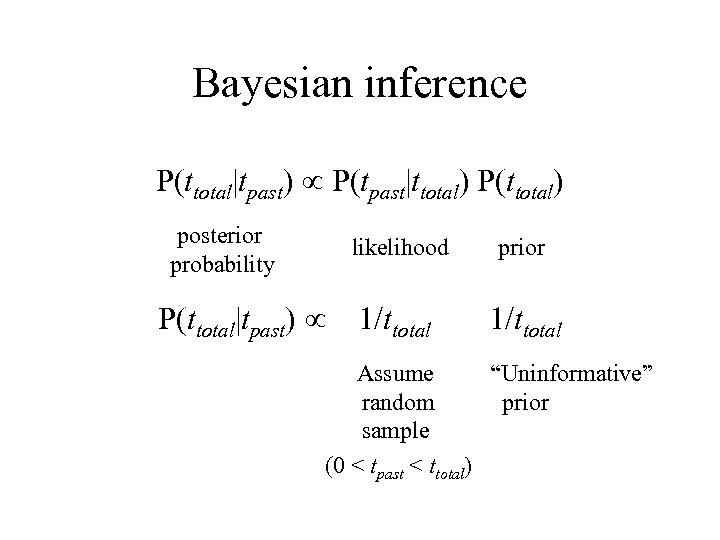

Bayesian inference • P(t_total | t_past) ∝ P(t_past | t_total) × P(t_total) • posterior probability ∝ likelihood × prior

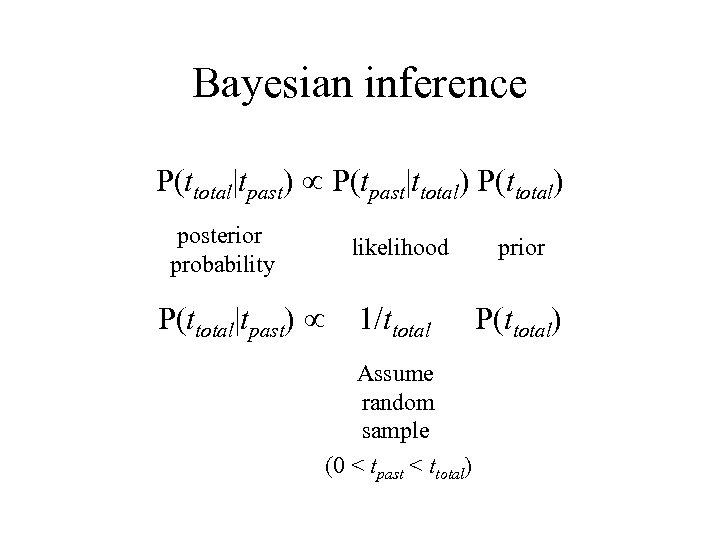

Bayesian inference • P(t_total | t_past) ∝ P(t_past | t_total) × P(t_total) (posterior probability ∝ likelihood × prior) • Assume random sample (0 < t_past < t_total), so the likelihood is 1/t_total: P(t_total | t_past) ∝ 1/t_total × P(t_total)

Bayesian inference • P(t_total | t_past) ∝ P(t_past | t_total) × P(t_total) (posterior probability ∝ likelihood × prior) • Assume random sample (0 < t_past < t_total): likelihood 1/t_total • “Uninformative” prior: 1/t_total • P(t_total | t_past) ∝ 1/t_total × 1/t_total

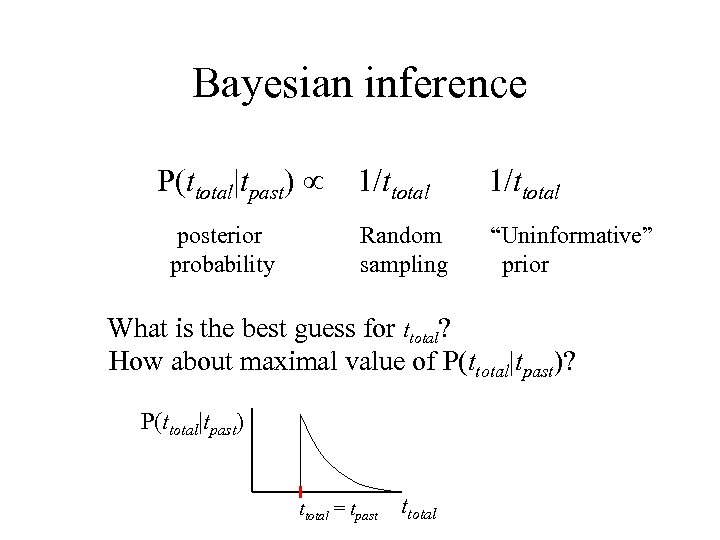

Bayesian inference • P(t_total | t_past) ∝ 1/t_total × 1/t_total (posterior probability ∝ random sampling × “uninformative” prior) • What is the best guess for t_total? How about the maximal value of P(t_total | t_past)? [Plot: P(t_total | t_past) as a function of t_total, with its maximum at t_total = t_past]

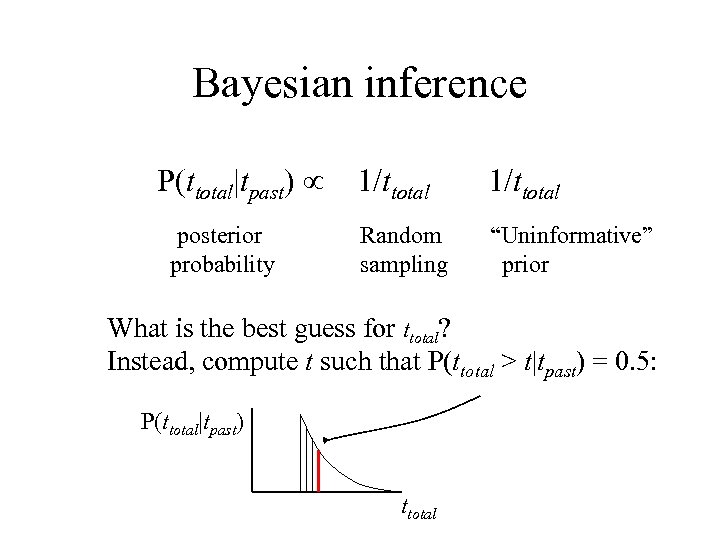

Bayesian inference • P(t_total | t_past) ∝ 1/t_total × 1/t_total (posterior probability ∝ random sampling × “uninformative” prior) • What is the best guess for t_total? Instead, compute t such that P(t_total > t | t_past) = 0.5. [Plot: P(t_total | t_past) as a function of t_total]

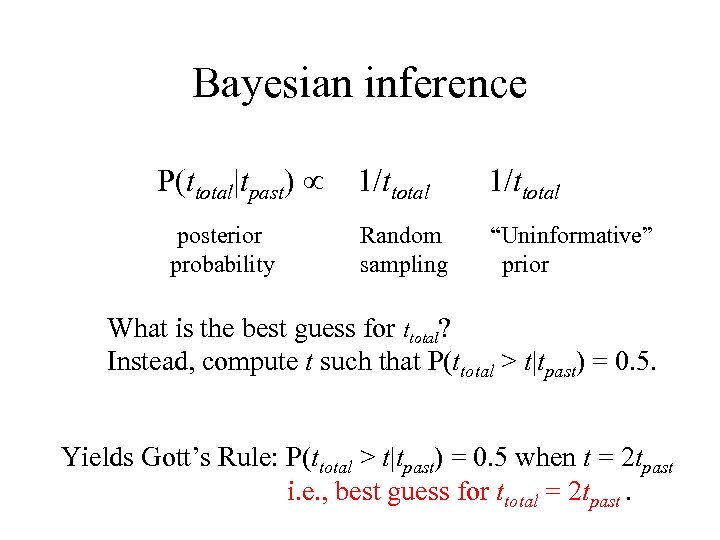

Bayesian inference • P(t_total | t_past) ∝ 1/t_total × 1/t_total (posterior probability ∝ random sampling × “uninformative” prior) • What is the best guess for t_total? Instead, compute t such that P(t_total > t | t_past) = 0.5. Yields Gott’s Rule: P(t_total > t | t_past) = 0.5 when t = 2 t_past, i.e., best guess for t_total = 2 t_past.
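Gott's Rule can be verified numerically. With a posterior proportional to 1/t_total^2 on t_total ≥ t_past, the normalized density is t_past / t_total^2, and integrating it from t upward gives P(t_total > t | t_past) = t_past / t. The sketch below, with hypothetical function names, finds the point where that probability equals 0.5 by bisection.

```python
def survival(t, t_past):
    """P(t_total > t | t_past) for the posterior density t_past / t_total**2."""
    return 1.0 if t <= t_past else t_past / t

def posterior_median(t_past, tolerance=1e-9):
    """Find t with P(t_total > t | t_past) = 0.5 by bisection."""
    low, high = t_past, t_past * 1e6  # the median lies far below this upper bound
    while high - low > tolerance * t_past:
        middle = 0.5 * (low + high)
        if survival(middle, t_past) > 0.5:
            low = middle   # still more than half the mass above: move right
        else:
            high = middle
    return 0.5 * (low + high)

for t_past in [34, 60, 78]:
    print(t_past, round(posterior_median(t_past), 3))
```

Each printed median is twice t_past, in line with the closed-form solution t_past / t = 0.5.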

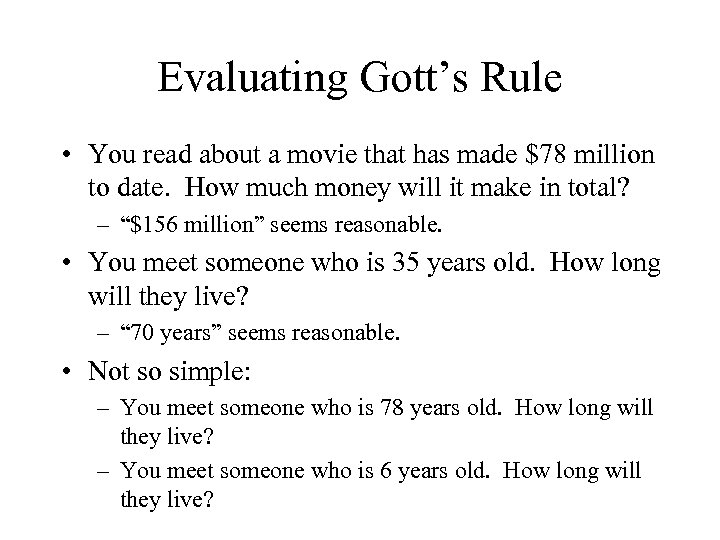

Evaluating Gott’s Rule • You read about a movie that has made $78 million to date. How much money will it make in total? – “$156 million” seems reasonable. • You meet someone who is 35 years old. How long will they live? – “70 years” seems reasonable. • Not so simple: – You meet someone who is 78 years old. How long will they live? – You meet someone who is 6 years old. How long will they live?

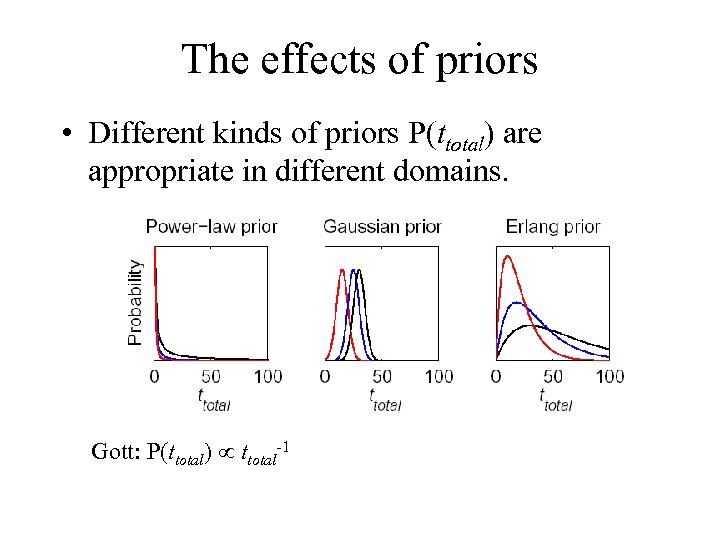

The effects of priors • Different kinds of priors P(t_total) are appropriate in different domains. Gott: P(t_total) ∝ t_total^(−1)

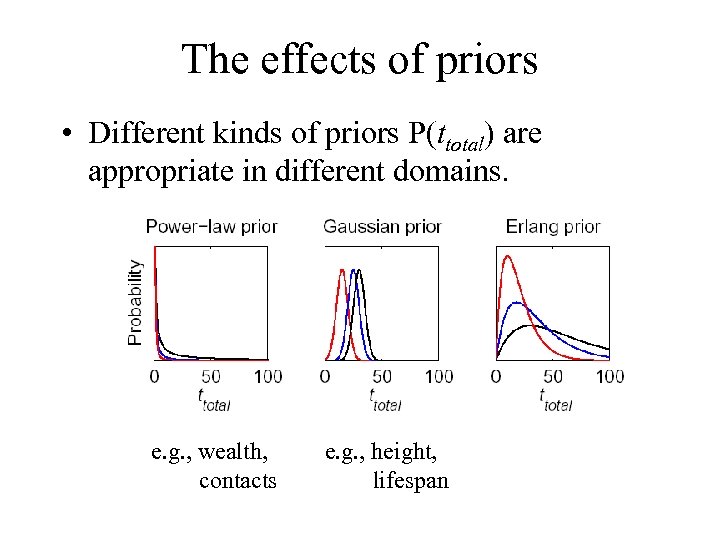

The effects of priors • Different kinds of priors P(t_total) are appropriate in different domains: e.g., wealth, contacts; e.g., height, lifespan

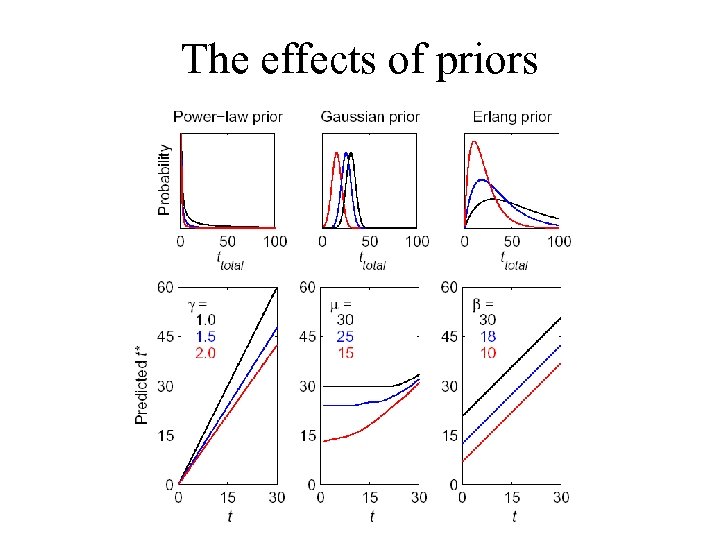

The effects of priors


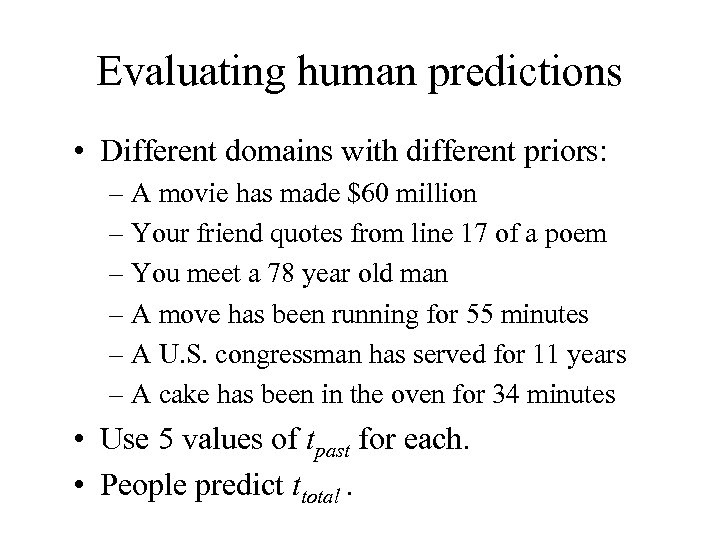

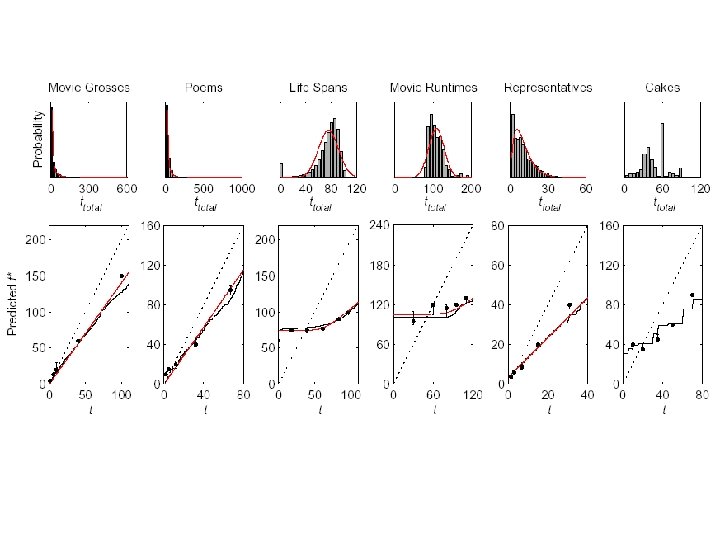

Evaluating human predictions • Different domains with different priors: – A movie has made $60 million – Your friend quotes from line 17 of a poem – You meet a 78-year-old man – A movie has been running for 55 minutes – A U.S. congressman has served for 11 years – A cake has been in the oven for 34 minutes • Use 5 values of t_past for each. • People predict t_total.

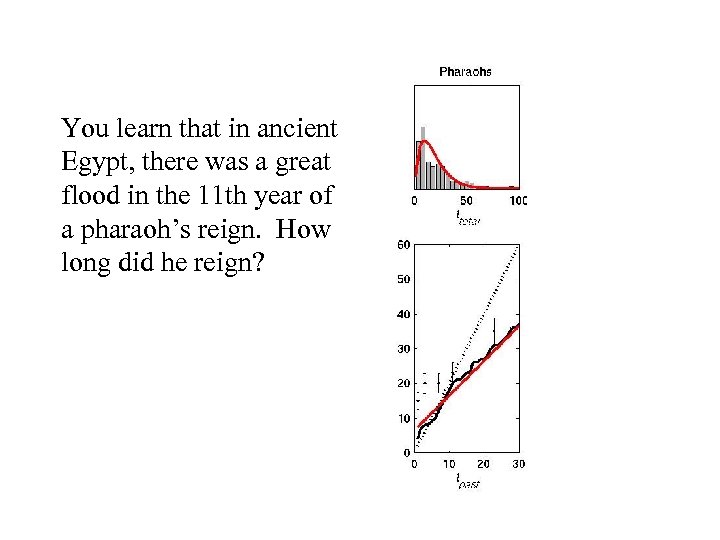

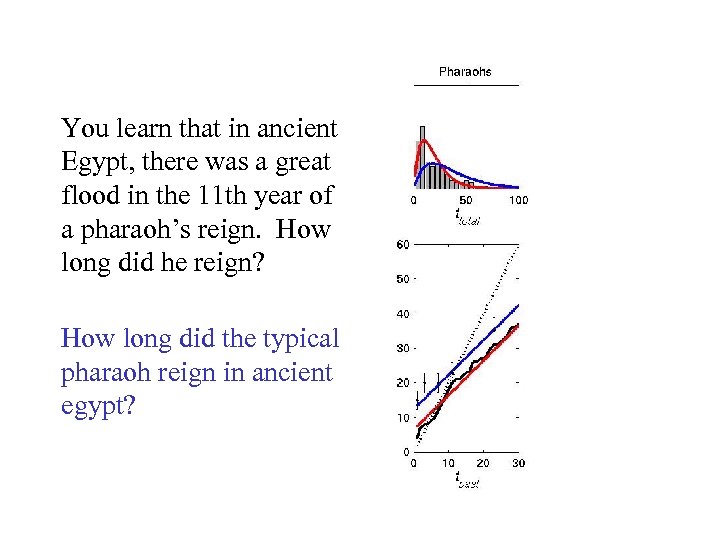

You learn that in ancient Egypt, there was a great flood in the 11th year of a pharaoh’s reign. How long did he reign?

You learn that in ancient Egypt, there was a great flood in the 11th year of a pharaoh’s reign. How long did he reign? How long did the typical pharaoh reign in ancient Egypt?

Assumptions guiding inference • Random sampling • Strong prior knowledge – Form of the prior (power-law or exponential) – Specific distribution given that form (parameters) – Non-parametric distribution when necessary. • With these assumptions, strong predictions can be made from a single observation

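To see how the form of the prior changes a prediction from a single observation, the posterior median can be computed on a grid. This is a rough sketch: the random-sampling likelihood 1/t_total is taken from the earlier slides, while the Gaussian lifespan prior (mean 75, standard deviation 16), the grid limits, and all function names are assumptions chosen only for illustration.

```python
import math

def predict_median(t_past, prior, t_max=1000.0, step=0.01):
    """Posterior median of t_total on a grid.

    Posterior weight at each grid point is prior(t) * (1 / t) for t >= t_past,
    where 1 / t is the random-sampling likelihood.
    """
    count = int((t_max - t_past) / step)
    grid = [t_past + step * k for k in range(count + 1)]
    weights = [prior(t) / t for t in grid]
    half = 0.5 * sum(weights)
    running = 0.0
    for t, w in zip(grid, weights):
        running += w
        if running >= half:
            return t
    return grid[-1]

def power_law_prior(t):
    """Gott-style prior, proportional to 1 / t (truncated at t_max by the grid)."""
    return 1.0 / t

def gaussian_prior(t, mean=75.0, sd=16.0):
    """Bell-shaped prior for lifespans; the parameters are illustrative assumptions."""
    return math.exp(-0.5 * ((t - mean) / sd) ** 2)

for age in [6, 78]:
    print(age,
          round(predict_median(age, power_law_prior), 1),
          round(predict_median(age, gaussian_prior), 1))
```

With the Gaussian prior, the predictions for a 6-year-old and a 78-year-old both land near typical lifespans instead of doubling the observed age, which is the qualitative point of the "not so simple" cases. The power-law numbers are slightly below 2 × t_past only because the grid is cut off at `t_max`.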

Applications to cognition • Predicting the future (with Tom Griffiths) • Learning about properties of natural species (with Charles Kemp)


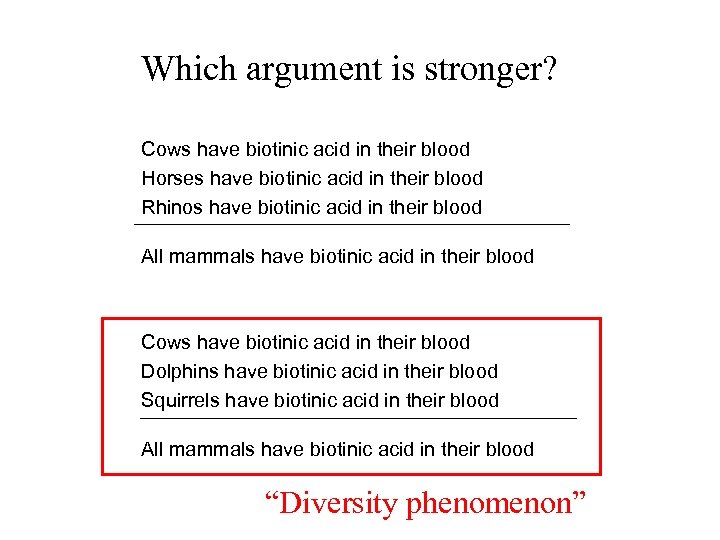

Which argument is stronger? Cows have biotinic acid in their blood Horses have biotinic acid in their blood Rhinos have biotinic acid in their blood All mammals have biotinic acid in their blood Cows have biotinic acid in their blood Dolphins have biotinic acid in their blood Squirrels have biotinic acid in their blood All mammals have biotinic acid in their blood “Diversity phenomenon”

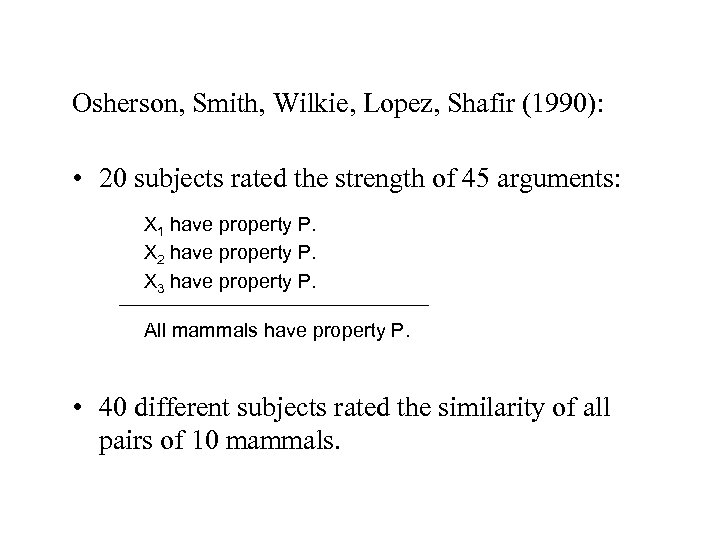

Osherson, Smith, Wilkie, Lopez, Shafir (1990): • 20 subjects rated the strength of 45 arguments of the form: X1 have property P. X2 have property P. X3 have property P. Conclusion: All mammals have property P. • 40 different subjects rated the similarity of all pairs of 10 mammals.

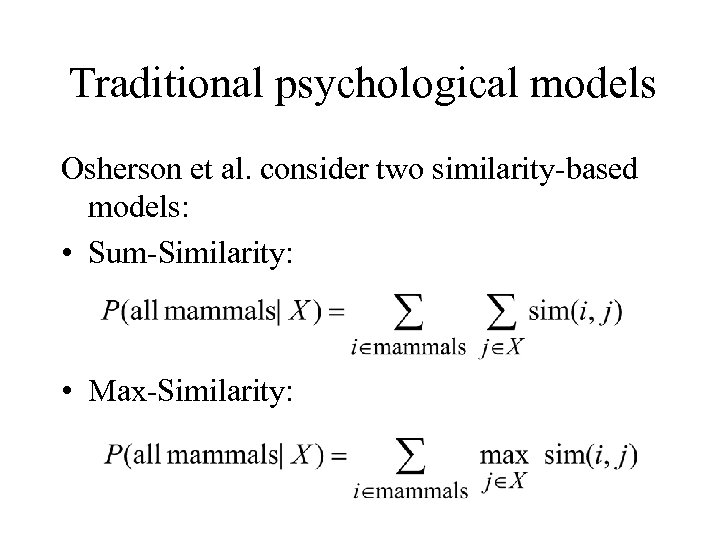

Traditional psychological models Osherson et al. consider two similarity-based models: • Sum-Similarity: • Max-Similarity:

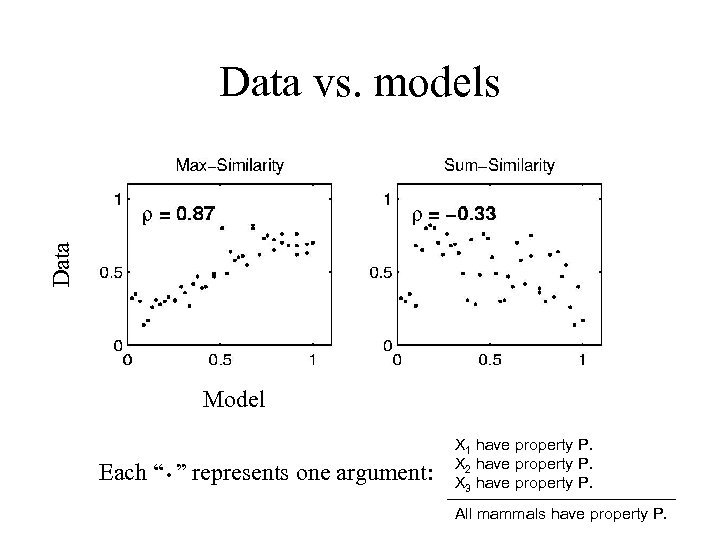

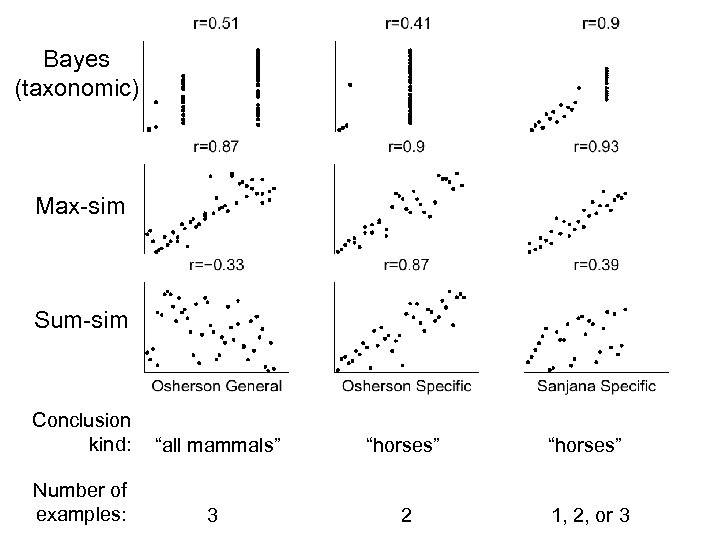

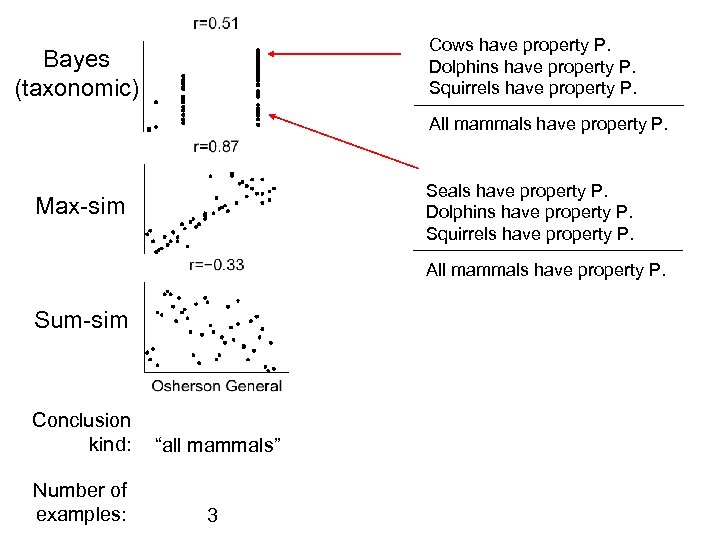
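The two similarity-based models can be sketched in code. The slide's formulas did not survive extraction, so the definitions below are an assumption based on a common formulation: each member of the conclusion category contributes either its summed similarity to the premises (Sum-sim) or its maximum similarity to any premise (Max-sim). The similarity values are invented for illustration.

```python
import numpy as np

ANIMALS = ["cow", "horse", "rhino", "dolphin", "squirrel"]
IDX = {name: i for i, name in enumerate(ANIMALS)}

# Hypothetical symmetric similarity matrix (1.0 on the diagonal).
SIM = np.array([
    [1.0, 0.9, 0.8, 0.2, 0.3],   # cow
    [0.9, 1.0, 0.8, 0.2, 0.3],   # horse
    [0.8, 0.8, 1.0, 0.2, 0.2],   # rhino
    [0.2, 0.2, 0.2, 1.0, 0.1],   # dolphin
    [0.3, 0.3, 0.2, 0.1, 1.0],   # squirrel
])

def sum_sim(premises, conclusion):
    """Sum over conclusion members of the summed similarity to all premises."""
    return sum(SIM[IDX[c], IDX[p]] for c in conclusion for p in premises)

def max_sim(premises, conclusion):
    """Sum over conclusion members of the similarity to the closest premise."""
    return sum(max(SIM[IDX[c], IDX[p]] for p in premises) for c in conclusion)

diverse = ["cow", "dolphin", "squirrel"]
similar = ["cow", "horse", "rhino"]

if __name__ == "__main__":
    for name, model in (("Max-sim", max_sim), ("Sum-sim", sum_sim)):
        print(name, model(diverse, ANIMALS), model(similar, ANIMALS))
```

With these made-up similarities, Max-sim rates the diverse premise set higher, while Sum-sim rates the similar set higher, which is one way the two models come apart on the diversity phenomenon.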

Data vs. models • Each plotted point represents one argument: X1 have property P. X2 have property P. X3 have property P. Conclusion: All mammals have property P.

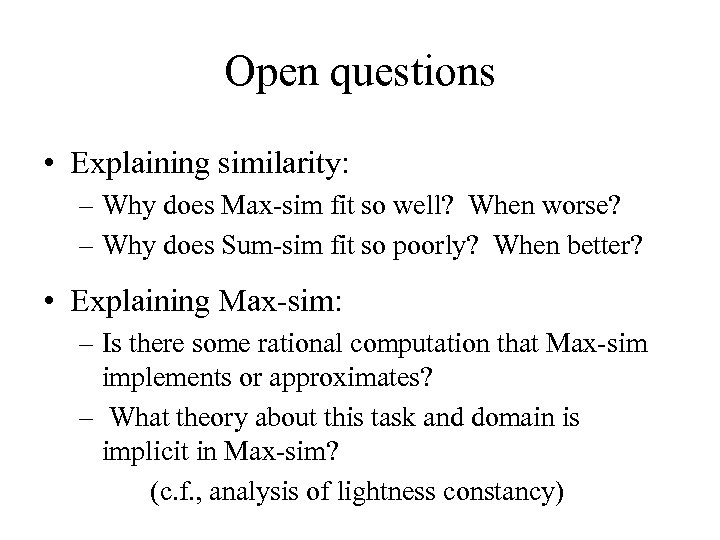

Open questions • Explaining similarity: – Why does Max-sim fit so well? When worse? – Why does Sum-sim fit so poorly? When better? • Explaining Max-sim: – Is there some rational computation that Max-sim implements or approximates? – What theory about this task and domain is implicit in Max-sim? (cf. analysis of lightness constancy)

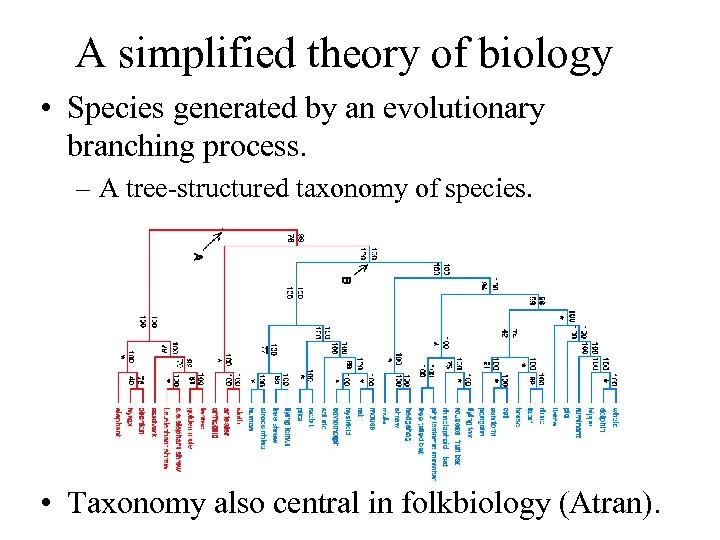

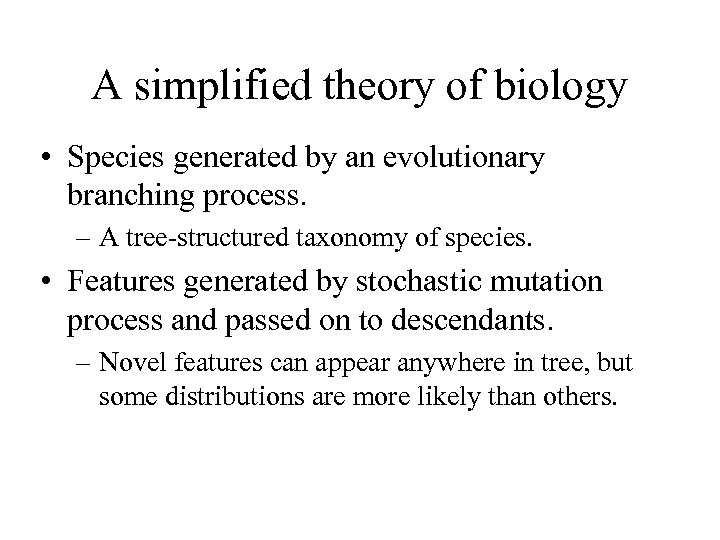

A simplified theory of biology • Species generated by an evolutionary branching process. – A tree-structured taxonomy of species. • Taxonomy also central in folkbiology (Atran).

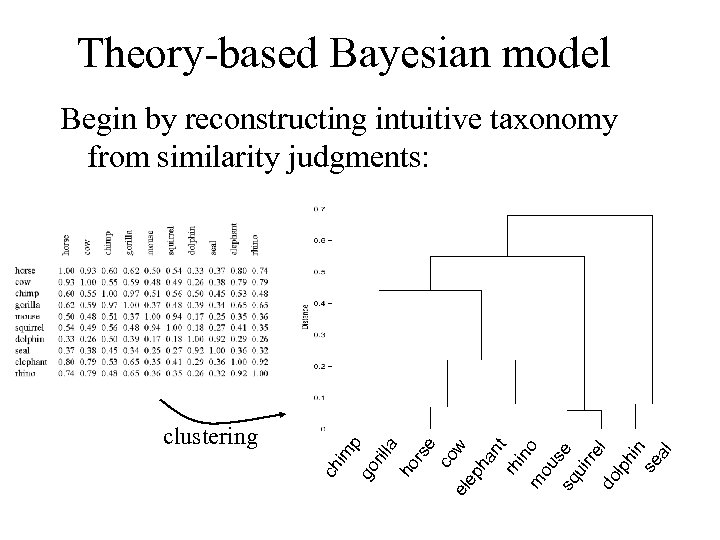

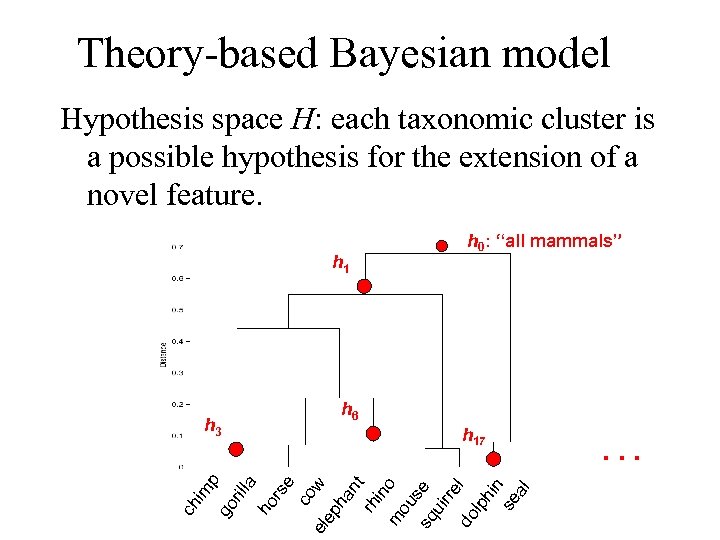

Theory-based Bayesian model • Begin by reconstructing intuitive taxonomy from similarity judgments (clustering). • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

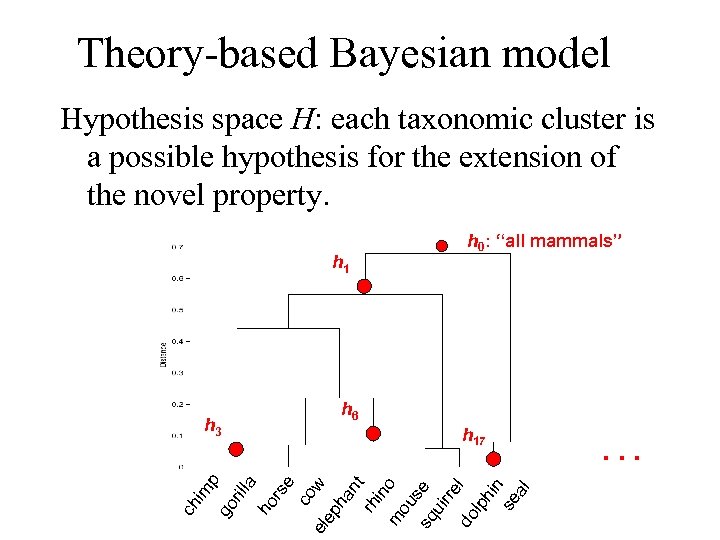

Theory-based Bayesian model • Hypothesis space H: each taxonomic cluster is a possible hypothesis for the extension of the novel property. • Clusters labeled in the tree include h1, h3, h6, h17, ..., and h0: “all mammals”. • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

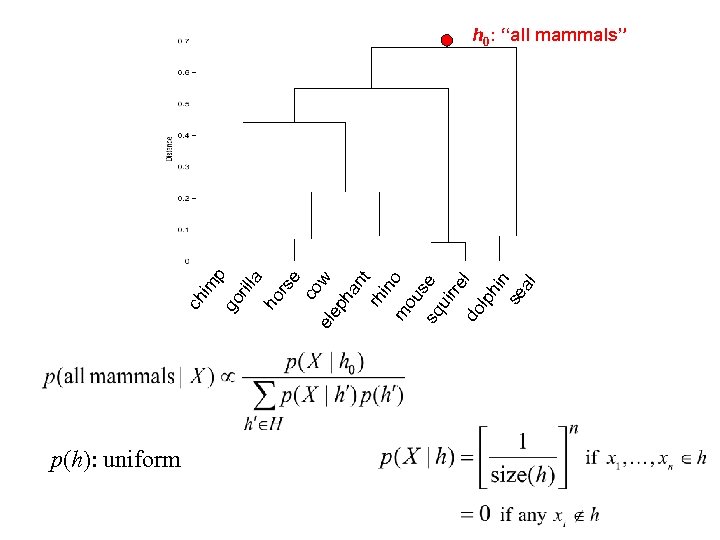

p(h): uniform • h0: “all mammals” • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.
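A minimal sketch of inference with a uniform prior over taxonomic clusters follows. The tree below is hypothetical (the slide's actual clusters are only in the figure), and the likelihood is an assumption: a size-principle form, p(d | h) = 1/|h|^n when all n premises fall inside h, which follows from treating premises as random samples from the property's extension.

```python
LEAVES = ["horse", "cow", "elephant", "rhino", "mouse",
          "squirrel", "dolphin", "seal", "gorilla", "chimp"]

# Hypothetical internal clusters; not the taxonomy from the lecture figure.
INTERNAL = [
    {"horse", "cow"}, {"elephant", "rhino"},
    {"horse", "cow", "elephant", "rhino"},      # "large herbivores"
    {"mouse", "squirrel"}, {"dolphin", "seal"}, {"gorilla", "chimp"},
]

# Every cluster (leaves, internal nodes, root) is one hypothesis.
HYPOTHESES = ([frozenset([x]) for x in LEAVES]
              + [frozenset(c) for c in INTERNAL]
              + [frozenset(LEAVES)])            # h0: "all mammals"

def argument_strength(premises, conclusion, hypotheses=HYPOTHESES):
    """P(all conclusion species have the property | premises)."""
    premises, conclusion = set(premises), set(conclusion)
    n = len(premises)
    prior = 1.0 / len(hypotheses)               # p(h): uniform
    # Size-principle likelihood (assumption), zero if a premise is outside h.
    weights = {h: prior * (1.0 / len(h)) ** n
               for h in hypotheses if premises <= h}
    total = sum(weights.values())
    return sum(w for h, w in weights.items() if conclusion <= h) / total

if __name__ == "__main__":
    print(argument_strength(["cow", "dolphin", "squirrel"], LEAVES))
    print(argument_strength(["cow", "horse", "rhino"], LEAVES))
```

In this toy tree the diverse premises are consistent only with “all mammals”, whereas cows, horses, and rhinos are also covered by the smaller “large herbivores” cluster, which absorbs most of the posterior.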

How taxonomy constrains induction • Atran (1998): “Fundamental principle of systematic induction” (Warburton 1967, Bock 1973) – Given a property found among members of any two species, the best initial hypothesis is that the property is also present among all species that are included in the smallest higher-order taxon containing the original pair of species.
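The principle quoted above amounts to finding the smallest taxon that contains the observed species. A short sketch, using a hypothetical set of taxa (`TAXA`) rather than any real classification:

```python
ALL_MAMMALS = {"horse", "cow", "elephant", "rhino", "mouse",
               "squirrel", "dolphin", "seal", "gorilla", "chimp"}

# Hypothetical nested taxa, for illustration only.
TAXA = [
    {"horse", "cow"}, {"elephant", "rhino"},
    {"horse", "cow", "elephant", "rhino"},
    {"mouse", "squirrel"}, {"dolphin", "seal"}, {"gorilla", "chimp"},
    ALL_MAMMALS,
]

def smallest_taxon(species, taxa=TAXA):
    """Return the smallest taxon that contains every given species."""
    species = set(species)
    containing = [t for t in taxa if species <= t]
    return min(containing, key=len)

if __name__ == "__main__":
    print(sorted(smallest_taxon(["cow", "rhino"])))
    print(len(smallest_taxon(["cow", "dolphin"])))
```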

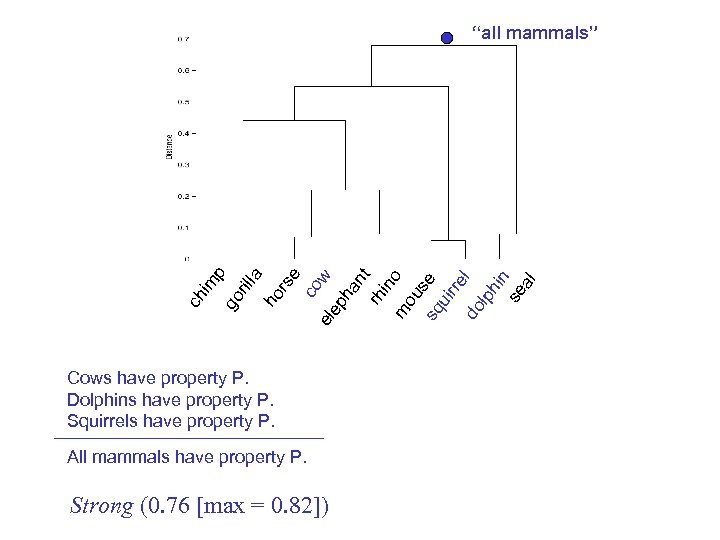

Cows have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P. • Highlighted cluster: “all mammals” • Strong: 0.76 [max = 0.82] • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

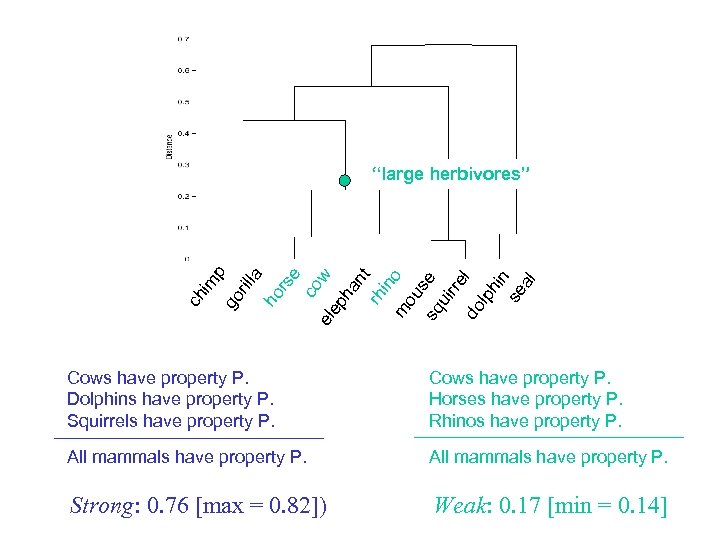

• Argument 1: Cows have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P. Strong: 0.76 [max = 0.82] • Argument 2: Cows have property P. Horses have property P. Rhinos have property P. Conclusion: All mammals have property P. Weak: 0.17 [min = 0.14] • Highlighted cluster: “large herbivores” • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

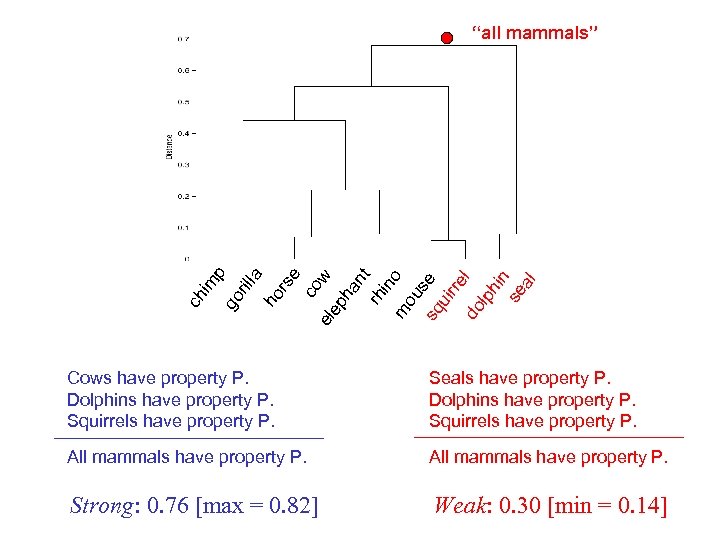

• Argument 1: Cows have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P. Strong: 0.76 [max = 0.82] • Argument 2: Seals have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P. Weak: 0.30 [min = 0.14] • Highlighted cluster: “all mammals” • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

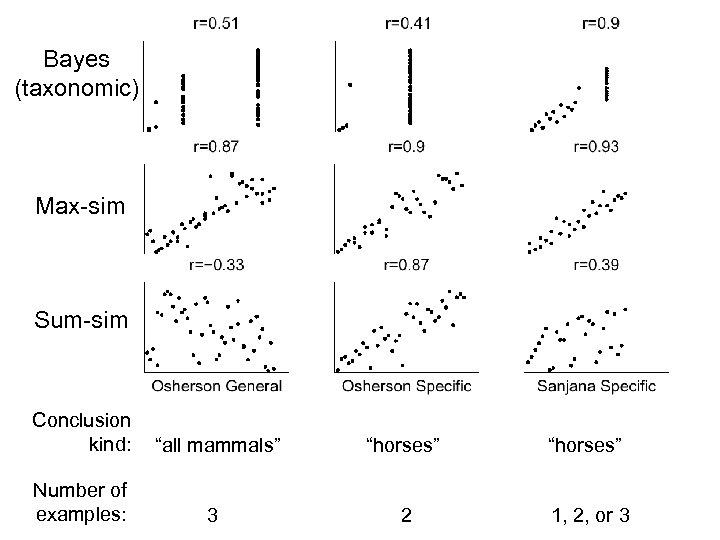

Data vs. models (figure) • Models: Bayes (taxonomic), Max-sim, Sum-sim • Conclusion kind: “all mammals”, “horses” • Number of examples: 3; 2; 1, 2, or 3

Data vs. models (figure) • Models: Bayes (taxonomic), Max-sim, Sum-sim • Conclusion kind: “all mammals” • Number of examples: 3 • Highlighted argument 1: Cows have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P. • Highlighted argument 2: Seals have property P. Dolphins have property P. Squirrels have property P. Conclusion: All mammals have property P.

A simplified theory of biology • Species generated by an evolutionary branching process. – A tree-structured taxonomy of species. • Features generated by stochastic mutation process and passed on to descendants. – Novel features can appear anywhere in tree, but some distributions are more likely than others.

Theory-based Bayesian model • Hypothesis space H: each taxonomic cluster is a possible hypothesis for the extension of a novel feature. • Clusters labeled in the tree include h1, h3, h6, h17, ..., and h0: “all mammals”. • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

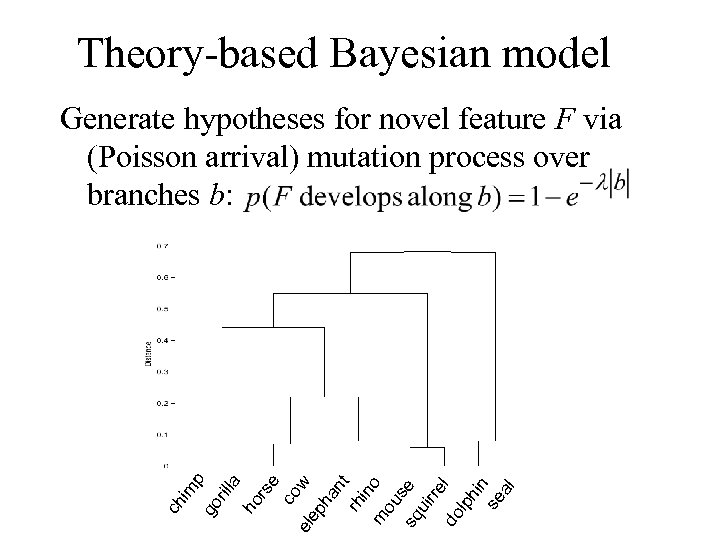

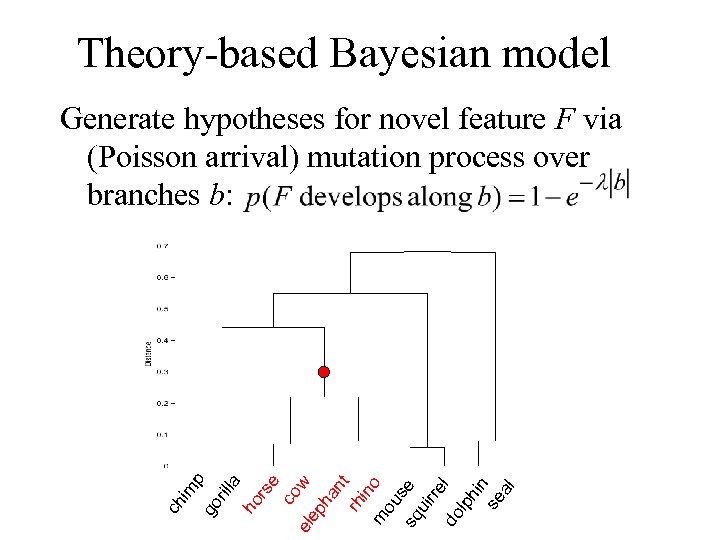

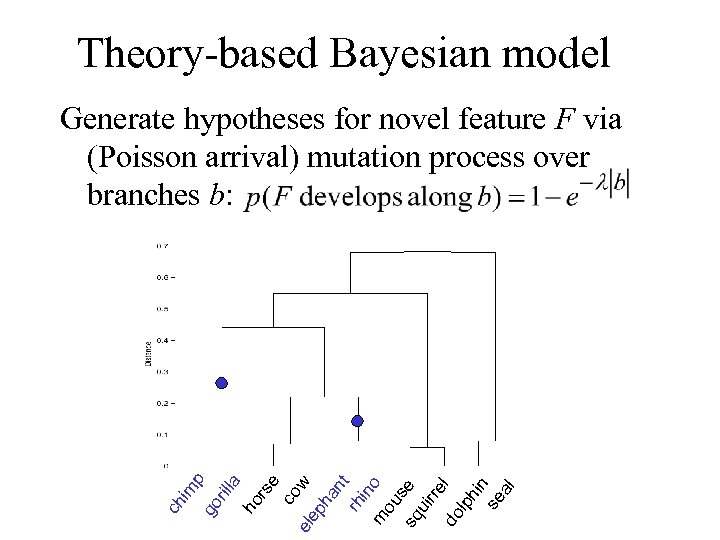

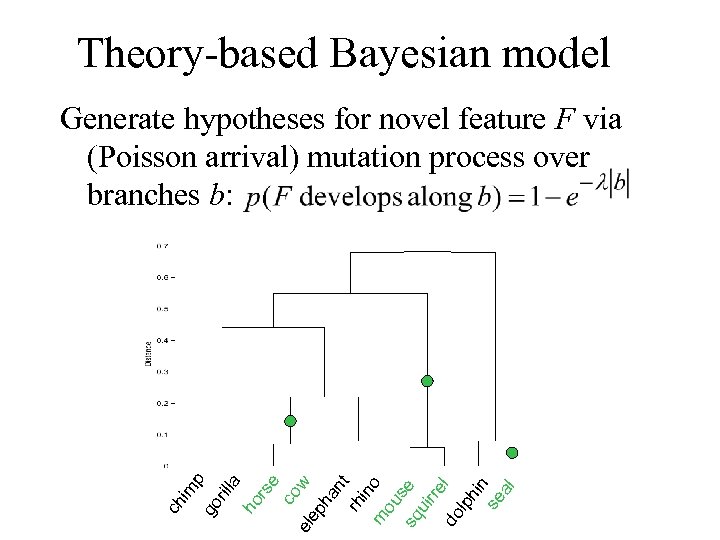

Theory-based Bayesian model • Generate hypotheses for novel feature F via (Poisson arrival) mutation process over branches b: • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

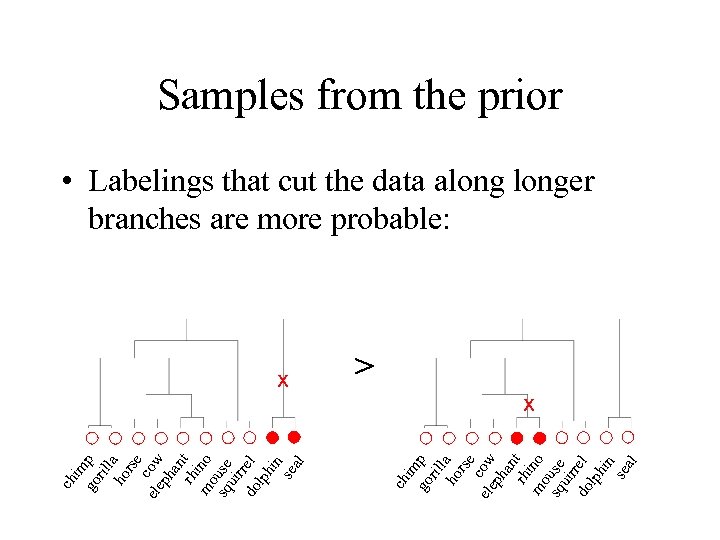

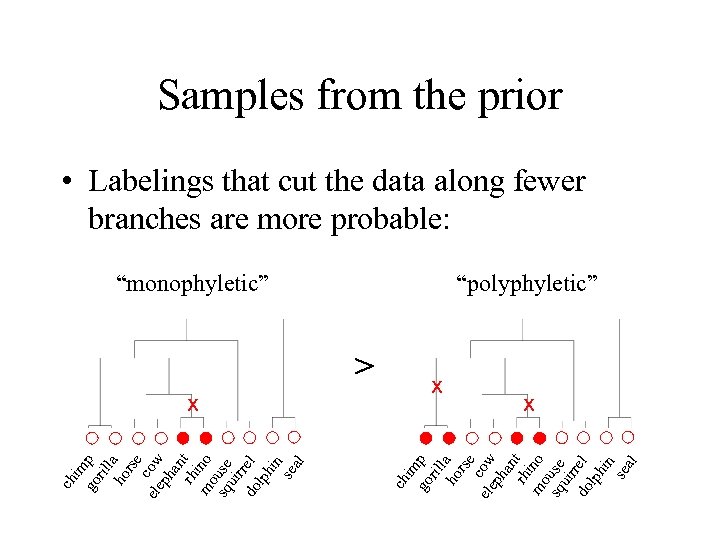

Samples from the prior • Labelings that cut the data along longer branches are more probable. • Tree leaves: chimp, gorilla, horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal.

Samples from the prior • Labelings that cut the data along fewer branches are more probable: “monophyletic” > “polyphyletic” • Tree leaves: chimp, gorilla, horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal.
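The mutation prior can be sketched by exact enumeration on a tiny tree. The slide's formula is not in the extracted text, so this assumes one natural reading of "Poisson arrival": a mutation occurs on branch b with probability 1 - exp(-rate * |b|), independently per branch, and the feature is inherited by every species below a mutated branch. The four-species tree, branch lengths, and `rate` are hypothetical.

```python
import itertools
import math
from collections import defaultdict

# Hypothetical tree ((a,b),(c,d)); each branch maps to (length, leaves below it).
BRANCHES = {
    "a": (1.0, {"a"}), "b": (1.0, {"b"}),
    "c": (1.0, {"c"}), "d": (1.0, {"d"}),
    "ab": (1.0, {"a", "b"}), "cd": (1.0, {"c", "d"}),
}

def labeling_prior(branches, rate):
    """Prior over feature extensions, summing over all mutation patterns."""
    prior = defaultdict(float)
    names = list(branches)
    for pattern in itertools.product([False, True], repeat=len(names)):
        prob, carriers = 1.0, set()
        for name, mutated in zip(names, pattern):
            length, leaves = branches[name]
            # Probability of at least one Poisson arrival on this branch.
            p_mut = 1.0 - math.exp(-rate * length)
            prob *= p_mut if mutated else 1.0 - p_mut
            if mutated:
                carriers |= leaves      # inheritance by all descendants
        prior[frozenset(carriers)] += prob
    return dict(prior)

if __name__ == "__main__":
    prior = labeling_prior(BRANCHES, rate=0.1)
    print(prior[frozenset("ab")], prior[frozenset("ac")])
```

In this sketch the extension {a, b} needs only one mutation (on the shared branch), while {a, c} needs two separate ones, so the monophyletic labeling gets higher prior probability; lengthening the shared branch raises it further.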

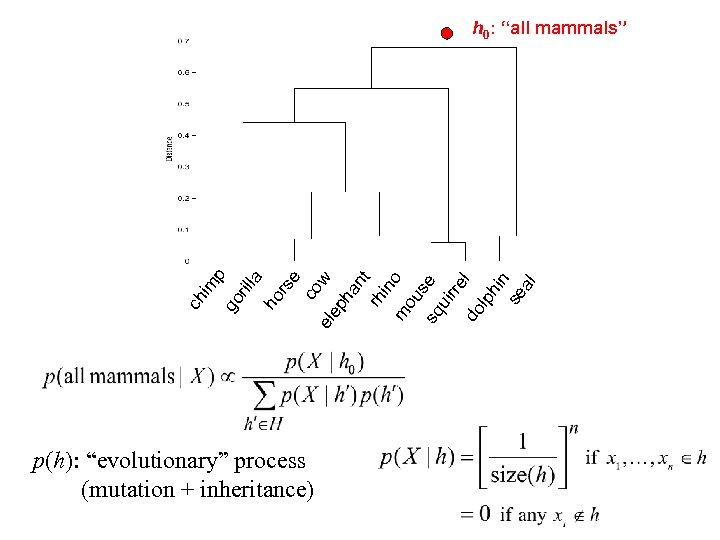

p(h): “evolutionary” process (mutation + inheritance) • h0: “all mammals” • Tree leaves: horse, cow, elephant, rhino, mouse, squirrel, dolphin, seal, gorilla, chimp.

Data vs. models (figure) • Models: Bayes (taxonomic), Max-sim, Sum-sim • Conclusion kind: “all mammals”, “horses” • Number of examples: 3; 2; 1, 2, or 3

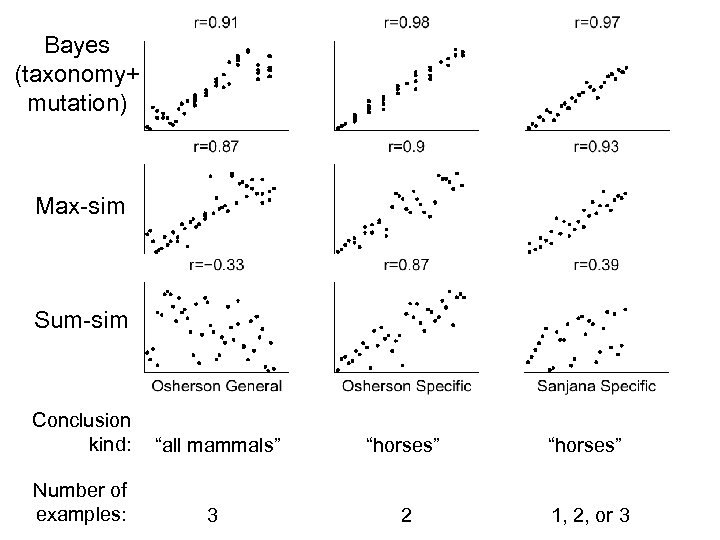

Data vs. models (figure) • Models: Bayes (taxonomy + mutation), Max-sim, Sum-sim • Conclusion kind: “all mammals”, “horses” • Number of examples: 3; 2; 1, 2, or 3

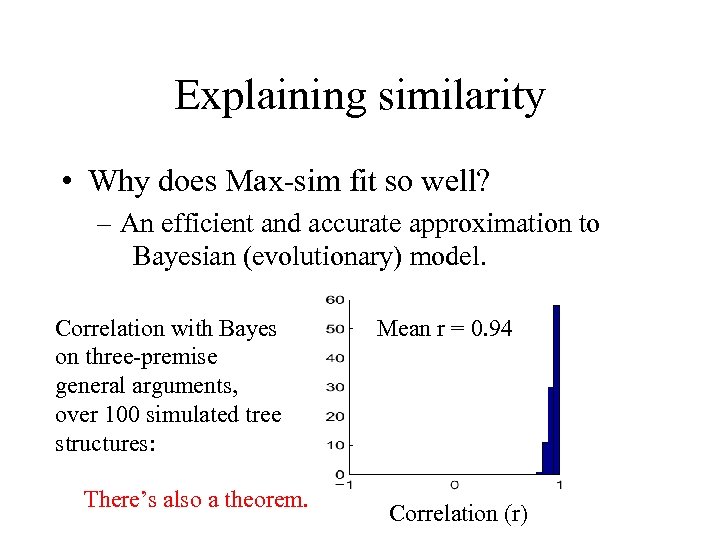

Explaining similarity • Why does Max-sim fit so well? – An efficient and accurate approximation to the Bayesian (evolutionary) model. – Correlation with Bayes on three-premise general arguments, over 100 simulated tree structures: mean r = 0.94 (figure axis: correlation r). – There’s also a theorem.

Biology: Summary • Theory-based statistical inference explains inductive reasoning in folk biology. • Mathematical modeling reveals people’s implicit theories about the world. – Category structure: taxonomic tree. – Feature distribution: stochastic mutation process + inheritance. • Clarifies traditional psychological models. – Why Max-sim over Sum-sim?

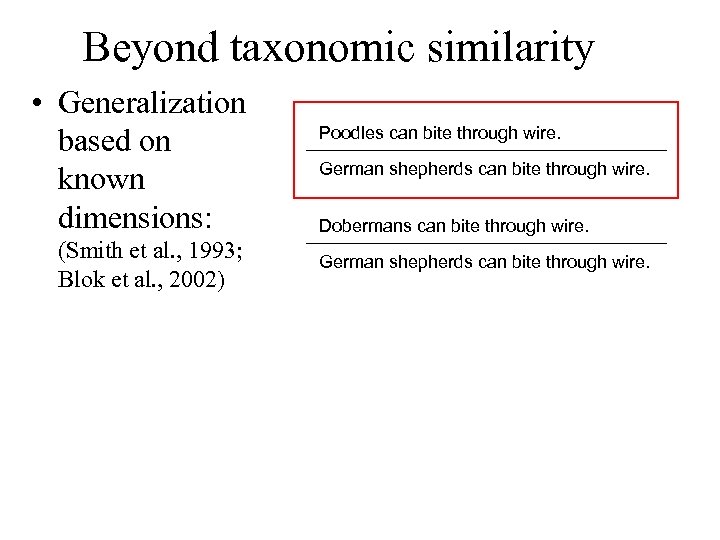

Beyond taxonomic similarity • Generalization based on known dimensions (Smith et al., 1993; Blok et al., 2002): – Poodles can bite through wire. Conclusion: German shepherds can bite through wire. – Dobermans can bite through wire. Conclusion: German shepherds can bite through wire.

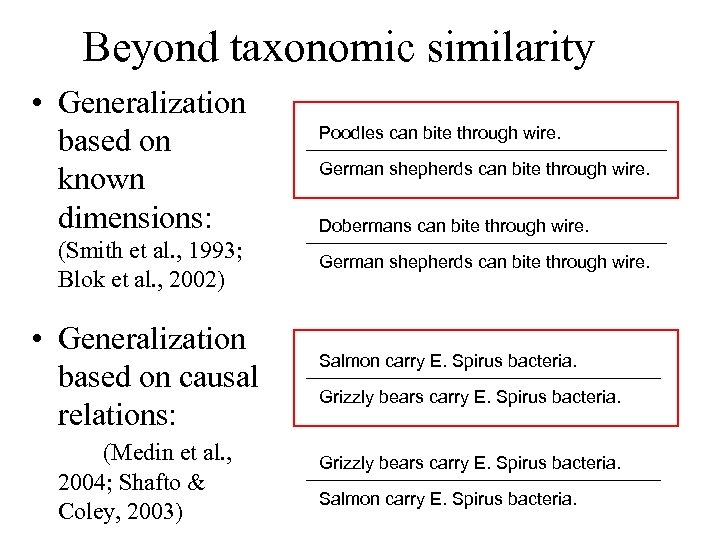

Beyond taxonomic similarity • Generalization based on known dimensions (Smith et al., 1993; Blok et al., 2002): – Poodles can bite through wire. Conclusion: German shepherds can bite through wire. – Dobermans can bite through wire. Conclusion: German shepherds can bite through wire. • Generalization based on causal relations (Medin et al., 2004; Shafto & Coley, 2003): – Salmon carry E. Spirus bacteria. Grizzly bears carry E. Spirus bacteria. Salmon carry E. Spirus bacteria.

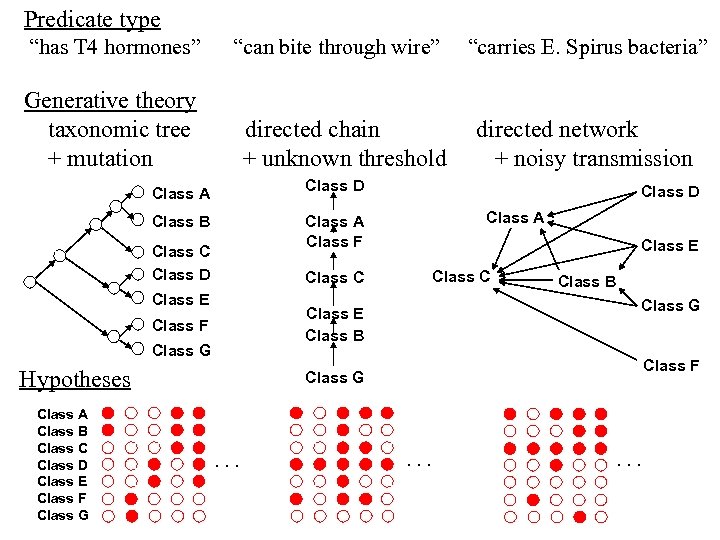

Predicate type and generative theory • “has T4 hormones”: taxonomic tree + mutation • “can bite through wire”: directed chain + unknown threshold • “carries E. Spirus bacteria”: directed network + noisy transmission • Hypotheses: each theory generates labelings over Class A, Class B, Class C, Class D, Class E, Class F, Class G, ... (figure)
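The "directed chain + unknown threshold" theory can be sketched as follows. This is an illustrative reading, not the lecture's implementation: classes are ordered along a known dimension, the property holds for every class at or above an unknown threshold, and the prior over thresholds is assumed uniform. The breed ordering in `ORDER` is a made-up example.

```python
# Hypothetical ordering from weakest to strongest on the relevant dimension.
ORDER = ["poodle", "german shepherd", "doberman"]

def threshold_strength(premise, conclusion, order=ORDER):
    """P(conclusion class has the property | premise class has it).

    Hypothesis k says the property holds for order[k:]. All thresholds
    are taken to be equally likely a priori (an assumption).
    """
    i_prem, i_conc = order.index(premise), order.index(conclusion)
    # Thresholds consistent with the premise class having the property.
    consistent = [k for k in range(len(order)) if k <= i_prem]
    # Of those, thresholds under which the conclusion class also has it.
    supporting = [k for k in consistent if k <= i_conc]
    return len(supporting) / len(consistent)

if __name__ == "__main__":
    print(threshold_strength("poodle", "german shepherd"))
    print(threshold_strength("doberman", "german shepherd"))
```

Under this assumed ordering, a premise about the weaker class settles the question for every stronger class, while a premise about a stronger class leaves the threshold uncertain, so the first argument comes out stronger.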

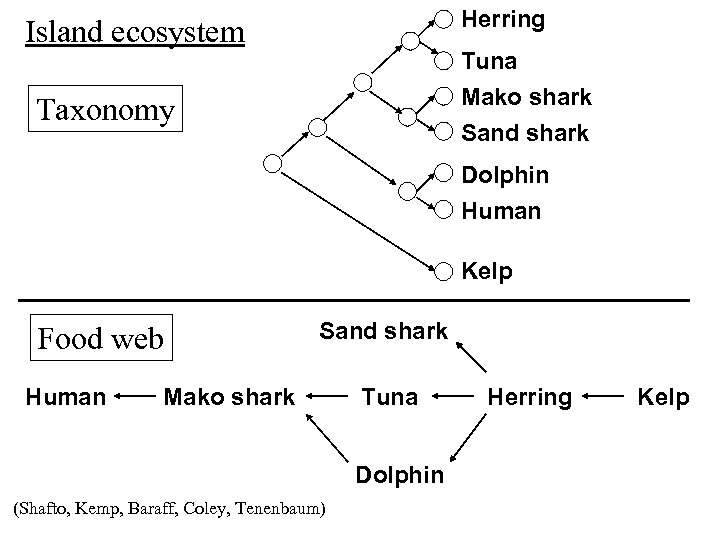

Island ecosystem (Shafto, Kemp, Baraff, Coley, Tenenbaum) • Species: herring, tuna, mako shark, sand shark, dolphin, human, kelp • Two structures over the same species (figures): taxonomy and food web
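A "directed network + noisy transmission" prior over a food web can be sketched by enumeration. Everything concrete here is an assumption for illustration: the three-species chain (the actual links are only in the figure), the background rate `base`, and the per-link transmission probability `trans`. A species carries the property if it acquires it spontaneously or if an active link brings it from prey that carries it.

```python
import itertools

SPECIES = ["kelp", "herring", "tuna"]
EDGES = [("kelp", "herring"), ("herring", "tuna")]   # (prey, predator), hypothetical

def joint(species, edges, base, trans):
    """Distribution over sets of carriers under noisy transmission."""
    dist = {}
    for bg in itertools.product([0, 1], repeat=len(species)):
        for act in itertools.product([0, 1], repeat=len(edges)):
            p = 1.0
            for x in bg:
                p *= base if x else 1.0 - base
            for x in act:
                p *= trans if x else 1.0 - trans
            has = {s for s, x in zip(species, bg) if x}
            changed = True
            while changed:                 # propagate along active links
                changed = False
                for (prey, pred), a in zip(edges, act):
                    if a and prey in has and pred not in has:
                        has.add(pred)
                        changed = True
            key = frozenset(has)
            dist[key] = dist.get(key, 0.0) + p
    return dist

def conditional(dist, target, given):
    """P(target carries the property | given carries it)."""
    num = sum(p for h, p in dist.items() if target in h and given in h)
    den = sum(p for h, p in dist.items() if given in h)
    return num / den

if __name__ == "__main__":
    d = joint(SPECIES, EDGES, base=0.1, trans=0.5)
    print(conditional(d, "tuna", "herring"), conditional(d, "herring", "tuna"))
```

The sketch is asymmetric in the way causal generalization is: inferring from prey to predator is stronger than inferring from predator to prey.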

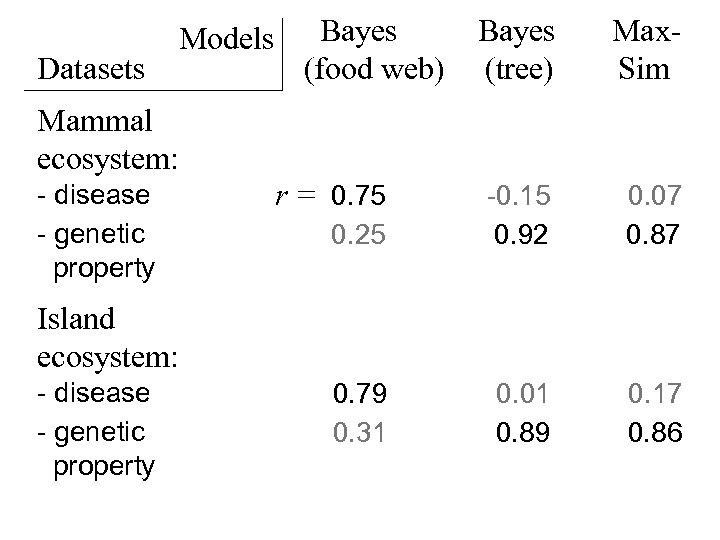

Datasets vs. models • Models: Bayes (food web), Bayes (tree), MaxSim • Datasets: Mammal ecosystem (disease, genetic property); Island ecosystem (disease, genetic property) • Correlations, in extraction order: 0.25, -0.15, 0.92, 0.07, 0.87, 0.79, 0.31, 0.01, 0.89, 0.17, 0.86; r = 0.75

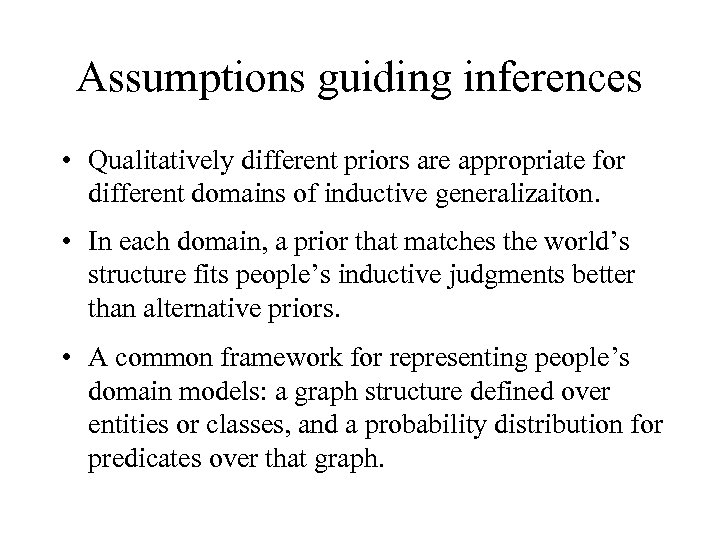

Assumptions guiding inferences • Qualitatively different priors are appropriate for different domains of inductive generalization. • In each domain, a prior that matches the world’s structure fits people’s inductive judgments better than alternative priors. • A common framework for representing people’s domain models: a graph structure defined over entities or classes, and a probability distribution for predicates over that graph.

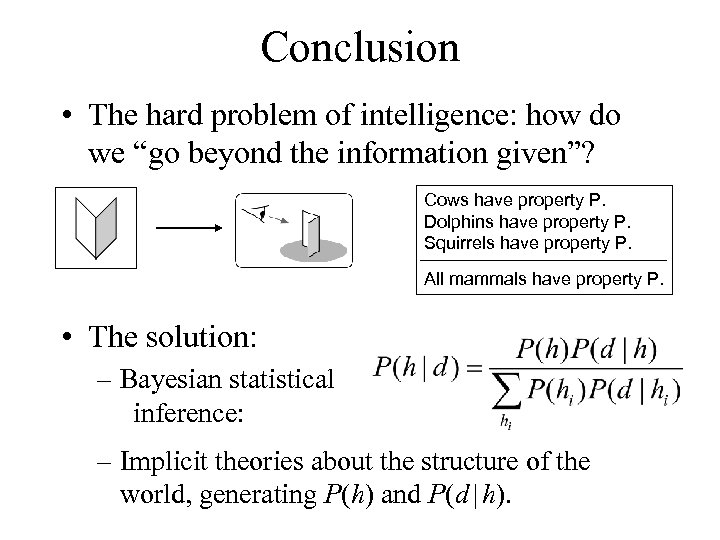

Conclusion • The hard problem of intelligence: how do we “go beyond the information given”? Cows have property P. Dolphins have property P. Squirrels have property P. All mammals have property P. • The solution: – Bayesian statistical inference: – Implicit theories about the structure of the world, generating P(h) and P(d | h).
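The Bayesian inference named above can be written out explicitly. The slide's own equation is not in the extracted text, so this is the standard form of Bayes' rule over a hypothesis space H, together with the usual hypothesis-averaging step for generalizing to a new species y (the symbols H, h', and y are notation introduced here):

```latex
P(h \mid d) = \frac{P(d \mid h)\, P(h)}{\sum_{h' \in H} P(d \mid h')\, P(h')},
\qquad
P(y \text{ has property } P \mid d) = \sum_{h \in H :\, y \in h} P(h \mid d)
```

Here the implicit theory supplies both ingredients: the prior P(h) over possible extensions of the property and the likelihood P(d | h) of the observed examples.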

Discussion • How is this intuitive theory of biology like or not like a scientific theory? • In what sense does the visual system have a theory of the world? How is it like or not like a cognitive theory of biology, or a scientific theory?
