- Number of slides: 45

6. Trust Negotiations and Trading Privacy for Trust*

Presented by: Prof. Bharat Bhargava, Department of Computer Sciences and Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University

with contributions from Prof. Leszek Lilien, Western Michigan University and CERIAS, Purdue University

* Supported in part by NSF grants IIS-0209059, IIS-0242840, ANI-0219110, and Cisco URP grant.

3/23/04

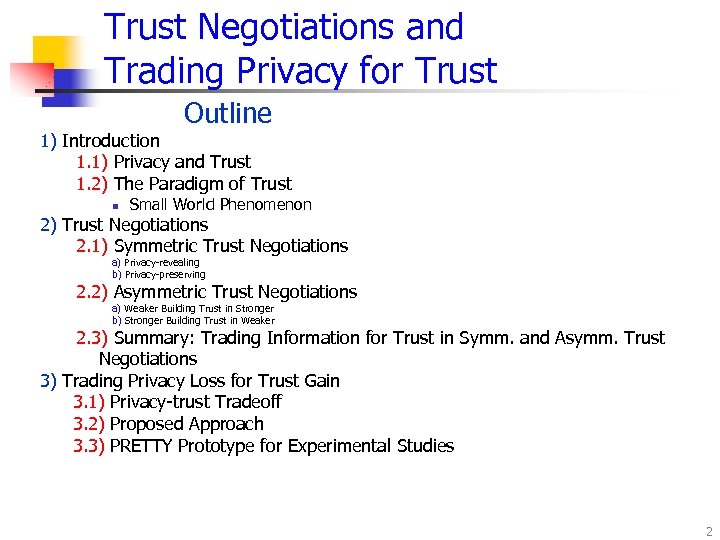

Trust Negotiations and Trading Privacy for Trust: Outline

- 1) Introduction
  - 1.1) Privacy and Trust
  - 1.2) The Paradigm of Trust
    - Small World Phenomenon
- 2) Trust Negotiations
  - 2.1) Symmetric Trust Negotiations
    - a) Privacy-revealing
    - b) Privacy-preserving
  - 2.2) Asymmetric Trust Negotiations
    - a) Weaker Building Trust in Stronger
    - b) Stronger Building Trust in Weaker
  - 2.3) Summary: Trading Information for Trust in Symm. and Asymm. Trust Negotiations
- 3) Trading Privacy Loss for Trust Gain
  - 3.1) Privacy-trust Tradeoff
  - 3.2) Proposed Approach
  - 3.3) PRETTY Prototype for Experimental Studies

1) Introduction (1)

1.1) Privacy and Trust

- Privacy Problem
  - Consider computer-based interactions
    - From a simple transaction to a complex collaboration
  - Interactions involve dissemination of private data
    - It is voluntary, “pseudo-voluntary,” or required by law
  - Threats of privacy violations result in lower trust
  - Lower trust leads to isolation and lack of collaboration
- Trust must be established
  - Data: provide quality and integrity
  - End-to-end communication: sender authentication, message integrity
  - Network routing algorithms: deal with malicious peers, intruders, security attacks

1) Introduction (2)

1.2) The Paradigm of Trust

- Trust: a paradigm of security for open computing environments (such as the Web)
  - Replaces/enhances CIA (confidentiality/integrity/availability) as one of the means for achieving security
    - But not as one of the goals of security (as CIA are)
- Trust is a powerful paradigm
  - Well tested in social models of interaction and systems
  - Trust is pervasive: constantly, if often unconsciously, applied in interactions between:
    - people / businesses / institutions / animals (e.g., a guide dog) / artifacts (sic! e.g., “Can I rely on my car for this long trip?”)
  - Able to simplify security solutions
    - By reducing complexity of interactions among human and artificial system components

1) Introduction (3)

Small World Phenomenon

- Small-world phenomenon [Milgram, 1967]
  - Find chains of acquaintances linking any two randomly chosen people in the United States who do not know one another (remember the Erdős number?)
  - Result: the average number of intermediate steps in a successful chain is between 5 and 6 => the six degrees of separation principle
- Relevance to security research
  - [Čapkun et al., 2002] A graph exhibits the small-world phenomenon if (roughly speaking) any two vertices in the graph are likely to be connected through a short sequence of intermediate vertices
  - Trust is useful because it inherently incorporates the small-world phenomenon
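
The graph-theoretic reading of the small-world phenomenon can be illustrated with a short sketch. It is not taken from the slides: the ring network, the two shortcut edges, and the function names (`average_path_length`, `ring`, `add_edge`) are all assumptions chosen for illustration. It measures the mean number of hops between vertices with a breadth-first search and shows that a few long-range links shorten typical chains.

```python
from collections import deque


def average_path_length(adj):
    """Mean shortest-path length over all ordered pairs of distinct, reachable vertices."""
    total, pairs = 0, 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # plain breadth-first search from `source`
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def ring(n):
    """Hypothetical toy network: each vertex knows only its two ring neighbours."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def add_edge(adj, u, v):
    """Add an undirected 'acquaintance' link between u and v."""
    adj[u].add(v)
    adj[v].add(u)


plain = ring(20)
with_shortcuts = ring(20)
for u, v in [(0, 10), (5, 15)]:  # two assumed long-range acquaintances
    add_edge(with_shortcuts, u, v)

print(average_path_length(plain))           # 100/19, about 5.26 hops
print(average_path_length(with_shortcuts))  # strictly smaller than the plain ring
```

On the plain 20-vertex ring the average is exactly 100/19 hops; adding edges can only shorten paths, so the second figure is lower.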

2) Trust Negotiations

- Trust negotiations
  - Establish mutual trust between interacting parties
- Types of trust negotiations [L. Lilien and B. Bhargava, 2006]
  - 2.1) Symmetric trust negotiations: partners of “similar strength”
    - Overwhelmingly popular in the literature
  - 2.2) Asymmetric trust negotiations: a “weaker” and a “stronger” partner
    - Identified by us (as far as we know)

Symmetric and Asymmetric Trust Negotiations

- 2.1) Symmetric trust negotiations
  - Two types:
    - a) Symmetric “privacy-revealing” negotiations: disclose certificates or policies to the partner
    - b) Symmetric “privacy-preserving” negotiations: preserve privacy of certificates and policies
  - Examples: individual to individual / most B2B / ...
- 2.2) Asymmetric trust negotiations
  - Two types:
    - a) Weaker Building Trust in Stronger
    - b) Stronger Building Trust in Weaker
  - Examples: individual to institution / small business to large business / ...

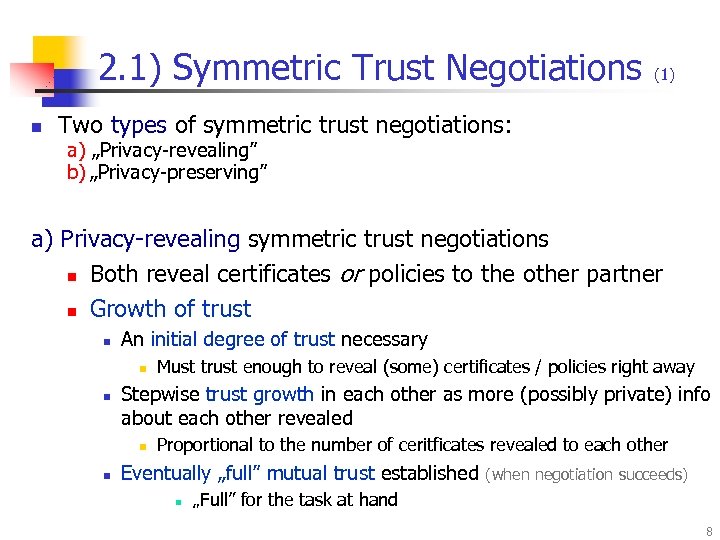

2.1) Symmetric Trust Negotiations (1)

- Two types of symmetric trust negotiations:
  - a) “Privacy-revealing”
  - b) “Privacy-preserving”
- a) Privacy-revealing symmetric trust negotiations
  - Both reveal certificates or policies to the other partner
  - Growth of trust
    - An initial degree of trust necessary
      - Must trust enough to reveal (some) certificates / policies right away
    - Stepwise trust growth in each other as more (possibly private) info about each other is revealed
      - Proportional to the number of certificates revealed to each other
    - Eventually “full” mutual trust established (when negotiation succeeds)
      - “Full” for the task at hand
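
The stepwise growth described above can be pictured with a toy model. The slide only says that trust grows in proportion to the number of certificates revealed; the linear formula, the starting value of 0.2, and the name `trust_after` are assumptions made here purely for illustration.

```python
def trust_after(revealed, required, initial_trust=0.2):
    """Assumed linear model: trust rises from `initial_trust` to 1.0 ("full" trust
    for the task at hand) in equal steps, one step per certificate revealed."""
    if required <= 0 or not 0 <= revealed <= required:
        raise ValueError("need 0 <= revealed <= required and required > 0")
    return initial_trust + (1.0 - initial_trust) * revealed / required


# Trust level after each of four exchanged certificates (hypothetical negotiation).
for k in range(5):
    print(k, round(trust_after(k, 4), 2))
```

In a privacy-preserving negotiation the same function would not apply, since no intermediate degrees of trust are established.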

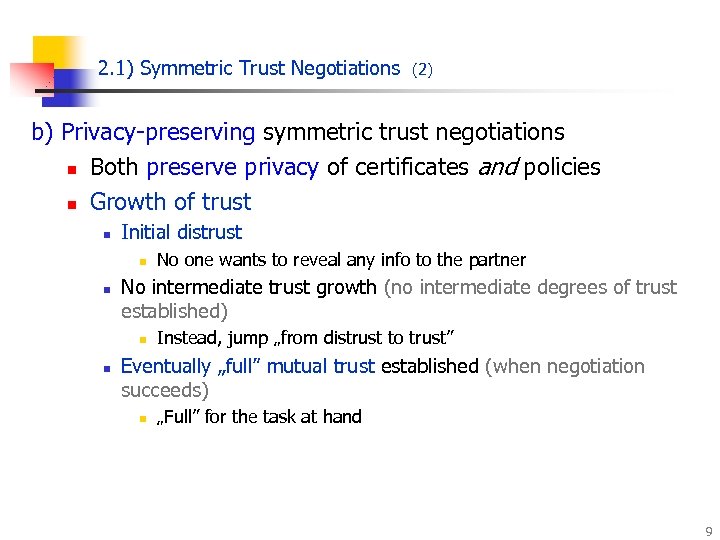

2.1) Symmetric Trust Negotiations (2)

- b) Privacy-preserving symmetric trust negotiations
  - Both preserve privacy of certificates and policies
  - Growth of trust
    - Initial distrust
      - No one wants to reveal any info to the partner
    - No intermediate trust growth (no intermediate degrees of trust established)
      - Instead, jump “from distrust to trust”
    - Eventually “full” mutual trust established (when negotiation succeeds)
      - “Full” for the task at hand

2.2) Asymmetric Trust Negotiations

- Weaker and Stronger build trust in each other
  - a) Weaker building trust in Stronger “a priori”
    - E.g., a customer looking for a mortgage loan first selects a reputable bank, and only then starts negotiations
  - b) Stronger building trust in Weaker “in real time”
    - E.g., the bank asks the customer for a lot of private info (incl. personal income and tax data, …) to establish trust in her

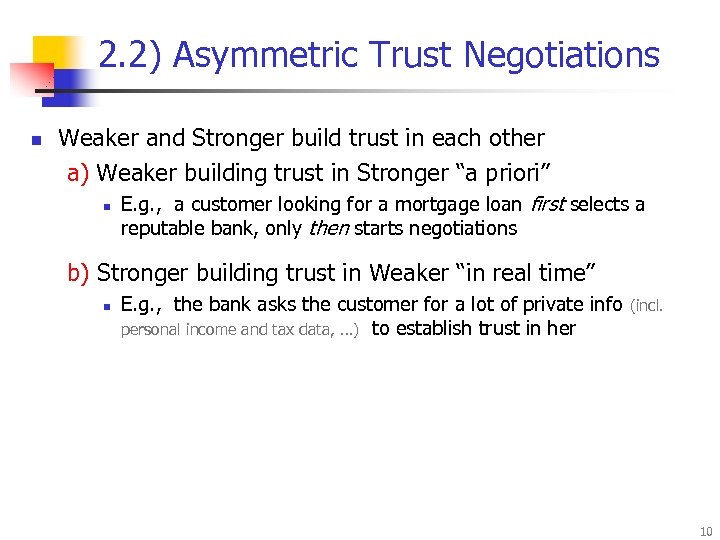

2.2) Asymmetric Trust Negotiations (2)

a) Weaker Building Trust in Stronger

- Means of building trust by Weaker in Stronger (a priori):
  - Ask around
    - Family, friends, co-workers, …
  - Check partner’s history and stated philosophy
    - Accomplishments, failures and associated recoveries, …
    - Mission, goals, policies (incl. privacy policies), …
  - Observe partner’s behavior
    - Trustworthy or not, stable or not, …
    - Problem: Needs time for a fair judgment
  - Check reputation databases
    - Better Business Bureau, consumer advocacy groups, …
  - Verify partner’s credentials
    - Certificates and awards, memberships in trust-building organizations (e.g., BBB), …
  - Protect yourself against partner’s misbehavior
    - Trusted third-party, security deposit, prepayment, buying insurance, …

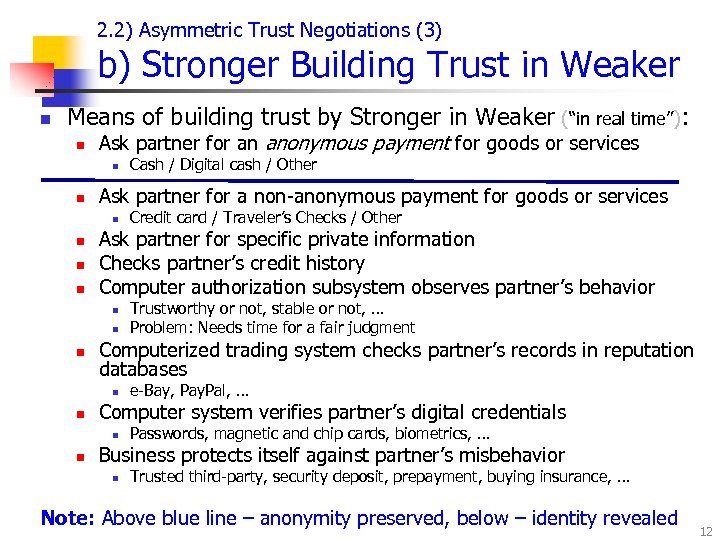

2.2) Asymmetric Trust Negotiations (3)

b) Stronger Building Trust in Weaker

- Means of building trust by Stronger in Weaker (“in real time”):
  - Ask partner for an anonymous payment for goods or services
    - Cash / Digital cash / Other
  - Ask partner for a non-anonymous payment for goods or services
    - Credit card / Traveler’s Checks / Other
  - Ask partner for specific private information
    - Checks partner’s credit history
  - Computer authorization subsystem observes partner’s behavior
    - Trustworthy or not, stable or not, …
    - Problem: Needs time for a fair judgment
  - Computerized trading system checks partner’s records in reputation databases
    - eBay, PayPal, …
  - Computer system verifies partner’s digital credentials
    - Passwords, magnetic and chip cards, biometrics, …
  - Business protects itself against partner’s misbehavior
    - Trusted third-party, security deposit, prepayment, buying insurance, …

Note: On the slide, a blue line divides this list; above the line anonymity is preserved, below it identity is revealed.

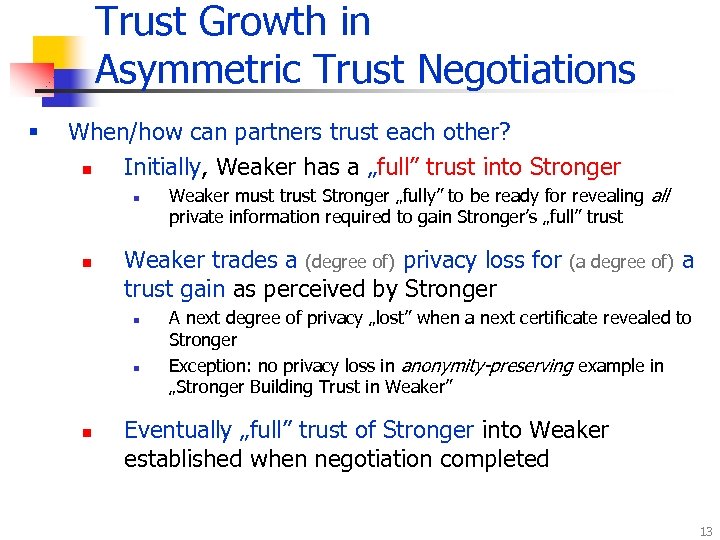

Trust Growth in Asymmetric Trust Negotiations

- When/how can partners trust each other?
  - Initially, Weaker has “full” trust in Stronger
    - Weaker must trust Stronger “fully” to be ready to reveal all private information required to gain Stronger’s “full” trust
  - Weaker trades (a degree of) privacy loss for (a degree of) trust gain as perceived by Stronger
    - A next degree of privacy is “lost” when a next certificate is revealed to Stronger
    - Exception: no privacy loss in the anonymity-preserving example in “Stronger Building Trust in Weaker”
  - Eventually “full” trust of Stronger in Weaker is established when the negotiation is completed

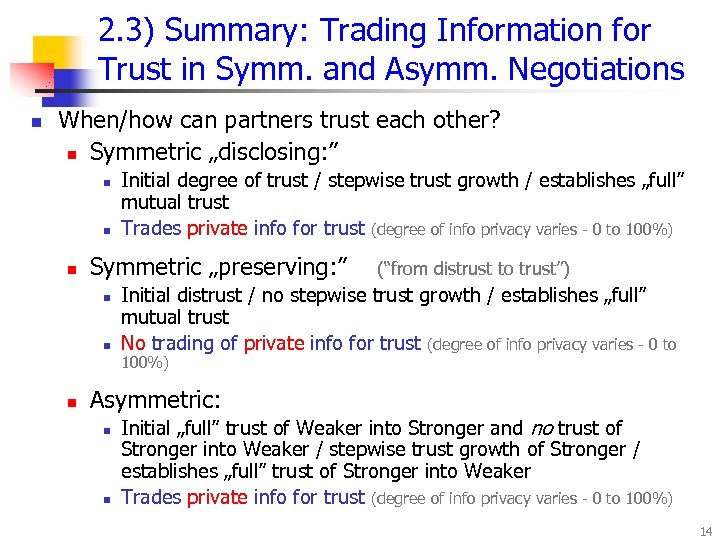

2.3) Summary: Trading Information for Trust in Symm. and Asymm. Negotiations

- When/how can partners trust each other?
  - Symmetric “disclosing”:
    - Initial degree of trust / stepwise trust growth / establishes “full” mutual trust
    - Trades private info for trust (degree of info privacy varies from 0 to 100%)
  - Symmetric “preserving” (“from distrust to trust”):
    - Initial distrust / no stepwise trust growth / establishes “full” mutual trust
    - No trading of private info for trust (degree of info privacy varies from 0 to 100%)
  - Asymmetric:
    - Initial “full” trust of Weaker in Stronger and no trust of Stronger in Weaker / stepwise trust growth of Stronger / establishes “full” trust of Stronger in Weaker
    - Trades private info for trust (degree of info privacy varies from 0 to 100%)

3) Trading Privacy Loss for Trust Gain

- We’re focusing on asymmetric trust negotiations: trading privacy for trust
- Approach to trading privacy for trust [Zhong and Bhargava, Purdue]:
  - Formalize the privacy-trust tradeoff problem
  - Estimate privacy loss due to disclosing a credential set
  - Estimate trust gain due to disclosing a credential set
  - Develop algorithms that minimize privacy loss for required trust gain
    - Because nobody likes losing more privacy than necessary
- More details available

Related Work

- Automated trust negotiation (ATN) [Yu, Winslett, and Seamons, 2003]
  - Tradeoff between the length of the negotiation, the amount of information disclosed, and the computation effort
- Trust-based decision making [Wagealla et al., 2003]
  - Trust lifecycle management, with considerations of both trust and risk assessments
- Trading privacy for trust [Seigneur and Jensen, 2004]
  - Privacy as the linkability of pieces of evidence to a pseudonym; measured by using nymity [Goldberg, thesis, 2000]

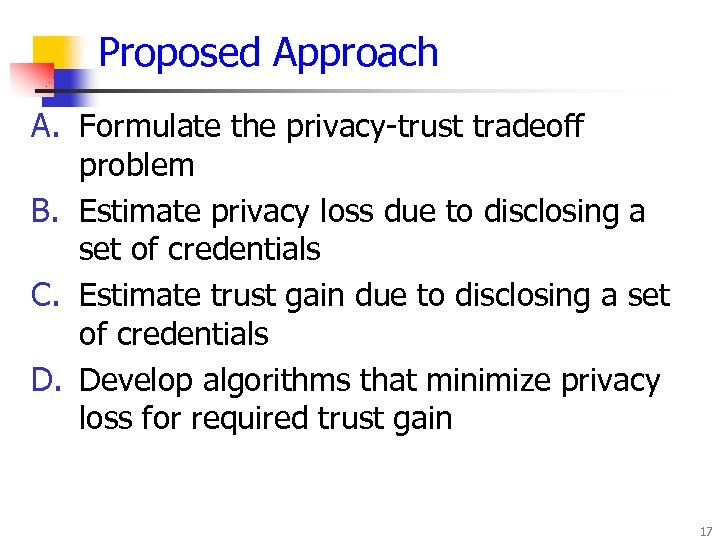

Proposed Approach

- A. Formulate the privacy-trust tradeoff problem
- B. Estimate privacy loss due to disclosing a set of credentials
- C. Estimate trust gain due to disclosing a set of credentials
- D. Develop algorithms that minimize privacy loss for required trust gain

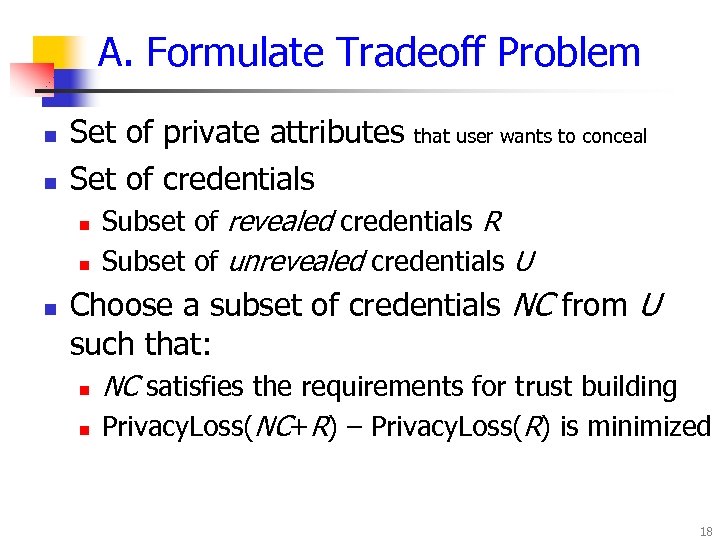

A. Formulate Tradeoff Problem

- Set of private attributes that user wants to conceal
- Set of credentials
  - Subset of revealed credentials R
  - Subset of unrevealed credentials U
- Choose a subset of credentials NC from U such that:
  - NC satisfies the requirements for trust building
  - PrivacyLoss(NC ∪ R) − PrivacyLoss(R) is minimized
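
The selection problem can be sketched as an exhaustive search over subsets of U. The slides do not define the privacy or trust metrics at this point, so the sketch assumes two stand-ins: privacy loss is the number of distinct private attributes a credential set exposes, and the trust requirement is a threshold on summed per-credential trust points. The credential names, the numbers, and the functions `privacy_loss` and `select_credentials` are hypothetical.

```python
from itertools import combinations


def privacy_loss(creds, exposes):
    """Assumed metric: count of distinct private attributes exposed by `creds`."""
    return len(set().union(*(exposes[c] for c in creds)))


def select_credentials(revealed, unrevealed, exposes, trust_points, required_trust):
    """Brute force: among subsets NC of U that meet the (assumed) trust threshold,
    return the one minimizing PrivacyLoss(NC ∪ R) − PrivacyLoss(R)."""
    base = privacy_loss(revealed, exposes)
    best, best_extra = None, None
    for k in range(len(unrevealed) + 1):
        for nc in combinations(sorted(unrevealed), k):
            if sum(trust_points[c] for c in nc) < required_trust:
                continue  # NC does not satisfy the trust-building requirement
            extra = privacy_loss(set(nc) | set(revealed), exposes) - base
            if best_extra is None or extra < best_extra:
                best, best_extra = set(nc), extra
    return best, best_extra


# Hypothetical data: which private attributes each credential exposes.
exposes = {
    "library_card": {"name"},
    "student_id": {"name", "university"},
    "credit_card": {"name", "card_number"},
    "drivers_license": {"name", "address", "birth_date"},
}
trust_points = {"library_card": 1, "student_id": 2, "credit_card": 3, "drivers_license": 3}

R = {"library_card"}
U = {"student_id", "credit_card", "drivers_license"}
print(select_credentials(R, U, exposes, trust_points, required_trust=3))
# → ({'credit_card'}, 1)
```

Because "name" is already exposed through R, the credit card adds only one new attribute, which is why the loss is measured relative to R rather than in isolation. Exhaustive search is exponential in the size of U and is used here only to make the objective concrete.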

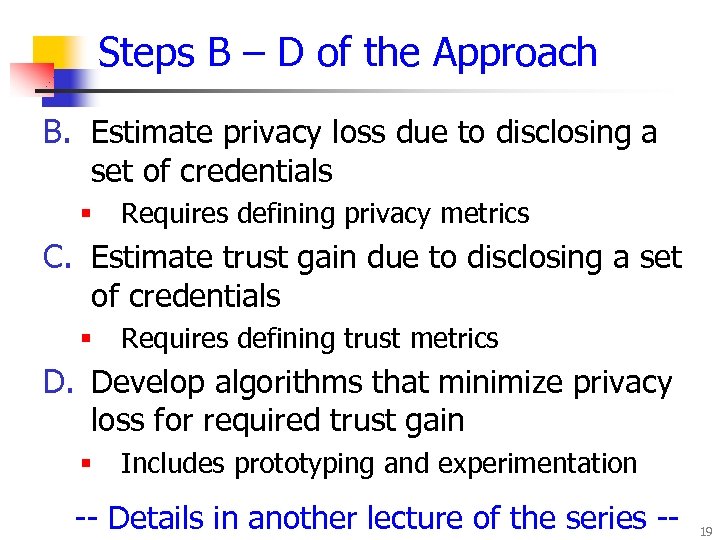

Steps B to D of the Approach

- B. Estimate privacy loss due to disclosing a set of credentials
  - Requires defining privacy metrics
- C. Estimate trust gain due to disclosing a set of credentials
  - Requires defining trust metrics
- D. Develop algorithms that minimize privacy loss for required trust gain
  - Includes prototyping and experimentation

Details in another lecture of the series.

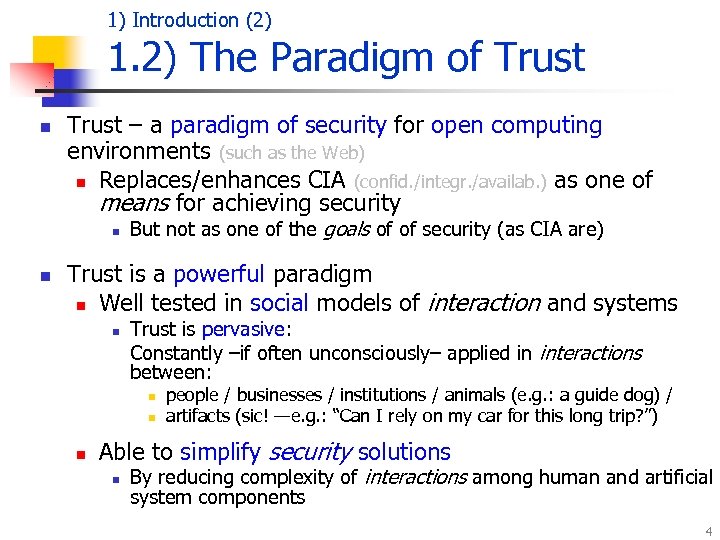

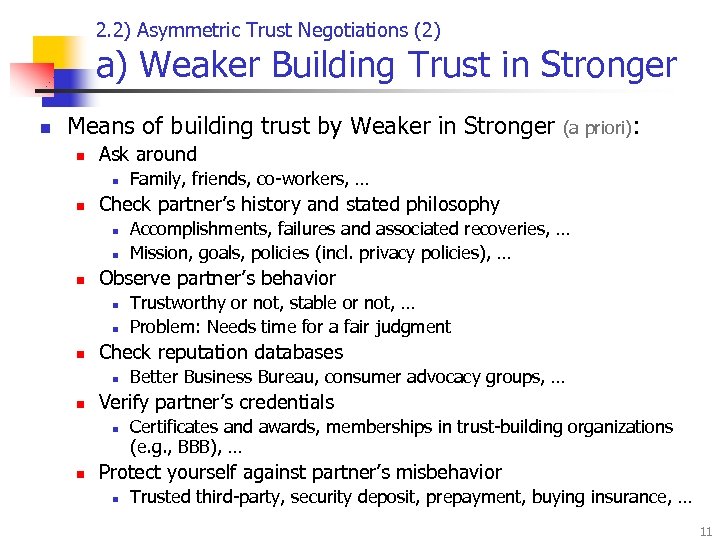

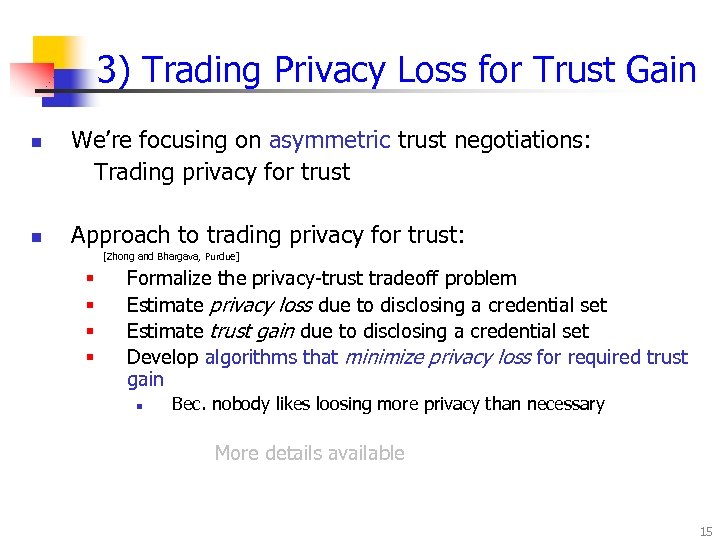

3.3) PRETTY Prototype for Experimental Studies

[Figure: PRETTY prototype diagram. Recoverable labels: information-flow steps (1), (2), (3), (4) and sub-steps [2a], [2b], [2c1], [2c2], [2d]; “User Role”.]

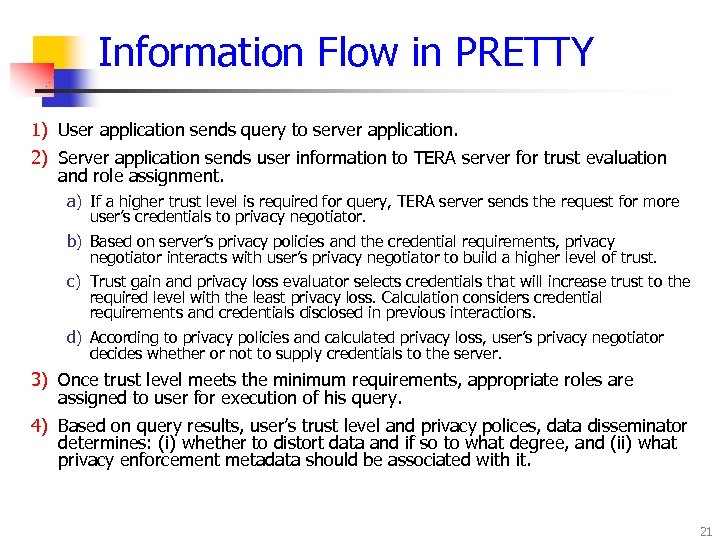

Information Flow in PRETTY

- 1) User application sends query to server application.
- 2) Server application sends user information to TERA server for trust evaluation and role assignment.
  - a) If a higher trust level is required for the query, TERA server sends a request for more of the user’s credentials to the privacy negotiator.
  - b) Based on the server’s privacy policies and the credential requirements, the privacy negotiator interacts with the user’s privacy negotiator to build a higher level of trust.
  - c) Trust gain and privacy loss evaluator selects credentials that will increase trust to the required level with the least privacy loss. The calculation considers credential requirements and credentials disclosed in previous interactions.
  - d) According to privacy policies and calculated privacy loss, the user’s privacy negotiator decides whether or not to supply credentials to the server.
- 3) Once the trust level meets the minimum requirements, appropriate roles are assigned to the user for execution of his query.
- 4) Based on query results, the user’s trust level and privacy policies, the data disseminator determines: (i) whether to distort data and if so to what degree, and (ii) what privacy enforcement metadata should be associated with it.
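
Steps 2a to 3 of this flow can be pictured as a small negotiation loop. This is a heavily simplified sketch, not the PRETTY implementation: the function `handle_query`, the tuple layout of a credential, the greedy least-loss-first choice standing in for the evaluator of step 2c, and the single `max_privacy_loss` threshold standing in for the user's privacy policy are all assumptions. Step 4 (data dissemination) is not modeled.

```python
def handle_query(required_trust, current_trust, candidates, max_privacy_loss):
    """Toy negotiation loop.

    candidates: list of (name, trust_gain, privacy_loss) tuples describing the
    user's not-yet-disclosed credentials (hypothetical numeric scores).
    Returns (outcome, names of credentials disclosed).
    """
    disclosed = []
    # Assumed stand-in for step 2c: offer the least privacy-costly credential first.
    remaining = sorted(candidates, key=lambda c: c[2])
    while current_trust < required_trust:      # step 2a: more trust is required
        if not remaining:
            return "denied", disclosed         # nothing left to disclose
        name, gain, loss = remaining.pop(0)
        if loss > max_privacy_loss:            # step 2d: user's negotiator refuses
            return "denied", disclosed
        disclosed.append(name)                 # step 2b: credential is supplied
        current_trust += gain
    return "role assigned", disclosed          # step 3


print(handle_query(5, 2, [("a", 2, 1), ("b", 3, 4), ("c", 1, 2)], max_privacy_loss=3))
# → ('role assigned', ['a', 'c'])
```

A greedy order is not guaranteed to minimize total privacy loss; it only keeps the sketch short.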

References

- L. Lilien and B. Bhargava, “A scheme for privacy-preserving data dissemination,” IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans, Vol. 36(3), May 2006, pp. 503-506.
- B. Bhargava, L. Lilien, A. Rosenthal, and M. Winslett, “Pervasive Trust,” IEEE Intelligent Systems, Sept./Oct. 2004, pp. 74-77.
- B. Bhargava and L. Lilien, “Private and Trusted Collaborations,” Secure Knowledge Management (SKM 2004): A Workshop, 2004.
- B. Bhargava, C. Farkas, L. Lilien, and F. Makedon, “Trust, Privacy, and Security. Summary of a Workshop Breakout Session at the National Science Foundation Information and Data Management (IDM) Workshop held in Seattle, Washington, September 14-16, 2003,” CERIAS Tech Report 2003-34, CERIAS, Purdue University, Nov. 2003. http://www2.cs.washington.edu/nsf2003 or https://www.cerias.purdue.edu/tools_and_resources/bibtex_archive/2003-34.pdf
- “Internet Security Glossary,” The Internet Society, Aug. 2004; www.faqs.org/rfcs/rfc2828.html.
- “Sensor Nation: Special Report,” IEEE Spectrum, vol. 41, no. 7, 2004.
- R. Khare and A. Rifkin, “Trust Management on the World Wide Web,” First Monday, vol. 3, no. 6, 1998; www.firstmonday.dk/issues/issue3_6/khare.
- M. Richardson, R. Agrawal, and P. Domingos, “Trust Management for the Semantic Web,” Proc. 2nd Int’l Semantic Web Conf., LNCS 2870, Springer-Verlag, 2003, pp. 351-368.
- P. Schiegg et al., “Supply Chain Management Systems: A Survey of the State of the Art,” Collaborative Systems for Production Management: Proc. 8th Int’l Conf. Advances in Production Management Systems (APMS 2002), IFIP Conf. Proc. 257, Kluwer, 2002.
- N. C. Romano Jr. and J. Fjermestad, “Electronic Commerce Customer Relationship Management: A Research Agenda,” Information Technology and Management, vol. 4, nos. 2-3, 2003, pp. 233-258.

End 3/23/04

Using Entropy to Trade Privacy for Trust

Yuhui Zhong, Bharat Bhargava

{zhong, bb}@cs.purdue.edu

Department of Computer Sciences, Purdue University

This work is supported by NSF grant IIS-0209059

3/23/04

Problem motivation

- Privacy and trust form an adversarial relationship
  - Internet users worry about revealing personal data. This fear held back $15 billion in online revenue in 2001.
  - Users have to provide digital credentials that contain private information in order to build trust in open environments like the Internet.
- Research is needed to quantify the tradeoff between privacy and trust

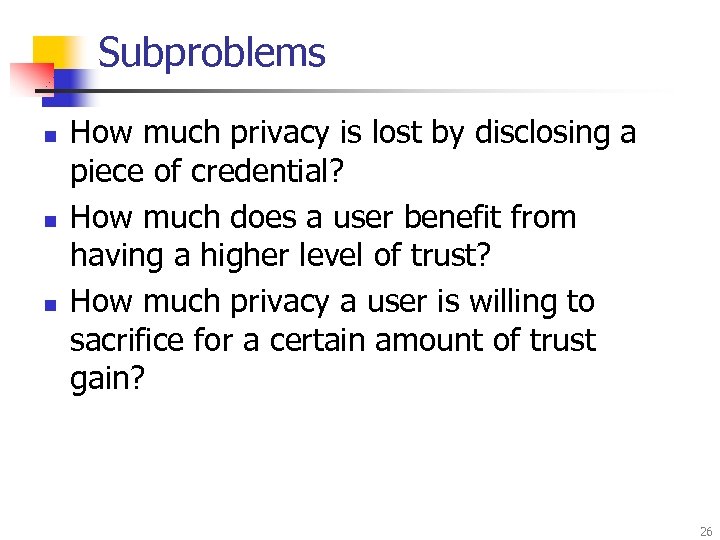

Subproblems

- How much privacy is lost by disclosing a credential?
- How much does a user benefit from having a higher level of trust?
- How much privacy is a user willing to sacrifice for a certain amount of trust gain?

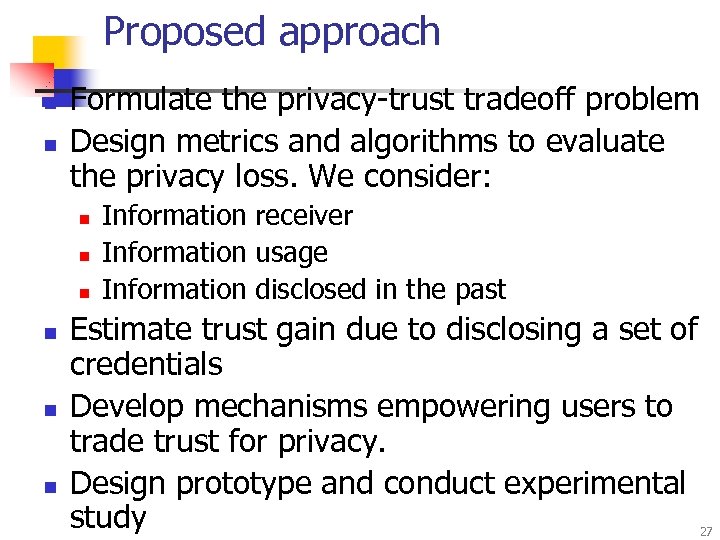

Proposed approach

- Formulate the privacy-trust tradeoff problem
- Design metrics and algorithms to evaluate the privacy loss. We consider:
  - Information receiver
  - Information usage
  - Information disclosed in the past
- Estimate trust gain due to disclosing a set of credentials
- Develop mechanisms empowering users to trade privacy for trust
- Design prototype and conduct experimental study

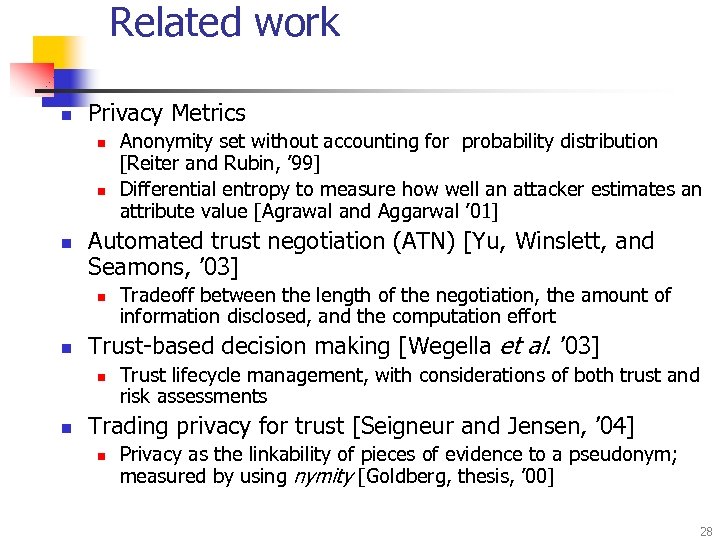

Related work

- Privacy metrics
  - Anonymity set without accounting for probability distribution [Reiter and Rubin, ’99]
  - Differential entropy to measure how well an attacker estimates an attribute value [Agrawal and Aggarwal, ’01]
- Automated trust negotiation (ATN) [Yu, Winslett, and Seamons, ’03]
  - Tradeoff between the length of the negotiation, the amount of information disclosed, and the computation effort
- Trust-based decision making [Wagealla et al., ’03]
  - Trust lifecycle management, with considerations of both trust and risk assessments
- Trading privacy for trust [Seigneur and Jensen, ’04]
  - Privacy as the linkability of pieces of evidence to a pseudonym; measured by using nymity [Goldberg, thesis, ’00]

Formulation of tradeoff problem (1)

- Set of private attributes that user wants to conceal
- Set of credentials
  - R(i): subset of credentials revealed to receiver i
  - U(i): credentials unrevealed to receiver i
- Credential set with minimal privacy loss
  - A subset of credentials NC from U(i)
  - NC satisfies the requirements for trust building
  - PrivacyLoss(NC ∪ R(i)) − PrivacyLoss(R(i)) is minimized
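The selection of NC can be sketched as an exhaustive search over subsets of U(i). This is a minimal illustration, not the authors' algorithm: the credential names, the attribute sensitivities, the trust points, and the helpers `privacy_loss` and `satisfies` are all hypothetical, and brute force is only practical for small credential sets.

```python
from itertools import combinations

# Hypothetical data: attributes exposed by each credential, attribute
# sensitivities, and the trust each credential earns.
EXPOSES = {
    "driver_license": {"age", "address"},
    "student_id": {"age", "school"},
    "credit_card": {"name", "bank"},
    "library_card": {"address"},
}
SENSITIVITY = {"age": 3, "address": 4, "school": 1, "name": 2, "bank": 5}
TRUST_POINTS = {"driver_license": 5, "student_id": 3,
                "credit_card": 6, "library_card": 1}


def privacy_loss(credentials):
    """Assumed loss model: total sensitivity of all attributes exposed."""
    exposed = set()
    for credential in credentials:
        exposed |= EXPOSES[credential]
    return sum(SENSITIVITY[attr] for attr in exposed)


def satisfies(nc, required_points=5):
    """Assumed trust requirement: NC must earn enough trust points."""
    return sum(TRUST_POINTS[c] for c in nc) >= required_points


def select_credentials(unrevealed, revealed):
    """Find NC within U(i) minimizing PrivacyLoss(NC | R(i)) - PrivacyLoss(R(i))."""
    base = privacy_loss(revealed)
    best, best_loss = None, float("inf")
    for size in range(len(unrevealed) + 1):
        for combo in combinations(sorted(unrevealed), size):
            nc = frozenset(combo)
            if not satisfies(nc):
                continue
            marginal = privacy_loss(nc | revealed) - base
            if marginal < best_loss:
                best, best_loss = nc, marginal
    return best, best_loss


revealed = frozenset({"library_card"})                    # R(i)
unrevealed = frozenset({"driver_license", "student_id",
                        "credit_card"})                   # U(i)
best_nc, best_marginal = select_credentials(unrevealed, revealed)
```

In this toy data the driver license is selected: the address it exposes was already revealed by the library card, so only the age adds new loss.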

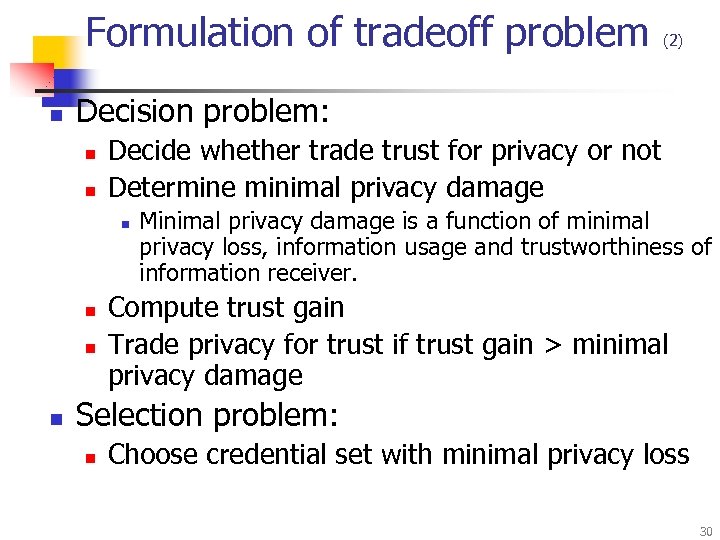

Formulation of tradeoff problem (2)

- Decision problem:
  - Decide whether or not to trade privacy for trust
  - Determine minimal privacy damage
    - Minimal privacy damage is a function of minimal privacy loss, information usage, and trustworthiness of the information receiver
  - Compute trust gain
  - Trade privacy for trust if trust gain > minimal privacy damage
- Selection problem:
  - Choose credential set with minimal privacy loss

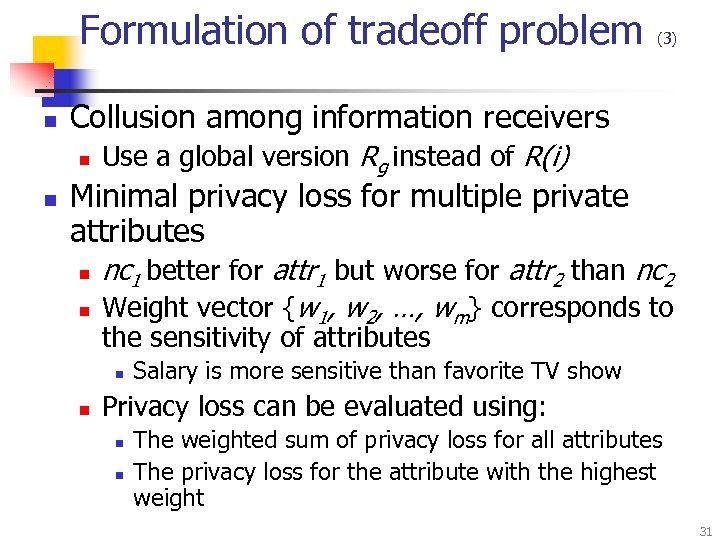

Formulation of tradeoff problem (3)

- Collusion among information receivers
  - Use a global version $R_g$ instead of R(i)
- Minimal privacy loss for multiple private attributes
  - nc1 may be better for attr1 but worse for attr2 than nc2
  - Weight vector $\{w_1, w_2, \dots, w_m\}$ corresponds to the sensitivity of attributes
    - Salary is more sensitive than favorite TV show
  - Privacy loss can be evaluated using:
    - The weighted sum of privacy loss for all attributes
    - The privacy loss for the attribute with the highest weight
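The two evaluation rules for multiple attributes can be compared side by side. The sketch below uses made-up weights and per-attribute losses (in arbitrary units); the function names are illustrative only.

```python
def weighted_sum_loss(losses, weights):
    """Rule 1: weighted sum of the privacy loss over all attributes."""
    return sum(weights[attr] * loss for attr, loss in losses.items())


def most_sensitive_loss(losses, weights):
    """Rule 2: privacy loss of the attribute with the highest weight."""
    top_attr = max(weights, key=weights.get)
    return losses.get(top_attr, 0.0)


# Hypothetical sensitivities: salary matters far more than favorite TV show.
weights = {"salary": 0.9, "favorite_tv_show": 0.1}

# Hypothetical per-attribute losses for two candidate credential sets.
nc1 = {"salary": 0.4, "favorite_tv_show": 5.0}  # better for salary
nc2 = {"salary": 0.5, "favorite_tv_show": 0.1}  # better for TV show

sum_nc1 = weighted_sum_loss(nc1, weights)
sum_nc2 = weighted_sum_loss(nc2, weights)
top_nc1 = most_sensitive_loss(nc1, weights)
top_nc2 = most_sensitive_loss(nc2, weights)
```

With these numbers the weighted sum prefers nc2 while the highest-weight rule prefers nc1, so the choice of rule can change which credential set counts as minimal.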

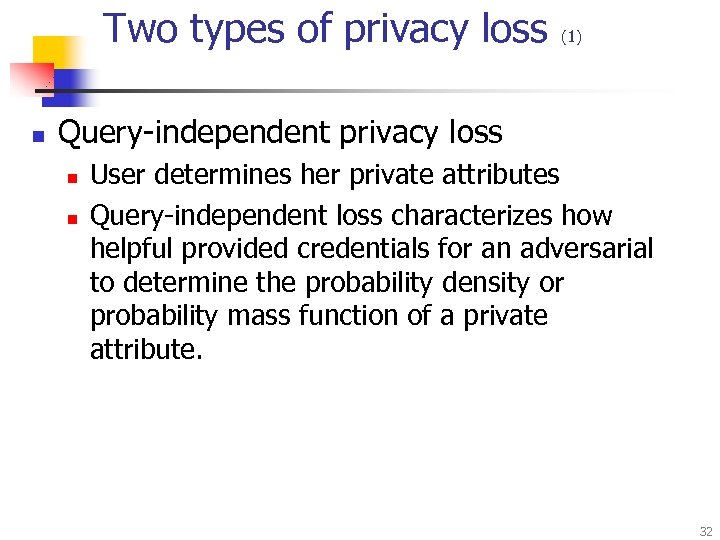

Two types of privacy loss (1)

- Query-independent privacy loss
  - User determines her private attributes
  - Query-independent loss characterizes how much the provided credentials help an adversary determine the probability density or probability mass function of a private attribute

Two types of privacy loss (2)

- Query-dependent privacy loss
  - User determines a set of potential queries Q that she is reluctant to answer
  - Provided credentials reveal information about attribute set A; Q is a function of A
  - Query-dependent loss characterizes how much the provided credentials help an adversary determine the probability density or probability mass function of Q

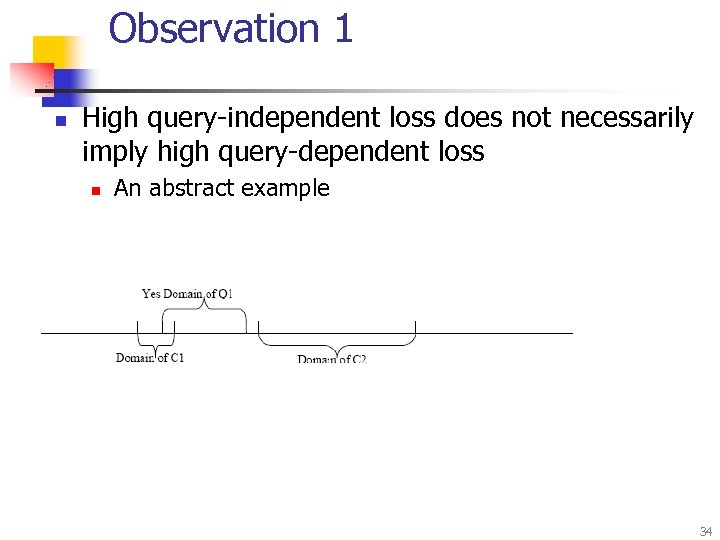

Observation 1

- High query-independent loss does not necessarily imply high query-dependent loss
  - An abstract example

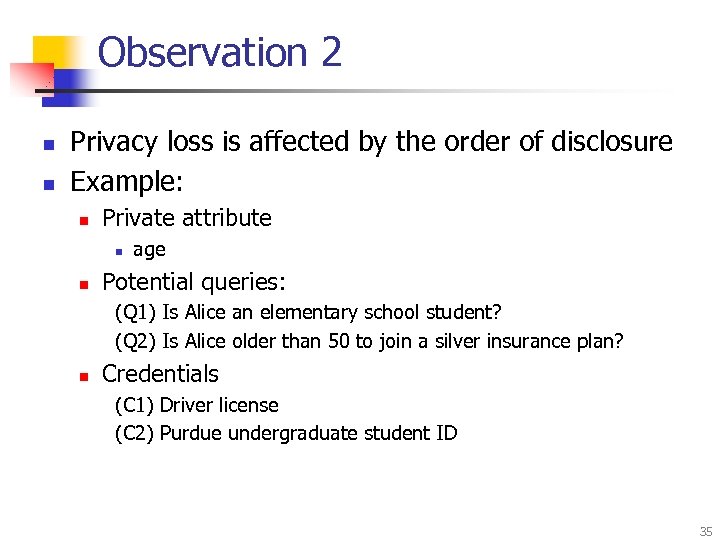

Observation 2

- Privacy loss is affected by the order of disclosure
- Example:
  - Private attribute
    - age
  - Potential queries:
    - (Q1) Is Alice an elementary school student?
    - (Q2) Is Alice older than 50, and so eligible to join a silver insurance plan?
  - Credentials
    - (C1) Driver license
    - (C2) Purdue undergraduate student ID

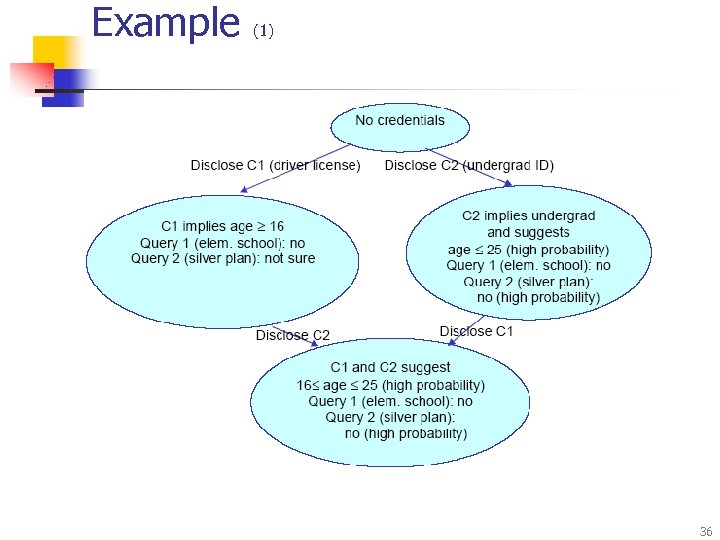

Example (1)

Example (2)

- C1 → C2
  - Disclosing C1
    - low query-independent loss (wide range for age)
    - 100% loss for Query 1 (elem. school student)
    - low loss for Query 2 (silver plan)
  - Disclosing C2
    - high query-independent loss (narrow range for age)
    - zero loss for Query 1 (because privacy was lost by disclosing license)
    - high loss for Query 2 ("not sure" → "no - high probability")
- C2 → C1
  - Disclosing C2
    - low query-independent loss (wide range for age)
    - 100% loss for Query 1 (elem. school student)
    - high loss for Query 2 (silver plan)
  - Disclosing C1
    - high query-independent loss (narrow range of age)
    - zero loss for Query 1 (because privacy was lost by disclosing ID)
    - zero loss for Query 2
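The order effect on the two queries can be reproduced with a small simulation. Everything numeric here is an assumption made for illustration: a uniform prior over ages 5 to 100, a driver license that only implies age 16 or more, and a student ID that makes ages 17 to 25 fifty times more likely than older ages. The sketch measures only query-dependent loss, as the drop in entropy of each yes/no query.

```python
import math

AGES = range(5, 101)  # assumed age domain with a uniform prior


def normalize(weights):
    total = sum(weights.values())
    return {age: w / total for age, w in weights.items()}


def update(dist, likelihood):
    """Bayesian update of the age distribution after one credential."""
    return normalize({age: p * likelihood(age) for age, p in dist.items()})


def query_entropy(dist, predicate):
    """Entropy in bits of a yes/no query about age."""
    p_yes = sum(p for age, p in dist.items() if predicate(age))
    return sum(-p * math.log2(p) for p in (p_yes, 1.0 - p_yes) if p > 0)


# Assumed likelihoods P(credential held | age).
CREDENTIALS = {
    "C1": lambda age: 1.0 if age >= 16 else 0.0,                    # license
    "C2": lambda age: 1.0 if 17 <= age <= 25
    else (0.02 if age > 25 else 0.0),                               # student ID
}
QUERIES = {
    "Q1": lambda age: 6 <= age <= 11,   # elementary school student?
    "Q2": lambda age: age > 50,         # eligible for the silver plan?
}


def disclosure_losses(order):
    """Per-step entropy drop of each query for a given disclosure order."""
    dist = normalize({age: 1.0 for age in AGES})
    steps = []
    for name in order:
        new_dist = update(dist, CREDENTIALS[name])
        loss = {q: query_entropy(dist, pred) - query_entropy(new_dist, pred)
                for q, pred in QUERIES.items()}
        steps.append((name, loss))
        dist = new_dist
    return steps


c1_then_c2 = disclosure_losses(["C1", "C2"])
c2_then_c1 = disclosure_losses(["C2", "C1"])
```

Under these assumptions the first credential disclosed removes all uncertainty about Q1 in either order, while the loss on Q2 is attributed almost entirely to C2, and C1 contributes nothing once C2 is known.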

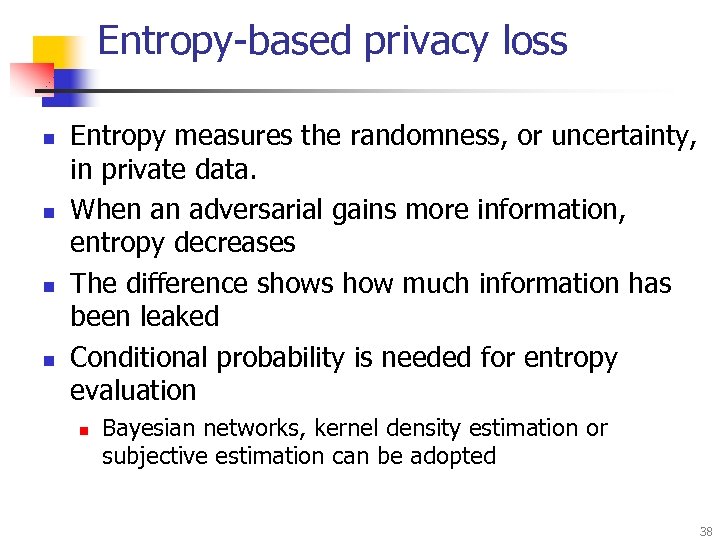

Entropy-based privacy loss

- Entropy measures the randomness, or uncertainty, in private data
- When an adversary gains more information, entropy decreases
- The difference shows how much information has been leaked
- Conditional probability is needed for entropy evaluation
  - Bayesian networks, kernel density estimation, or subjective estimation can be adopted
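As a minimal sketch of the entropy difference, the code below computes Shannon entropy for a discrete attribute before and after a disclosure. The salary brackets and probabilities are invented for the example; in practice the "after" distribution would come from one of the estimation methods listed above.

```python
import math


def entropy(pmf):
    """Shannon entropy in bits of a probability mass function."""
    return sum(-p * math.log2(p) for p in pmf.values() if p > 0)


def entropy_privacy_loss(before, after):
    """Leaked information: entropy before disclosure minus entropy after."""
    return entropy(before) - entropy(after)


# Hypothetical adversary beliefs about a salary bracket.
before = {"low": 0.25, "medium": 0.25, "high": 0.25, "very_high": 0.25}
after = {"low": 0.0, "medium": 0.5, "high": 0.5, "very_high": 0.0}

leaked_bits = entropy_privacy_loss(before, after)
```

Here the adversary goes from four equally likely brackets (2 bits) to two (1 bit), so one bit has been leaked.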

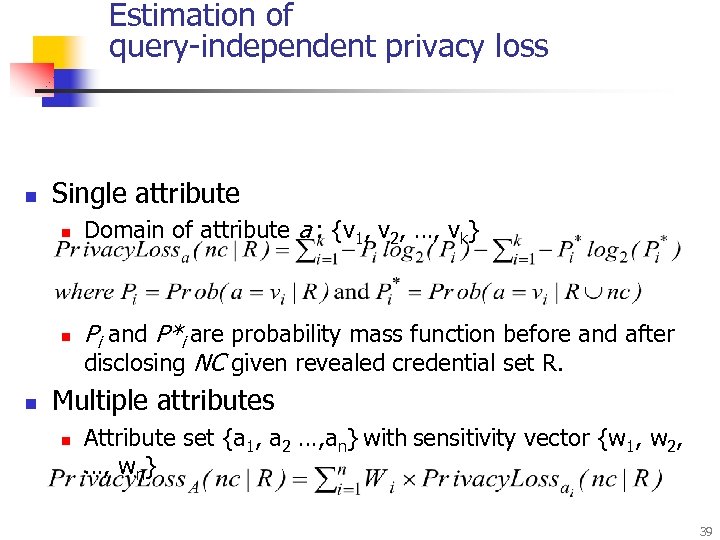

Estimation of query-independent privacy loss

- Single attribute
  - Domain of attribute a: $\{v_1, v_2, \dots, v_k\}$
  - $P_i$ and $P^*_i$ are the probability mass functions before and after disclosing NC, given the revealed credential set R
- Multiple attributes
  - Attribute set $\{a_1, a_2, \dots, a_n\}$ with sensitivity vector $\{w_1, w_2, \dots, w_n\}$
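The formulas for this slide do not survive in the text. A form consistent with the entropy-based definition and the symbols listed here would be the following; it is a reconstruction under that assumption, with $P_i = \Pr(a = v_i \mid R)$ and $P^*_i = \Pr(a = v_i \mid R \cup NC)$, and not necessarily the slide's exact notation.

$$
\mathrm{PrivacyLoss}_a(NC \mid R) = -\sum_{i=1}^{k} P_i \log_2 P_i + \sum_{i=1}^{k} P^*_i \log_2 P^*_i
$$

$$
\mathrm{PrivacyLoss}_A(NC \mid R) = \sum_{j=1}^{n} w_j \, \mathrm{PrivacyLoss}_{a_j}(NC \mid R)
$$

The first line is the entropy before disclosure minus the entropy after; the second applies the weighted-sum rule for multiple attributes.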

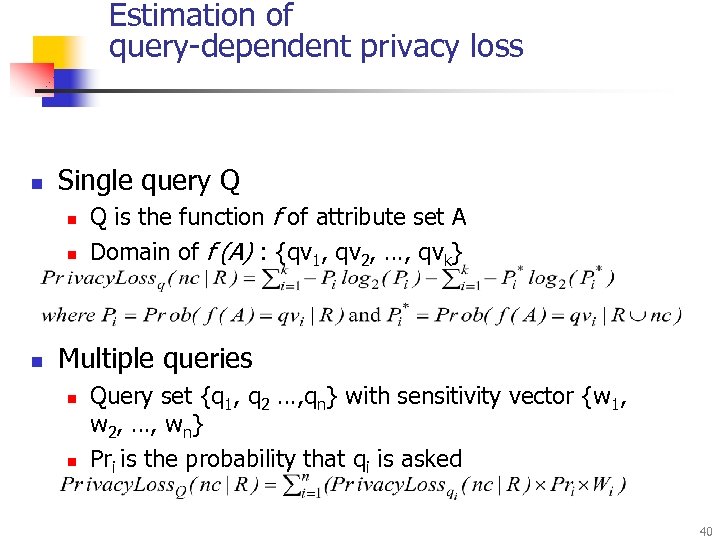

Estimation of query-dependent privacy loss

- Single query Q
  - Q is the function f of attribute set A
  - Domain of f(A): $\{qv_1, qv_2, \dots, qv_k\}$
- Multiple queries
  - Query set $\{q_1, q_2, \dots, q_n\}$ with sensitivity vector $\{w_1, w_2, \dots, w_n\}$
  - $Pr_i$ is the probability that $q_i$ is asked
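The formulas for this slide are also missing from the text. By analogy with the entropy-based definition, one plausible reading is sketched below, with $P_i = \Pr(f(A) = qv_i \mid R)$ and $P^*_i = \Pr(f(A) = qv_i \mid R \cup NC)$. The way the sensitivity $w_i$ and the asking probability $Pr_i$ are combined in the second line is an assumption.

$$
\mathrm{PrivacyLoss}_q(NC \mid R) = -\sum_{i=1}^{k} P_i \log_2 P_i + \sum_{i=1}^{k} P^*_i \log_2 P^*_i
$$

$$
\mathrm{PrivacyLoss}_Q(NC \mid R) = \sum_{i=1}^{n} Pr_i \, w_i \, \mathrm{PrivacyLoss}_{q_i}(NC \mid R)
$$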

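Estimate privacy damage

- Assume user provides one damage function $d_{usage}(\mathrm{PrivacyLoss})$ for each information usage
- Privacy damage combines the worst-case damage and the damage for the stated usage:

$$
\mathrm{PrivacyDamage}(\mathrm{PrivacyLoss}, \mathrm{Usage}, \mathrm{Receiver}) = D_{max}(\mathrm{PrivacyLoss}) \times (1 - \mathrm{Trust}_{receiver}) + d_{usage}(\mathrm{PrivacyLoss}) \times \mathrm{Trust}_{receiver}
$$

  - $\mathrm{Trust}_{receiver}$ is a number in [0, 1] representing the trustworthiness of the information receiver
  - $D_{max}(\mathrm{PrivacyLoss}) = \max(d_{usage}(\mathrm{PrivacyLoss}))$ over all usages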
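The damage formula translates directly into code. In this sketch the usage names and the linear damage functions are hypothetical stand-ins for the user-provided functions.

```python
def privacy_damage(privacy_loss, usage, trust_receiver, damage_functions):
    """Blend worst-case damage and stated-usage damage by receiver trust."""
    if not 0.0 <= trust_receiver <= 1.0:
        raise ValueError("trust_receiver must be in [0, 1]")
    d_usage = damage_functions[usage](privacy_loss)
    d_max = max(d(privacy_loss) for d in damage_functions.values())
    return d_max * (1.0 - trust_receiver) + d_usage * trust_receiver


# Hypothetical user-provided damage functions, one per information usage.
damage_functions = {
    "order_processing": lambda loss: 1.0 * loss,
    "marketing": lambda loss: 4.0 * loss,
}

damage = privacy_damage(2.0, "order_processing", 0.75, damage_functions)
```

A fully trusted receiver (trust 1) yields exactly the damage of the stated usage, while an untrusted one (trust 0) yields the worst case over all usages.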

Estimate trust gain

- Increasing trust level
  - Adopt research on trust establishment and management
- Benefit function TB(trust_level)
  - Provided by service provider or derived from user's utility function
- Trust gain
  - TB(trust_level_new) − TB(trust_level_prev)
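A small sketch of the trust-gain computation, together with the decision rule from the problem formulation (trade privacy for trust if trust gain exceeds minimal privacy damage). The benefit table and the damage value are hypothetical.

```python
# Hypothetical benefit function TB, given as a lookup table per trust level.
BENEFIT = {0: 0.0, 1: 2.0, 2: 5.0, 3: 9.0}


def trust_benefit(trust_level):
    """TB(trust_level): benefit the user obtains at a given trust level."""
    return BENEFIT[trust_level]


def trust_gain(prev_level, new_level):
    """TB(trust_level_new) - TB(trust_level_prev)."""
    return trust_benefit(new_level) - trust_benefit(prev_level)


def should_trade(gain, min_privacy_damage):
    """Decision rule: disclose only if the gain exceeds the damage."""
    return gain > min_privacy_damage


gain = trust_gain(prev_level=1, new_level=3)
decision = should_trade(gain, min_privacy_damage=3.5)  # assumed damage value
```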

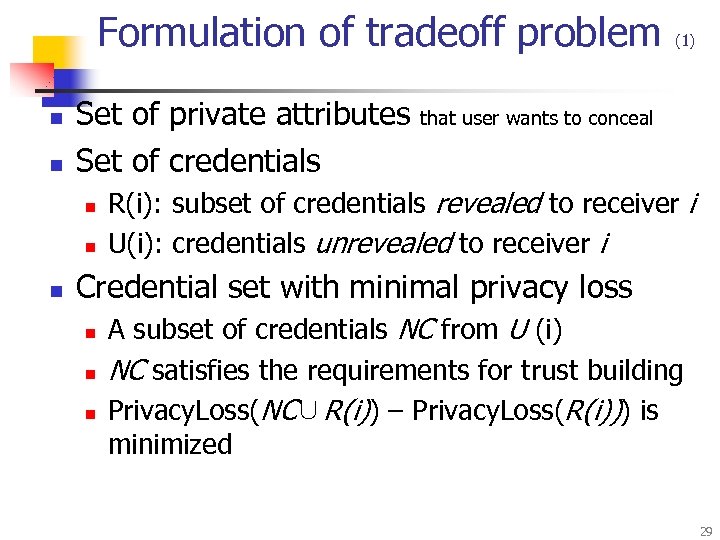

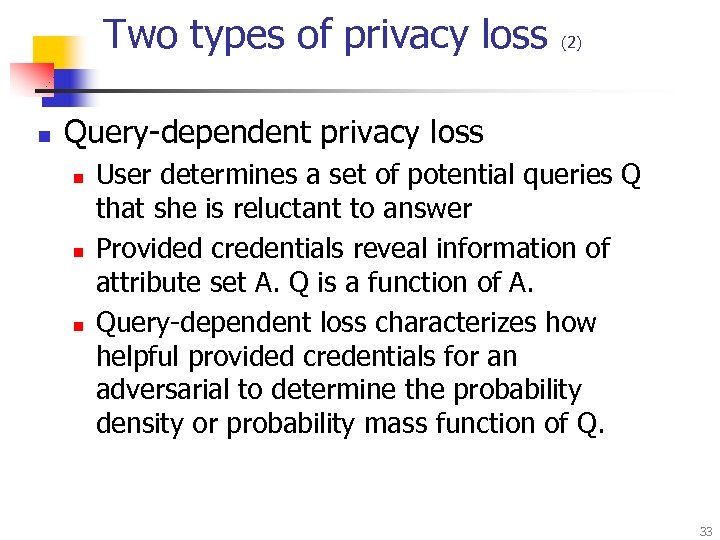

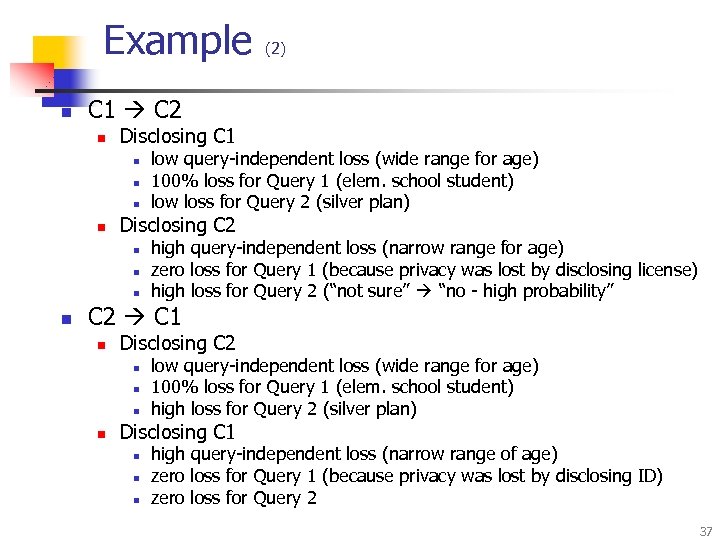

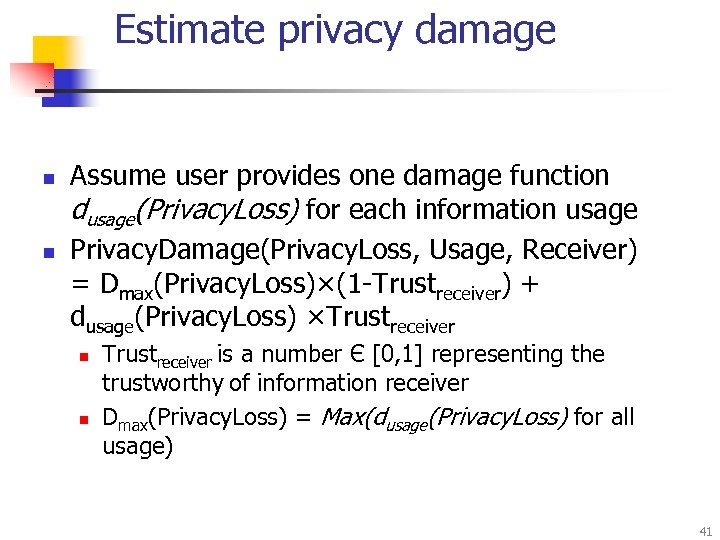

![PRETTY: Prototype for Experimental Studies](https://present5.com/presentation/7ad84ee68f01a7f9d709bbea08569740/image-43.jpg)

PRETTY: Prototype for Experimental Studies (architecture diagram with flow labels (1) to (4) and [2a] to [2d], including [2c1] and [2c2])

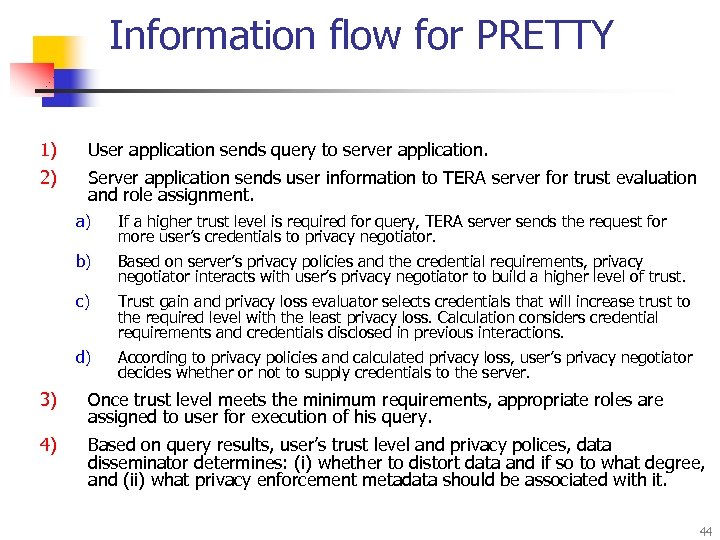

Information flow for PRETTY

1) User application sends query to server application.
2) Server application sends user information to TERA server for trust evaluation and role assignment.
   a) If a higher trust level is required for the query, TERA server sends a request for more of the user's credentials to the privacy negotiator.
   b) Based on the server's privacy policies and the credential requirements, the privacy negotiator interacts with the user's privacy negotiator to build a higher level of trust.
   c) Trust gain and privacy loss evaluator selects credentials that will increase trust to the required level with the least privacy loss. The calculation considers credential requirements and credentials disclosed in previous interactions.
   d) According to privacy policies and calculated privacy loss, the user's privacy negotiator decides whether or not to supply credentials to the server.
3) Once the trust level meets the minimum requirements, appropriate roles are assigned to the user for execution of his query.
4) Based on query results, the user's trust level, and privacy policies, the data disseminator determines: (i) whether to distort data and, if so, to what degree, and (ii) what privacy enforcement metadata should be associated with it.
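The flow can be sketched as a single control loop. This is not PRETTY's code: each component (TERA server, evaluator, user's negotiator, data disseminator) is represented by a hypothetical callable, and the stub data below is invented only to make the sketch runnable.

```python
def handle_query(query, disclosed, required_trust, evaluate_trust,
                 select_credentials, user_approves, assign_roles, disseminate):
    """Walk through steps 1) to 4) with pluggable, hypothetical components."""
    trust = evaluate_trust(disclosed)                           # step 2
    while trust < required_trust:                               # step 2a
        offer = select_credentials(disclosed, required_trust)   # steps 2b, 2c
        new = set(offer) - disclosed
        if not new or not user_approves(new):                   # step 2d
            return None                                         # negotiation fails
        disclosed = disclosed | new
        trust = evaluate_trust(disclosed)
    roles = assign_roles(trust)                                 # step 3
    return disseminate(query, roles, trust)                     # step 4


# Hypothetical stubs standing in for the real components.
POINTS = {"student_id": 2, "driver_license": 3}   # trust earned per credential
LOSS = {"student_id": 1.0, "driver_license": 2.5}  # privacy loss per credential


def evaluate_trust(creds):
    return sum(POINTS[c] for c in creds)


def select_credentials(disclosed, required_trust):
    remaining = set(POINTS) - disclosed
    return {min(remaining, key=LOSS.get)} if remaining else set()


def user_approves(creds):
    return sum(LOSS[c] for c in creds) <= 3.0


def assign_roles(trust):
    return ["member"] if trust >= 5 else ["guest"]


def disseminate(query, roles, trust):
    return {"query": query, "roles": roles, "distorted": trust < 5}


result = handle_query("list courses", set(), 5, evaluate_trust,
                      select_credentials, user_approves, assign_roles,
                      disseminate)
refused = handle_query("list courses", set(), 5, evaluate_trust,
                       select_credentials, lambda creds: False,
                       assign_roles, disseminate)
```

The stub evaluator offers one credential at a time for simplicity; the evaluator described in step 2c selects a set that reaches the required level with the least privacy loss.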

Conclusion

- This research addresses the tradeoff issues between privacy and trust.
- Tradeoff problems are formally defined.
- An entropy-based approach is proposed to estimate privacy loss.
- A prototype is under development for experimental study.
