- Number of slides: 80

3rd PRAGMA Workshop Tutorial
Programming on the Grid using GridRPC
National Institute of Advanced Industrial Science and Technology
Yoshio Tanaka

Tutorial Goal

- Understand “how to develop” Grid applications using GridRPC
- Ninf-G is used as a sample GridRPC system

Outline

- What is GridRPC?
  - Overview
  - What’s going on?
- Ninf-G
  - Overview and architecture
  - How to install Ninf-G
  - How to build remote libraries (server side ops)
  - How to call remote libraries (client side ops)
  - Examples
  - Ongoing work and future plan
  - Demonstration
    - Weather Forecasting Portal on the ApGrid Testbed

Overview of Grid RPC

Some Significant Grid Programming Models/Systems

- Data Parallel
  - MPI - MPICH-G2, Stampi, PACX-MPI, MagPIe
- Task Parallel
  - GridRPC - Ninf, NetSolve, Punch…
- Distributed Objects
  - CORBA, Java/RMI/xxx, …
- Data Intensive Processing
  - DataCutter, Gfarm, …
- Peer-To-Peer
  - Various Research and Commercial Systems
    - UD, Entropia, Parabon, JXTA, …
- Others…

Grid RPC System (1/5)

- A simple RPC-based programming model for the Grid
  - Utilize remote resources
  - Clients make calls with data to be computed
  - Task Parallelism (synchronous and asynchronous calls)
- Key property: EASE OF USE

[Diagram labels: Client, Server, Data, Result]

GridRPC System (2/5)

- RPC System “tailored” for the Grid
  - Medium to Coarse-grained calls
    - Call Duration < 1 sec to > 1 week
  - Task-Parallel Programming on the Grid
    - Asynchronous calls, 1000s of scalable parallel calls
  - Large Matrix Data & File Transfer
    - Call-by-reference, shared-memory matrix arguments
  - Grid-level Security (e.g., Ninf-G with GSI)
  - Simple Client-side Programming & Management
    - No client-side stub programming or IDL management
  - Server Job monitoring and control
  - Other features…

GridRPC System (3/5)

- GridRPC can be used effectively as a programming abstraction in situations including:
  - Using resources that are available only on a specific computer on the Grid
    - Binary programs, libraries, etc.
  - Executing compute/data intensive routines on large servers on the Grid
  - Parameter Sweep Studies using multiple servers on the Grid
  - General and scalable Task-Parallel Programs on the Grid

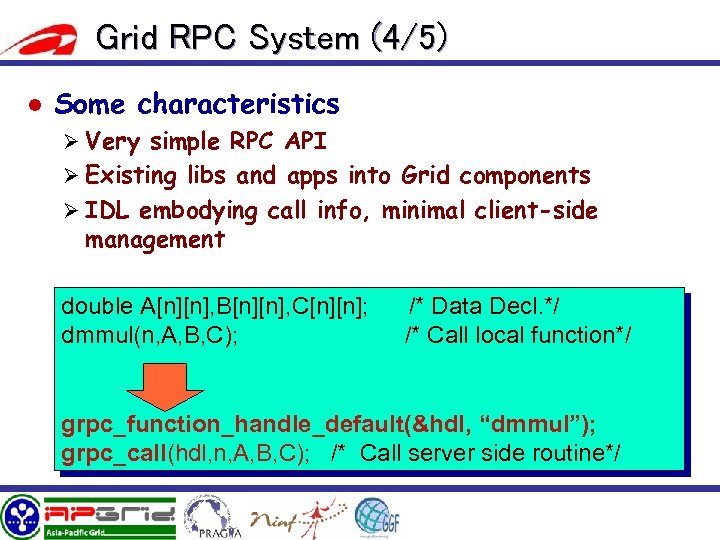

Grid RPC System (4/5)

- Some characteristics
  - Very simple RPC API
  - Turns existing libs and apps into Grid components
  - IDL embodying call info, minimal client-side management

    double A[n][n], B[n][n], C[n][n];   /* Data Decl. */
    dmmul(n, A, B, C);                  /* Call local function */

    grpc_function_handle_default(&hdl, "dmmul");
    grpc_call(&hdl, n, A, B, C);        /* Call server side routine */

Grid RPC System (5/5)

- Programming Model middleware between “the Grid toolkit” and Application
  - Basis for more complex forms of global computing
- Success stories of large computational problems
  - Parameter sweeping
    - Monte Carlo Simulation - MCell (NetSolve)
  - Medium to Coarse-Grained multi simulations
    - REXMC (Ninf), Weather Forecasting (Ninf-G)
  - Fine to Medium-Grained Iterative Algorithms
    - BMI, SDPA Apps (Ninf/Ninf-G)
  - Network-enabled “generic” Application libraries
    - ScaLAPACK for NetSolve/Ninf-G

[Diagram layers: Grid RPC System, Lower-level Grid Systems]

Sample Architecture and Protocol of GridRPC System - Ninf

- Server side setup
  - Build Remote Library Executable
  - Register it to the Ninf Server
- Client side: call remote library
  - Retrieve interface information
  - Invoke Remote Library Executable
  - It calls back to the client

[Diagram. Client side: Client. Server side: IDL file, IDL Compiler, Numerical Library, Ninf Server, Remote Library Executable. Arrow labels: Interface Request, Interface Reply, Invoke Executable, fork, Connect back, Generate, Register.]

GridRPC - What’s going on?

- Activities at the GGF
  - Standard GridRPC API
    - Proposed at the GGF APM RG
    - In collaboration between the Ninf team and the NetSolve team
  - GridRPC WG will be launched at GGF7
    - Short term (18 months)
    - Define Standard GridRPC API
    - Develop reference implementation
- System developments
  - GridSolve, Ninf-G/G2
  - Will propose to include these systems in NMI Software Package (October release?)

Ninf-G Overview and Architecture

Ninf Project

- Started in 1994
- Collaborators from various organizations
  - AIST
    - Satoshi Sekiguchi, Umpei Nagashima, Hidemoto Nakada, Hiromitsu Takagi, Osamu Tatebe, Yoshio Tanaka, Kazuyuki Shudo
  - University of Tsukuba
    - Mitsuhisa Sato, Taisuke Boku
  - Tokyo Institute of Technology
    - Satoshi Matsuoka, Kento Aida, Hirotaka Ogawa
  - Tokyo Electronic University
    - Katsuki Fujisawa
  - Ochanomizu University
    - Atsuko Takefusa
  - Kyoto University
    - Masaaki Shimasaki

Brief History of Ninf/Ninf-G

[Timeline figure. Years marked on the axis: 1994, 1997, 2000, 2003.]

- Ninf project launched
- Ninf-G development
- Release Ninf version 1
- Standard GridRPC API proposed
- Start collaboration with NetSolve team
- Release Ninf-G version 0.9
- Implement formal spec. of GridRPC
- 1st GridRPC WG at GGF7
- Release Ninf-G version 1.0

What is Ninf-G?

- A software package which supports programming and execution of Grid applications using GridRPC.
- Ninf-G includes
  - C/C++, Java APIs, libraries for software development
  - IDL compiler for stub generation
  - Shell scripts to
    - compile client program
    - build and publish remote libraries
  - sample programs
  - manual documents

Ninf-G: Features At-a-Glance

- Ease-of-use, client-server, numerical-oriented RPC system
- No stub information at the client side
- User’s view: ordinary software library
  - Asymmetric client vs. server
- Built on top of the Globus Toolkit
  - Uses GSI, GRAM, MDS, GASS, and Globus-IO
- Supports various platforms
  - Ninf-G is available on Globus-enabled platforms
- Client APIs: C/C++, Java

Architecture of Ninf

[Diagram. Client side: Client. Server side: IDL file, IDL Compiler, Numerical Library, Ninf Server, Remote Library Executable. Arrow labels: Interface Request, Interface Reply, Invoke Executable, fork, Connect back, Generate, Register.]

Architecture of Ninf-G

[Diagram. Client side: Client. Server side: IDL file, IDL Compiler, Numerical Library, Remote Library Executable, GRAM, GRIS, Globus-IO, Interface Information LDIF File. Arrow labels: Interface Request, Interface Reply, Invoke Executable, fork, Connect back, Generate, retrieve.]

Ninf-G How to install Ninf-G

Getting Started

- Globus Toolkit (ver. 1 or 2) must be installed prior to the Ninf-G installation
  - from source bundles
  - all bundles (resource, data, info - server/client/SDK) (recommended)
  - same flavor
- Create a user ‘ninf’ (recommended)
- Download Ninf-G
  - http://ninf.apgrid.org/packages/
  - Ninf-G is provided as a single gzipped tarball.
- Before starting installation, make sure that the Globus-related environment variables are correctly defined (e.g. GLOBUS_LOCATION, GLOBUS_INSTALL_PATH)

Installation Steps (1/4)

- Uncompress and untar the tarball.

    % gunzip -c ng-latest.tgz | tar xvf -

  This creates a directory “ng” which makes up the source tree along with its subdirectories.

Installation Steps (2/4)

- Change directory to the “ng-1.0” directory and run the configure script.

    % cd ng-1.0
    % ./configure

- Example:

    % ./configure --prefix=/usr/local/ng

  - Notes for GT 2.2 or later
    - The --without-gpt option is required if GPT_LOCATION is not defined.
  - Run configure with the --help option for detailed options of the configure script.

Installation Steps (3/4)

- Compile all components and install Ninf-G

    % make install

- Register the host information by running the following commands (required at the server side)

    % cd ng-1.0/bin
    % ./server_install

  This command creates ${GLOBUS_LOCATION}/var/gridrpc/ and copies a required LDIF file.
  - Note: these commands should be run as root (for GT2) or as user globus (for GT1)

Installation Steps (4/4)

- Add the information provider to GRIS (required at the server side)
  - For GT1:
    - Add the following line to ${GLOBUS_DEPLOY_PATH}/etc/grid-info-resource.conf

        0 cat ${GLOBUS_DEPLOY_PATH}/var/gridrpc/*.ldif

  - For GT2:
    - Add the following line to ${GLOBUS_LOCATION}/etc/grid-info-slapd.conf

        include ${GLOBUS_LOCATION}/etc/grpc.schema

      The line should be put just below the following line:

        include ${GLOBUS_LOCATION}/etc/grid-info-resource.schema

    - Restart GRIS.

        % ${GLOBUS_LOCATION}/sbin/SXXGris stop
        % ${GLOBUS_LOCATION}/sbin/SXXGris start

Ninf-G How to Build Remote Libraries - server side operations -

Prerequisite

- GRAM (gatekeeper) and GRIS are correctly configured and running
- The following environment variables are appropriately defined:
  - GLOBUS_LOCATION / GLOBUS_INSTALL_PATH
  - NS_DIR
- Add ${NS_DIR}/bin to $PATH (recommended)
- Notes for dynamic linkage of the Globus shared libraries: Globus dynamic libraries (shared libraries) must be linked with the Ninf-G stub executables. For example, on Linux this is enabled by adding ${GLOBUS_LOCATION}/lib to /etc/ld.so.conf and running the ldconfig command.

Ninf-G remote libraries

- Ninf-G remote libraries are implemented as executable programs (Ninf-G executables) which
  - contain the stub routine and the main routine
  - will be spawned off by GRAM
- The stub routine handles
  - communication with clients and the Ninf-G system itself
  - argument marshalling
- The underlying executable (main routine) can be written in C, C++, Fortran, etc.

How to build Ninf-G remote libraries (1/3)

- Write the interface information using the Ninf-G Interface Description Language (Ninf-G IDL). Example:

    Module mmul;
    Define dmmul (IN int n,
                  IN double A[n][n],
                  IN double B[n][n],
                  OUT double C[n][n])
    Required "libmmul.o"
    Calls "C" dmmul(n, A, B, C);

- Compile the Ninf-G IDL with the Ninf-G IDL compiler

    % ns_gen
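
The IDL example wraps a C routine named dmmul, whose body the slides do not show. As a minimal sketch, assuming the matrices are stored row-major in flat arrays and the argument order follows the IDL, the underlying routine might look like this:

```c
#include <stddef.h>

/* Hypothetical body for the dmmul routine named in the IDL: C = A x B.
 * Assumption: A, B and C are n x n matrices stored row-major in flat arrays. */
void dmmul(int n, const double *A, const double *B, double *C)
{
    for (int i = 0; i < n; i++) {
        for (int j = 0; j < n; j++) {
            double sum = 0.0;
            for (int k = 0; k < n; k++) {
                sum += A[(size_t)i * n + k] * B[(size_t)k * n + j];
            }
            C[(size_t)i * n + j] = sum;
        }
    }
}
```

Compiled to an object file, a routine of this shape is what the Required clause would name, and the generated stub would call it with the arguments received from the client.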

How to build Ninf-G remote libraries (2/3)

- Compile stub source files and generate Ninf-G executables and LDIF files (used to register Ninf-G remote libs information to GRIS).

    % make -f

How to build Ninf-G remote libraries (3/3) Ninf-G IDL file

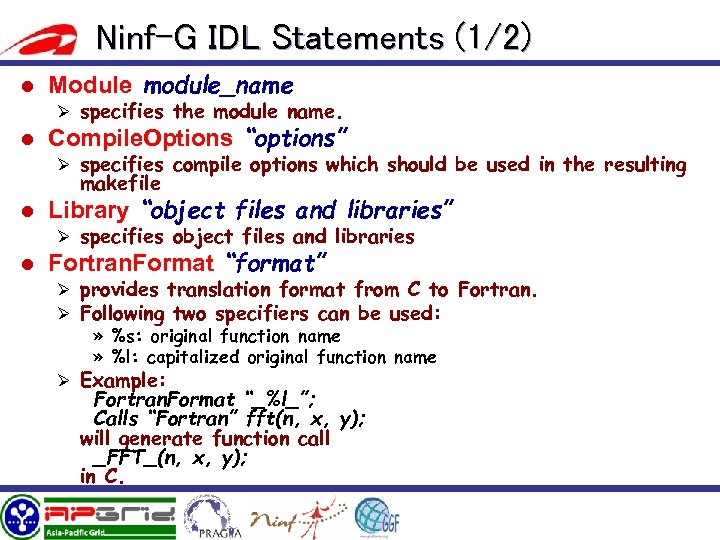

Ninf-G IDL Statements (1/2)

- Module module_name
  - specifies the module name.
- CompileOptions "options"
  - specifies compile options which should be used in the resulting makefile
- Library "object files and libraries"
  - specifies object files and libraries
- FortranFormat "format"
  - provides translation format from C to Fortran.
  - The following two specifiers can be used:
    - %s: original function name
    - %l: capitalized original function name
  - Example:

      FortranFormat "_%l_";
      Calls "Fortran" fft(n, x, y);

    will generate the function call _FFT_(n, x, y); in C.
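
The FortranFormat substitution can be illustrated with a small helper. This is only a sketch of the name translation described above, not code from the Ninf-G IDL compiler; the function name expand_fortran_format and its buffer-based interface are assumptions made for the example.

```c
#include <ctype.h>
#include <stddef.h>

/* Expand a FortranFormat-style pattern into a C symbol name.
 * %s -> original function name, %l -> upper-cased function name.
 * Illustrative helper only; not part of Ninf-G.
 * Returns 0 on success, -1 if the output buffer is too small. */
int expand_fortran_format(const char *format, const char *name,
                          char *out, size_t out_size)
{
    size_t pos = 0;
    for (const char *p = format; *p != '\0'; p++) {
        if (p[0] == '%' && (p[1] == 's' || p[1] == 'l')) {
            int upper = (p[1] == 'l');
            for (const char *q = name; *q != '\0'; q++) {
                if (pos + 1 >= out_size) return -1;
                out[pos++] = upper ? (char)toupper((unsigned char)*q) : *q;
            }
            p++; /* skip the specifier letter */
        } else {
            if (pos + 1 >= out_size) return -1;
            out[pos++] = *p;
        }
    }
    if (out_size == 0) return -1;
    out[pos] = '\0';
    return 0;
}
```

With the format "_%l_" and the name "fft", the helper writes "_FFT_" into the buffer, matching the example on the slide.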

Ninf-G IDL Statements (2/2)

- Globals { … C descriptions }
  - declares global variables shared by all functions
- Define routine_name (parameters…)
      ["description"]
      [Required "object files or libraries"]
      [Backend "MPI"|"BLACS"]
      [Shrink "yes"|"no"]
      {{C descriptions} | Calls "C"|"Fortran" calling sequence}
  - declares function interface, required libraries and the main routine.
  - Syntax of parameter description:

      [mode-spec] [type-spec] formal_parameter [[dimension [: range]]+]+

Syntax of parameter description (detailed)

- mode-spec: one of the following
  - IN: the parameter is transferred from client to server
  - OUT: the parameter is transferred from server to client
  - INOUT: at the beginning of the RPC, the parameter is transferred from client to server; at the end of the RPC, it is transferred from server to client
  - WORK: no transfer occurs. The specified memory is allocated at the server side.
- type-spec should be one of char, short, int, float, longlong, double, complex, or filename.
- For arrays, you can specify the size of the array. The size can be specified using scalar IN parameters.
  - Example: IN int n, IN double a[n]
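
To make the four modes concrete, the sketch below adds up how many bytes travel in each direction for a given parameter list. The param_desc type and the transfer_volume function are hypothetical names introduced for illustration; they are not Ninf-G types, and real transfers also carry protocol overhead that is ignored here.

```c
#include <stddef.h>

/* Transfer modes from the Ninf-G IDL parameter syntax. */
typedef enum { MODE_IN, MODE_OUT, MODE_INOUT, MODE_WORK } param_mode;

/* Hypothetical descriptor for one parameter; not a Ninf-G type. */
typedef struct {
    param_mode mode;
    size_t bytes; /* size of the argument data */
} param_desc;

/* Sum the bytes sent client -> server (at the start of the call) and
 * server -> client (at the end of the call) for a parameter list. */
void transfer_volume(const param_desc *params, size_t count,
                     size_t *to_server, size_t *to_client)
{
    *to_server = 0;
    *to_client = 0;
    for (size_t i = 0; i < count; i++) {
        switch (params[i].mode) {
        case MODE_IN:
            *to_server += params[i].bytes;
            break;
        case MODE_OUT:
            *to_client += params[i].bytes;
            break;
        case MODE_INOUT:
            *to_server += params[i].bytes;
            *to_client += params[i].bytes;
            break;
        case MODE_WORK:
            break; /* allocated on the server, never transferred */
        }
    }
}
```

For the dmmul interface with n = 100, the three IN parameters (n, A, B) go to the server and only the OUT matrix C comes back, which is why choosing the narrowest mode for each parameter matters for large arrays.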

Sample Ninf-G IDL (1/2)

- Matrix Multiply

    Module matrix;
    Define dmmul (IN int n,
                  IN double A[n][n],
                  IN double B[n][n],
                  OUT double C[n][n])
    "Matrix multiply: C = A x B"
    Required "libmmul.o"
    Calls "C" dmmul(n, A, B, C);

Sample Ninf-G IDL (2/2)

- ScaLAPACK (pdgesv)

    Module SCALAPACK;
    CompileOptions "NS_COMPILER = cc";
    CompileOptions "NS_LINKER = f77";
    CompileOptions "CFLAGS = -DAdd_ -O2 -64 -mips4 -r10000";
    CompileOptions "FFLAGS = -O2 -64 -mips4 -r10000";
    Library "scalapack.a pblas.a redist.a tools.a libmpiblacs.a -lblas -lmpi -lm";
    Define pdgesv (IN int n, IN int nrhs,
                   INOUT double global_a[n][lda:n], IN int lda,
                   INOUT double global_b[nrhs][ldb:n], IN int ldb,
                   OUT int info[1])
    Backend "BLACS"
    Shrink "yes"
    Required "procmap.o pdgesv_ninf.o ninf_make_grid.of Cnumroc.o descinit.o"
    Calls "C" ninf_pdgesv(n, nrhs, global_a, lda, global_b, ldb, info);

Ninf-G How to call Remote Libraries - client side APIs and operations -

Prerequisite

- The user should be able to submit jobs to the Globus Gatekeeper on servers
  - Has a user certificate
  - User’s DN appears in the grid-mapfile
  - Run the grid-proxy-init command before executing Ninf-G apps
- The following environment variables are appropriately defined:
  - GLOBUS_LOCATION / GLOBUS_INSTALL_PATH
  - NS_DIR
- Add ${NS_DIR}/bin to $PATH (recommended)

(Client) User’s Scenario

- Write client programs using the Ninf-G API
- Compile and link with the supplied Ninf-G client compile driver (ns_client_gen)
- Write a configuration file in which runtime environments can be described
- Run the grid-proxy-init command
- Run the program

Ninf-G APIs

- Two kinds of APIs
  - Ninf API
    - compatible with Ninf Ver. 1
    - simple but less functional
  - GridRPC API
    - Proposed at GGF for standardization
    - recommended!

GridRPC API (1) - Terminology

- Function Handle
  - abstraction of a server-side function
- Session ID
  - Non-negative value that identifies an asynchronous RPC

GridRPC API (2) - Status Code

- GRPC_OK (value 0): SUCCESS
- GRPC_ERROR (value -1): FAILURE

GridRPC API (3) - Error Code

- GRPCERR_NOERROR: Not an error
- GRPCERR_NOT_INITIALIZED: Not initialized
- GRPCERR_SERVER_NOT_FOUND: Server is not found
- GRPCERR_CONNECTION_REFUSED: Failed to connect to the server
- GRPCERR_NO_SUCH_FUNCTION: Function not found
- GRPCERR_AUTHENTICATION_FAILED: Authentication failed
- GRPCERR_RPC_REFUSED: RPC is refused
- GRPCERR_COMMUNICATION_FAILED: Communication failed
- GRPCERR_PROTOCOL_ERROR: Invalid protocol
- GRPCERR_CLIENT_INTERNAL_ERROR: Violation inside the client
- GRPCERR_SERVER_INTERNAL_ERROR: Violation inside the server
- GRPCERR_EXECUTABLE_INOPERATIVE: Executable has died
- GRPCERR_SIGNAL_CAUGHT: A signal was caught
- GRPCERR_INVALID_ARGUMENT: Invalid argument
- GRPCERR_STACK_OVERFLOW: Stack overflow
- GRPCERR_FILE_READ: Error in reading a file
- GRPCERR_FILE_WRITE: Error in writing a file
- GRPCERR_UNKNOWN_ERROR: Unknown error

GridRPC API (4) - Initialize/Finalize

- int grpc_initialize(char *config_file_name);
  - Reads the configuration file and initializes the client.
  - Any call to another GRPC API before grpc_initialize fails.
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)
- int grpc_finalize();
  - Frees resources (memory, etc.)
  - Any call to another GRPC API after grpc_finalize fails.
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)

GridRPC API (5) - Function Handles

- int grpc_function_handle_default(grpc_function_handle_t *handle, char *func_name);
  - Initializes a function handle for the function using the default host and port.
  - func_name should be “module_name/routine_name”.
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)
- int grpc_function_handle_init(grpc_function_handle_t *handle, char *host_name, int port, char *func_name);
  - Initializes a function handle for the function along with the specified host and port.
  - func_name should be “module_name/routine_name”.
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)
- int grpc_function_handle_destruct(grpc_function_handle_t *handle);
  - Destructs the function handle
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)
- grpc_function_handle_t *grpc_get_handle(int sessionId);
  - Returns the function handle used in the session specified by sessionId.

GridRPC API (6) - RPC

- int grpc_call(grpc_function_handle_t *, ...);
  - Invokes a blocking RPC using the specified function handle.
  - The remaining arguments must follow the same sequence as the parameters of the Ninf-G remote executable.
  - Returns GRPC_OK (success) or GRPC_ERROR (failure)
- int grpc_call_async(grpc_function_handle_t *, ...);
  - Invokes a non-blocking RPC using the specified function handle.
  - The remaining arguments must follow the same sequence as the parameters of the Ninf-G remote executable.
  - Returns the session ID (success) or GRPC_ERROR (failure)
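
The initialize, function-handle and blocking-call APIs combine into a short client sequence. The sketch below is not linked against Ninf-G: the grpc_* definitions in it are local stand-ins that copy the signatures from the slides and run the matrix multiply in-process, so that the example compiles on its own. The configuration file name "client.conf" and the wrapper remote_dmmul are assumptions; in a real client the stand-ins would be replaced by the Ninf-G client header and library.

```c
#include <stdarg.h>
#include <stddef.h>
#include <string.h>

/* Local stand-ins (assumptions) so the sketch builds without Ninf-G.
 * They copy the signatures from the slides but run the routine in-process. */
#define GRPC_OK     0
#define GRPC_ERROR (-1)
typedef struct { const char *func_name; } grpc_function_handle_t;

static int client_ready = 0;

int grpc_initialize(char *config_file_name)
{
    (void)config_file_name;   /* a real client would read this file */
    client_ready = 1;
    return GRPC_OK;
}

int grpc_finalize(void)
{
    if (!client_ready) return GRPC_ERROR;
    client_ready = 0;
    return GRPC_OK;
}

int grpc_function_handle_default(grpc_function_handle_t *handle, char *func_name)
{
    if (!client_ready) return GRPC_ERROR;   /* calls before initialize fail */
    handle->func_name = func_name;
    return GRPC_OK;
}

int grpc_function_handle_destruct(grpc_function_handle_t *handle)
{
    handle->func_name = NULL;
    return GRPC_OK;
}

int grpc_call(grpc_function_handle_t *handle, ...)
{
    if (!client_ready || handle->func_name == NULL) return GRPC_ERROR;
    if (strcmp(handle->func_name, "mmul/dmmul") != 0) return GRPC_ERROR;

    va_list ap;
    va_start(ap, handle);
    int n = va_arg(ap, int);
    double *A = va_arg(ap, double *);
    double *B = va_arg(ap, double *);
    double *C = va_arg(ap, double *);
    va_end(ap);

    for (int i = 0; i < n; i++)             /* stand-in for the remote dmmul */
        for (int j = 0; j < n; j++) {
            double sum = 0.0;
            for (int k = 0; k < n; k++)
                sum += A[i * n + k] * B[k * n + j];
            C[i * n + j] = sum;
        }
    return GRPC_OK;
}

/* Client logic: the call sequence that would stay the same with the real library. */
int remote_dmmul(int n, double *A, double *B, double *C)
{
    grpc_function_handle_t handle;
    int status = GRPC_ERROR;

    if (grpc_initialize("client.conf") != GRPC_OK)
        return GRPC_ERROR;
    if (grpc_function_handle_default(&handle, "mmul/dmmul") == GRPC_OK) {
        status = grpc_call(&handle, n, A, B, C);   /* blocking RPC */
        grpc_function_handle_destruct(&handle);
    }
    if (grpc_finalize() != GRPC_OK)
        status = GRPC_ERROR;
    return status;
}
```

Only remote_dmmul reflects what a client author writes; every return value is checked because each API reports failure through GRPC_ERROR rather than aborting.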

GridRPC API (7) - RPC using stack

- grpc_arg_stack *grpc_arg_stack_new(int size);
    - Allocates an argument stack.
    - Returns a pointer to the stack. NULL is returned on allocation error.
- int grpc_arg_stack_push_arg(grpc_arg_stack *argStack, void *arg);
    - Pushes an argument onto the stack.
    - Returns GRPC_OK (success) or GRPC_ERROR (failure).
- void *grpc_arg_stack_pop_arg(grpc_arg_stack *argStack);
    - Pops an argument from the stack.
    - Returns a pointer to the argument. NULL is returned on error.
- int grpc_arg_stack_destruct(grpc_arg_stack *argStack);
    - Frees the stack.
    - Returns GRPC_OK (success) or GRPC_ERROR (failure).
- int grpc_call_arg_stack(grpc_function_handle_t *handle, grpc_arg_stack *argStack);
    - Performs a blocking RPC; corresponds to grpc_call().
- int grpc_call_arg_stack_async(grpc_function_handle_t *handle, grpc_arg_stack *argStack);
    - Performs a non-blocking RPC; corresponds to grpc_call_async().

GridRPC API (8) - Wait Functions

- int grpc_wait(int sessionID);
    - Waits for the outstanding RPC specified by sessionID.
    - Returns GRPC_OK (success) or GRPC_ERROR (failure).
- int grpc_wait_and(int *idArray, int length);
    - Waits for all outstanding RPCs specified by an array of sessionIDs.
    - Returns GRPC_OK if all of the RPCs succeeded; otherwise GRPC_ERROR is returned.
- int grpc_wait_or(int *idArray, int length, int *idPtr);
    - Waits for any one of the RPCs specified by an array of sessionIDs.
    - The session ID of the finished RPC is stored in the third argument.
    - Returns GRPC_OK if the finished RPC succeeded; otherwise GRPC_ERROR is returned.
- int grpc_wait_all();
    - Waits until all outstanding RPCs are completed.
    - Returns GRPC_OK if all the sessions succeeded; otherwise GRPC_ERROR is returned.
- int grpc_wait_any(int *idPtr);
    - Waits for any one of the outstanding RPCs.
    - The session ID of the finished RPC is stored in the argument.
    - Returns GRPC_OK if the finished RPC succeeded; otherwise GRPC_ERROR is returned.

GridRPC API (9) - Session Control

- int grpc_probe(int sessionID);
    - Checks whether the job specified by sessionID has completed.
    - Returns 1 if the job is done and 0 if it is still running. Returns GRPC_ERROR if the probe fails.
- int grpc_cancel(int sessionID);
    - Cancels the outstanding RPC specified by sessionID.
    - Returns GRPC_OK (success) or GRPC_ERROR (failure).
    - Returns GRPC_OK if the RPC has already finished.

GridRPC API (10) - Error Functions

- int grpc_get_last_error();
    - Returns the error code of the last GridRPC API call.
- int grpc_get_error(int sessionID);
    - Returns the error code of the session specified by sessionID.
    - Returns -1 if the specified session is not found.
- void grpc_perror(char *str);
    - Concatenates the argument with the error string of the last GridRPC API call and prints the result (the return type is void, so nothing is returned to the caller).
- char *grpc_error_string(int error_code);
    - Returns the error string for the given error code.

Data Parallel Application

- Call parallel libraries (e.g. MPI applications).
- Backend "MPI" or Backend "BLACS" should be specified in the IDL.

[Figure labels: Parallel Computer, Parallel Numerical Libraries, Parallel Applications]

Task Parallel Application

- Parallel RPCs using asynchronous calls.

[Figure label: Server]

Task Parallel Application

- Asynchronous call
    grpc_call_async(...);
- Waiting for the reply
    grpc_wait(sessionID);
    grpc_wait_all();
    grpc_wait_any(idPtr);
    grpc_wait_and(idArray, len);
    grpc_wait_or(idArray, len, idPtr);
    grpc_cancel(sessionID);

[Figure: a Client issues grpc_call_async to ServerA and ServerB, then calls grpc_wait_all]

Various task-parallel programs spanning clusters are easy to write.
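The asynchronous pattern listed above can be sketched as follows. This is an illustration under stated assumptions, not code from the tutorial: so that it compiles without Ninf-G, the grpc_* functions, the handle type, and the GRPC_OK / GRPC_ERROR values are replaced by trivial local stand-ins, and run_trials, NUM_SERVERS, and the "pi/pi_trial" entry name used here are hypothetical. A real client would use the declarations provided by the Ninf-G library instead of the stand-in section.

```c
#include <assert.h>
#include <stdarg.h>
#include <stddef.h>

/* ---- Local stand-ins, NOT the Ninf-G library (values are assumptions) ---- */
#define GRPC_OK      0
#define GRPC_ERROR (-1)
typedef struct { const char *host; const char *func; } grpc_function_handle_t;
static int next_session_id = 1;

static int grpc_function_handle_init(grpc_function_handle_t *h, char *host,
                                     int port, char *func)
{
    (void)port;
    if (h == NULL || host == NULL || func == NULL) return GRPC_ERROR;
    h->host = host;
    h->func = func;
    return GRPC_OK;
}

static int grpc_function_handle_destruct(grpc_function_handle_t *h)
{
    return h != NULL ? GRPC_OK : GRPC_ERROR;
}

/* Stand-in: consumes (int seed, long times, long *count) and stores 0 in
   *count; the real call would fill *count once the remote job completes. */
static int grpc_call_async(grpc_function_handle_t *h, ...)
{
    va_list ap;
    long *count;
    va_start(ap, h);
    (void)va_arg(ap, int);
    (void)va_arg(ap, long);
    count = va_arg(ap, long *);
    va_end(ap);
    *count = 0;
    return next_session_id++;
}

static int grpc_wait_all(void) { return GRPC_OK; }
/* ---- End of stand-ins ---- */

#define NUM_SERVERS 2

/* Start one RPC per server, then block until every outstanding RPC is done. */
int run_trials(char *hosts[NUM_SERVERS], int port, long times,
               long counts[NUM_SERVERS])
{
    grpc_function_handle_t handles[NUM_SERVERS];
    int i, ninit = 0, status = GRPC_OK;

    assert(times > 0);
    for (i = 0; i < NUM_SERVERS; i++) {
        if (grpc_function_handle_init(&handles[i], hosts[i], port,
                                      "pi/pi_trial") == GRPC_ERROR) {
            status = GRPC_ERROR;
            break;
        }
        ninit++;
    }
    /* Non-blocking calls: each returns immediately with a session ID. */
    for (i = 0; status == GRPC_OK && i < ninit; i++) {
        if (grpc_call_async(&handles[i], i, times, &counts[i]) == GRPC_ERROR)
            status = GRPC_ERROR;
    }
    /* Wait even after a failure so that sessions already started finish. */
    if (grpc_wait_all() == GRPC_ERROR)
        status = GRPC_ERROR;
    for (i = 0; i < ninit; i++)
        grpc_function_handle_destruct(&handles[i]);
    return status;
}
```

The point of the structure is that all calls are issued before any waiting happens, so the servers work concurrently; the handles are destructed only after grpc_wait_all() returns.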

How is the server specified?

- The server is determined when the function handle is initialized.
    - grpc_function_handle_init(): the hostname is given as the second argument.
    - grpc_function_handle_default(): the hostname is taken from the client configuration file, which must be passed as the first argument of the client program.
- Note: Ninf-G Ver. 1 focuses on the GridRPC core mechanism and does not support any brokering/scheduling functions.

Automatic file staging

- Ninf-G provides a "Filename" type (written filename in the IDL).
- A local input file is automatically shipped to the server.
- A server-side output file is forwarded back to the client.

Client program:

    grpc_function_handle_default(&handle, "plot/plot");
    grpc_call(&handle, "input.gp", "output.ps");

Server (IDL):

    Module plot;
    Define plot(IN filename src, OUT filename out)
    {
        char buf[1000];
        sprintf(buf, "gnuplot %s > %s", src, out);
        system(buf);
    }

[Figure: the input file travels from the client to the server; the output file travels from the server to the client]
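The server-side body above builds a shell command with sprintf into a fixed buffer, which can overflow for long file names. A more defensive variant might look like the sketch below; build_plot_command is a hypothetical helper (not part of Ninf-G), and rejecting names that contain a single quote is a simplifying assumption made here to keep the quoting trivial.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build "gnuplot '<src>' > '<out>'" in buf.
   Returns 0 on success, -1 if the command does not fit in buf
   or if a file name contains a single quote. */
int build_plot_command(char *buf, size_t size, const char *src, const char *out)
{
    int n;

    /* Programming errors, not runtime conditions. */
    assert(buf != NULL && size > 0 && src != NULL && out != NULL);

    /* A single quote inside a name would break the quoting used below. */
    if (strchr(src, '\'') != NULL || strchr(out, '\'') != NULL)
        return -1;

    /* snprintf never writes more than size bytes and reports truncation. */
    n = snprintf(buf, size, "gnuplot '%s' > '%s'", src, out);
    if (n < 0 || (size_t)n >= size)
        return -1;
    return 0;
}
```

With such a helper the stub body would call system(buf) only when the function returns 0.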

Compile and run

- Compile the program using the ns_client_gen command.
    % ns_client_gen -o myapp app.c
- Before running the application, generate a proxy certificate.
    % grid-proxy-init
- When running the application, the client configuration file must be passed as the first argument.
    % ./myapp config.cl [args…]

Client Configuration File (1/2)

- Specifies the runtime environment.
- Available attributes:
    - host: client's hostname (callback contact)
    - port: client's port number (callback contact)
    - serverhost: default server's hostname
    - ldaphost: hostname of GRIS/GIIS
    - ldapport: port number of GRIS/GIIS (default: 2135)
    - vo_name: Mds-Vo-Name for querying GIIS (default: local)
    - jobmanager: jobmanager to use (default: jobmanager)

Client Configuration File (2/2)

- Available attributes (cont'd):
    - loglevel: log level (0-3; 3 is the most detailed)
    - redirect_outerr: whether stdout/stderr are redirected to the client side (yes or no, default: no)
    - forkgdb, debug_exe: enable debugging of Ninf-G executables with gdb on the server side (TRUE or FALSE, default: FALSE)
    - debug_display: DISPLAY on which the xterm will be opened
    - debug_xterm: absolute path of the xterm command
    - debug_gdb: absolute path of the gdb command

Sample Configuration File

    # call remote library on UME cluster
    serverhost = ume.hpcc.jp
    # grd jobmanager is used to launch jobs
    jobmanager = jobmanager-grd
    # query to ApGrid GIIS
    ldaphost = mds.apgrid.org
    ldapport = 2135
    vo_name = ApGrid
    # get detailed log output
    loglevel = 3
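To make the "attribute = value" layout of the sample file concrete, here is a small sketch of how one such line could be split into its two parts. parse_config_line is a hypothetical function written for illustration; it is not Ninf-G's parser, and the real parser may treat whitespace, comments, or malformed lines differently.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Split one "key = value" line into key and val.
   Returns 1 if a pair was parsed, 0 for blank or '#' comment lines,
   and -1 for malformed lines or when a buffer is too small. */
int parse_config_line(const char *line, char *key, size_t ksize,
                      char *val, size_t vsize)
{
    const char *eq, *kend, *vstart, *vend;

    assert(line != NULL && key != NULL && val != NULL);
    assert(ksize > 0 && vsize > 0);

    while (isspace((unsigned char)*line))
        line++;
    if (*line == '\0' || *line == '#')
        return 0;

    eq = strchr(line, '=');
    if (eq == NULL)
        return -1;

    /* Trim trailing blanks from the key. */
    kend = eq;
    while (kend > line && isspace((unsigned char)kend[-1]))
        kend--;
    if (kend == line || (size_t)(kend - line) >= ksize)
        return -1;
    memcpy(key, line, (size_t)(kend - line));
    key[kend - line] = '\0';

    /* Trim blanks on both sides of the value. */
    vstart = eq + 1;
    while (isspace((unsigned char)*vstart))
        vstart++;
    vend = vstart + strlen(vstart);
    while (vend > vstart && isspace((unsigned char)vend[-1]))
        vend--;
    if ((size_t)(vend - vstart) >= vsize)
        return -1;
    memcpy(val, vstart, (size_t)(vend - vstart));
    val[vend - vstart] = '\0';
    return 1;
}
```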

Ninf Examples

Examples

- Ninfy an existing library
    - Matrix multiply
- Ninfy a task-parallel program
    - Calculate PI using a simple Monte Carlo method

Matrix Multiply

- Server side
    - Write an IDL file
    - Generate stubs
    - Register stub information to GRIS
- Client side
    - Change the local function call to a remote library call
    - Compile with ns_client_gen
    - Write a client configuration file
    - Run the application

Matrix Multiply - Sample Code

    void mmul(int n, double *a, double *b, double *c)
    {
        double t;
        int i, j, k;
        for (i = 0; i < n; i++) {
            for (j = 0; j < n; j++) {
                t = 0;
                for (k = 0; k < n; k++) {
                    t += a[i * n + k] * b[k * n + j];
                }
                c[i * n + j] = t;  /* row-major index uses n, the parameter */
            }
        }
    }

- The matrices do not carry their size as type information; the size is passed separately as n.
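Before turning a routine like this into a remote library, it can be worth checking it locally against a product that is easy to verify by hand. The sketch below repeats the routine (with the result index written in terms of n) and adds max_abs_diff, a hypothetical helper introduced here only for the comparison.

```c
#include <assert.h>

/* Row-major n x n matrix multiply: c = a * b. */
void mmul(int n, double *a, double *b, double *c)
{
    int i, j, k;

    assert(n > 0);
    for (i = 0; i < n; i++) {
        for (j = 0; j < n; j++) {
            double t = 0.0;
            for (k = 0; k < n; k++)
                t += a[i * n + k] * b[k * n + j];
            c[i * n + j] = t;
        }
    }
}

/* Largest absolute element-wise difference between two n x n matrices. */
double max_abs_diff(int n, const double *x, const double *y)
{
    double worst = 0.0;
    int i;

    for (i = 0; i < n * n; i++) {
        double d = x[i] - y[i];
        if (d < 0.0)
            d = -d;
        if (d > worst)
            worst = d;
    }
    return worst;
}
```

For example, multiplying [[1, 2], [3, 4]] by [[5, 6], [7, 8]] should give [[19, 22], [43, 50]], and multiplying any matrix by the identity should return the matrix unchanged.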

Matrix Multiply - Server Side (1/3)

- Write an IDL file describing the interface information (mmul.idl).

    Module mmul;
    Define mmul(IN int N, IN double A[N*N], IN double B[N*N], OUT double C[N*N])
    "Matrix Multiply: C = A x B"
    Required "mmul_lib.o"
    Calls "C" mmul(N, A, B, C);

Matrix Multiply - Server Side (2/3)

- Generate the stub source and compile it.
    > ns_gen mmul.idl
    > make -f mmul.mak

[Figure: build flow with the labels mmul.idl, ns_gen, mmul.mak, _stub_mmul.c, mmul_lib.o, cc, _stub_mmul, ::mmul.ldif]

Matrix Multiply - Server Side (3/3)

- Register the stub information to GRIS.

    dn: GridRPC-Funcname=mmul/mmul, Mds-Software-deployment=GridRPC-Ninf-G, __ROOT_DN__
    objectClass: GlobusSoftware
    objectClass: MdsSoftware
    objectClass: GridRPCEntry
    Mds-Software-deployment: GridRPC-Ninf-G
    GridRPC-Funcname: mmul/mmul
    GridRPC-Module: mmul
    GridRPC-Entry: mmul
    GridRPC-Path: /usr/users/yoshio/work/Ninf-G/test/_stub_mmul
    GridRPC-Stub:: PGZ1bmN0aW9uICB2ZXJzaW9uPSIyMjEuMDAwIiA+PGZ1bmN0aW9
     PSJtbXVsIiBlbnRyeT0ibW11bCIgLz4gPGFyZyBkYXRhX3R5cGU9ImludCIgbW9kZV90eXBl
     PSJpbiIgPgogPC9hcmc+CiA8YXJnIGRhdGFfdHlwZT0iZG91YmxlIiBtb2RlX3R5cGU9Imlu
     IiA+CiA8c3Vic2NyaXB0PjxzaXplPjxleHByZXNzaW9uPjxiaV9hcml0aG1ldGljIG5hbWU9

    > make -f mmul.mak install

Matrix Multiply - Client Side (1/3)

- Modify the source code.

    main(int argc, char **argv)
    {
        grpc_function_handle_t handle;
        …
        grpc_initialize(argv[1]);
        …
        grpc_function_handle_default(&handle, "mmul/mmul");
        …
        if (grpc_call(&handle, n, A, B, C) == GRPC_ERROR) {
            …
        }
        …
        grpc_function_handle_destruct(&handle);
        grpc_finalize();
    }

Matrix Multiply - Client Side (2/3)

- Compile the program with ns_client_gen.
    > ns_client_gen -o mmul_ninf mmul_ninf.c
- Generate a proxy certificate.
    > grid-proxy-init
- Write a client configuration file.
    serverhost = ume.hpcc.jp
    ldapport = 2135
    jobmanager = jobmanager-grd
    loglevel = 3
    redirect_outerr = no

Matrix Multiply - Client Side (3/3)

- Generate a proxy certificate.
    > grid-proxy-init
- Run.
    > ./mmul_ninf config.cl

Task Parallel Programs (Compute PI using Monte-Carlo Method)

- Generate a large number of random points within the unit square that exactly encloses one quarter of a unit circle.
    - PI = 4p, where p is the fraction of the points that fall inside the quarter circle.
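The relation PI = 4p follows from comparing areas. A short derivation, using N for the number of points and N_in for the number that land inside the quarter circle (both symbols are introduced here for illustration):

```latex
% Points (x, y) are uniform on the unit square [0,1] x [0,1].
\[
  p \;=\; \Pr\!\left[x^2 + y^2 < 1\right]
    \;=\; \frac{\text{area of quarter circle}}{\text{area of unit square}}
    \;=\; \frac{\pi/4}{1},
  \qquad\text{so}\qquad
  \pi \;=\; 4p \;\approx\; 4\,\frac{N_{\mathrm{in}}}{N}.
\]
% Each point is an independent Bernoulli trial with success probability p,
% so the standard error of the estimate shrinks as 1/sqrt(N):
\[
  \sigma_{\hat\pi} \;=\; 4\sqrt{\frac{p\,(1-p)}{N}} \;\approx\; \frac{1.64}{\sqrt{N}} .
\]
```

Because the error falls only as the inverse square root of N, many points are needed, and since the trials are independent they can be split across servers freely.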

Compute PI - Server Side

pi.idl:

    Module pi;
    Define pi_trial(IN int seed, IN long times, OUT long *count)
    "monte carlo pi computation"
    Required "pi_trial.o"
    {
        long counter;
        counter = pi_trial(seed, times);
        *count = counter;
    }

pi_trial.c:

    #include <stdlib.h>

    /* random() returns values in [0, 2^31 - 1]; RAND_MAX belongs to rand(). */
    #define RANDOM_MAX 2147483647.0

    long pi_trial(int seed, long times)
    {
        long l, counter = 0;
        srandom(seed);
        for (l = 0; l < times; l++) {
            double x = (double)random() / RANDOM_MAX;
            double y = (double)random() / RANDOM_MAX;
            if (x * x + y * y < 1.0)
                counter++;
        }
        return counter;
    }

Ongoing and Future Work Towards the release of Ninf-G Ver. 2

Planned Additional Features

- Get stub information without using LDAP
- Initialize multiple function handles with one GRAM call
- Callback from the remote executable to the client
    - heartbeat
    - visualize
    - debugging
    - …
- Stateful stubs (remote object)
- Revise the structure of the client configuration file
    - multiple servers
    - jobmanager for each server
    - multiple ldapserver
    - …

Ninf-G Demonstration

Weather Forecasting System

- Goal
    - Long-term, global weather prediction
        - Winding of the jet stream
        - Blocking phenomenon of high atmospheric pressure
- Barotropic S-Model
    - Weather forecasting model proposed by Prof. Tanaka
    - Simple and precise
        - Models complicated 3D turbulence as a horizontal one
        - 200 sec for a 100-day prediction per simulation (Pentium III machine)
- Keep high precision over long periods
    - Taking a statistical ensemble mean
        - ~50 simulations
    - Introducing perturbation at every time step
    - Typical parameter survey
- Gridifying the program enables quick response.

Ninfy the Program

- Divide the program into two parts as a client-server system.
    - Client:
        - Pre-processing: reading input data
        - Post-processing: averaging results of ensembles, visualizing results
    - Server:
        - Weather forecasting simulation
- Integrate the system using grid middleware.
    - Ninf-G library: communication between client and server
    - GridLib portal: easy and secure access from a user's machine

[Figure labels: user, Web browser, GridLib, Ninf-G, S-model Program, Reading data, Solving Equations, Averaging results, Visualizing results]

GO TO DEMO!

[Figure: demo architecture, with the following labels.
 User side: user, client auth.
 GridLib Portal: HTTP server + Servlet (Apache + Tomcat); Core (SignOn/SignOff, Job Control); Job Queuing Manager & Signing Server (submission/query/cancel); accounting information; JDBC Interface (TCP/IP); Accounting DB (Postgres).
 Execution: globusrun; weather forecast client program; "Call remote server program using Ninf-G".
 ApGrid servers running the weather forecast server program: 64-CPU cluster at AIST, Japan; 64-CPU cluster at KISTI, Korea; 22-CPU cluster at KU, Thailand.]

Acknowledgement

- All users of Ninf-G
- ApGrid participants, especially
    - KISTI (Sangwan, …)
    - KU (Sugree, …)
    - SDSC (Mason, …)

For More Info

- GridRPC Tutorial at GGF7
    - GridRPC: Ninf-G and NetSolve
        - Hidemoto Nakada (AIST) and Keith Seymour (UTK)
        - March 4 (Tue) 13:30 - 17:30
        - March 7 (Fri) 13:30 - 17:30
- Ninf home page
    - http://ninf.apgrid.org
- GGF APM WG home page
    - http://www.globalgridforum.org/
- Contacts
    - ninf@apgrid.org
