- Number of slides: 26

2009 International Workshop on Multi-Core Computing Systems (MuCoS'09)
Fukuoka, Japan, March 16-19, 2009, in conjunction with CISIS'09

Optimistic Parallel Discrete Event Simulation Based on Multi-core Platform and its Performance Analysis

Nianle Su, Hongtao Hou, Feng Yang, Qun Li and Weiping Wang
System Simulation Laboratory, National University of Defense Technology, China
sunianle@nudt.edu.cn

Agenda
- Introduction
- Multi-core computer
- Optimistic parallel discrete event simulation based on multi-core platform
  - Parallel programming model
  - Synchronization algorithm
  - Optimistic PDES simulator
- Performance analysis
- Conclusion and future work

1. Introduction
- Hardware trend: the multi-core era
- Software trend: the concurrency revolution
- Modeling and simulation field
  - As the modeled physical systems become more complicated, simulation applications demand ever higher execution speed.
  - Parallel simulation is an effective way to speed up simulation runs.
  - Multi-core computers offer parallel simulation a new computing platform with a high performance-to-price ratio and a small footprint.

This paper studies parallel discrete event simulation on a multi-core platform using an optimistic synchronization algorithm.

2. Multi-core computer
- Thread-level parallelism
  - Simultaneous multi-threading
  - Chip multi-processor
    - Multi-core
    - Many-core
- Two conclusions for applications running on a multi-core platform
  - Conclusion 1. When multiple applications are executed, a multi-core platform performs better than a single-core platform with the same clock frequency.
  - Conclusion 2. Applications that are not parallelized cannot make full use of the computing power of a multi-core platform.

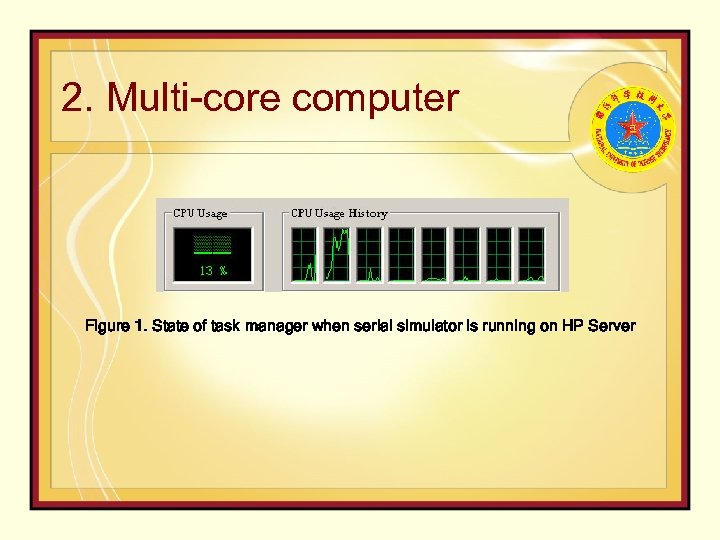

2. Multi-core computer

Figure 1. State of the task manager when the serial simulator is running on the HP server

3. Optimistic parallel discrete event simulation based on multi-core platform
- Discrete event simulation
- Parallel discrete event simulation (PDES)
- Platform
  - Traditional supercomputer
    - Shared-memory multiprocessors
    - Distributed-memory multicomputers
    - These machines are too expensive for most users, which limits the wider adoption of PDES.
  - Multi-core computer
    - High performance-to-price ratio, low purchasing risk, easy to move, high memory access speed, Windows OS compatible, easy to operate, etc.

Platform of PDES: a shift from the traditional supercomputer to the multi-core computer.
Future desktop simulation: parallel simulation based on a multi-core or many-core platform.

3. Optimistic parallel discrete event simulation based on multi-core platform
- To parallelize discrete event simulation on a multi-core platform, the two most important problems to solve are:
  - the parallel programming model
  - the synchronization algorithm

3.1. Parallel programming model
- Function: to partition the simulation into multiple logical processes (LPs) and distribute these LPs among the execution cores of the multi-core platform.
- Shared memory model: a software thread is created for each LP, and threads communicate with each other by accessing shared variables and using thread synchronization primitives. Threads can be created through:
  - system calls (Windows and Unix system functions)
  - thread libraries (such as Win32 threads, POSIX threads, OpenMP, Threading Building Blocks)
  - programming language support (such as Java and C#)
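
The shared memory model can be illustrated with a minimal sketch. It is not the simulator described in these slides: the ring topology, the function names `lp_thread` and `run_ring`, and the use of Python's `threading` and `queue` modules are assumptions made only for illustration. Each LP is one thread, the inboxes are shared objects, and a lock protects the shared log.

```python
import queue
import threading


def lp_thread(lp_id, inboxes, log, lock):
    """One LP per thread: take an event from the shared inbox, record it, forward it."""
    n = len(inboxes)
    while True:
        event = inboxes[lp_id].get()      # blocks until an event or a stop signal arrives
        if event is None:                 # None is the (hypothetical) stop signal
            return
        timestamp, hops_left = event
        with lock:                        # synchronization primitive guarding shared data
            log.append((timestamp, lp_id))
        if hops_left == 0:
            for box in inboxes:           # tell every LP, including this one, to stop
                box.put(None)
        else:
            inboxes[(lp_id + 1) % n].put((timestamp + 1, hops_left - 1))


def run_ring(num_lps=4, hops=7):
    """Pass a single event around a ring of LP threads and return the shared log."""
    inboxes = [queue.Queue() for _ in range(num_lps)]
    log, lock = [], threading.Lock()
    threads = [
        threading.Thread(target=lp_thread, args=(i, inboxes, log, lock))
        for i in range(num_lps)
    ]
    for t in threads:
        t.start()
    inboxes[0].put((0, hops))             # initial event for LP 0
    for t in threads:
        t.join()
    return log
```

The operating system schedules the threads; the sketch never assigns a thread to a core.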

3.1. Parallel programming model
- Message passing model: a software process is created for each LP, and processes communicate with each other by sending and receiving explicit messages.
  - MPI
  - PVM
- In both models, the created processes or threads are scheduled by the operating system and are generally assigned the same priority. Programmers do not need to distribute them to the execution cores manually.
- Because the message passing model is highly portable, MPI is chosen in this paper.

3.1. Parallel programming model
- With MPI adopted, all interaction among LPs in PDES takes place through explicit messages.
- Several kinds of messages need to be transferred:
  - initialization message
  - start message
  - event message
  - negative event message
  - GVT update message
  - terminate token
- Before these messages are sent, they have to be transformed into a byte stream through serialization. After the byte stream is received, it has to be transformed back into the different kinds of messages through deserialization.
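
A small sketch may make the serialization step concrete. The slides do not give the wire format, so the header layout below (kind, sender, receiver, time-stamp, payload length) and the names `MsgKind`, `serialize` and `deserialize` are assumptions chosen for illustration; only the six message kinds come from the list above.

```python
import struct
from enum import IntEnum


class MsgKind(IntEnum):
    """The six message kinds listed on the slide; the numeric codes are assumptions."""
    INIT = 0
    START = 1
    EVENT = 2
    NEGATIVE_EVENT = 3
    GVT_UPDATE = 4
    TERMINATE = 5


# Hypothetical fixed header: kind (1 byte), sender and receiver (int32),
# time-stamp (float64), payload length (uint32), little-endian, no padding.
_HEADER = struct.Struct("<BiidI")


def serialize(kind, sender, receiver, timestamp, payload=b""):
    """Turn one message into a byte stream that a message-passing library could send."""
    return _HEADER.pack(kind, sender, receiver, timestamp, len(payload)) + payload


def deserialize(data):
    """Rebuild the message fields from a received byte stream."""
    kind, sender, receiver, timestamp, length = _HEADER.unpack_from(data)
    payload = data[_HEADER.size:_HEADER.size + length]
    return MsgKind(kind), sender, receiver, timestamp, payload
```

The receiver reads the kind field first and can then dispatch on it, which is why the kind is placed at the start of the header in this sketch.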

3.2. Synchronization algorithm
- With the parallel programming model, multiple LPs are distributed to multiple cores of the multi-core platform and executed simultaneously. Unfortunately, there is then no guarantee that events arrive at an LP in time-stamp order.
- This is called the synchronization problem, and it is the central problem of PDES.
- A synchronization algorithm is needed to ensure that events are processed in the correct order and that the parallel execution of the simulator yields the same results as a sequential execution.
- Optimistic synchronization vs. conservative synchronization
- Optimistic algorithms use a detection and recovery approach.

3.2. Synchronization algorithm
- Concepts related to optimistic algorithms
  - State saving: in order to recover from errors, the state of an LP should be saved before it processes events. Commonly used methods include whole state saving, periodic state saving, incremental state saving and reverse computation.
  - Roll back: when an LP receives an event with a time-stamp smaller than its local simulation clock (such an event is called a straggler event), it should restore its state and send anti-messages to cancel the events it sent earlier. This process is called roll back.
  - Global Virtual Time (GVT): GVT at wallclock time T (GVT_T) during the simulation execution is defined as the minimum time-stamp among all unprocessed and partially processed messages and anti-messages in the system at wallclock time T. Samadi's GVT algorithm and Mattern's GVT algorithm are two of the most commonly used algorithms.
  - Fossil collection: an optimistic synchronization algorithm consumes much memory to save states and events. After GVT is calculated, the memory used by states and events that are older than GVT can be reclaimed and reused. This process is called fossil collection.
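
The four concepts can be sketched together in a few lines. This is a simplified illustration, not the simulator from these slides: the class `TimeWarpLP`, the toy state update `state * 2 + value`, and the snapshot-style `compute_gvt` helper (which is neither Samadi's nor Mattern's algorithm) are all assumptions, and anti-messages are only noted in a comment because the toy LP sends no events.

```python
class TimeWarpLP:
    """Toy optimistic LP using whole state saving before every event."""

    def __init__(self):
        self.lvt = 0.0        # local virtual time
        self.state = 0        # toy model state; the update below is order-dependent
        self.processed = []   # (timestamp, value, state_before) in time-stamp order
        self.rollbacks = 0

    def _process(self, timestamp, value):
        self.processed.append((timestamp, value, self.state))  # state saving
        self.state = self.state * 2 + value
        self.lvt = timestamp

    def receive(self, timestamp, value):
        redo = []
        if timestamp < self.lvt:                 # straggler event detected
            self.rollbacks += 1
            while self.processed and self.processed[-1][0] > timestamp:
                ts, val, saved = self.processed.pop()
                self.state = saved               # restore the saved state
                redo.append((ts, val))
            # A full simulator would also send anti-messages here to cancel
            # events that the undone events had sent to other LPs.
        self._process(timestamp, value)
        for ts, val in reversed(redo):           # re-execute undone events in order
            self._process(ts, val)

    def fossil_collect(self, gvt):
        """Reclaim saved states and events that are older than GVT."""
        self.processed = [e for e in self.processed if e[0] >= gvt]


def compute_gvt(pending_timestamps):
    """GVT over one consistent snapshot: the minimum pending time-stamp."""
    return min(pending_timestamps)
```

For example, delivering events with time-stamps 1, 3, 2 causes one rollback, after which the state equals the state produced by processing 1, 2, 3 in order.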

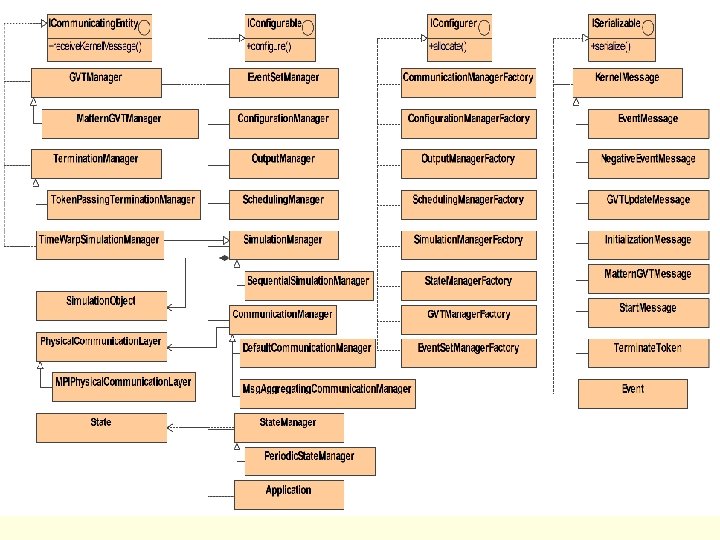

Optimistic PDES simulator
- Existing PDES simulators commonly run on parallel computers or clusters with Linux or Unix OS. They cannot run directly on a multi-core platform with Windows OS.
- Referring to open-source PDES simulators such as WARPED 2, we choose the C++ language and the MPICH message passing library to develop an optimistic PDES simulator that runs effectively on a multi-core computer with Windows OS.

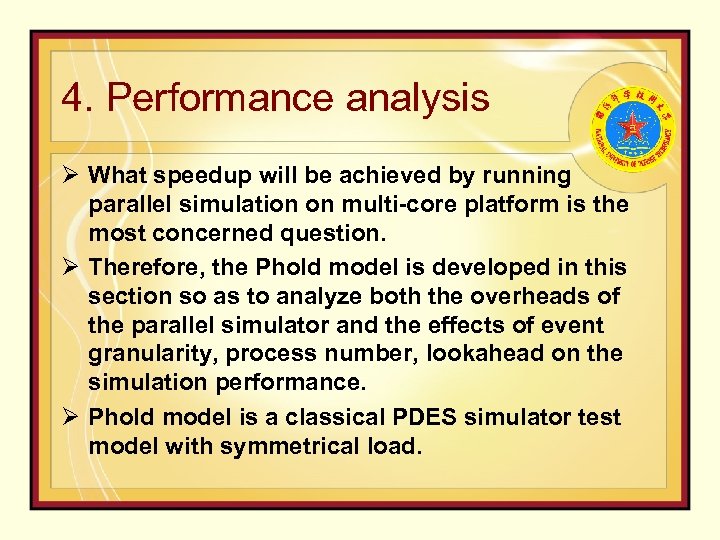

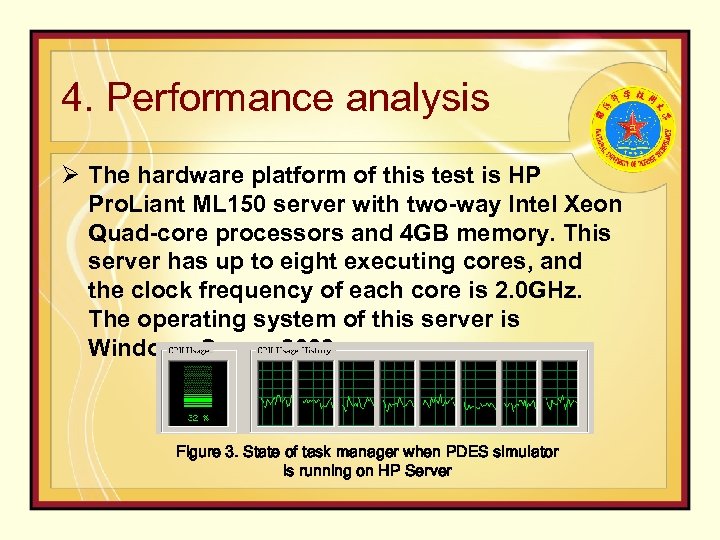

4. Performance analysis
- The question of greatest concern is what speedup can be achieved by running parallel simulation on a multi-core platform.
- Therefore, the Phold model is developed in this section to analyze both the overheads of the parallel simulator and the effects of event granularity, process number, lookahead and event locality on simulation performance.
- The Phold model is a classical test model for PDES simulators with a symmetrical load.

Phold Model

There are N entities that are distributed to M LPs, which are in turn distributed to M processor cores. In the initial phase of the simulation, each entity generates an initial event. During the simulation, when an entity receives an event, it executes G matrix multiplication operations and then generates a new event.

The time-stamp of the new event is determined as follows:
- If RANDdt is true, the time-stamp is LVT + lookahead + dt, where LVT is the local virtual time of the entity, lookahead is the minimum time between two events, and dt is a random variable uniformly distributed between 0 and 1.
- If RANDdt is false, the time-stamp is LVT + lookahead.

The receiver of the new event is determined as follows:
- If RANDdest is false, the receiver is the next adjacent entity.
- If RANDdest is true, a random number is generated. If this number is smaller than locality (a factor that expresses the ratio of local events to global events), an entity is randomly chosen from the local LP as the receiver; otherwise an entity is randomly chosen from all LPs.

The simulation terminates at time Tend.
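
The event-generation rules of the Phold model can be sketched as a single function. The function name `next_event`, the entity numbering 0 to N-1, the contiguous assignment of entities to LPs, and the wrap-around from the last entity to the first are assumptions for illustration; the slides do not specify them. The G matrix multiplications are omitted because they only add computation load.

```python
import random


def next_event(entity, lvt, *, num_entities, entities_per_lp,
               lookahead, rand_dt, rand_dest, locality, rng):
    """Return (time-stamp, receiver) of the event that `entity` generates at `lvt`."""
    # Time-stamp rule: LVT + lookahead, plus dt ~ U[0, 1) when RANDdt is true.
    dt = rng.random() if rand_dt else 0.0
    timestamp = lvt + lookahead + dt

    # Receiver rule.
    if not rand_dest:
        receiver = (entity + 1) % num_entities          # next adjacent entity
    elif rng.random() < locality:
        # Local event: pick an entity hosted on the sender's own LP.
        first = (entity // entities_per_lp) * entities_per_lp
        receiver = rng.randrange(first, first + entities_per_lp)
    else:
        # Global event: pick an entity from all LPs.
        receiver = rng.randrange(num_entities)
    return timestamp, receiver


# Example: the deterministic setting RANDdt = RANDdest = false with N = 8.
rng = random.Random(0)
ts, dst = next_event(7, 10.0, num_entities=8, entities_per_lp=1, lookahead=2,
                     rand_dt=False, rand_dest=False, locality=0.5, rng=rng)
```

Passing the random generator in explicitly keeps runs reproducible, which helps when comparing a serial run with a parallel run.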

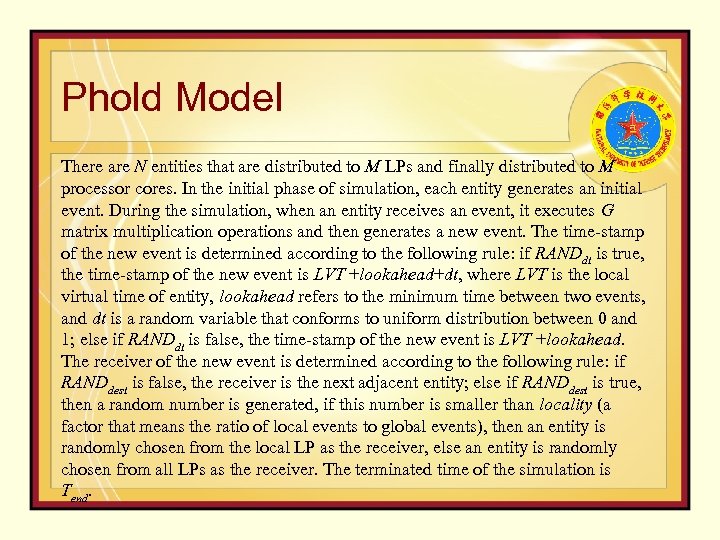

4. Performance analysis
- The hardware platform of this test is an HP ProLiant ML150 server with two Intel Xeon quad-core processors and 4 GB of memory. The server has eight execution cores in total, and the clock frequency of each core is 2.0 GHz. The operating system is Windows Server 2003.

Figure 3. State of the task manager when the PDES simulator is running on the HP server

4. Performance analysis
- Two indexes are usually used to measure the performance of a PDES simulator:
  - Speedup: the ratio of serial running time to parallel running time.
  - Event execution efficiency (efficiency, for short): the ratio of submitted events to processed events during the optimistic parallel simulation. The higher the efficiency, the fewer rollbacks occur.
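
The two indexes translate directly into code. In this sketch the function names and the sample numbers are hypothetical and are not measurements from the paper; "submitted events" is read here as events that are never rolled back, which the PDES literature usually calls committed events.

```python
def speedup(serial_time, parallel_time):
    """Ratio of serial running time to parallel running time."""
    return serial_time / parallel_time


def efficiency(submitted_events, processed_events):
    """Ratio of submitted (never rolled back) events to all processed events."""
    return submitted_events / processed_events


# Hypothetical run: 120 s serially, 30 s in parallel,
# 1000 events processed of which 800 were never rolled back.
s = speedup(120.0, 30.0)
e = efficiency(800, 1000)
```

An efficiency of 1.0 means that no processed event was ever undone, so no rollback occurred.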

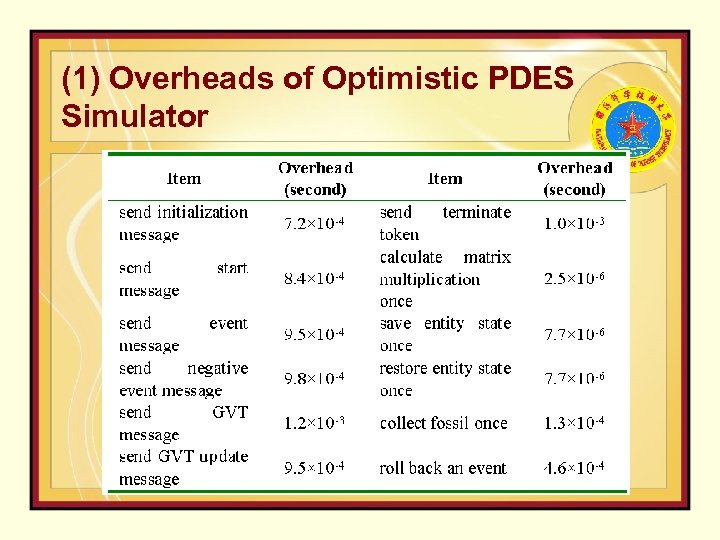

(1) Overheads of Optimistic PDES Simulator

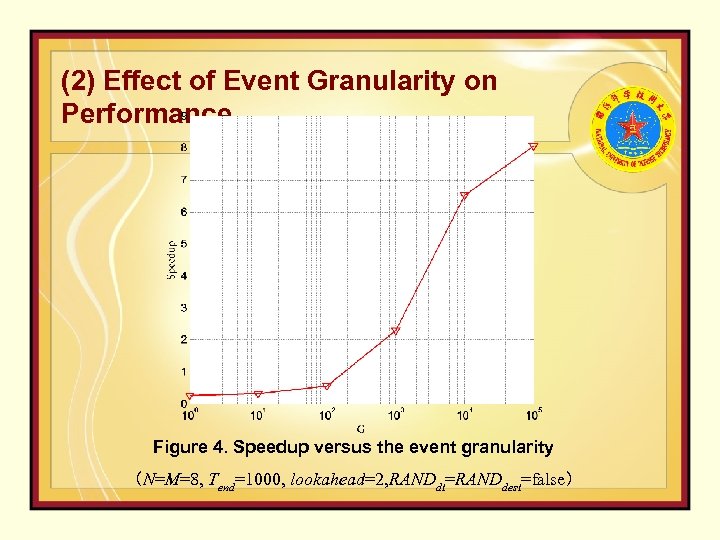

(2) Effect of Event Granularity on Performance

Figure 4. Speedup versus the event granularity (N=M=8, Tend=1000, lookahead=2, RANDdt=RANDdest=false)

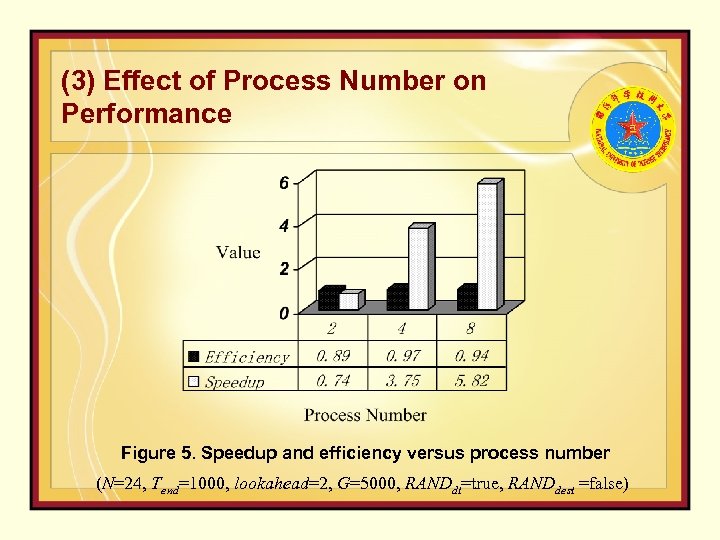

(3) Effect of Process Number on Performance

Figure 5. Speedup and efficiency versus process number (N=24, Tend=1000, lookahead=2, G=5000, RANDdt=true, RANDdest=false)

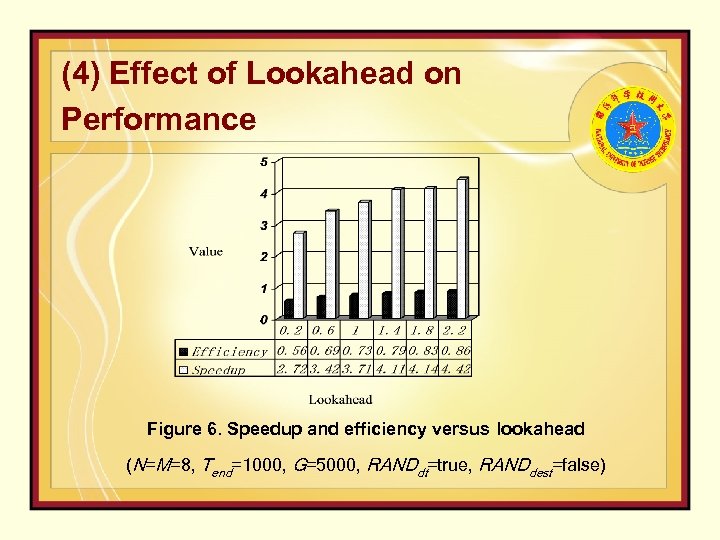

(4) Effect of Lookahead on Performance

Figure 6. Speedup and efficiency versus lookahead (N=M=8, Tend=1000, G=5000, RANDdt=true, RANDdest=false)

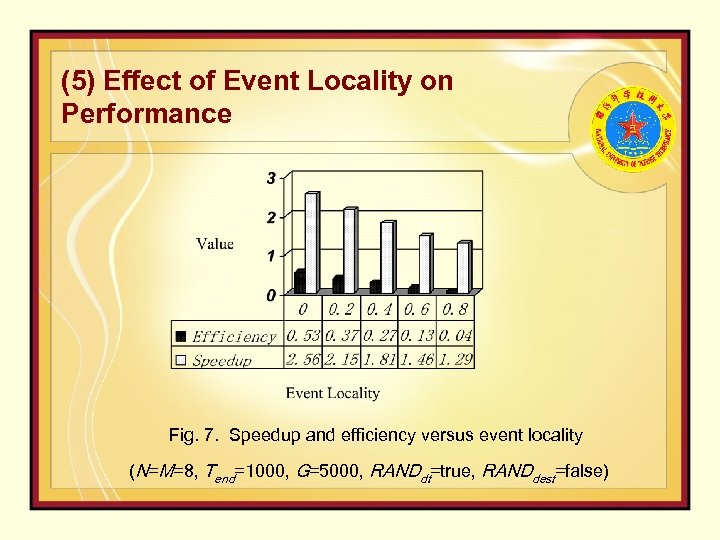

(5) Effect of Event Locality on Performance

Figure 7. Speedup and efficiency versus event locality (N=M=8, Tend=1000, G=5000, RANDdt=true, RANDdest=false)

5. Conclusion
- In this paper, we make an effort to run parallel simulation on a desktop multi-core platform.
- The experimental results show that optimistic PDES on a multi-core platform can achieve good speedup for applications with coarse-grained events.
- As multi-core processors continue to develop, more execution cores will become available, and the performance of PDES on multi-core platforms is expected to improve accordingly.
- PDES based on multi-core platforms has good prospects. Parallel simulation, which formerly could only be run on supercomputers and large-scale clusters, may now be applied in desktop simulation software.

What are we doing now?
- The key to parallel simulation based on a desktop multi-core platform
  - Easy development of parallel models
  - Easy execution of parallel simulation
  - Stability of performance gain
- How?
  - Parallelization of the simulation model specification SMP 2 (Simulation Model Portability specification, version 2)
    - Model-driven and component-based design and development method for parallel simulation models
    - Automated model partitioning
    - Automated selection of synchronization algorithms
