e76e590ec2b2de19eec72609d12b24dd.ppt

- Number of slides: 100

1 Temporal Information Extraction
Inderjeet Mani, imani@mitre.org

2 Outline
- Introduction
- Linguistic Theories
- AI Theories
- Annotation Schemes
- Rule-based and machine-learning methods
- Challenges
- Links

3 Motivation: Question-Answering
- When is Ramadan this year?
- What was the largest U.S. military operation since Vietnam?
- Tell me the best time of the year to go cherry picking.
- How often do you feed a pet gerbil?
- Is Gates currently CEO of Microsoft?
- Did the Enron merger with Dynegy take place?
- How long did the hostage situation in Beirut last?
- What is the current unemployment rate?
- How many Iraqi civilian casualties were there in the first week of the U.S. invasion of Iraq?
- Who was Secretary of Defense during the Gulf War?

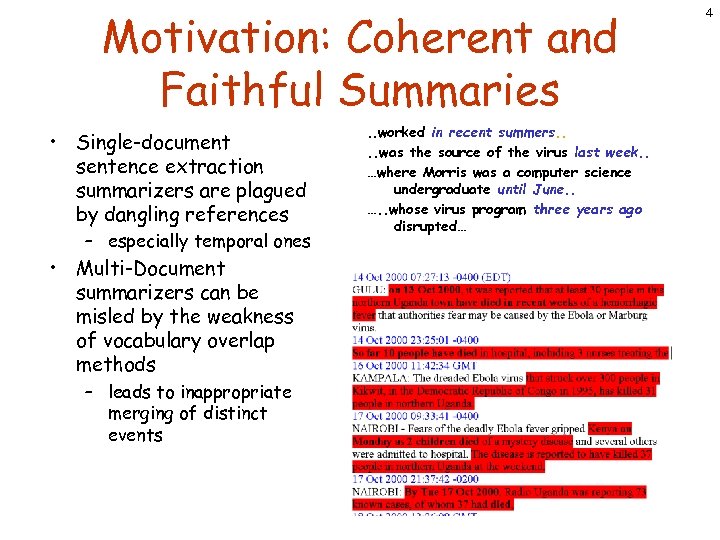

4 Motivation: Coherent and Faithful Summaries
- Single-document sentence-extraction summarizers are plagued by dangling references, especially temporal ones
- Multi-document summarizers can be misled by the weakness of vocabulary-overlap methods, which leads to inappropriate merging of distinct events
- Extracted fragments with dangling temporal references:
  - …worked in recent summers…
  - …was the source of the virus last week…
  - …where Morris was a computer science undergraduate until June…
  - …whose virus program three years ago disrupted…

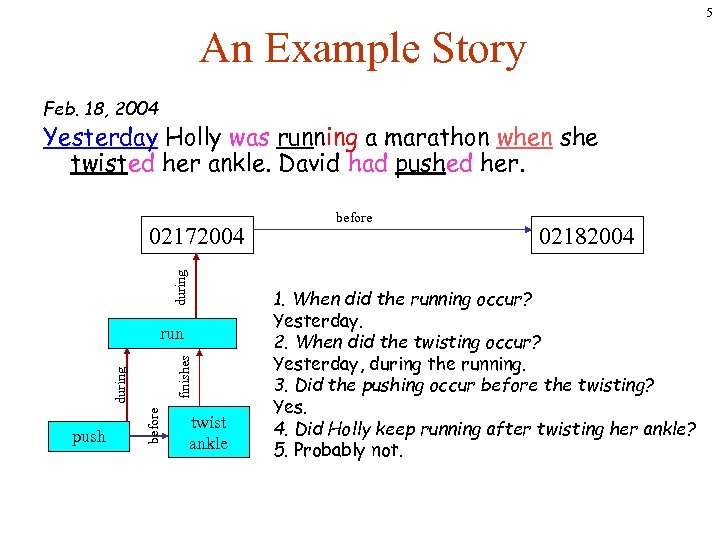

5 An Example Story
Feb. 18, 2004: Yesterday Holly was running a marathon when she twisted her ankle. David had pushed her.
[Diagram: timeline relating push, run, and twist ankle to 02172004 and 02182004 through the relations before, during, and finishes]
1. When did the running occur? Yesterday.
2. When did the twisting occur? Yesterday, during the running.
3. Did the pushing occur before the twisting? Yes.
4. Did Holly keep running after twisting her ankle? Probably not.

6 Temporal Information Extraction Problem
Feb. 18, 2004: Yesterday Holly was running a marathon when she twisted her ankle. David had pushed her.
- Input: a natural language discourse
- Output: a representation of events and their temporal relations
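
One way to picture the output side of this problem is as a small set of labeled links between events and times. The sketch below is only an illustration: the `TLink` class, the node names, and the particular links chosen for the Holly story are assumptions made here, not a representation defined in the slides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TLink:
    """A directed temporal link: `source` stands in `relation` to `target`."""
    source: str
    relation: str
    target: str


# Hypothetical encoding of the Holly story (document date: Feb. 18, 2004).
LINKS = [
    TLink("run", "DURING", "2004-02-17"),
    TLink("twist", "DURING", "2004-02-17"),
    TLink("twist", "FINISHES", "run"),
    TLink("push", "BEFORE", "twist"),
    TLink("2004-02-17", "BEFORE", "2004-02-18"),
]


def relation(source, target, links=LINKS):
    """Return the explicitly stated relation from source to target, if any."""
    for link in links:
        if link.source == source and link.target == target:
            return link.relation
    return None
```

A lookup such as `relation("push", "twist")` only reads off stated links; answering "Did Holly keep running?" would need inference over them, which this sketch does not attempt.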

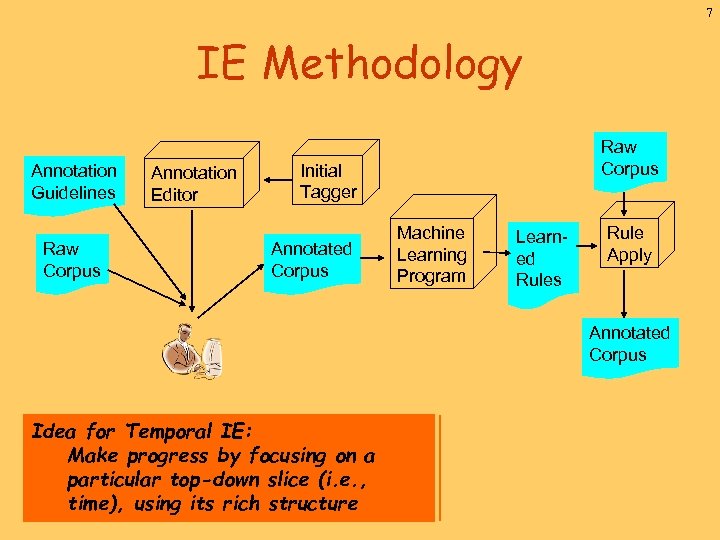

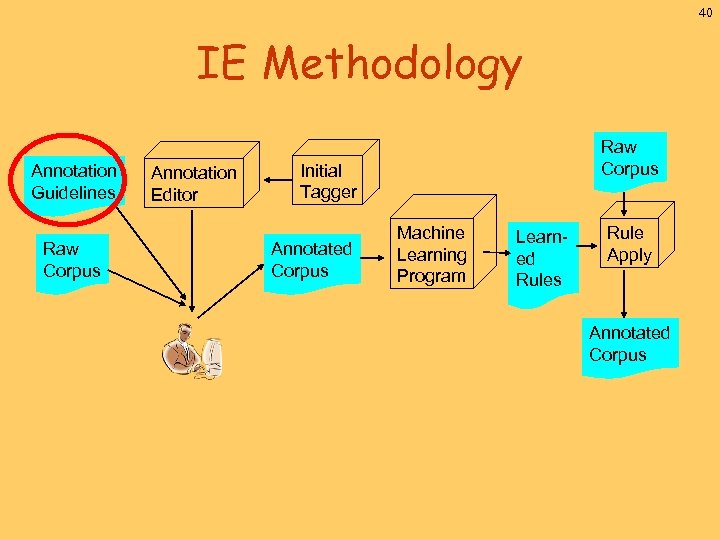

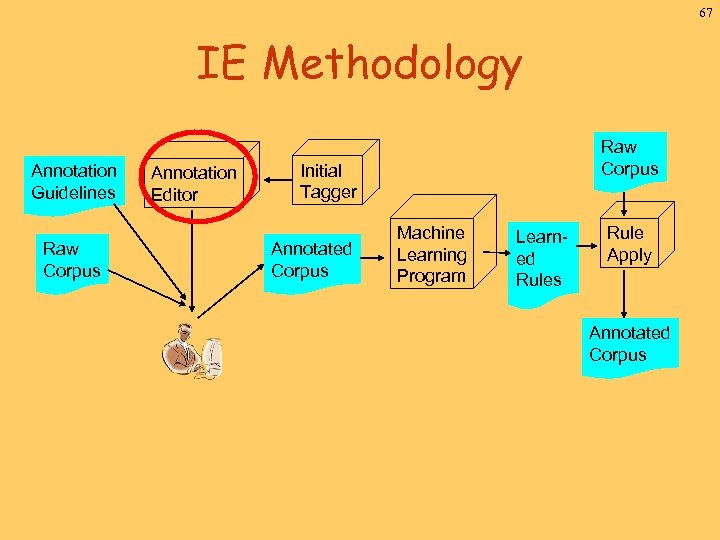

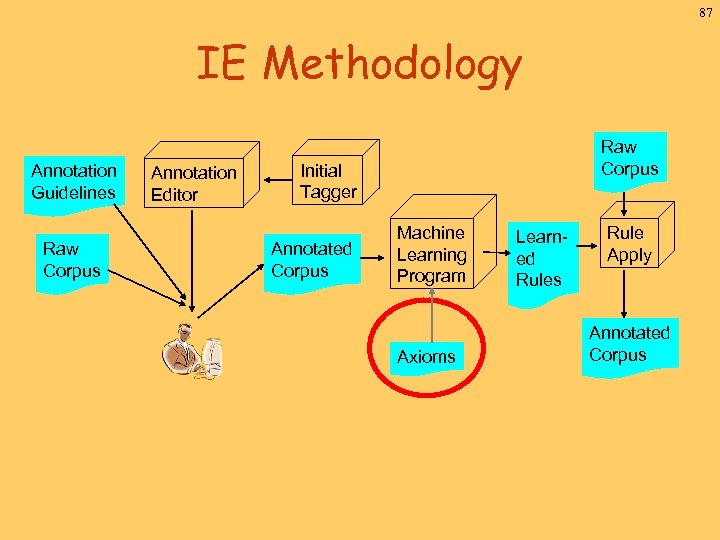

7 IE Methodology
[Diagram: flowchart linking Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Learned Rules, and Rule Apply]
- Idea for Temporal IE: make progress by focusing on a particular top-down slice (i.e., time), using its rich structure

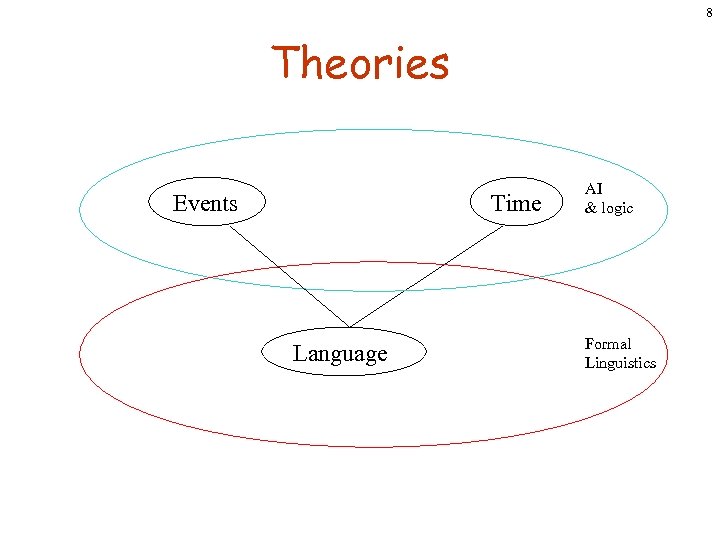

8 Theories Events Time Language AI & logic Formal Linguistics

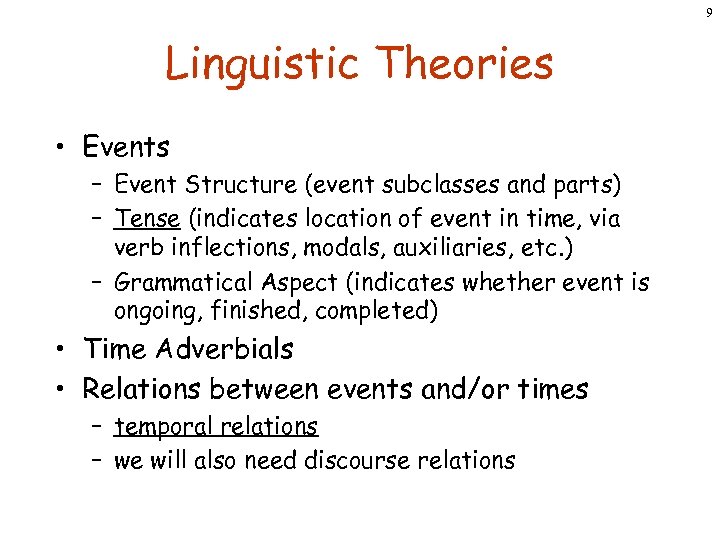

9 Linguistic Theories
- Events
  - Event Structure (event subclasses and parts)
  - Tense (indicates location of event in time, via verb inflections, modals, auxiliaries, etc.)
  - Grammatical Aspect (indicates whether event is ongoing, finished, completed)
- Time Adverbials
- Relations between events and/or times
  - temporal relations
  - we will also need discourse relations

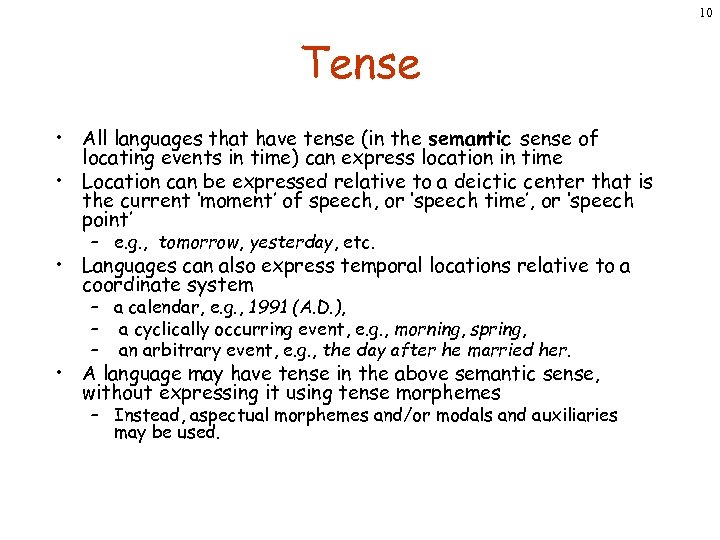

10 Tense
- All languages that have tense (in the semantic sense of locating events in time) can express location in time
- Location can be expressed relative to a deictic center that is the current ‘moment’ of speech, or ‘speech time’, or ‘speech point’
  - e.g., tomorrow, yesterday, etc.
- Languages can also express temporal locations relative to a coordinate system
  - a calendar, e.g., 1991 (A.D.),
  - a cyclically occurring event, e.g., morning, spring,
  - an arbitrary event, e.g., the day after he married her.
- A language may have tense in the above semantic sense without expressing it using tense morphemes
  - Instead, aspectual morphemes and/or modals and auxiliaries may be used.

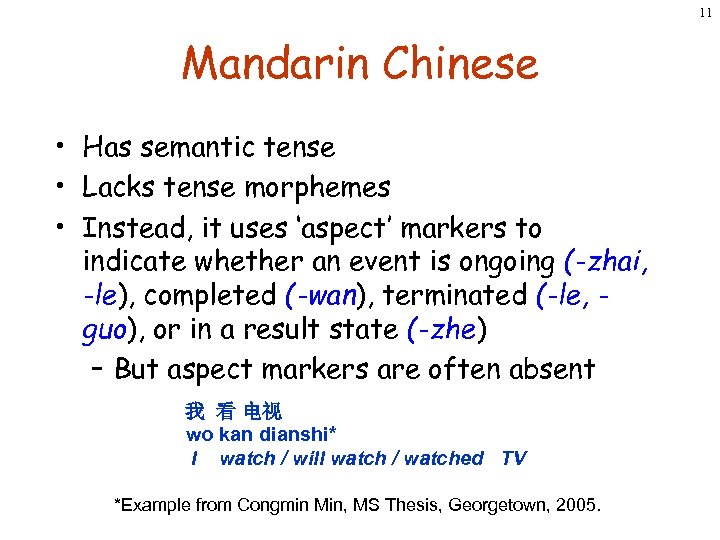

11 Mandarin Chinese
- Has semantic tense
- Lacks tense morphemes
- Instead, it uses ‘aspect’ markers to indicate whether an event is ongoing (-zai, -le), completed (-wan), terminated (-le, -guo), or in a result state (-zhe)
  - But aspect markers are often absent

我 看 电视
wo kan dianshi*
I watch / will watch / watched TV

*Example from Congmin Min, MS Thesis, Georgetown, 2005.

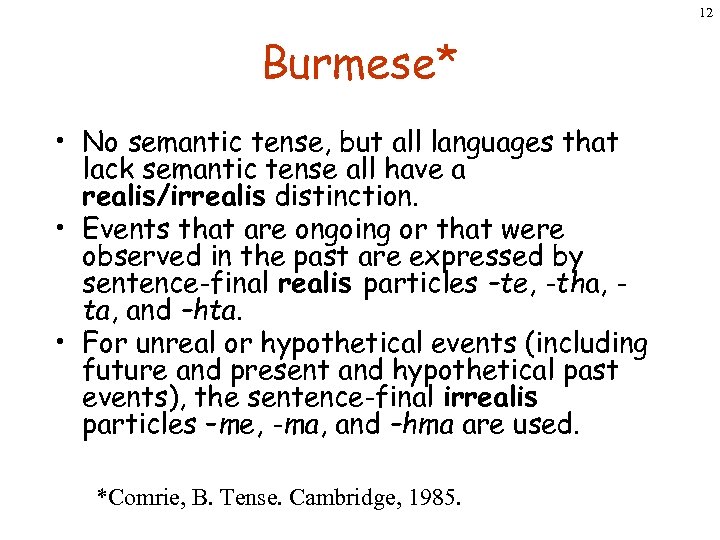

12 Burmese*
- No semantic tense, but languages that lack semantic tense all have a realis/irrealis distinction.
- Events that are ongoing or that were observed in the past are expressed by the sentence-final realis particles -te, -tha, -ta, and -hta.
- For unreal or hypothetical events (including future, present, and hypothetical past events), the sentence-final irrealis particles -me, -ma, and -hma are used.

*Comrie, B. Tense. Cambridge, 1985.

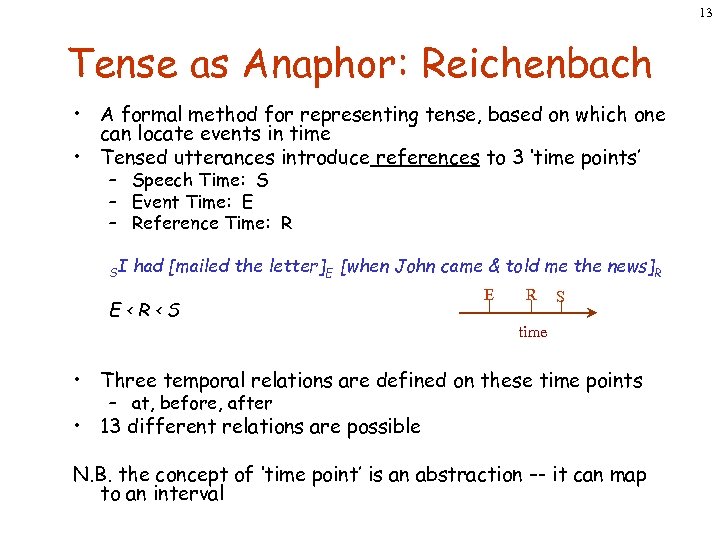

13 Tense as Anaphor: Reichenbach
- A formal method for representing tense, based on which one can locate events in time
- Tensed utterances introduce references to 3 ‘time points’
  - Speech Time: S
  - Event Time: E
  - Reference Time: R
- Example: I had [mailed the letter]E [when John came & told me the news]R, with E before R and R before S

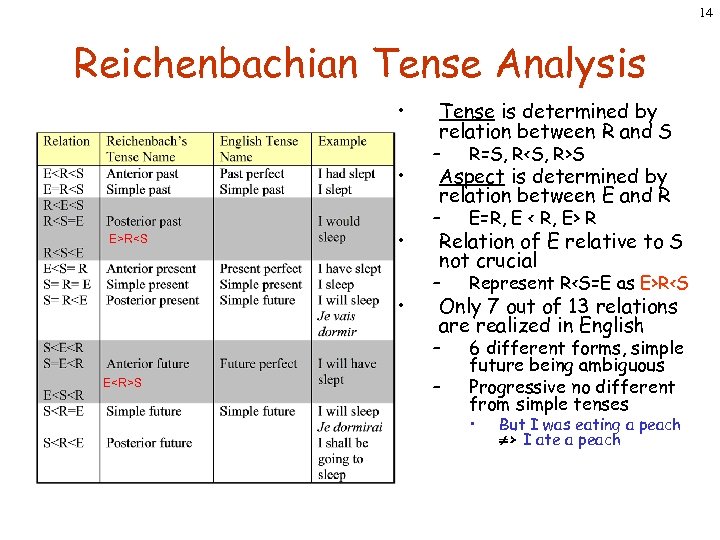

14 Reichenbachian Tense Analysis • Tense is determined by relation between R and S E>RS R=S, R I ate a peach
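
A minimal sketch of the Reichenbachian analysis, assuming time points are plain numbers. The function names are illustrative, and the labels anterior, simple, and posterior follow Reichenbach's usual terminology for the relation between E and R; they are not recoverable from the slide text itself.

```python
def tense(r, s):
    """Tense depends only on reference time R relative to speech time S."""
    if r < s:
        return "past"
    if r == s:
        return "present"
    return "future"


def perspective(e, r):
    """Position of event time E relative to reference time R."""
    if e < r:
        return "anterior"   # perfect-like: the event precedes the reference time
    if e == r:
        return "simple"
    return "posterior"


def describe(e, r, s):
    """Combine both relations into a Reichenbach-style label."""
    return f"{perspective(e, r)} {tense(r, s)}"
```

Under these assumptions, "I had mailed the letter" (E before R before S) comes out as anterior past, and "I ate a peach" (E equal to R, both before S) as simple past.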

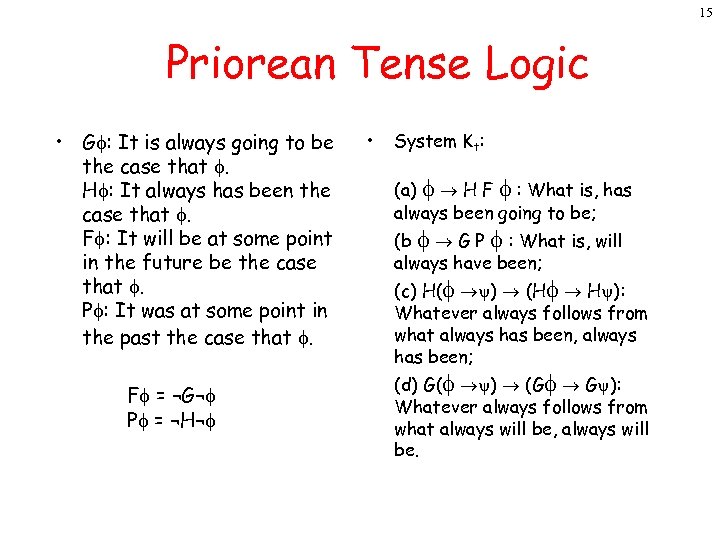

15 Priorean Tense Logic
- Gφ: It is always going to be the case that φ.
- Hφ: It always has been the case that φ.
- Fφ: It will at some point in the future be the case that φ.
- Pφ: It was at some point in the past the case that φ.
- Fφ = ¬G¬φ, Pφ = ¬H¬φ
- System Kt:
  - (a) φ → HFφ: What is, has always been going to be;
  - (b) φ → GPφ: What is, will always have been;
  - (c) H(φ → ψ) → (Hφ → Hψ): Whatever always follows from what always has been, always has been;
  - (d) G(φ → ψ) → (Gφ → Gψ): Whatever always follows from what always will be, always will be.
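
The four operators can be tried out on a toy model. This sketch assumes a finite, linearly ordered set of integer time points and treats a proposition as a function from a time to a truth value; the names `TIMES`, `Not`, and `rain` are illustrative only. A finite model has a first and a last moment, which Prior's logic does not require.

```python
TIMES = range(5)  # assumed toy timeline: 0, 1, 2, 3, 4


def F(phi):
    """phi holds at some later time."""
    return lambda t: any(phi(u) for u in TIMES if u > t)


def P(phi):
    """phi held at some earlier time."""
    return lambda t: any(phi(u) for u in TIMES if u < t)


def G(phi):
    """phi holds at every later time."""
    return lambda t: all(phi(u) for u in TIMES if u > t)


def H(phi):
    """phi held at every earlier time."""
    return lambda t: all(phi(u) for u in TIMES if u < t)


def Not(phi):
    return lambda t: not phi(t)


def rain(t):
    """Example proposition: true exactly at times 1 and 3."""
    return t in {1, 3}


# Dualities: F = not G not, P = not H not, checked at every time point.
dual_F = all(F(rain)(t) == Not(G(Not(rain)))(t) for t in TIMES)
dual_P = all(P(rain)(t) == Not(H(Not(rain)))(t) for t in TIMES)

# Kt axioms (a) and (b), checked for `rain` at every time point.
axiom_a = all((not rain(t)) or H(F(rain))(t) for t in TIMES)
axiom_b = all((not rain(t)) or G(P(rain))(t) for t in TIMES)
```

Iterated tenses are just nested calls, for example `P(F(P(rain)))` for the PFP pattern.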

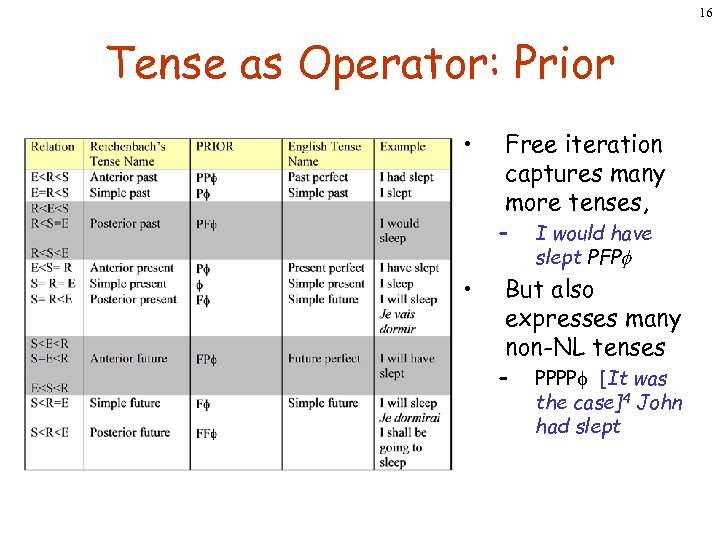

16 Tense as Operator: Prior
- Free iteration captures many more tenses
  - I would have slept: PFP
- But it also expresses many non-NL tenses
  - PPPP: [It was the case]⁴ John had slept

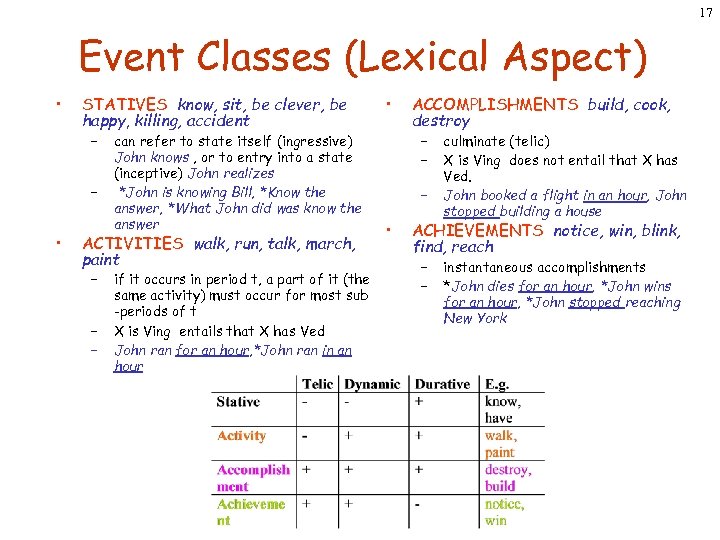

17 Event Classes (Lexical Aspect)
- STATIVES: know, sit, be clever, be happy, killing, accident
  - can refer to state itself (ingressive) John knows, or to entry into a state (inceptive) John realizes
  - *John is knowing Bill, *Know the answer, *What John did was know the answer
- ACTIVITIES: walk, run, talk, march, paint
  - if it occurs in period t, a part of it (the same activity) must occur for most sub-periods of t
  - X is Ving entails that X has Ved
  - John ran for an hour, *John ran in an hour
- ACCOMPLISHMENTS: build, cook, destroy
  - culminate (telic)
  - X is Ving does not entail that X has Ved
  - John booked a flight in an hour, John stopped building a house
- ACHIEVEMENTS: notice, win, blink, find, reach
  - instantaneous accomplishments
  - *John dies for an hour, *John wins for an hour, *John stopped reaching New York

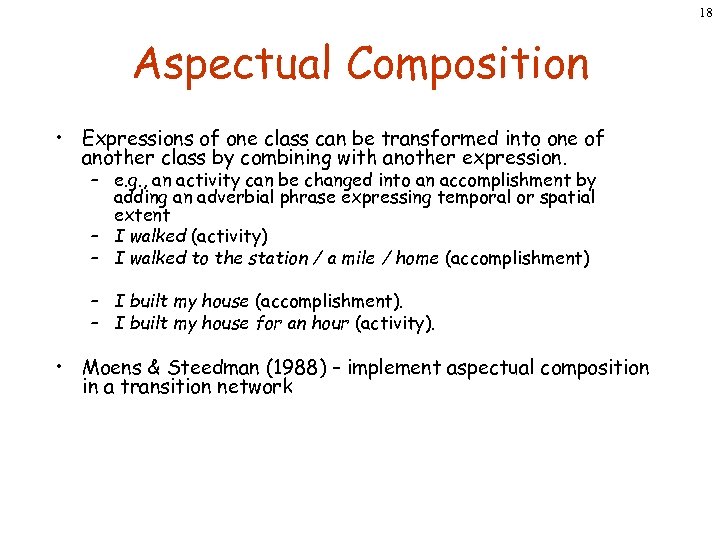

18 Aspectual Composition
- Expressions of one class can be transformed into one of another class by combining with another expression.
  - e.g., an activity can be changed into an accomplishment by adding an adverbial phrase expressing temporal or spatial extent
  - I walked (activity)
  - I walked to the station / a mile / home (accomplishment)
  - I built my house (accomplishment).
  - I built my house for an hour (activity).
- Moens & Steedman (1988)
  - implement aspectual composition in a transition network

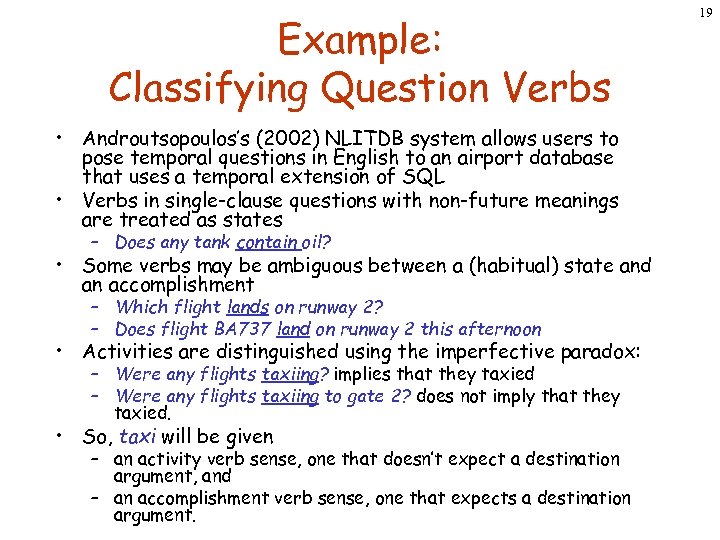

19 Example: Classifying Question Verbs
- Androutsopoulos’s (2002) NLITDB system allows users to pose temporal questions in English to an airport database that uses a temporal extension of SQL
- Verbs in single-clause questions with non-future meanings are treated as states
  - Does any tank contain oil?
- Some verbs may be ambiguous between a (habitual) state and an accomplishment
  - Which flight lands on runway 2?
  - Does flight BA 737 land on runway 2 this afternoon?
- Activities are distinguished using the imperfective paradox:
  - Were any flights taxiing? implies that they taxied
  - Were any flights taxiing to gate 2? does not imply that they taxied.
- So, taxi will be given
  - an activity verb sense, one that doesn’t expect a destination argument, and
  - an accomplishment verb sense, one that expects a destination argument.

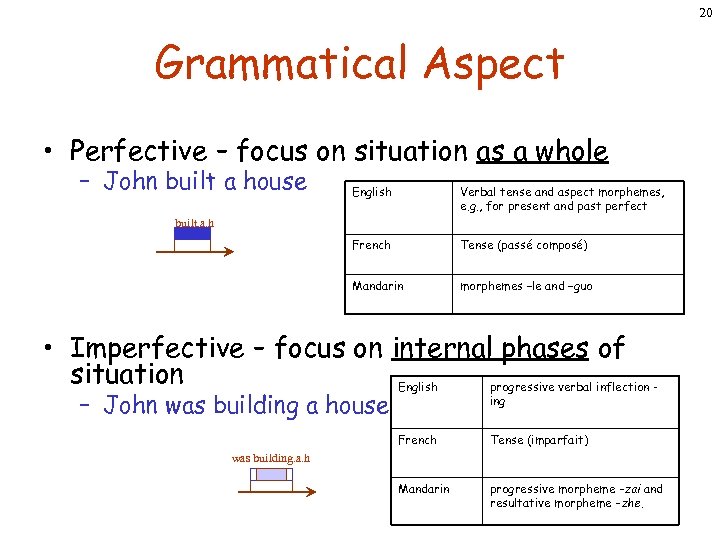

20 Grammatical Aspect
- Perfective: focus on situation as a whole
  - John built a house
  - English: verbal tense and aspect morphemes, e.g., for present and past perfect
  - French: tense (passé composé)
  - Mandarin: morphemes -le and -guo
- Imperfective: focus on internal phases of situation
  - John was building a house
  - English: progressive verbal inflection -ing
  - French: tense (imparfait)
  - Mandarin: progressive morpheme -zai and resultative morpheme -zhe

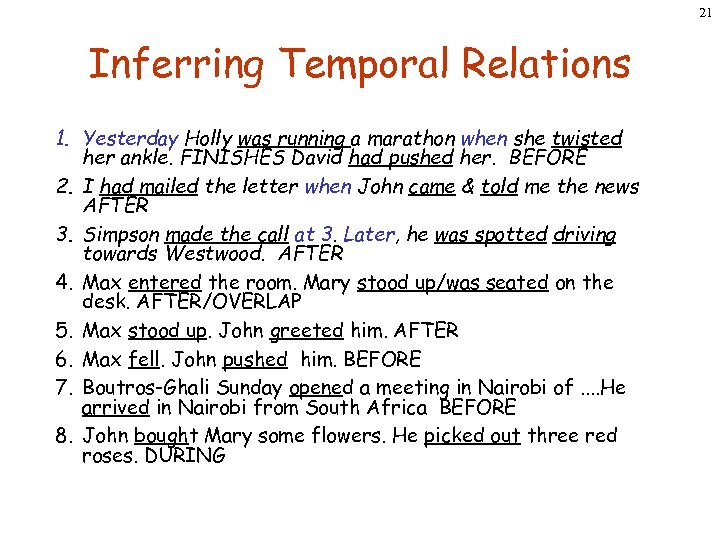

21 Inferring Temporal Relations
1. Yesterday Holly was running a marathon when she twisted her ankle. [FINISHES] David had pushed her. [BEFORE]
2. I had mailed the letter when John came & told me the news. [AFTER]
3. Simpson made the call at 3. Later, he was spotted driving towards Westwood. [AFTER]
4. Max entered the room. Mary stood up/was seated on the desk. [AFTER/OVERLAP]
5. Max stood up. John greeted him. [AFTER]
6. Max fell. John pushed him. [BEFORE]
7. Boutros-Ghali Sunday opened a meeting in Nairobi of… He arrived in Nairobi from South Africa. [BEFORE]
8. John bought Mary some flowers. He picked out three red roses. [DURING]

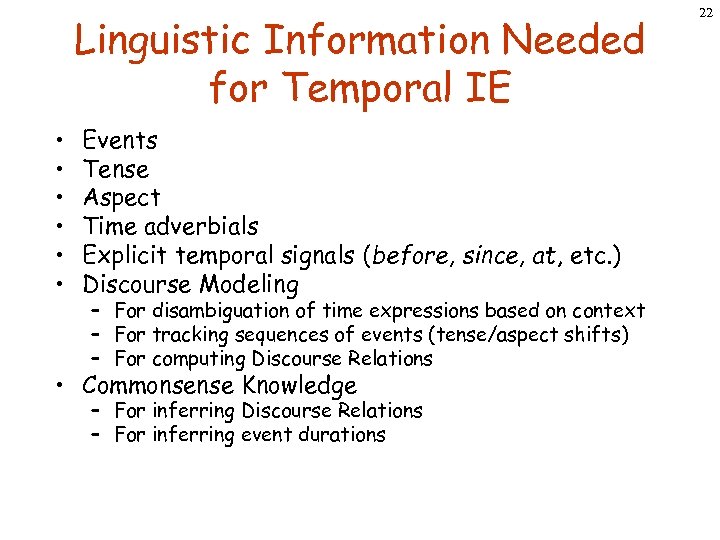

22 Linguistic Information Needed for Temporal IE
- Events
- Tense
- Aspect
- Time adverbials
- Explicit temporal signals (before, since, at, etc.)
- Discourse Modeling
  - For disambiguation of time expressions based on context
  - For tracking sequences of events (tense/aspect shifts)
  - For computing Discourse Relations
- Commonsense Knowledge
  - For inferring Discourse Relations
  - For inferring event durations

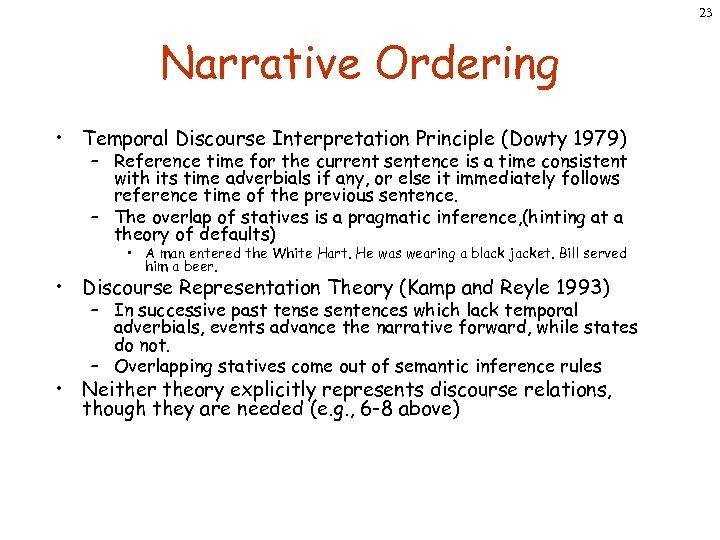

23 Narrative Ordering
- Temporal Discourse Interpretation Principle (Dowty 1979)
  - Reference time for the current sentence is a time consistent with its time adverbials, if any, or else it immediately follows the reference time of the previous sentence.
  - The overlap of statives is a pragmatic inference (hinting at a theory of defaults)
- A man entered the White Hart. He was wearing a black jacket. Bill served him a beer.
- Discourse Representation Theory (Kamp and Reyle 1993)
  - In successive past tense sentences which lack temporal adverbials, events advance the narrative forward, while states do not.
  - Overlapping statives come out of semantic inference rules
- Neither theory explicitly represents discourse relations, though they are needed (e.g., examples 6-8 above)
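
The default shared by both accounts (events move the narrative forward, states overlap the current reference point) can be sketched as a single pass over the clauses. This is a toy illustration, not either theory's actual machinery: the function name, the `(label, kind)` input format, and the relation labels are assumptions made here, and time adverbials and discourse relations are ignored.

```python
def order_narrative(clauses):
    """Apply the narrative default to a list of (label, kind) pairs.

    kind is "event" or "state". Returns (source, relation, target) triples.
    """
    links = []
    reference = None  # label of the event currently serving as reference point
    for label, kind in clauses:
        if reference is not None:
            if kind == "event":
                # A new event follows the current reference event.
                links.append((reference, "BEFORE", label))
            else:
                # A state overlaps the reference event and does not advance time.
                links.append((label, "OVERLAP", reference))
        if kind == "event":
            reference = label
    return links


white_hart = [("enter", "event"), ("wear", "state"), ("serve", "event")]
white_hart_links = order_narrative(white_hart)
```

For the White Hart passage this yields wear OVERLAP enter and enter BEFORE serve. The same default gives the wrong answer for "Max fell. John pushed him.", which is why discourse relations are needed.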

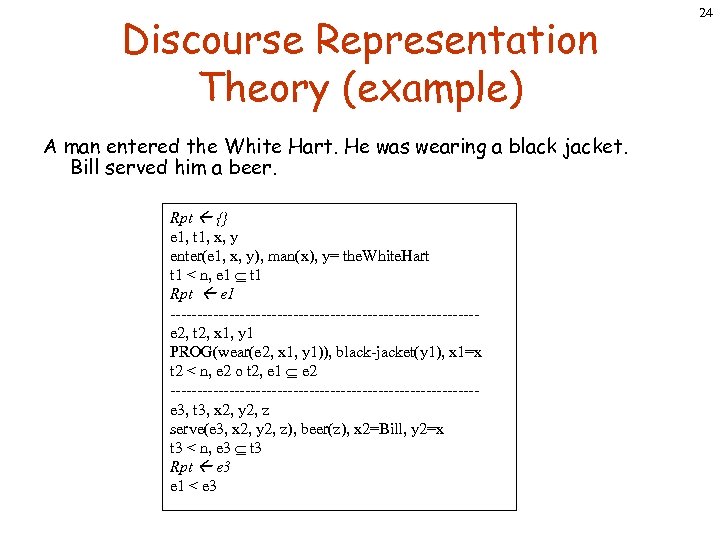

24 Discourse Representation Theory (example)
A man entered the White Hart. He was wearing a black jacket. Bill served him a beer.

Rpt := {}
e1, t1, x, y
enter(e1, x, y), man(x), y = theWhiteHart
t1 < n, e1 ⊆ t1
Rpt := e1
------------------------------
e2, t2, x1, y1
PROG(wear(e2, x1, y1)), black-jacket(y1), x1 = x
t2 < n, e2 ο t2, e1 ⊆ e2
------------------------------
e3, t3, x2, y2, z
serve(e3, x2, y2, z), beer(z), x2 = Bill, y2 = x
t3 < n, e3 ⊆ t3
Rpt := e3
e1 < e3

25 Overriding Defaults
- Lascarides and Asher (1993)*: temporal ordering is derived entirely from discourse relations (which link together DRSs, based on the SDRT formalism).
- Example
  - Max switched off the light. The room was pitch dark.
  - Default inference: OVERLAP
  - Use an inference rule that if the room is dark and the light was just switched off, the switching off caused the room to become dark.
  - Inference: AFTER
- Problem: requires large doses of world knowledge

*L&P 1993

26 Outline
- Introduction
- Linguistic Theories
- AI Theories
- Annotation Schemes
- Rule-based and machine-learning methods
- Challenges
- Links

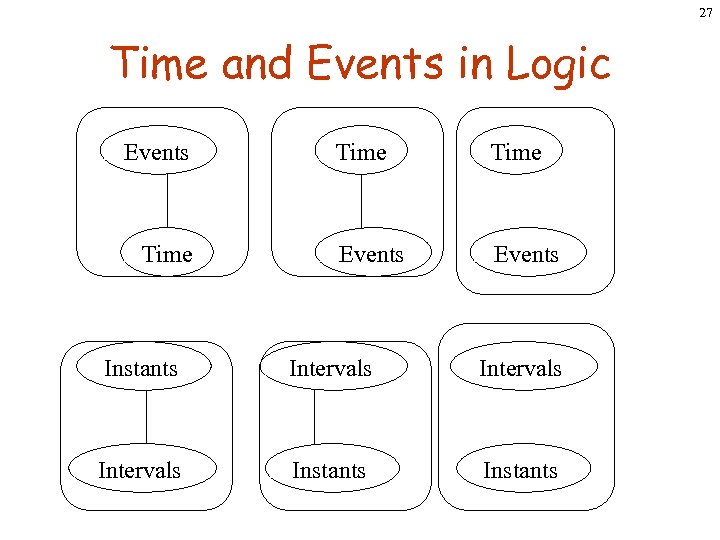

27 Time and Events in Logic Events Time Events Instants Intervals Instants

28 Instant Ontology
- Consider the event of John’s reading the book
- Decompose into an infinite set of infinitesimal instants
- Let T be a set of temporal instants.
- Let < (BEFORE) be a temporal ordering relation between instants
- Properties: irreflexive, antisymmetric, transitive, and complete
  - Antisymmetric => time has only one direction of movement
  - Irreflexive and Transitive => time is non-cyclical
  - Complete => < is a total ordering

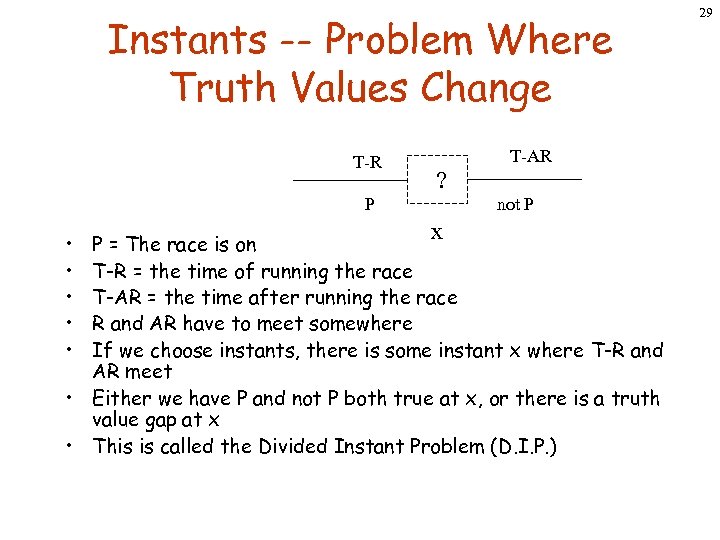

29 Instants -- Problem Where Truth Values Change
[Diagram: timeline with T-R (P) followed by T-AR (not P), meeting at an instant x marked “?”]
- P = The race is on
- T-R = the time of running the race
- T-AR = the time after running the race
- T-R and T-AR have to meet somewhere
- If we choose instants, there is some instant x where T-R and T-AR meet
- Either we have P and not P both true at x, or there is a truth value gap at x
- This is called the Divided Instant Problem (D.I.P.)

30 Ordering Relations on Intervals
- Unlike instants, where we have only <, we can have at least 3 ordering relations on intervals
  - Precedence <: I1 < I2 iff ∀t1 ∈ I1, ∀t2 ∈ I2, t1 < t2 (where < is defined over instants)
  - Temporal Overlap O: I1 O I2 iff I1 ∩ I2 ≠ ∅
  - Temporal Inclusion ⊆: I1 ⊆ I2 iff every instant in I1 is also in I2
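
These three relations translate directly into set operations if an interval is modeled as a set of instants. The sketch below assumes discrete integer instants purely for illustration; the function names are not from the slides.

```python
def precedes(i1, i2):
    """I1 < I2: every instant of I1 is before every instant of I2."""
    return all(t1 < t2 for t1 in i1 for t2 in i2)


def overlaps(i1, i2):
    """I1 O I2: the two intervals share at least one instant."""
    return bool(set(i1) & set(i2))


def included(i1, i2):
    """I1 is included in I2: every instant of I1 is also in I2."""
    return set(i1) <= set(i2)


# Toy intervals over integer instants (assumed for illustration).
morning = set(range(6, 12))
lunch = set(range(12, 14))
meeting = set(range(10, 13))
```

With these toy values, `morning` precedes `lunch`, `meeting` overlaps both, and neither contains the other.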

31 Instants versus Intervals
- Instants
  - We understand the idea of truth at an instant
  - In cases of continuous change, e.g., a tossed ball, we need a notion of a durationless event in order to explain the trajectory of the ball just before it falls
- Intervals
  - We often conceive of time as broken up in terms of events which have a certain duration, rather than as an (infinite) sequence of durationless instants.
  - Many verbs do not describe instantaneous events, e.g., has read, ripened
  - Duration expressions like yesterday afternoon aren’t construed as instants

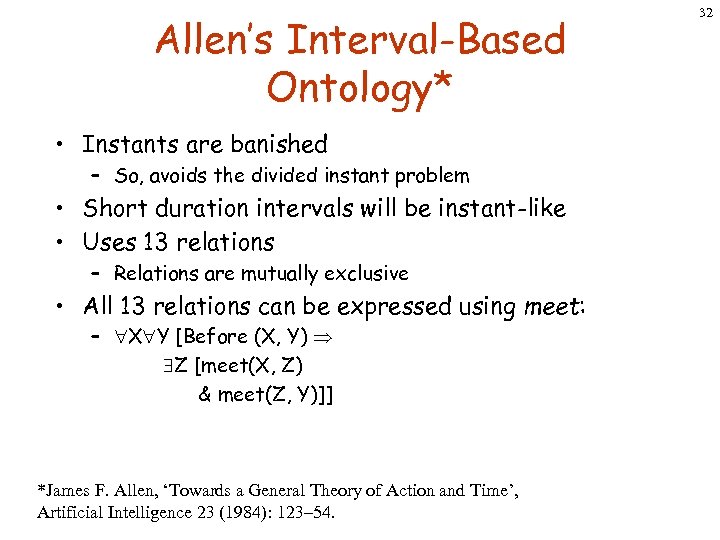

32 Allen’s Interval-Based Ontology*
- Instants are banished
  - So, avoids the divided instant problem
- Short duration intervals will be instant-like
- Uses 13 relations
  - Relations are mutually exclusive
- All 13 relations can be expressed using meet:
  - ∀X ∀Y [Before(X, Y) ≡ ∃Z [meet(X, Z) & meet(Z, Y)]]

*James F. Allen, ‘Towards a General Theory of Action and Time’, Artificial Intelligence 23 (1984): 123–54.

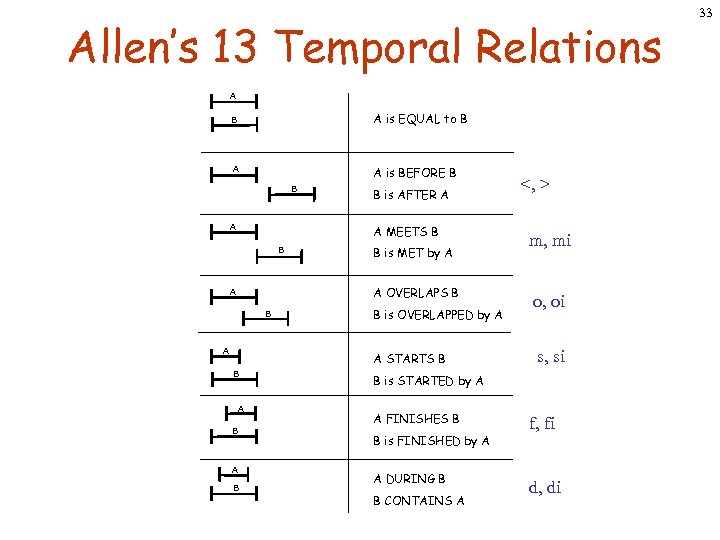

33 Allen’s 13 Temporal Relations
- A is EQUAL to B (=)
- A is BEFORE B; B is AFTER A (<, >)
- A MEETS B; B is MET by A (m, mi)
- A OVERLAPS B; B is OVERLAPPED by A (o, oi)
- A STARTS B; B is STARTED by A (s, si)
- A FINISHES B; B is FINISHED by A (f, fi)
- A DURING B; B CONTAINS A (d, di)
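
Given two intervals with known endpoints, exactly one of the 13 relations holds, so the relation can be computed by comparing endpoints. This is a sketch under the assumption that each interval is a `(start, end)` pair of numbers with `start < end`; Allen's own theory takes intervals as primitive and does not rely on endpoints.

```python
def allen(a, b):
    """Return the Allen relation symbol holding from interval a to interval b."""
    (a1, a2), (b1, b2) = a, b
    if a2 < b1:
        return "<"    # a before b
    if b2 < a1:
        return ">"    # a after b
    if a2 == b1:
        return "m"    # a meets b
    if b2 == a1:
        return "mi"   # a is met by b
    if a1 == b1 and a2 == b2:
        return "="
    if a1 == b1:
        return "s" if a2 < b2 else "si"
    if a2 == b2:
        return "f" if a1 > b1 else "fi"
    if b1 < a1 and a2 < b2:
        return "d"    # a during b
    if a1 < b1 and b2 < a2:
        return "di"   # a contains b
    # Remaining cases are proper overlaps with all four endpoints distinct.
    return "o" if a1 < b1 else "oi"
```

For the Holly story, a twisting interval that ends exactly when the running ends, such as `allen((5, 6), (1, 6))`, comes out as `f` (FINISHES).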

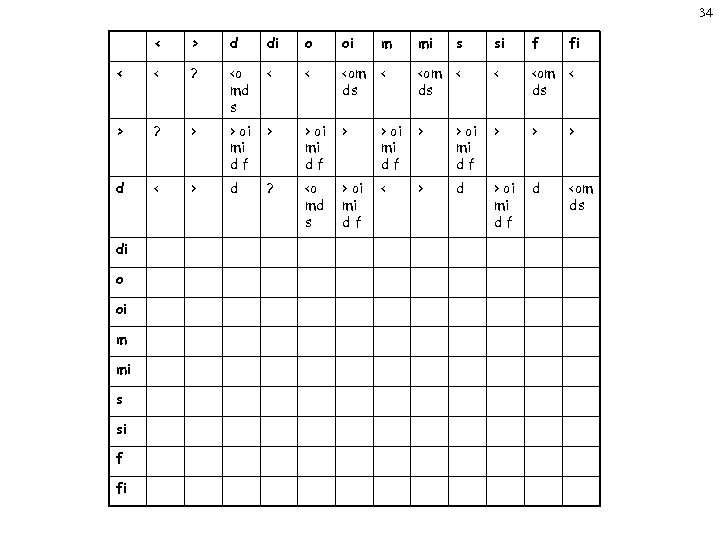

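34 [Table: composition table for Allen’s relations, with rows and columns <, >, d, di, o, oi, m, mi, s, si, f, fi; the first row (<) begins with the entries < and ?]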
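
A composition table over the relation symbols listed above can be derived by brute force: enumerate small concrete intervals and record which relations between A and C co-occur with a given pair of relations A to B and B to C. The sketch below assumes integer endpoints drawn from six points, which is enough because three intervals have at most six distinct endpoints; the helper names are illustrative, and this is not how closure tools are necessarily implemented.

```python
from itertools import combinations

POINTS = range(6)
INTERVALS = list(combinations(POINTS, 2))  # all (start, end) with start < end


def allen(a, b):
    """Allen relation symbol from interval a to interval b (endpoint-based)."""
    (a1, a2), (b1, b2) = a, b
    if a2 < b1:
        return "<"
    if b2 < a1:
        return ">"
    if a2 == b1:
        return "m"
    if b2 == a1:
        return "mi"
    if a1 == b1 and a2 == b2:
        return "="
    if a1 == b1:
        return "s" if a2 < b2 else "si"
    if a2 == b2:
        return "f" if a1 > b1 else "fi"
    if b1 < a1 and a2 < b2:
        return "d"
    if a1 < b1 and b2 < a2:
        return "di"
    return "o" if a1 < b1 else "oi"


def compose(r1, r2):
    """All relations possible between A and C when A r1 B and B r2 C."""
    return {
        allen(a, c)
        for a in INTERVALS
        for b in INTERVALS
        for c in INTERVALS
        if allen(a, b) == r1 and allen(b, c) == r2
    }
```

Under these assumptions, `compose("<", "<")` is just `{"<"}`, while `compose("<", ">")` contains all 13 relations, which is consistent with a "?" entry meaning that nothing can be concluded.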

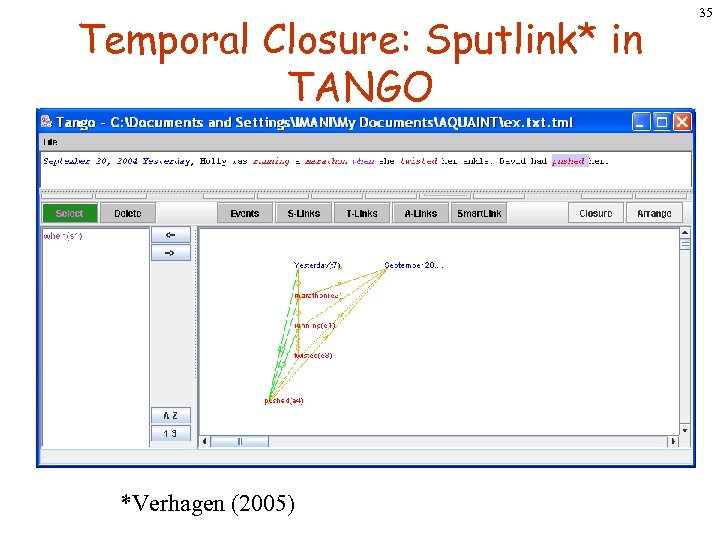

35 Temporal Closure: Sputlink* in TANGO

*Verhagen (2005)

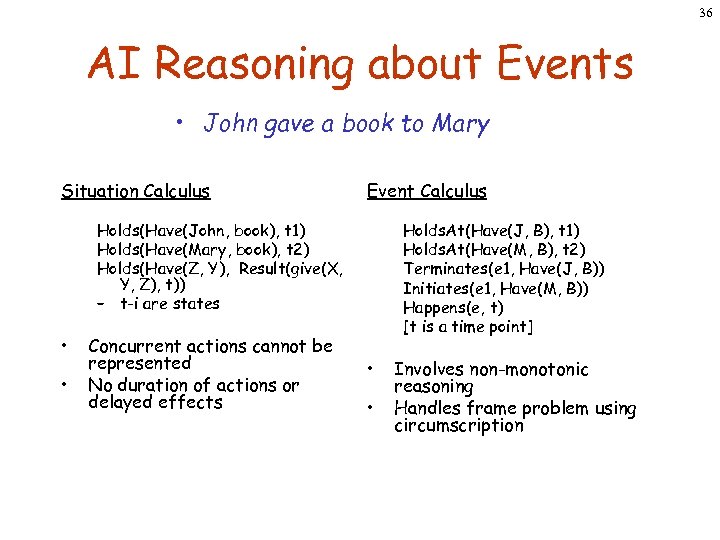

36 AI Reasoning about Events
- John gave a book to Mary
- Situation Calculus
  - Holds(Have(John, book), t1)
  - Holds(Have(Mary, book), t2)
  - Holds(Have(Z, Y), Result(give(X, Y, Z), t))
  - the t-i are states
  - Concurrent actions cannot be represented
  - No duration of actions or delayed effects
- Event Calculus
  - HoldsAt(Have(J, B), t1)
  - HoldsAt(Have(M, B), t2)
  - Terminates(e1, Have(J, B))
  - Initiates(e1, Have(M, B))
  - Happens(e, t) [t is a time point]
  - Involves non-monotonic reasoning
  - Handles frame problem using circumscription
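
The Event Calculus side of this comparison can be illustrated with a very small simulation. This is a simplified sketch, not the circumscription-based formalism: it assumes discrete integer time points, a fixed narrative of events, and a plain loop that lets fluents persist until some event terminates them. The dictionaries and the `holds_at` function are names invented here.

```python
# Effects of event types on fluents (assumed toy domain).
INITIATES = {"give(John,Mary,book)": {"Have(Mary,book)"}}
TERMINATES = {"give(John,Mary,book)": {"Have(John,book)"}}

# Narrative: which events happen at which time points.
HAPPENS = [("give(John,Mary,book)", 1)]

# Fluents that hold at the start of the narrative.
INITIALLY = {"Have(John,book)"}


def holds_at(fluent, t):
    """True if `fluent` holds at time t, given events strictly before t."""
    holds = fluent in INITIALLY
    for event, when in sorted(HAPPENS, key=lambda pair: pair[1]):
        if when < t:
            if fluent in INITIATES.get(event, ()):
                holds = True
            if fluent in TERMINATES.get(event, ()):
                holds = False
    # Fluents untouched by any event keep their value (inertia).
    return holds
```

Here John still has the book at time 1, the moment of giving, and Mary has it from time 2 onward; persistence of unaffected fluents is hard-coded rather than derived by non-monotonic reasoning.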

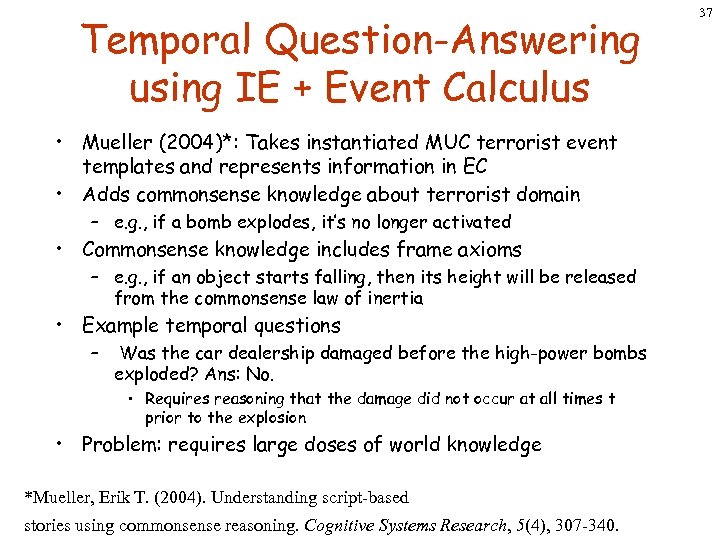

37 Temporal Question-Answering using IE + Event Calculus
- Mueller (2004)*: Takes instantiated MUC terrorist event templates and represents information in EC
- Adds commonsense knowledge about terrorist domain
  - e.g., if a bomb explodes, it’s no longer activated
- Commonsense knowledge includes frame axioms
  - e.g., if an object starts falling, then its height will be released from the commonsense law of inertia
- Example temporal questions
  - Was the car dealership damaged before the high-power bombs exploded? Ans: No.
  - Requires reasoning that the damage did not occur at all times t prior to the explosion
- Problem: requires large doses of world knowledge

*Mueller, Erik T. (2004). Understanding script-based stories using commonsense reasoning. Cognitive Systems Research, 5(4), 307-340.

38 Temporal Question Answering using IE + Temporal Databases
- In NLITDB, the semantic relation between a question event and the adverbial it combines with is inferred by a variety of inference rules.
- State + ‘point’ adverbial
  - Which flight was queueing for runway 2 at 5:00 pm?
  - state coerced to an achievement, viewed as holding at the time specified by the adverbial.
- Activity + point adverbial
  - can mean that the activity holds at that time, or that the activity starts at that time, e.g., Which flight queued for runway 2 at 5:00 pm?
- An accomplishment may indicate inception or termination
  - Which flight taxied to gate 4 at 5:00 pm? can mean the taxiing starts or ends at 5 pm.

39 Outline
- Introduction
- Linguistic Theories
- AI Theories
- Annotation Schemes
- Rule-based and machine-learning methods
- Challenges
- Links

40 IE Methodology [Diagram: Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Learned Rules, Rule Apply]

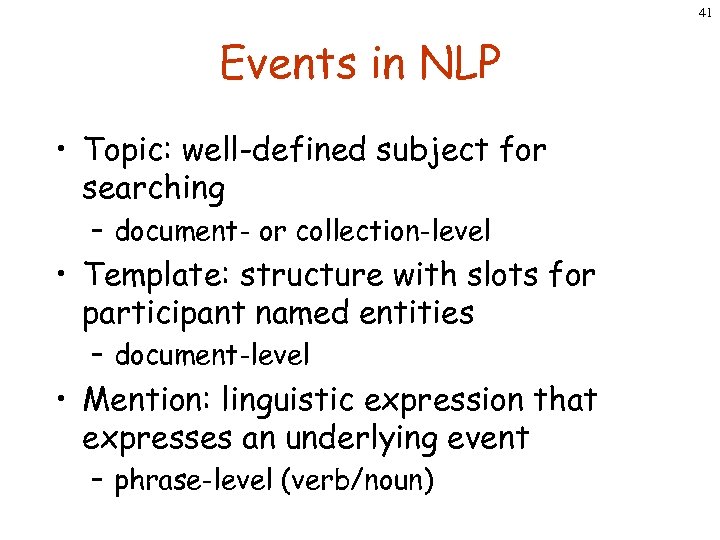

41 Events in NLP • Topic: well-defined subject for searching – document- or collection-level • Template: structure with slots for participant named entities – document-level • Mention: linguistic expression that expresses an underlying event – phrase-level (verb/noun)

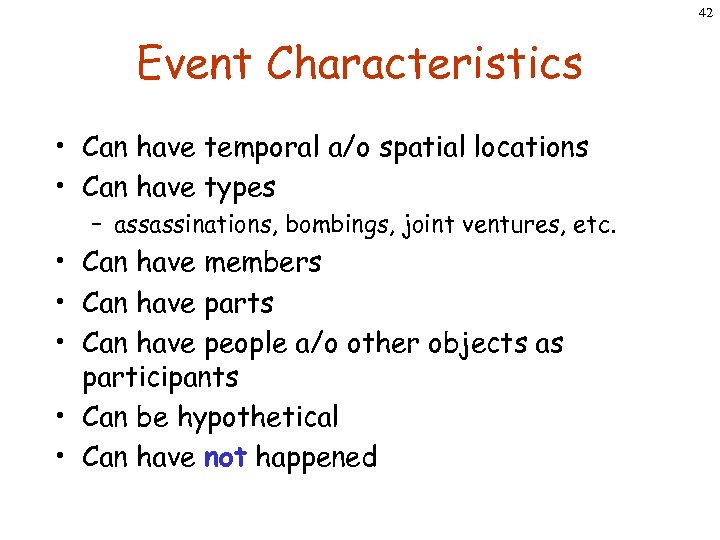

42 Event Characteristics • Can have temporal and/or spatial locations • Can have types – assassinations, bombings, joint ventures, etc. • Can have members • Can have parts • Can have people and/or other objects as participants • Can be hypothetical • May not have happened

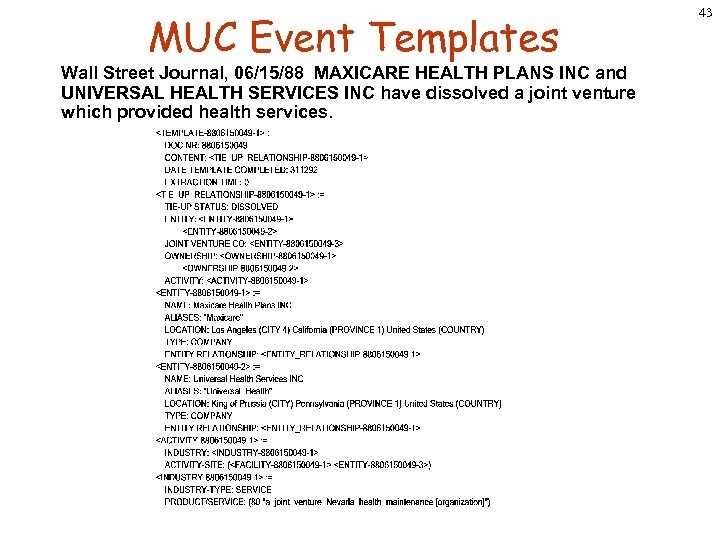

MUC Event Templates Wall Street Journal, 06/15/88 MAXICARE HEALTH PLANS INC and UNIVERSAL HEALTH SERVICES INC have dissolved a joint venture which provided health services. 43

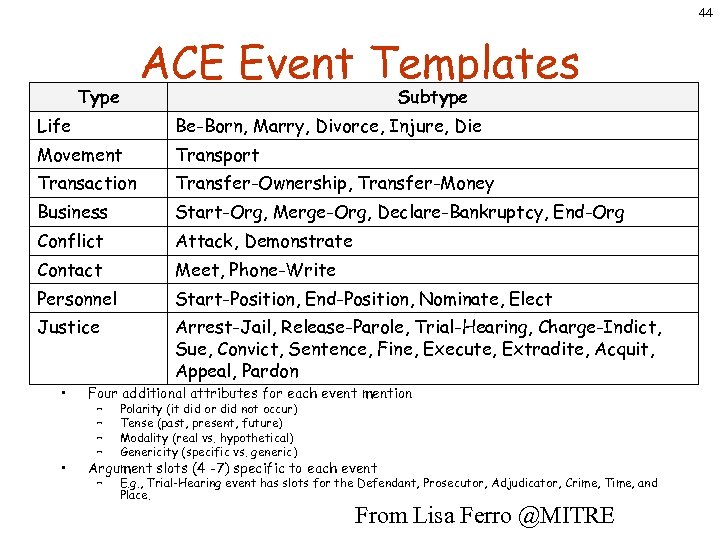

44 ACE Event Templates

| Type | Subtype |
| --- | --- |
| Life | Be-Born, Marry, Divorce, Injure, Die |
| Movement | Transport |
| Transaction | Transfer-Ownership, Transfer-Money |
| Business | Start-Org, Merge-Org, Declare-Bankruptcy, End-Org |
| Conflict | Attack, Demonstrate |
| Contact | Meet, Phone-Write |
| Personnel | Start-Position, End-Position, Nominate, Elect |
| Justice | Arrest-Jail, Release-Parole, Trial-Hearing, Charge-Indict, Sue, Convict, Sentence, Fine, Execute, Extradite, Acquit, Appeal, Pardon |

• Argument slots (4-7) specific to each event – E.g., Trial-Hearing event has slots for the Defendant, Prosecutor, Adjudicator, Crime, Time, and Place. • Four additional attributes for each event mention – Polarity (it did or did not occur) – Tense (past, present, future) – Modality (real vs. hypothetical) – Genericity (specific vs. generic) From Lisa Ferro @MITRE

45 Mention-Level Events • Event expressions: – tensed verbs: has left, was captured, will resign – stative adjectives: sunken, stalled, on board – event nominals: merger, Military Operation, war • Dependencies between events and times: – Anchoring: John left on Monday. – Orderings: The party happened after midnight. – Embedding: John said Mary left.

TIMEX2 (TIDES/ACE) Annotation Scheme • Time Points

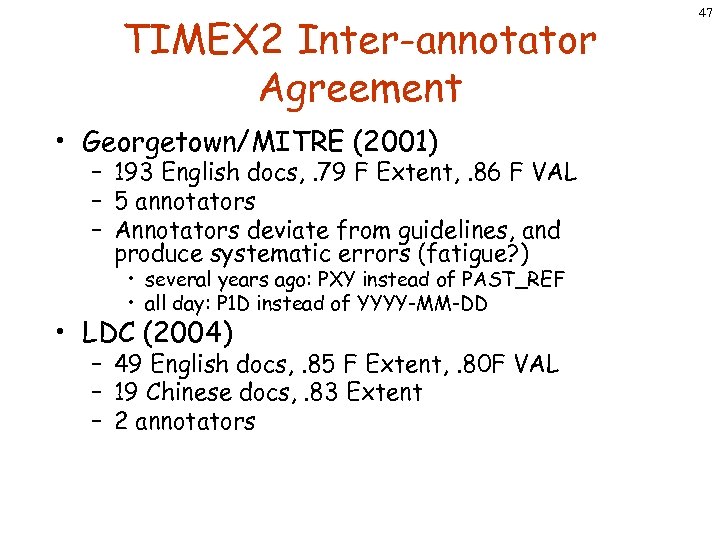

TIMEX2 Inter-annotator Agreement • Georgetown/MITRE (2001) – 193 English docs, .79 F Extent, .86 F VAL – 5 annotators – Annotators deviate from guidelines and produce systematic errors (fatigue?), e.g., several years ago: PXY instead of PAST_REF; all day: P1D instead of YYYY-MM-DD • LDC (2004) – 49 English docs, .85 F Extent, .80 F VAL – 19 Chinese docs, .83 Extent – 2 annotators 47
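The "F Extent" figures above are F-measures comparing one annotation against another. A minimal sketch of that computation follows. It assumes extents are compared by exact (start, end) character span, which may be stricter than the scorer actually used for these numbers. The function name and the sample spans are made up for illustration.

```python
from typing import Set, Tuple

Span = Tuple[int, int]  # (start_offset, end_offset) of a tagged time expression


def extent_f_measure(key: Set[Span], response: Set[Span]) -> float:
    """Balanced F-measure over extents, counting only exact span matches."""
    matched = len(key & response)
    if matched == 0:
        return 0.0
    precision = matched / len(response)  # share of response spans found in key
    recall = matched / len(key)          # share of key spans found in response
    return 2 * precision * recall / (precision + recall)


# Hypothetical spans marked by two annotators on the same document.
annotator_a = {(0, 9), (25, 31), (40, 52)}
annotator_b = {(0, 9), (25, 31), (60, 66), (70, 75)}

score = extent_f_measure(annotator_a, annotator_b)
print(round(score, 3))
```

Treating one annotator as the key and the other as the response gives the same F either way, since precision and recall simply swap.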

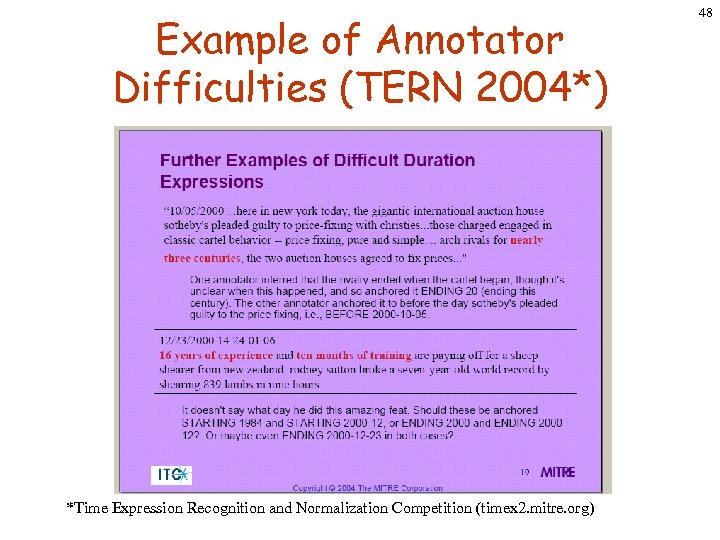

Example of Annotator Difficulties (TERN 2004*) *Time Expression Recognition and Normalization Competition (timex2.mitre.org) 48

49 TIMEX2 – A Mature Standard • Extensively debugged • Detailed guidelines for English and Chinese • Evaluated for English, Arabic, Chinese, Korean, Spanish, French, Swedish, and Hindi • Applied to news, scheduling dialogues, other types of data • Corpora available through ACE, MITRE

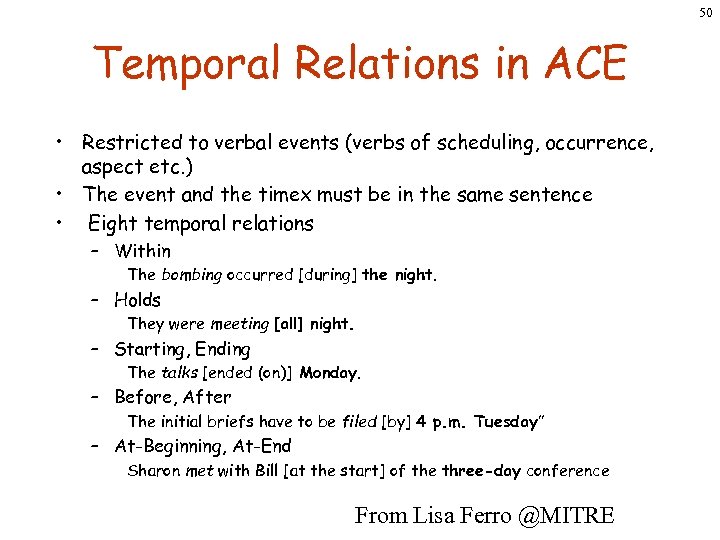

50 Temporal Relations in ACE • Restricted to verbal events (verbs of scheduling, occurrence, aspect, etc.) • The event and the timex must be in the same sentence • Eight temporal relations – Within: The bombing occurred [during] the night. – Holds: They were meeting [all] night. – Starting, Ending: The talks [ended (on)] Monday. – Before, After: The initial briefs have to be filed [by] 4 p.m. Tuesday. – At-Beginning, At-End: Sharon met with Bill [at the start] of the three-day conference. From Lisa Ferro @MITRE

51 Outline • Introduction • Linguistic Theories • AI Theories • Annotation Schemes • Rule-based and machine-learning methods • Challenges • Links

52 TimeML Annotation Scheme • A proposed metadata standard for markup of events, their temporal anchoring, and how they are related to each other • Marks up mention-level events, time expressions, and links between events (and between events and times) • Developer: James Pustejovsky (& co.)

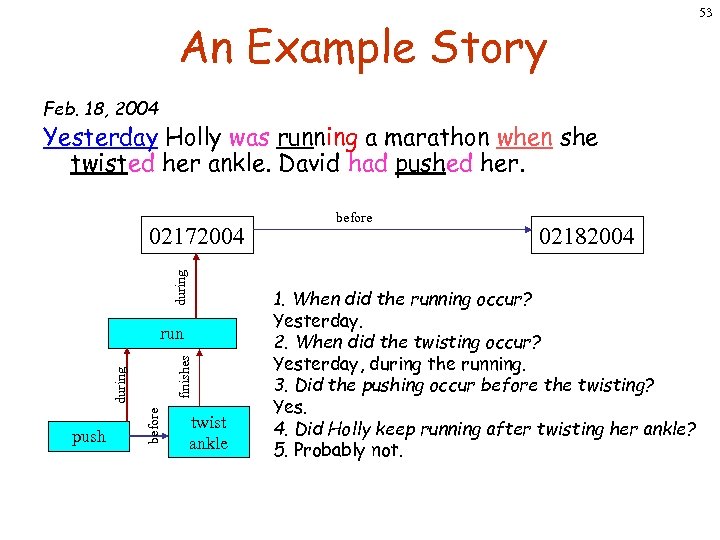

An Example Story • Feb. 18, 2004: Yesterday Holly was running a marathon when she twisted her ankle. David had pushed her. [Diagram: events push, run, twist ankle, and finishes, linked by before and during relations to the dates 02172004 and 02182004] 1. When did the running occur? Yesterday. 2. When did the twisting occur? Yesterday, during the running. 3. Did the pushing occur before the twisting? Yes. 4. Did Holly keep running after twisting her ankle? Probably not. 53
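Questions like these can be answered by closing a small graph of temporal relations under a few inference rules. The sketch below is one way to do that, not the method used in the tutorial. The input facts are one reading of the flattened diagram and should be treated as assumptions, and `temporal_closure` is a hypothetical helper that handles only BEFORE and DURING.

```python
from itertools import product
from typing import Set, Tuple

Pair = Tuple[str, str]


def temporal_closure(before: Set[Pair], during: Set[Pair]):
    """Close BEFORE/DURING facts under four simple inference rules."""
    before, during = set(before), set(during)
    changed = True
    while changed:
        new_before, new_during = set(), set()
        for (a, b), (c, d) in product(before, before):
            if b == c:
                new_before.add((a, d))   # a < b and b < d  =>  a < d
        for (a, b), (c, d) in product(during, during):
            if b == c:
                new_during.add((a, d))   # a in b and b in d  =>  a in d
        for (a, b), (c, d) in product(during, before):
            if b == c:
                new_before.add((a, d))   # a in b and b < d  =>  a < d
            if b == d:
                new_before.add((c, a))   # c < b and a in b  =>  c < a
        changed = not (new_before <= before and new_during <= during)
        before |= new_before
        during |= new_during
    return before, during


# Assumed reading of the slide's diagram.
during_facts = {("run", "2004-02-17"), ("twist", "run")}
before_facts = {("push", "twist"), ("2004-02-17", "2004-02-18")}

before_closed, during_closed = temporal_closure(before_facts, during_facts)
print(("twist", "2004-02-17") in during_closed)  # did the twisting occur yesterday?
print(("push", "twist") in before_closed)        # did the pushing precede the twisting?
```

Question 4 ("Probably not") is out of reach for this kind of closure: it depends on world knowledge about twisted ankles rather than on the stated relations.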

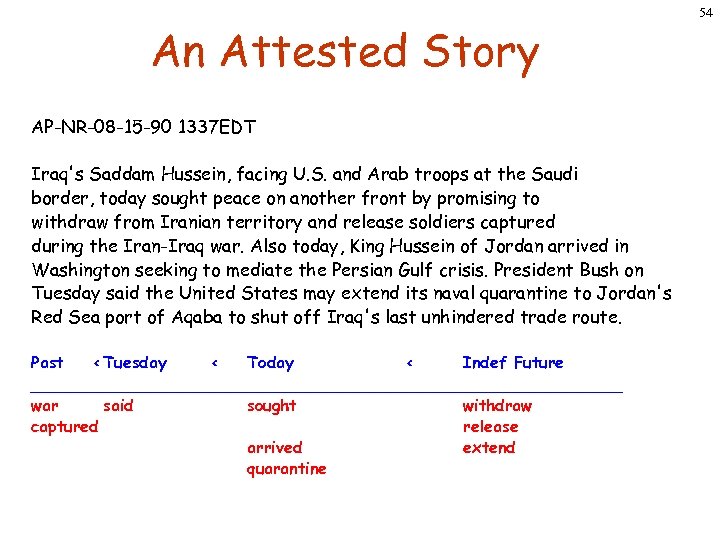

An Attested Story • AP-NR-08-15-90 1337 EDT Iraq's Saddam Hussein, facing U.S. and Arab troops at the Saudi border, today sought peace on another front by promising to withdraw from Iranian territory and release soldiers captured during the Iran-Iraq war. Also today, King Hussein of Jordan arrived in Washington seeking to mediate the Persian Gulf crisis. President Bush on Tuesday said the United States may extend its naval quarantine to Jordan's Red Sea port of Aqaba to shut off Iraq's last unhindered trade route. • Timeline: Past < Tuesday < Today < Indef Future – Past: war, captured – Tuesday: said – Today: sought, arrived – Indef Future: withdraw, release, extend, quarantine 54

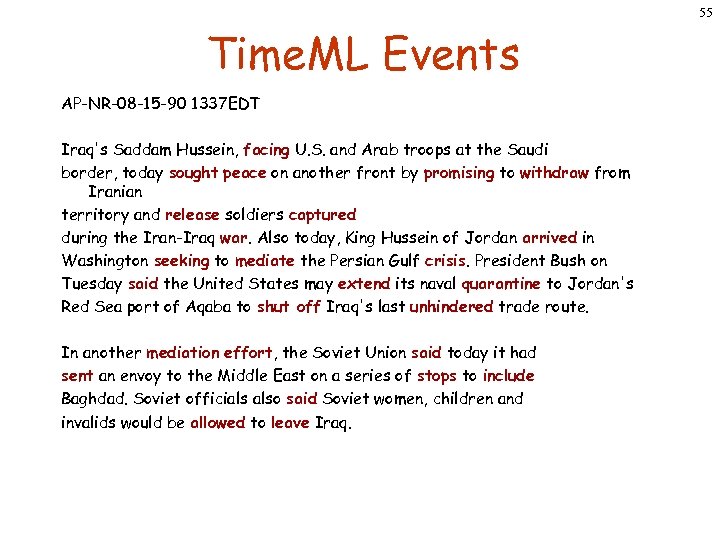

55 TimeML Events • AP-NR-08-15-90 1337 EDT Iraq's Saddam Hussein, facing U.S. and Arab troops at the Saudi border, today sought peace on another front by promising to withdraw from Iranian territory and release soldiers captured during the Iran-Iraq war. Also today, King Hussein of Jordan arrived in Washington seeking to mediate the Persian Gulf crisis. President Bush on Tuesday said the United States may extend its naval quarantine to Jordan's Red Sea port of Aqaba to shut off Iraq's last unhindered trade route. In another mediation effort, the Soviet Union said today it had sent an envoy to the Middle East on a series of stops to include Baghdad. Soviet officials also said Soviet women, children and invalids would be allowed to leave Iraq.

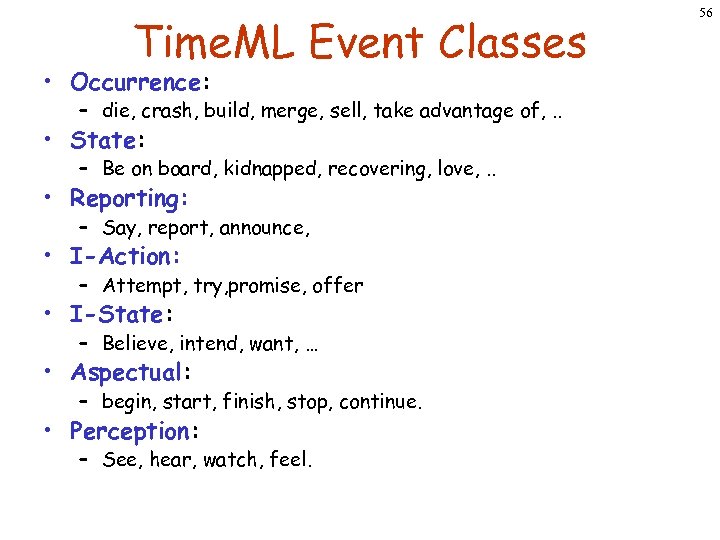

TimeML Event Classes • Occurrence: die, crash, build, merge, sell, take advantage of, … • State: be on board, kidnapped, recovering, love, … • Reporting: say, report, announce, … • I-Action: attempt, try, promise, offer • I-State: believe, intend, want, … • Aspectual: begin, start, finish, stop, continue • Perception: see, hear, watch, feel 56

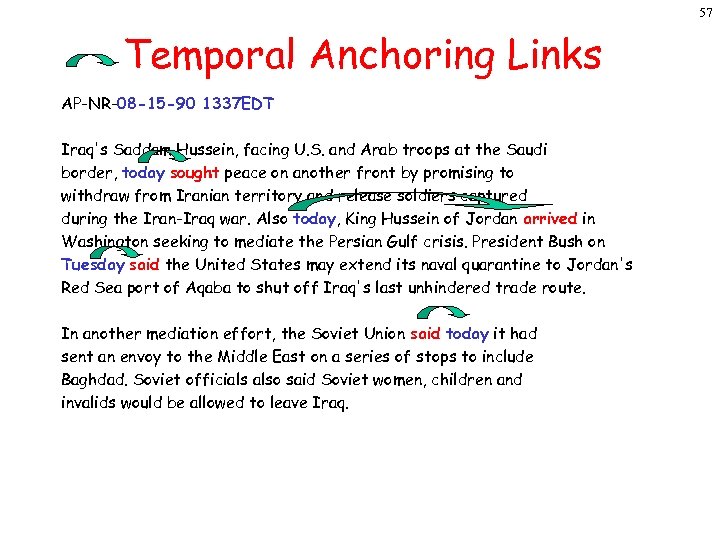

57 Temporal Anchoring Links • AP-NR-08-15-90 1337 EDT Iraq's Saddam Hussein, facing U.S. and Arab troops at the Saudi border, today sought peace on another front by promising to withdraw from Iranian territory and release soldiers captured during the Iran-Iraq war. Also today, King Hussein of Jordan arrived in Washington seeking to mediate the Persian Gulf crisis. President Bush on Tuesday said the United States may extend its naval quarantine to Jordan's Red Sea port of Aqaba to shut off Iraq's last unhindered trade route. In another mediation effort, the Soviet Union said today it had sent an envoy to the Middle East on a series of stops to include Baghdad. Soviet officials also said Soviet women, children and invalids would be allowed to leave Iraq.

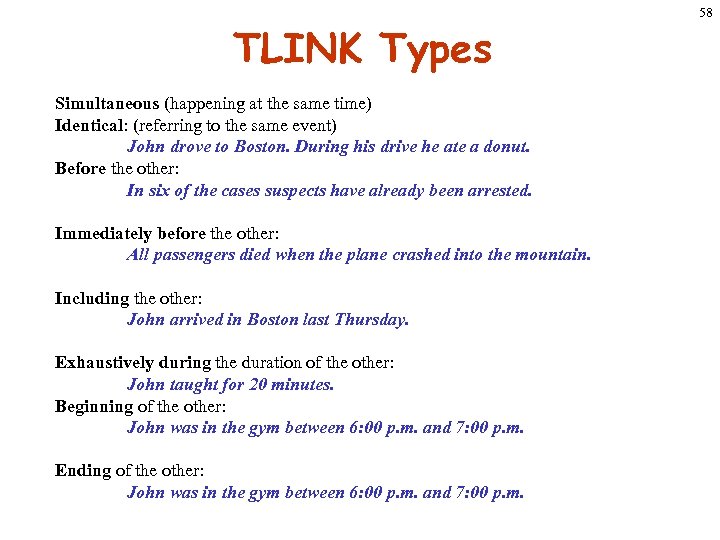

TLINK Types • Simultaneous (happening at the same time) • Identical (referring to the same event): John drove to Boston. During his drive he ate a donut. • Before the other: In six of the cases suspects have already been arrested. • Immediately before the other: All passengers died when the plane crashed into the mountain. • Including the other: John arrived in Boston last Thursday. • Exhaustively during the duration of the other: John taught for 20 minutes. • Beginning of the other: John was in the gym between 6:00 p.m. and 7:00 p.m. • Ending of the other: John was in the gym between 6:00 p.m. and 7:00 p.m. 58

TLINK Example • John taught 20 minutes every Monday.

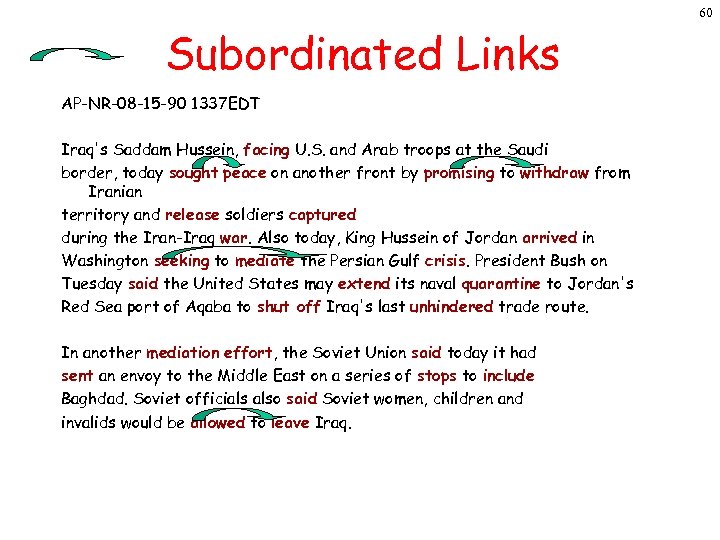

60 Subordinated Links • AP-NR-08-15-90 1337 EDT Iraq's Saddam Hussein, facing U.S. and Arab troops at the Saudi border, today sought peace on another front by promising to withdraw from Iranian territory and release soldiers captured during the Iran-Iraq war. Also today, King Hussein of Jordan arrived in Washington seeking to mediate the Persian Gulf crisis. President Bush on Tuesday said the United States may extend its naval quarantine to Jordan's Red Sea port of Aqaba to shut off Iraq's last unhindered trade route. In another mediation effort, the Soviet Union said today it had sent an envoy to the Middle East on a series of stops to include Baghdad. Soviet officials also said Soviet women, children and invalids would be allowed to leave Iraq.

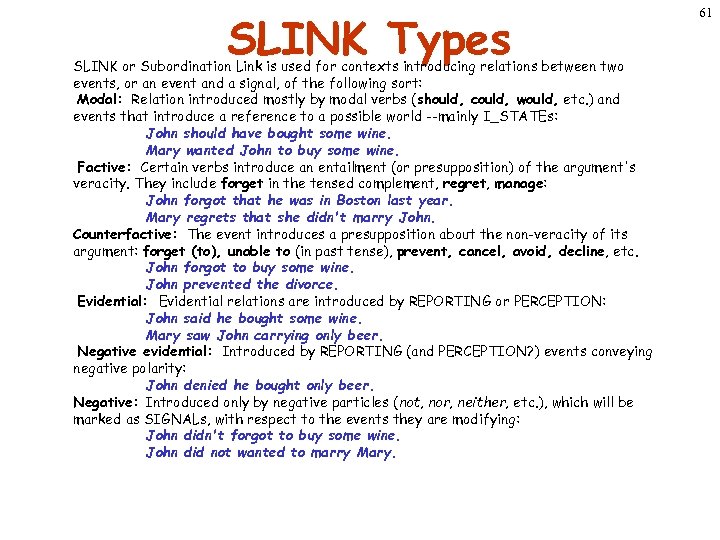

SLINK Types • SLINK (Subordination Link) is used for contexts introducing relations between two events, or an event and a signal, of the following sort: • Modal: Relation introduced mostly by modal verbs (should, could, would, etc.) and events that introduce a reference to a possible world, mainly I_STATEs: John should have bought some wine. Mary wanted John to buy some wine. • Factive: Certain verbs introduce an entailment (or presupposition) of the argument's veracity. They include forget in the tensed complement, regret, manage: John forgot that he was in Boston last year. Mary regrets that she didn't marry John. • Counterfactive: The event introduces a presupposition about the non-veracity of its argument: forget (to), unable to (in past tense), prevent, cancel, avoid, decline, etc. John forgot to buy some wine. John prevented the divorce. • Evidential: Evidential relations are introduced by REPORTING or PERCEPTION events: John said he bought some wine. Mary saw John carrying only beer. • Negative evidential: Introduced by REPORTING (and PERCEPTION?) events conveying negative polarity: John denied he bought only beer. • Negative: Introduced only by negative particles (not, nor, neither, etc.), which will be marked as SIGNALs, with respect to the events they are modifying: John didn't forget to buy some wine. John did not want to marry Mary. 61

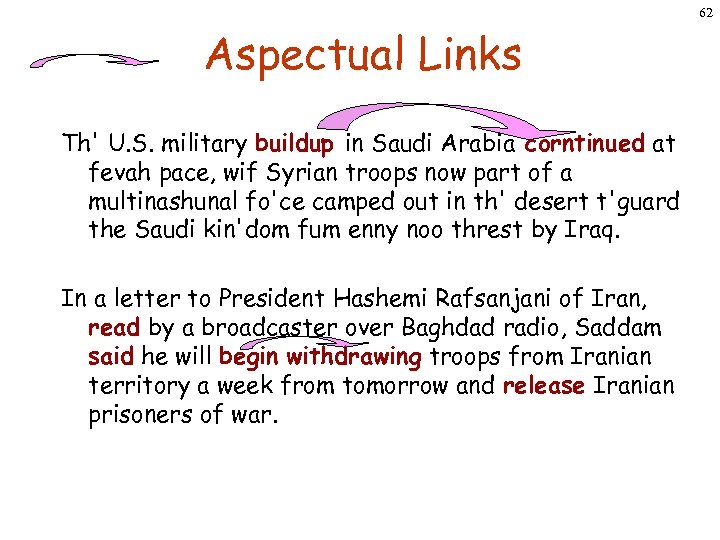

62 Aspectual Links • The U.S. military buildup in Saudi Arabia continued at fever pace, with Syrian troops now part of a multinational force camped out in the desert to guard the Saudi kingdom from any new threat by Iraq. In a letter to President Hashemi Rafsanjani of Iran, read by a broadcaster over Baghdad radio, Saddam said he will begin withdrawing troops from Iranian territory a week from tomorrow and release Iranian prisoners of war.

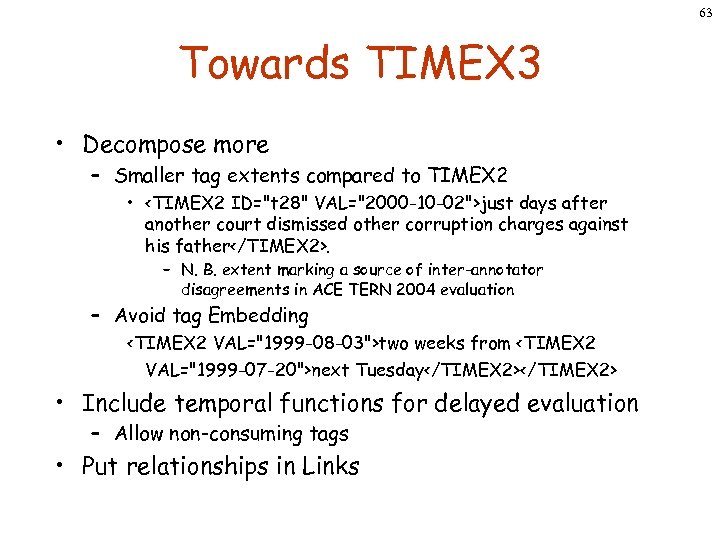

63 Towards TIMEX3 • Decompose more – Smaller tag extents compared to TIMEX2

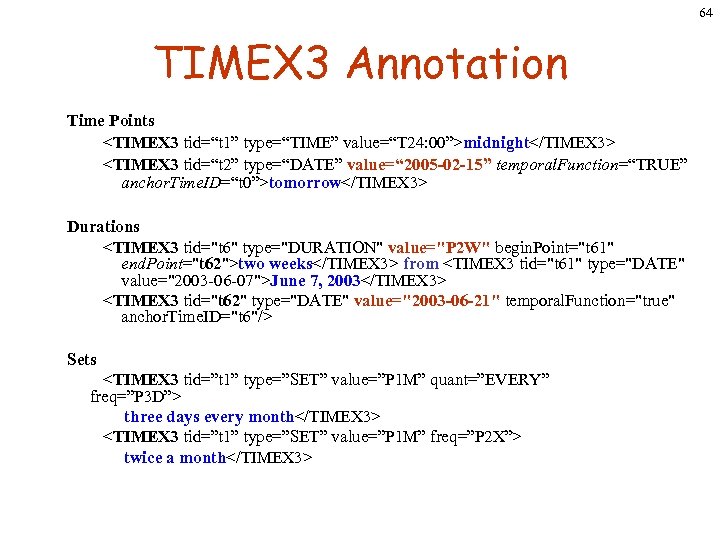

64 TIMEX3 Annotation • Time Points

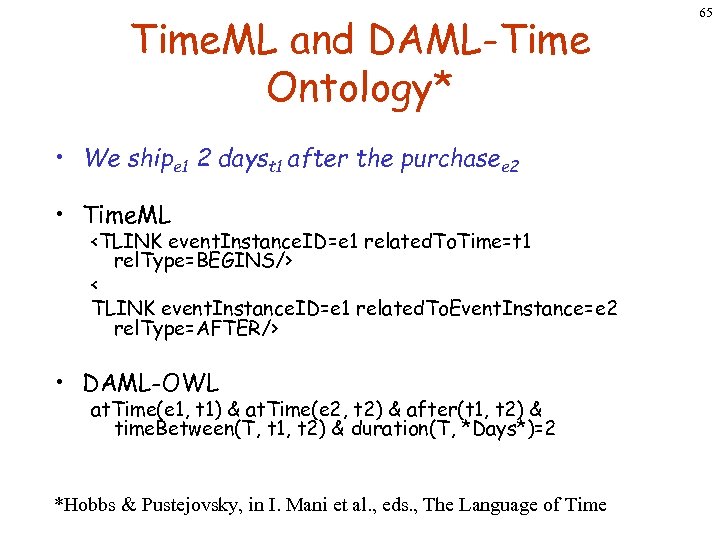

TimeML and DAML-Time Ontology* • We ship[e1] 2 days[t1] after the purchase[e2] • TimeML

66 Outline • Introduction • Linguistic Theories • AI Theories • Annotation Schemes • Rule-based and machine-learning methods • Challenges • Links

67 IE Methodology [Diagram: Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Learned Rules, Rule Apply]

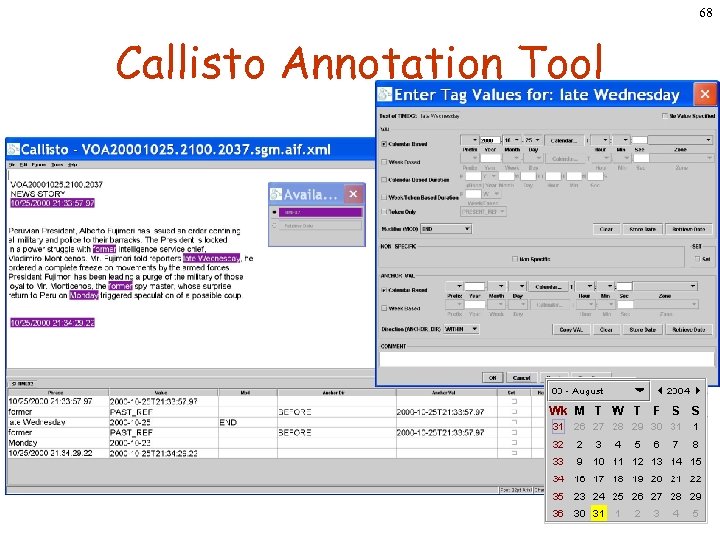

68 Callisto Annotation Tool

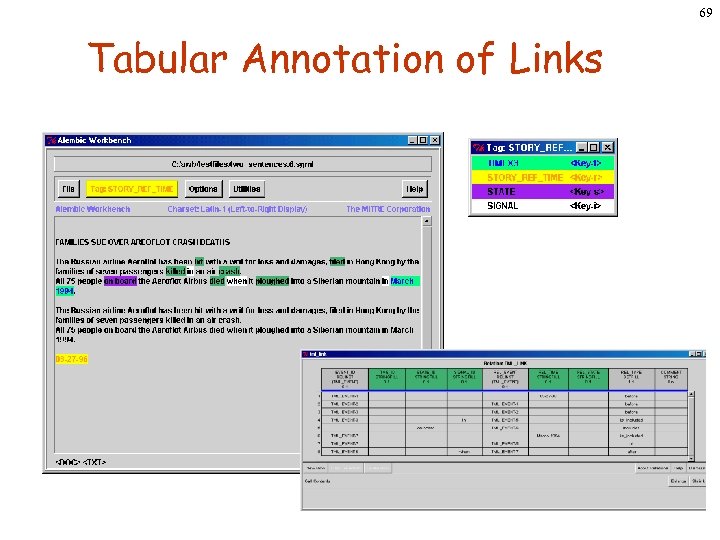

69 Tabular Annotation of Links

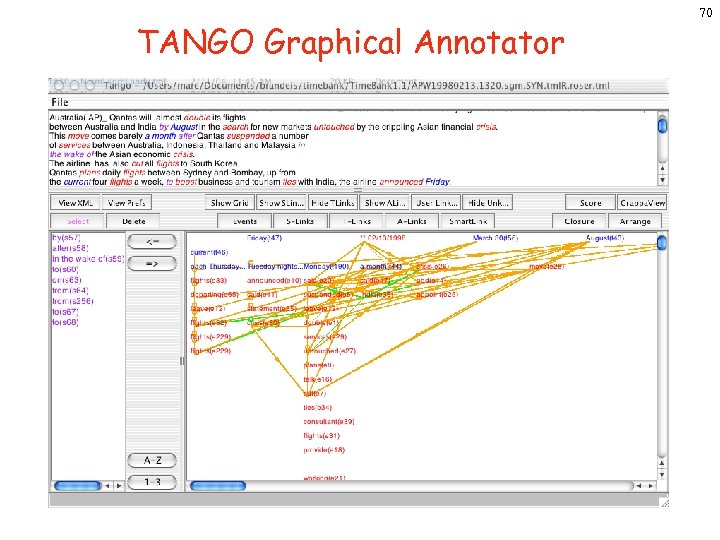

TANGO Graphical Annotator 70

71 Outline • Introduction • Linguistic Theories • AI Theories • Annotation Schemes • Rule-based and machine-learning methods • Challenges • Links

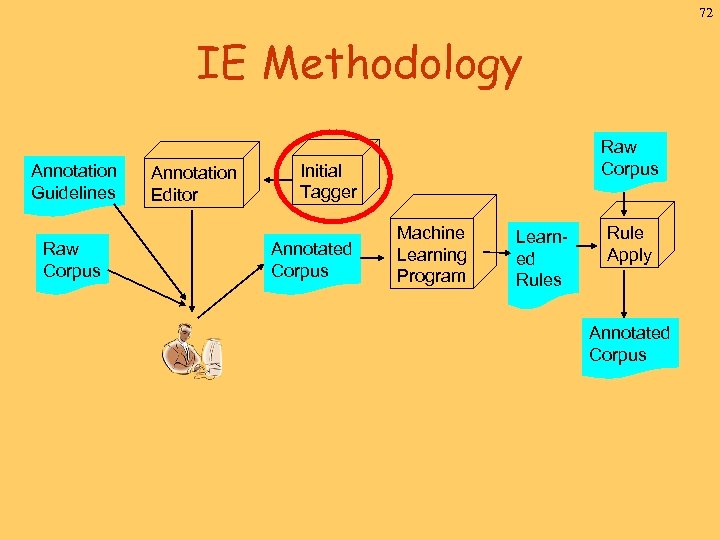

72 IE Methodology [Diagram: Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Learned Rules, Rule Apply]

73 TIMEX2/3 Extraction • Accuracy – Best systems: TIMEX2: .95 F Extent, .8X F VAL (TERN* 2004 English) – GUTime: .85 F Extent, .82 F VAL (TERN 2004 training data, English) – KTX: .87 F Extent, .86 F VAL (100 Korean documents) • Machine Learning – Tagging Extent: easily trained – Normalizing Values: harder to train
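The split between tagging an extent and normalizing its value can be seen in a toy rule-based tagger. The sketch below is not GUTime or any system named above. It covers only a handful of expressions, and its treatment of bare weekday names (resolved to the most recent such day on or before the document date) is a simplifying assumption that suits past-tense news text only.

```python
import re
from datetime import date, timedelta
from typing import List, Tuple

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]
PATTERN = re.compile(
    r"\b(today|yesterday|tomorrow|" + "|".join(WEEKDAYS) + r")\b",
    re.IGNORECASE,
)


def tag_timexes(text: str, doc_date: date) -> List[Tuple[int, int, str, str]]:
    """Return (start, end, surface text, ISO value) for each expression found."""
    results = []
    for match in PATTERN.finditer(text):          # extent: a plain pattern match
        word = match.group(1).lower()
        if word == "today":                       # value: needs the document date
            value = doc_date
        elif word == "yesterday":
            value = doc_date - timedelta(days=1)
        elif word == "tomorrow":
            value = doc_date + timedelta(days=1)
        else:
            # Assumption: a bare weekday refers to the latest such day
            # on or before the document date.
            days_back = (doc_date.weekday() - WEEKDAYS.index(word)) % 7
            value = doc_date - timedelta(days=days_back)
        results.append((match.start(), match.end(), match.group(0),
                        value.isoformat()))
    return results


story = ("Also today, King Hussein of Jordan arrived in Washington. "
         "President Bush on Tuesday said the United States may extend "
         "its naval quarantine.")
tagged = tag_timexes(story, date(1990, 8, 15))
print([(surface, value) for _, _, surface, value in tagged])
```

Finding the extent takes one regular expression, while the value depends on the document date and on a guess about direction, which fits the slide's remark that normalization is the harder part to learn.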

74 TimeML Event Extraction • Easier than MUC template events (those were .6 F) • Part-of-speech tagging to find verbs • Lexical patterns to detect tense and lexical and grammatical aspect • Syntactic rules to determine subordination relations • Recognition and disambiguation of event nominals, e.g., war, building, construction, etc. • Evita (Brandeis): – 0.8 F on verbal events (overgenerates generic events, which weren’t marked in TimeBank) – 0.64 F on event nominals (WordNet-derived, disambiguated via SemCor training)

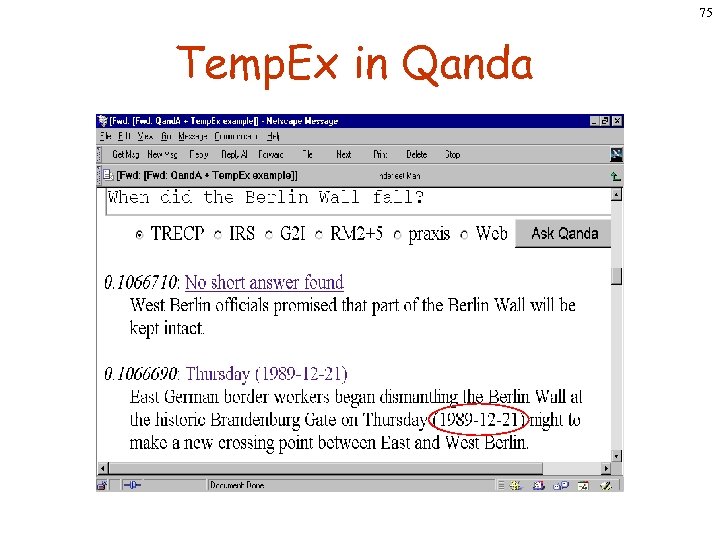

75 TempEx in Qanda

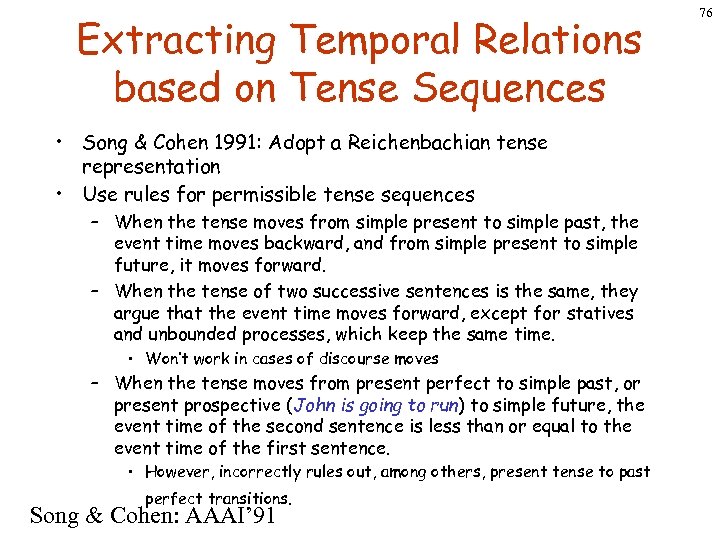

Extracting Temporal Relations based on Tense Sequences • Song & Cohen 1991: Adopt a Reichenbachian tense representation • Use rules for permissible tense sequences – When the tense moves from simple present to simple past, the event time moves backward, and from simple present to simple future, it moves forward. – When the tense of two successive sentences is the same, they argue that the event time moves forward, except for statives and unbounded processes, which keep the same time. • Won’t work in cases of discourse moves – When the tense moves from present perfect to simple past, or present prospective (John is going to run) to simple future, the event time of the second sentence is less than or equal to the event time of the first sentence. • However, the approach incorrectly rules out, among others, present tense to past perfect transitions. Song & Cohen: AAAI’91 76
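The tense-sequence rules summarized above fit in a small lookup. The sketch encodes only the transitions stated on the slide. The tense labels, the `next_is_stative` flag (which assumes the stative exception is decided by the second sentence), and the use of `None` for an unlicensed transition are illustrative assumptions, not Song & Cohen's own formulation.

```python
from typing import Optional

# Transitions between different tenses that the slide lists explicitly.
TENSE_TRANSITIONS = {
    ("simple_present", "simple_past"): "backward",
    ("simple_present", "simple_future"): "forward",
    ("present_perfect", "simple_past"): "before_or_equal",
    ("present_prospective", "simple_future"): "before_or_equal",
}


def event_time_shift(prev_tense: str, next_tense: str,
                     next_is_stative: bool = False) -> Optional[str]:
    """Say how the event time moves between two successive sentences.

    Returns None when the transition is not among the listed rules.
    """
    if prev_tense == next_tense:
        # Same tense: time advances, unless the next sentence describes
        # a state or unbounded process, which keeps the same time.
        return "same" if next_is_stative else "forward"
    return TENSE_TRANSITIONS.get((prev_tense, next_tense))


print(event_time_shift("simple_present", "simple_past"))
print(event_time_shift("simple_present", "past_perfect"))
```

The second call returns `None`, which mirrors the criticism on the slide: a present tense to past perfect transition is not licensed by these rules even though it occurs in real text.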

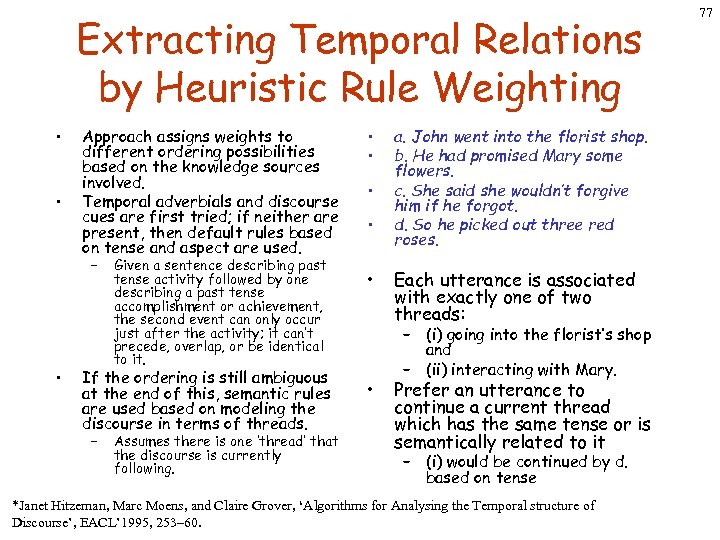

Extracting Temporal Relations by Heuristic Rule Weighting* • Approach assigns weights to different ordering possibilities based on the knowledge sources involved. • Temporal adverbials and discourse cues are tried first; if neither is present, then default rules based on tense and aspect are used. – Given a sentence describing a past tense activity followed by one describing a past tense accomplishment or achievement, the second event can only occur just after the activity; it can’t precede, overlap, or be identical to it. • If the ordering is still ambiguous at the end of this, semantic rules are used based on modeling the discourse in terms of threads. – Assumes there is one ‘thread’ that the discourse is currently following. – a. John went into the florist shop. b. He had promised Mary some flowers. c. She said she wouldn’t forgive him if he forgot. d. So he picked out three red roses. • Each utterance is associated with exactly one of two threads: – (i) going into the florist’s shop and – (ii) interacting with Mary. • Prefer an utterance to continue a current thread which has the same tense or is semantically related to it – (i) would be continued by d. based on tense *Janet Hitzeman, Marc Moens, and Claire Grover, ‘Algorithms for Analysing the Temporal Structure of Discourse’, EACL 1995, 253–60. 77

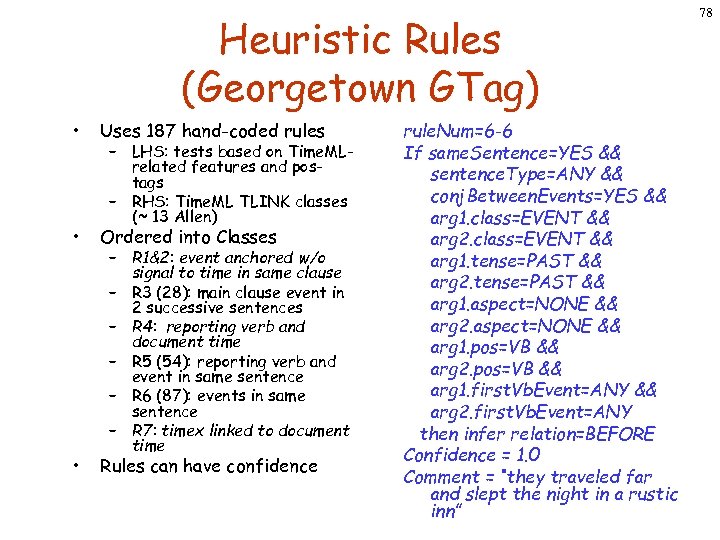

Heuristic Rules (Georgetown GTag) • Uses 187 hand-coded rules • Ordered into classes • Rules can have confidence – LHS: tests based on TimeML-related features and POS tags – RHS: TimeML TLINK classes (~13 Allen) – R1&2: event anchored w/o signal to time in same clause – R3 (28): main clause event in 2 successive sentences – R4: reporting verb and document time – R5 (54): reporting verb and event in same sentence – R6 (87): events in same sentence – R7: timex linked to document time • Example rule: ruleNum=6-6 If sameSentence=YES && sentenceType=ANY && conjBetweenEvents=YES && arg1.class=EVENT && arg2.class=EVENT && arg1.tense=PAST && arg2.tense=PAST && arg1.aspect=NONE && arg2.aspect=NONE && arg1.pos=VB && arg2.pos=VB && arg1.firstVbEvent=ANY && arg2.firstVbEvent=ANY then infer relation=BEFORE Confidence = 1.0 Comment = “they traveled far and slept the night in a rustic inn” 78
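A rule of this shape is a conjunction of feature tests with a wildcard value. The sketch below shows one way such a rule could be stored and matched. It is not GTag's implementation: the dictionary layout and `apply_rule` are hypothetical, and only the feature names and values come from the example rule on the slide.

```python
from typing import Dict, Optional, Tuple

# Rule 6-6 from the slide, stored as feature tests plus the inferred relation.
RULE_6_6 = {
    "conditions": {
        "sameSentence": "YES", "sentenceType": "ANY",
        "conjBetweenEvents": "YES",
        "arg1.class": "EVENT", "arg2.class": "EVENT",
        "arg1.tense": "PAST", "arg2.tense": "PAST",
        "arg1.aspect": "NONE", "arg2.aspect": "NONE",
        "arg1.pos": "VB", "arg2.pos": "VB",
        "arg1.firstVbEvent": "ANY", "arg2.firstVbEvent": "ANY",
    },
    "relation": "BEFORE",
    "confidence": 1.0,
}


def apply_rule(rule: dict, features: Dict[str, str]) -> Optional[Tuple[str, float]]:
    """Return (relation, confidence) if every non-wildcard test passes."""
    for name, expected in rule["conditions"].items():
        if expected != "ANY" and features.get(name) != expected:
            return None
    return rule["relation"], rule["confidence"]


# Features for "they traveled far and slept the night in a rustic inn".
pair_features = {
    "sameSentence": "YES", "sentenceType": "DECLARATIVE",
    "conjBetweenEvents": "YES",
    "arg1.class": "EVENT", "arg2.class": "EVENT",
    "arg1.tense": "PAST", "arg2.tense": "PAST",
    "arg1.aspect": "NONE", "arg2.aspect": "NONE",
    "arg1.pos": "VB", "arg2.pos": "VB",
}
print(apply_rule(RULE_6_6, pair_features))
```

With many rules, one plausible use of the confidence value is to rank competing inferences for the same event pair, though the slide does not say how GTag resolves such conflicts.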

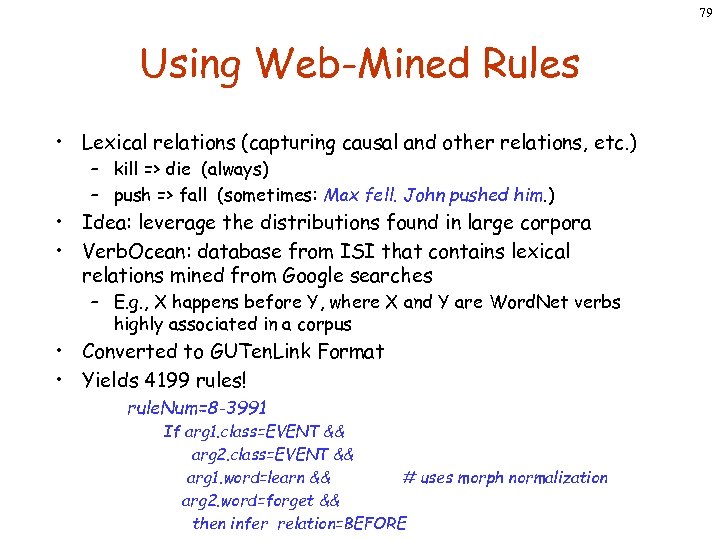

79 Using Web-Mined Rules • Lexical relations (capturing causal and other relations, etc.) – kill => die (always) – push => fall (sometimes: Max fell. John pushed him.) • Idea: leverage the distributions found in large corpora • VerbOcean: database from ISI that contains lexical relations mined from Google searches – E.g., X happens before Y, where X and Y are WordNet verbs highly associated in a corpus • Converted to GUTenLink format • Yields 4199 rules! • Example rule: ruleNum=8-3991 If arg1.class=EVENT && arg2.class=EVENT && arg1.word=learn && # uses morph normalization arg2.word=forget && then infer relation=BEFORE
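Turning mined "happens before" verb pairs into rules of the form shown above is a mechanical step. The sketch below illustrates it with a hypothetical in-memory list of pairs. It does not read VerbOcean's real file format, and the output dictionary layout is an assumption rather than the actual GUTenLink rule syntax.

```python
from typing import Dict, Iterable, List, Tuple


def lexical_pairs_to_rules(pairs: Iterable[Tuple[str, str]],
                           rule_class: int = 8,
                           first_rule_id: int = 1) -> List[Dict]:
    """Build one BEFORE rule per (earlier_verb, later_verb) pair."""
    rules = []
    for offset, (earlier, later) in enumerate(pairs):
        rules.append({
            "ruleNum": f"{rule_class}-{first_rule_id + offset}",
            "conditions": {
                "arg1.class": "EVENT",
                "arg2.class": "EVENT",
                "arg1.word": earlier,  # compared after morphological normalization
                "arg2.word": later,
            },
            "relation": "BEFORE",
        })
    return rules


# Hypothetical mined pairs; only learn/forget appears on the slide.
mined_pairs = [("learn", "forget"), ("kill", "die")]
rules = lexical_pairs_to_rules(mined_pairs, first_rule_id=3991)
print(rules[0]["ruleNum"], rules[0]["conditions"]["arg1.word"],
      rules[0]["relation"])
```

As the push/fall example warns, such rules hold only some of the time, so a rule set built this way would need weights or a fallback rather than being applied as hard constraints.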

80 Outline
• Introduction
• Linguistic Theories
• AI Theories
• Annotation Schemes
• Rule-based and machine-learning methods
• Challenges
• Links

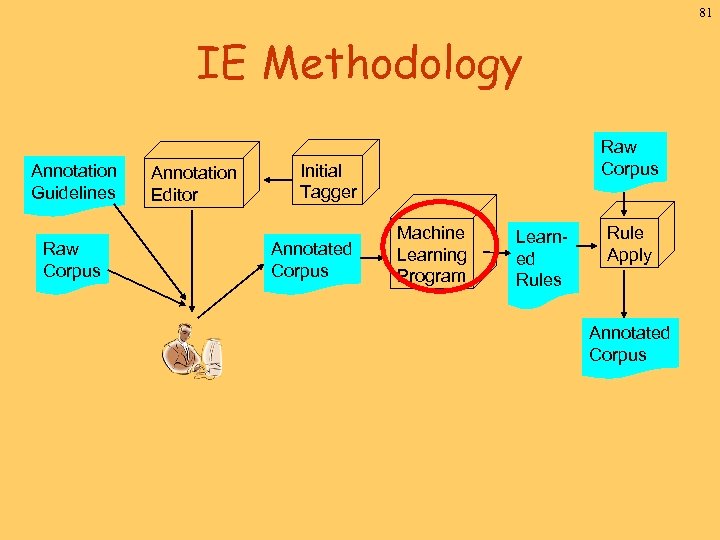

81 IE Methodology (diagram; box labels: Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Learned Rules, Rule Apply)

82 Related Machine Learning Work
• (Li et al., ACL’2004) obtained 78-88% accuracy on ordering within-sentence temporal relations in Chinese texts.
• (Mani et al., HLT’2003 short) obtained an F-measure of 80.2 by training a decision tree on 2069 clauses to anchor events to reference times that were inferred for each clause.
• (Lapata and Lascarides, NAACL’2004) used found data to successfully learn which (possibly ambiguous) temporal markers connect a main and subordinate clause, without inferring underlying temporal relations.

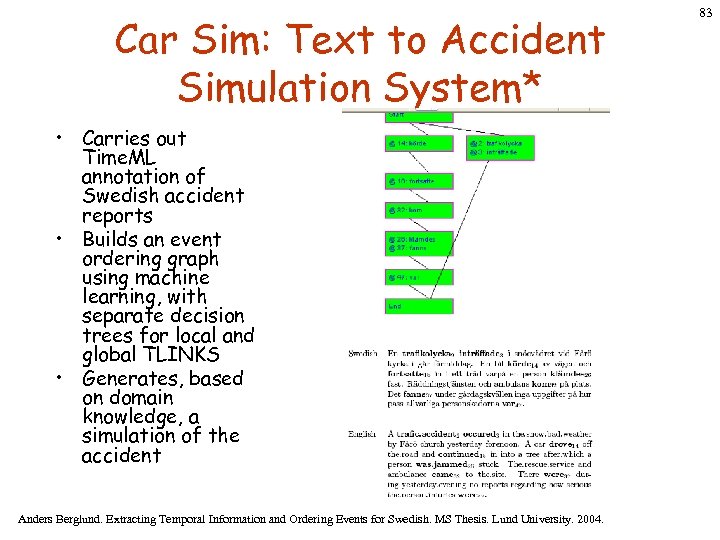

83 CarSim: Text to Accident Simulation System*
• Carries out TimeML annotation of Swedish accident reports
• Builds an event ordering graph using machine learning, with separate decision trees for local and global TLINKs
• Generates, based on domain knowledge, a simulation of the accident
* Anders Berglund. Extracting Temporal Information and Ordering Events for Swedish. MS Thesis. Lund University. 2004.

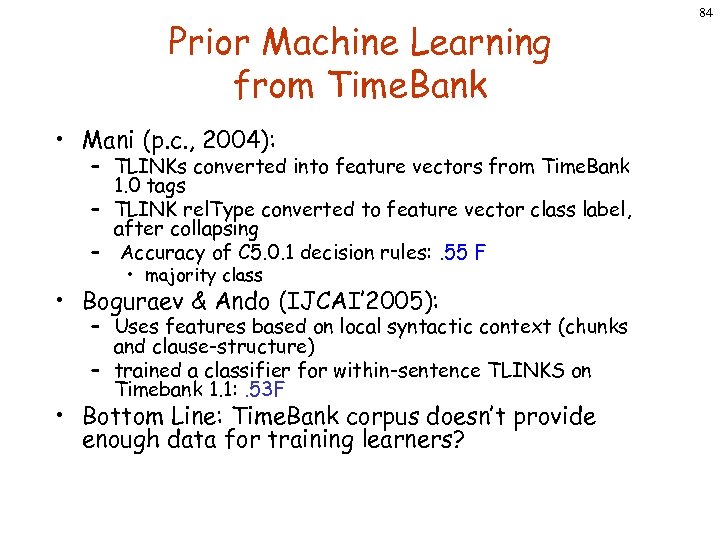

84 Prior Machine Learning from TimeBank
• Mani (p.c., 2004):
  – TLINKs converted into feature vectors from TimeBank 1.0 tags
  – TLINK relType converted to feature vector class label, after collapsing
  – Accuracy of C5.0.1 decision rules: .55 F
    • majority class
• Boguraev & Ando (IJCAI’2005):
  – Uses features based on local syntactic context (chunks and clause structure)
  – Trained a classifier for within-sentence TLINKs on TimeBank 1.1: .53 F
• Bottom line: TimeBank corpus doesn’t provide enough data for training learners?

85 Insight: TLINK Annotation (Humans)
• Inter-annotator reliability is ~.55 F
  – But agreement on LINK labels: 77%
• So, the problem is largely which events to link
  – Within sentence, adjacent sentences, across document?
  – Guidelines aren’t that helpful
• Conclusion: global TLINKing is too fatiguing
  – 0.84 TLINKs/event in corpus

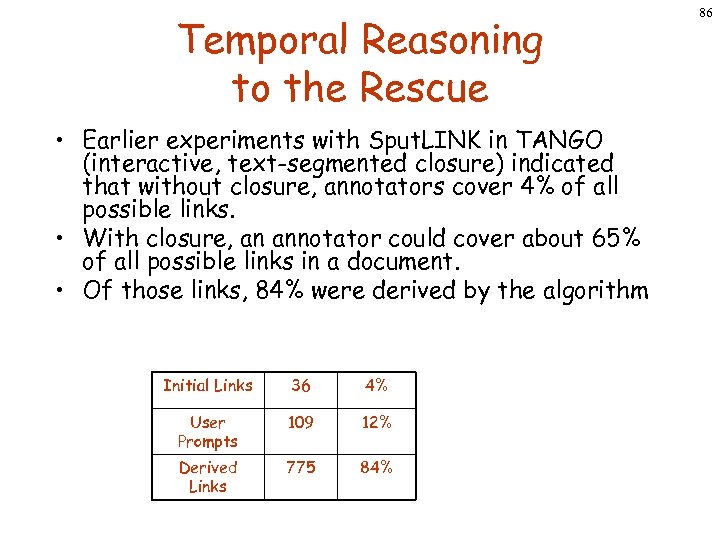

86 Temporal Reasoning to the Rescue
• Earlier experiments with SputLINK in TANGO (interactive, text-segmented closure) indicated that without closure, annotators cover 4% of all possible links.
• With closure, an annotator could cover about 65% of all possible links in a document.
• Of those links, 84% were derived by the algorithm

| Link type | Count | Share |
|---|---|---|
| Initial Links | 36 | 4% |
| User Prompts | 109 | 12% |
| Derived Links | 775 | 84% |
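The way closure derives links from a few annotated ones can be sketched in a few lines of Python. This is a deliberately reduced illustration, not SputLINK: the `COMPOSE` table covers only a handful of sound compositions over three relation names, the event names are made up, and no inconsistency checking is done.

```python
# (r1, r2) -> r3 means: A r1 B and B r2 C together imply A r3 C.
# A small subset of sound compositions, chosen for this sketch.
COMPOSE = {
    ("BEFORE", "BEFORE"): "BEFORE",
    ("BEFORE", "SIMULTANEOUS"): "BEFORE",
    ("SIMULTANEOUS", "BEFORE"): "BEFORE",
    ("SIMULTANEOUS", "SIMULTANEOUS"): "SIMULTANEOUS",
    ("INCLUDES", "INCLUDES"): "INCLUDES",
    ("BEFORE", "INCLUDES"): "BEFORE",
}


def close(links):
    """Return a copy of `links` ({(a, b): relation}) extended with derived links.

    Repeats composition over all link pairs until nothing new is added.
    """
    closed = dict(links)
    changed = True
    while changed:
        changed = False
        for (a, b), r1 in list(closed.items()):
            for (b2, c), r2 in list(closed.items()):
                if b != b2 or a == c:
                    continue
                r3 = COMPOSE.get((r1, r2))
                if r3 is not None and (a, c) not in closed:
                    closed[(a, c)] = r3
                    changed = True
    return closed


# Three annotated links over four hypothetical events.
links = {
    ("e1", "e2"): "BEFORE",
    ("e2", "e3"): "BEFORE",
    ("e3", "e4"): "INCLUDES",
}
closed = close(links)
derived = {pair: rel for pair, rel in closed.items() if pair not in links}
print(len(links), "annotated,", len(derived), "derived")
print(sorted(derived.items()))
```

Even in this toy case the derived links match the annotated ones in number, which is the effect the slide's counts show at corpus scale.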

87 IE Methodology (diagram; box labels: Annotation Guidelines, Raw Corpus, Annotation Editor, Initial Tagger, Annotated Corpus, Machine Learning Program, Axioms, Learned Rules, Rule Apply)

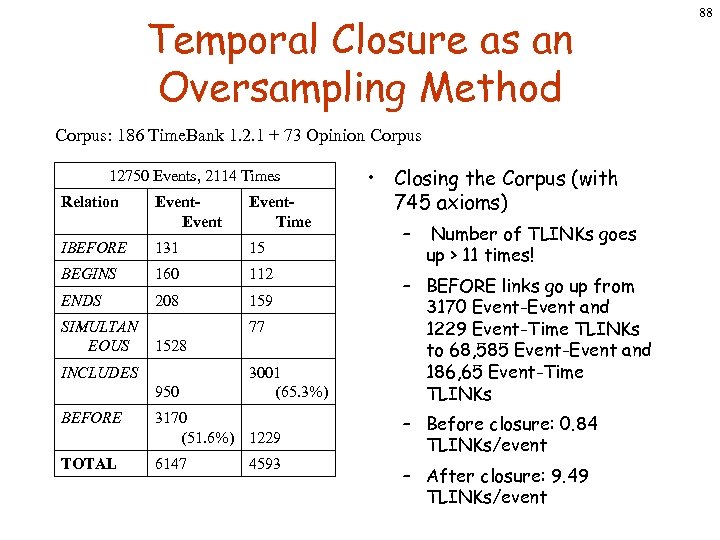

88 Temporal Closure as an Oversampling Method
Corpus: 186 TimeBank 1.2.1 + 73 Opinion Corpus; 12750 Events, 2114 Times

| Relation | Event-Event | Event-Time |
|---|---|---|
| IBEFORE | 131 | 15 |
| BEGINS | 160 | 112 |
| ENDS | 208 | 159 |
| SIMULTANEOUS | 1528 | 77 |
| INCLUDES | 950 | 3001 (65.3%) |
| BEFORE | 3170 (51.6%) | 1229 |
| TOTAL | 6147 | 4593 |

• Closing the corpus (with 745 axioms)
  – Number of TLINKs goes up > 11 times!
  – BEFORE links go up from 3170 Event-Event and 1229 Event-Time TLINKs to 68,585 Event-Event and 18,665 Event-Time TLINKs
  – Before closure: 0.84 TLINKs/event
  – After closure: 9.49 TLINKs/event
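The figures on this slide can be cross-checked with a few lines of arithmetic. The sketch below reads the SIMULTANEOUS row as 1528 Event-Event and 77 Event-Time links, which is the reading under which both columns sum to the stated totals; variable names are illustrative.

```python
event_event = {
    "IBEFORE": 131, "BEGINS": 160, "ENDS": 208,
    "SIMULTANEOUS": 1528, "INCLUDES": 950, "BEFORE": 3170,
}
event_time = {
    "IBEFORE": 15, "BEGINS": 112, "ENDS": 159,
    "SIMULTANEOUS": 77, "INCLUDES": 3001, "BEFORE": 1229,
}
events = 12750

ee_total = sum(event_event.values())
et_total = sum(event_time.values())

# Majority-class shares quoted in the table.
before_share = 100 * event_event["BEFORE"] / ee_total
includes_share = 100 * event_time["INCLUDES"] / et_total

# Link density before closure, and growth implied by the quoted densities.
links_per_event = (ee_total + et_total) / events
growth = 9.49 / 0.84

print(ee_total, et_total)
print(round(before_share, 1), round(includes_share, 1))
print(round(links_per_event, 2), round(growth, 1))
```

The density ratio of roughly 11.3 agrees with the slide's statement that the number of TLINKs goes up more than 11 times.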

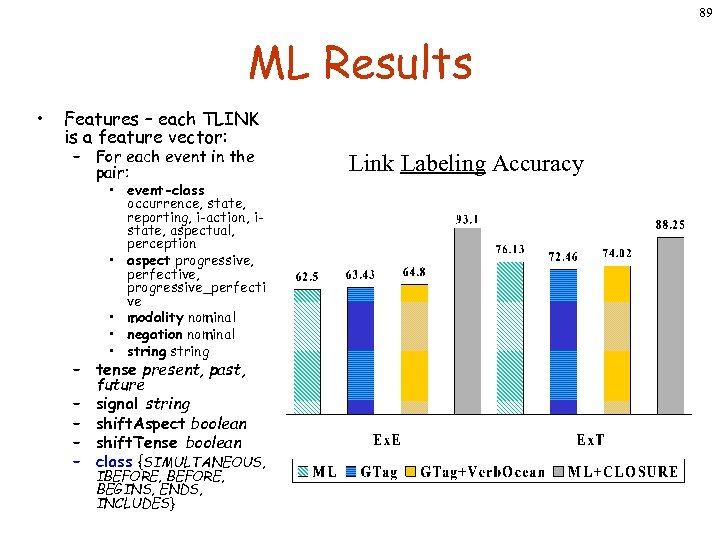

89 ML Results
• Features: each TLINK is a feature vector
  – For each event in the pair:
    • event-class: occurrence, state, reporting, i-action, i-state, aspectual, perception
    • aspect: progressive, perfective, progressive_perfective
    • modality: nominal
    • negation: nominal
    • string
  – tense: present, past, future
  – signal: string
  – shiftAspect: boolean
  – shiftTense: boolean
  – class: {SIMULTANEOUS, IBEFORE, BEGINS, ENDS, INCLUDES}
Link Labeling Accuracy (results chart not preserved in the text)
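As a rough illustration of the feature vector listed above, the sketch below builds one from two event records. Several points are assumptions: events are plain dicts, tense is treated as a per-event attribute, missing values default to "NONE", and `shiftAspect`/`shiftTense` are taken to mean that the two events differ in aspect or tense. The function name `tlink_features` is hypothetical.

```python
PER_EVENT_ATTRS = ("class", "aspect", "modality", "negation", "string", "tense")


def tlink_features(e1, e2, signal=None):
    """Build a flat feature dict for one candidate TLINK between e1 and e2."""
    feats = {}
    for prefix, event in (("e1", e1), ("e2", e2)):
        for attr in PER_EVENT_ATTRS:
            feats[f"{prefix}.{attr}"] = event.get(attr, "NONE")
    feats["signal"] = signal if signal is not None else "NONE"
    # Assumed meaning: True when the attribute changes between the two events.
    feats["shiftAspect"] = feats["e1.aspect"] != feats["e2.aspect"]
    feats["shiftTense"] = feats["e1.tense"] != feats["e2.tense"]
    return feats


traveled = {"class": "occurrence", "tense": "past", "string": "traveled"}
slept = {"class": "occurrence", "tense": "past", "string": "slept"}

features = tlink_features(traveled, slept)
for name, value in features.items():
    print(name, "=", value)
```

A dict of this shape can be passed to any learner that accepts nominal features; the class label (the TLINK relation) would be stored separately.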

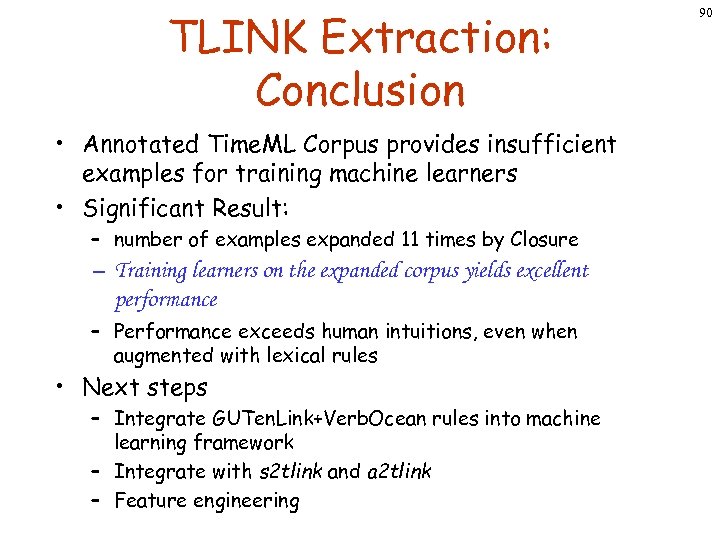

90 TLINK Extraction: Conclusion
• Annotated TimeML corpus provides insufficient examples for training machine learners
• Significant result:
  – Number of examples expanded 11 times by closure
  – Training learners on the expanded corpus yields excellent performance
  – Performance exceeds human intuitions, even when augmented with lexical rules
• Next steps
  – Integrate GUTenLink+VerbOcean rules into machine learning framework
  – Integrate with s2tlink and a2tlink
  – Feature engineering

91 Challenges: Temporal Reasoning
• Temporal reasoning for IE has used qualitative temporal relations
• Trivial metric relations (distances in time) can be extracted from anchored durations and sorted time expressions
• But commonsense metric constraints are missing
  – Time(Haircut) << Time(fly Boston2Sydney)
• First steps:
  – Hobbs et al. at ACL’06
  – Mani & Wellner at ARTE’06 workshop

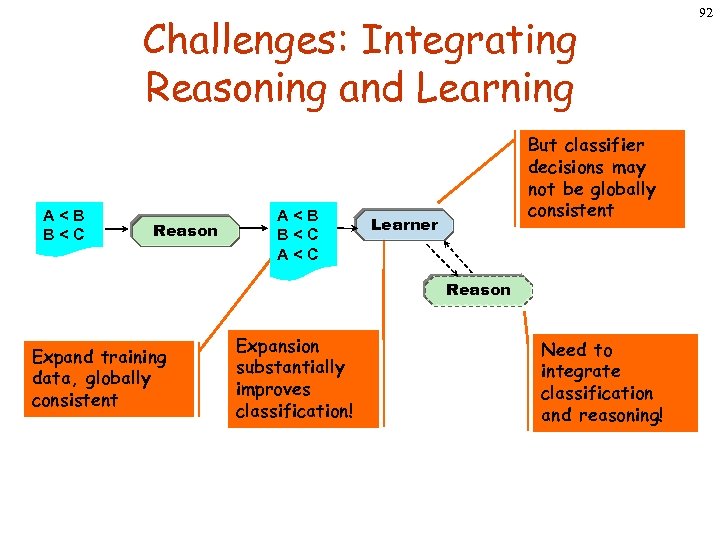

92 Challenges: Integrating Reasoning and Learning (diagram not preserved in the text)

93 Difficulties in Annotation
In an interview with Barbara Walters to be shown on ABC’s “Friday nights”, Shapiro said he tried on the gloves and realized they would never fit Simpson’s larger hands.
• BEFORE or MEET?
• More coarse-grained annotation may suffice

94 Discourse Relations
• Lexical rules from VerbOcean are still very sparse, even though they are less brittle
• But need to match arguments when applying lexical rules (e.g., subj/obj of push/fall)
• A discourse model should in fact be used

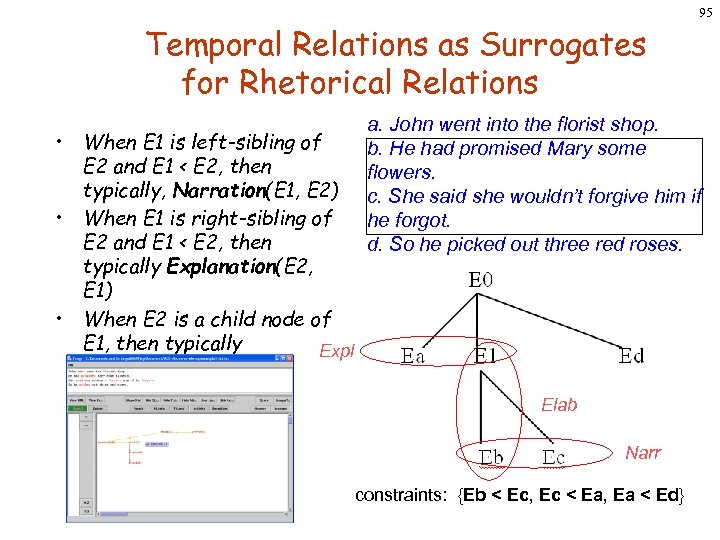

95 Temporal Relations as Surrogates for Rhetorical Relations
• When E1 is left-sibling of E2 and E1 < E2, then typically Narration(E1, E2)
• When E1 is right-sibling of E2 and E1 < E2, then typically Explanation(E2, E1)
• When E2 is a child node of E1, then typically Elaboration(E1, E2)

a. John went into the florist shop.
b. He had promised Mary some flowers.
c. She said she wouldn’t forgive him if he forgot.
d. So he picked out three red roses.

Constraints: {Eb < Ec, Ec < Ea, Ea < Ed}
(Tree diagram not preserved in the text; its labels were Expl, Elab, Narr.)
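The three heuristics can be written down directly as a function over a tree and a set of ordering constraints. In the sketch below, the tree shape is a hypothetical one chosen for illustration (Ea and Ed at the top level, Eb and Ec as children of Ea), since the slide's own tree is not recoverable from the text. "Sibling" is read as "adjacent sibling", and top-level nodes are stored under the key `None` so that no Elaboration is generated for an artificial root; both are assumptions.

```python
def rhetorical_relations(children, before):
    """Apply the three heuristics.

    children: dict mapping a parent node (or None for the top level)
              to its ordered list of child nodes.
    before:   set of (x, y) pairs meaning x < y in time.
    Returns a set of (relation, arg1, arg2) triples.
    """
    relations = set()
    for parent, kids in children.items():
        if parent is not None:
            for kid in kids:
                relations.add(("Elaboration", parent, kid))
        for left, right in zip(kids, kids[1:]):
            if (left, right) in before:
                # Text order matches temporal order.
                relations.add(("Narration", left, right))
            if (right, left) in before:
                # Later-mentioned event happened earlier: it explains the left one.
                relations.add(("Explanation", left, right))
    return relations


# Hypothetical tree for sentences a-d; constraints are those on the slide.
tree = {None: ["Ea", "Ed"], "Ea": ["Eb", "Ec"]}
before = {("Eb", "Ec"), ("Ec", "Ea"), ("Ea", "Ed")}

for triple in sorted(rhetorical_relations(tree, before)):
    print(triple)
```

Under this assumed tree the heuristics yield Narration(Ea, Ed) and Narration(Eb, Ec) plus Elaboration links from Ea to its children. A different tree shape would produce different relations.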

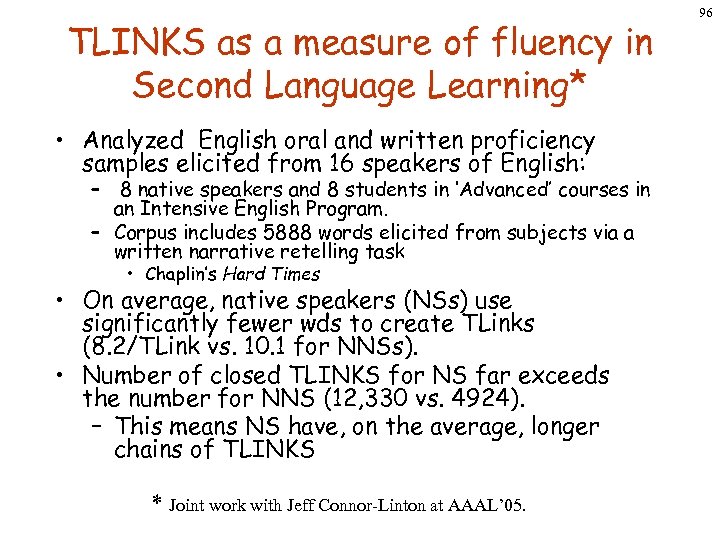

96 TLINKs as a measure of fluency in Second Language Learning*
• Analyzed English oral and written proficiency samples elicited from 16 speakers of English:
  – 8 native speakers and 8 students in ‘Advanced’ courses in an Intensive English Program.
  – Corpus includes 5888 words elicited from subjects via a written narrative retelling task
    • Chaplin’s Hard Times
• On average, native speakers (NSs) use significantly fewer words to create TLINKs (8.2/TLINK vs. 10.1 for NNSs).
• Number of closed TLINKs for NSs far exceeds the number for NNSs (12,330 vs. 4924).
  – This means NSs have, on average, longer chains of TLINKs
* Joint work with Jeff Connor-Linton at AAAL’05.

97 Outline
• Introduction
• Linguistic Theories
• AI Theories
• Annotation Schemes
• Rule-based and machine-learning methods
• Challenges
• Links

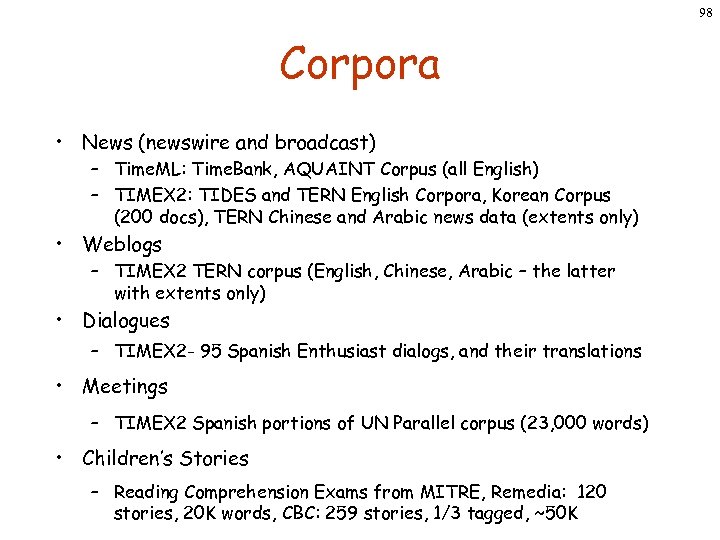

98 Corpora
• News (newswire and broadcast)
  – TimeML: TimeBank, AQUAINT Corpus (all English)
  – TIMEX2: TIDES and TERN English corpora, Korean corpus (200 docs), TERN Chinese and Arabic news data (extents only)
• Weblogs
  – TIMEX2: TERN corpus (English, Chinese, Arabic; the latter with extents only)
• Dialogues
  – TIMEX2: 95 Spanish Enthusiast dialogs, and their translations
• Meetings
  – TIMEX2: Spanish portions of UN Parallel corpus (23,000 words)
• Children’s Stories
  – Reading comprehension exams from MITRE; Remedia: 120 stories, 20K words; CBC: 259 stories, 1/3 tagged, ~50K

99 Links
• TimeBank (April 17, 2006):
  – http://www.ldc.upenn.edu/CatalogEntry.jsp?catalogId=LDC2006T08
• TimeML:
  – www.timeml.org
• TIMEX2/TERN ACE data (English, Chinese, Arabic):
  – timex2.mitre.org
• TIMEX2/3 Tagger:
  – http://complingone.georgetown.edu/~linguist/GU_TIME_DOWNLOAD.HTML
• Korean and Spanish data: imani@mitre.org
• Callisto: callisto.mitre.org

100 References
1. Mani, I., Pustejovsky, J., and Gaizauskas, R. (eds.). (2005). The Language of Time: A Reader. Oxford University Press.
2. Mani, I., and Schiffman, B. (2004). Temporally Anchoring and Ordering Events in News. In Pustejovsky, J. and Gaizauskas, R. (eds.), Time and Event Recognition in Natural Language. John Benjamins, to appear.
3. Mani, I. (2004). Recent Developments in Temporal Information Extraction. In Nicolov, N., and Mitkov, R., Proceedings of RANLP'03. John Benjamins, to appear.
4. Jang, S., Baldwin, J., and Mani, I. (2004). Automatic TIMEX2 Tagging of Korean News. In Mani, I., Pustejovsky, J., and Sundheim, B. (eds.), ACM Transactions on Asian Language Processing: Special Issue on Temporal Information Processing.
5. Mani, I., Schiffman, B., and Zhang, J. (2003). Inferring Temporal Ordering of Events in News. Short paper. In Proceedings of the Human Language Technology Conference (HLT-NAACL'03).
6. Ferro, L., Mani, I., Sundheim, B., and Wilson, G. (2001). TIDES Temporal Annotation Guidelines Draft - Version 1.02. MITRE Technical Report MTR 01W000004. McLean, Virginia: The MITRE Corporation.
7. Mani, I., and Wilson, G. (2000). Robust Temporal Processing of News. In Proceedings of the 38th Annual Meeting of the Association for Computational Linguistics (ACL'2000), 69-76.