- Number of slides: 52

1 Overview of Grid middleware concepts

Peter Kacsuk, MTA SZTAKI, Hungary, and Univ. of Westminster, UK (kacsuk@sztaki.hu)

2 The goal of this lecture
- To overview the main trends in the fast evolution of Grid systems
- To explain the main features of the three generations of Grid systems:
  - 1st gen. Grids: Metacomputers
  - 2nd gen. Grids: Resource-oriented Grids
  - 3rd gen. Grids: Service-oriented Grids
- To show how these Grid systems can be handled by users

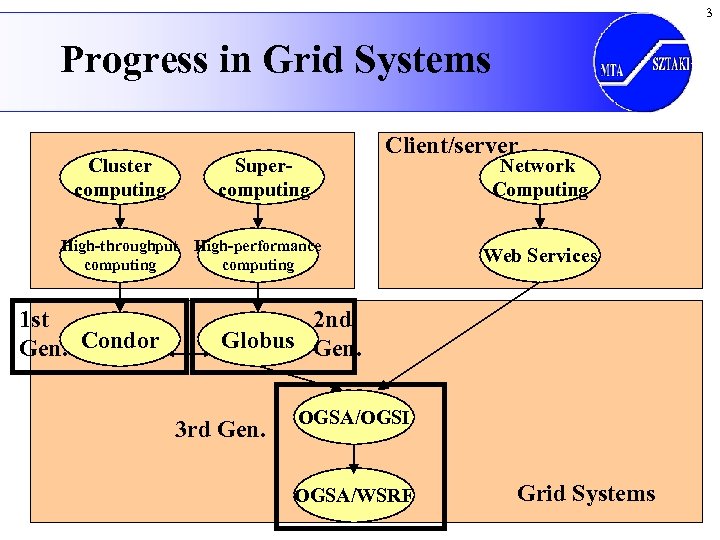

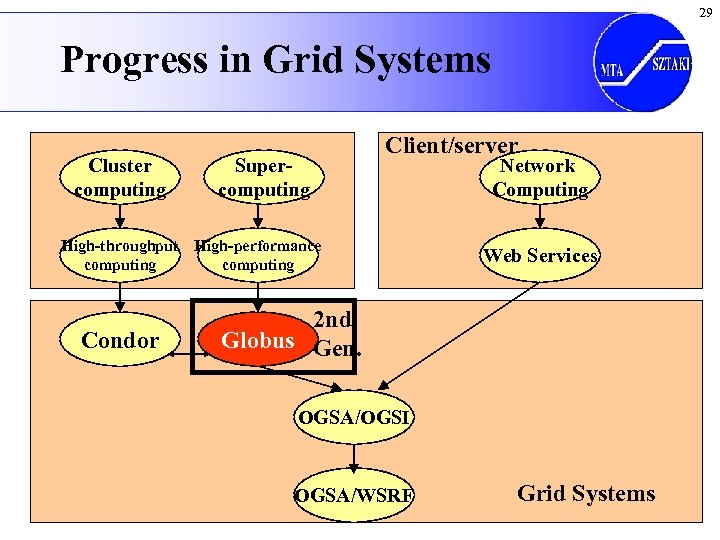

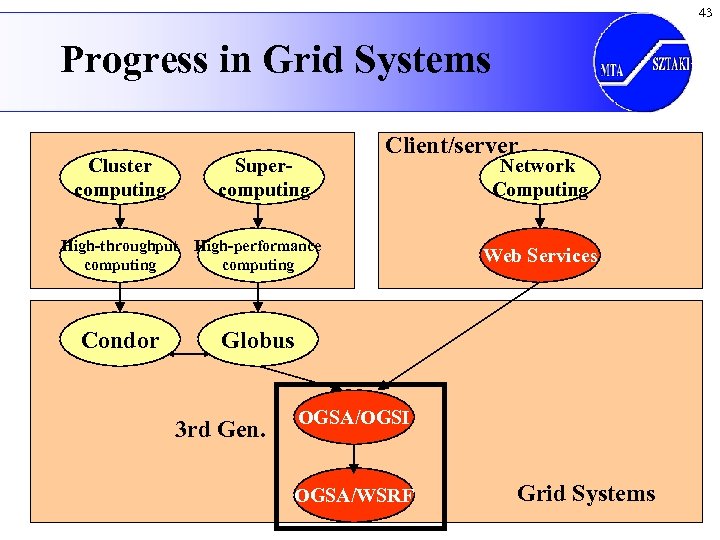

3 Progress in Grid Systems (diagram: supercomputing, cluster computing, network computing, client/server, high-throughput and high-performance computing lead to 1st gen. Grids: Condor; 2nd gen. Grids: Globus; 3rd gen. Grids: OGSA/OGSI and OGSA/WSRF, together with Web Services)

4 1st Generation Grids: Metacomputers

5 Original motivation for metacomputing
- Grand challenge problems run for weeks or months even on supercomputers and clusters
- Various supercomputers/clusters must be connected by wide-area networks in order to solve grand challenge problems in reasonable time

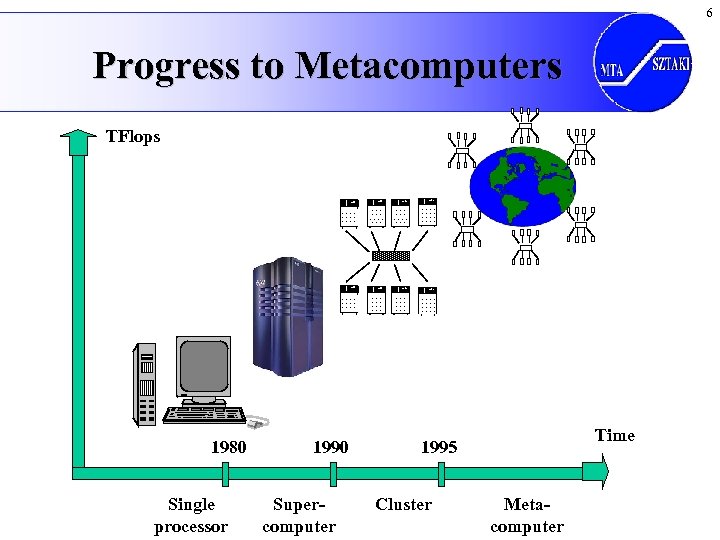

6 Progress to Metacomputers (chart: performance in TFlops over time, with marks at 1980, 1990 and 1995; stages: single processor, supercomputer, cluster, metacomputer)

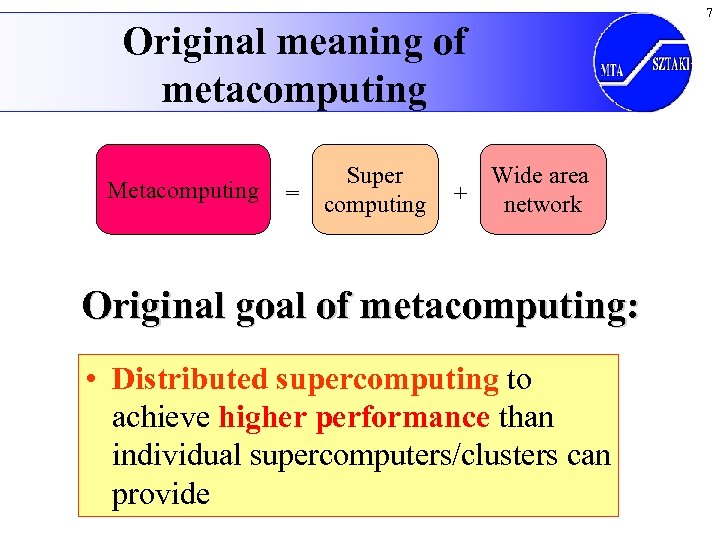

7 Original meaning of metacomputing

Metacomputing = Supercomputing + Wide-area network

Original goal of metacomputing:
- Distributed supercomputing to achieve higher performance than individual supercomputers/clusters can provide

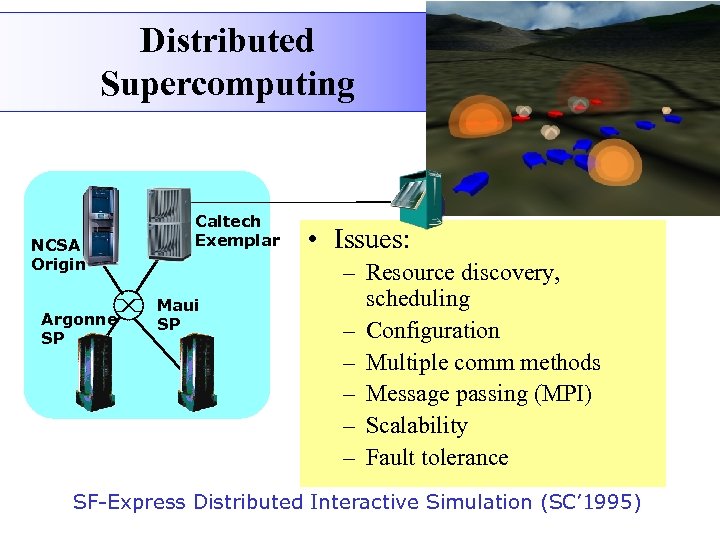

8 Distributed Supercomputing (diagram: SF-Express Distributed Interactive Simulation, SC'1995, on NCSA Origin, Argonne SP, Caltech Exemplar and Maui SP)
- Issues:
  - Resource discovery, scheduling
  - Configuration
  - Multiple communication methods
  - Message passing (MPI)
  - Scalability
  - Fault tolerance

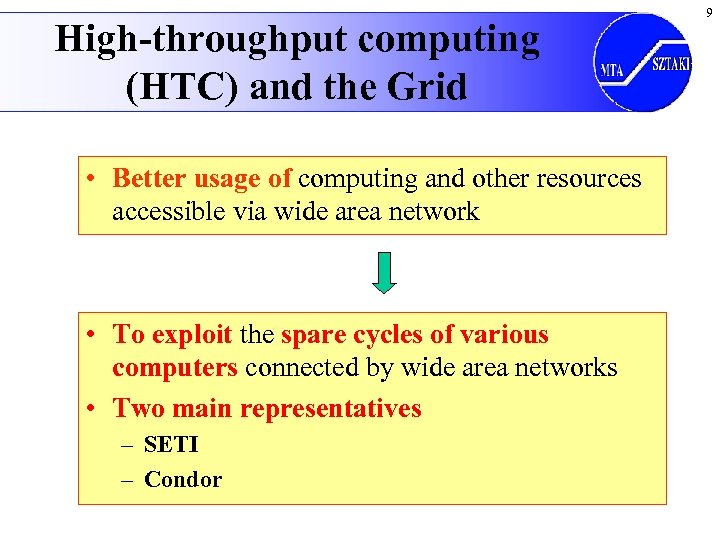

9 High-throughput computing (HTC) and the Grid
- Better usage of computing and other resources accessible via wide-area networks
- Exploiting the spare cycles of various computers connected by wide-area networks
- Two main representatives:
  - SETI
  - Condor

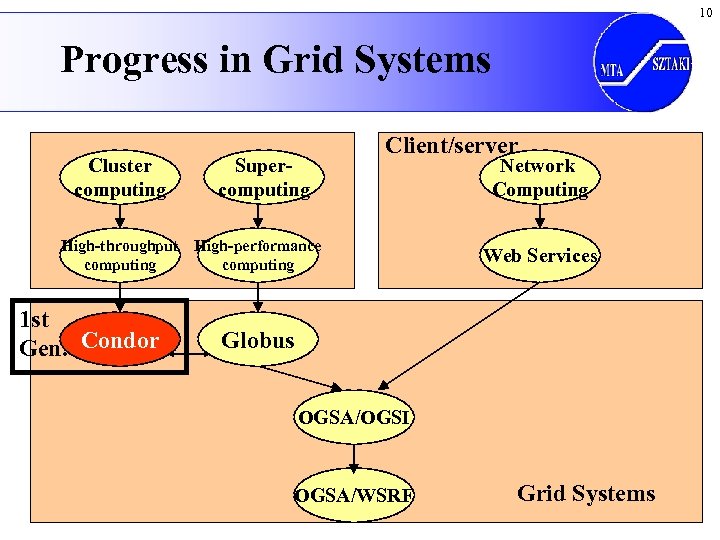

10 Progress in Grid Systems (same diagram as slide 3, with the 1st generation, Condor, highlighted)

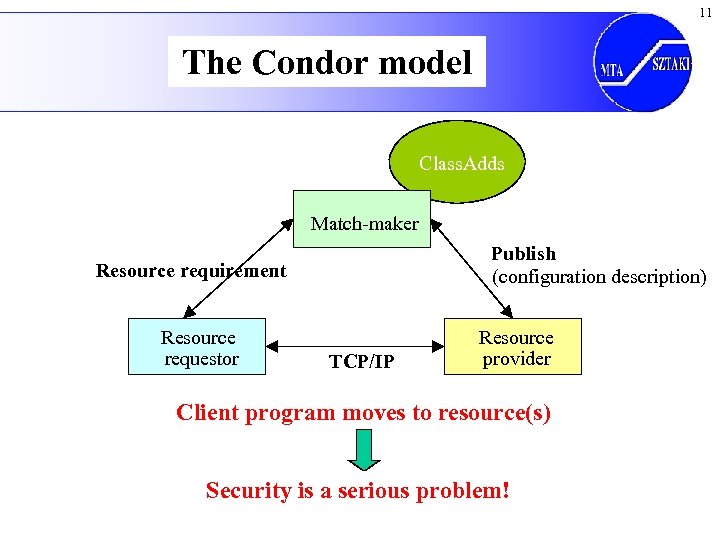

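11 The Condor model (diagram)
- The resource provider publishes its configuration description as a ClassAd to the match-maker
- The resource requestor describes its resource requirement as a ClassAd
- Requestor and provider communicate over TCP/IP; the client program moves to the resource(s)
- Security is a serious problem!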

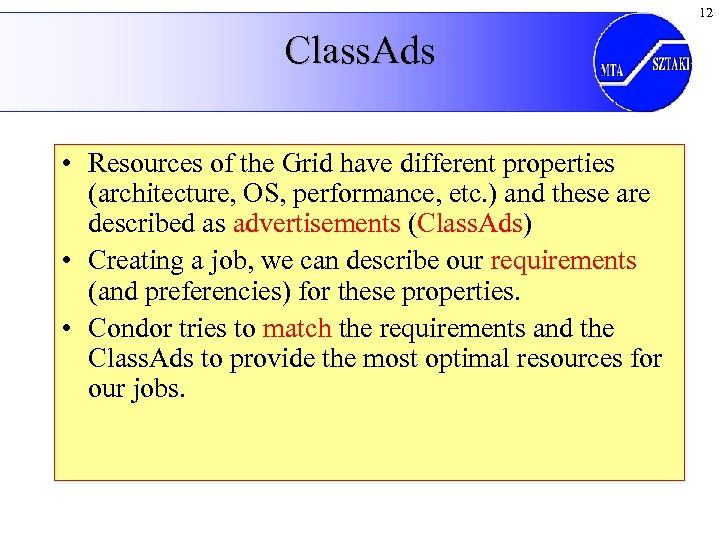

12 ClassAds
- Resources of the Grid have different properties (architecture, OS, performance, etc.), and these are described as advertisements (ClassAds)
- When creating a job, we can describe our requirements (and preferences) for these properties
- Condor matches the requirements against the ClassAds to find the best-suited resources for our jobs
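
The matchmaking idea can be illustrated with a minimal Python sketch. It assumes a simplified model in which each ad carries plain attributes plus `Requirements` and `Rank` expressions written as Python callables. Condor's real ClassAd language, attribute set and negotiation cycle are richer, and the machine names and attribute values below are hypothetical. A match is accepted only if both sides' requirements are satisfied, and among the compatible machines the job's rank picks the winner.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Attrs = Dict[str, object]
Expr = Callable[[Attrs, Attrs], object]


@dataclass
class ClassAd:
    """Simplified ClassAd: attributes plus Requirements and Rank expressions.

    Real ClassAds use their own expression language; here each expression is a
    Python callable receiving (my_attrs, other_attrs)."""
    name: str
    attrs: Attrs
    requirements: Expr = lambda me, other: True
    rank: Expr = lambda me, other: 0


def symmetric_match(a: ClassAd, b: ClassAd) -> bool:
    # Both sides must accept each other: the job's Requirements and the machine's.
    return bool(a.requirements(a.attrs, b.attrs)) and bool(b.requirements(b.attrs, a.attrs))


def best_match(job: ClassAd, machines: List[ClassAd]) -> Optional[ClassAd]:
    """Return the compatible machine the job ranks highest, or None."""
    compatible = [m for m in machines if symmetric_match(job, m)]
    if not compatible:
        return None
    return max(compatible, key=lambda m: job.rank(job.attrs, m.attrs))


# Hypothetical pool; attribute names only mimic typical machine ads.
machines = [
    ClassAd("node1", {"OpSys": "LINUX", "Memory": 2048}),
    ClassAd("node2", {"OpSys": "LINUX", "Memory": 8192}),
    ClassAd("node3", {"OpSys": "SOLARIS", "Memory": 16384}),
    # node4 only accepts jobs owned by "bob", so it rejects alice's job.
    ClassAd("node4", {"OpSys": "LINUX", "Memory": 32768},
            requirements=lambda me, other: other.get("Owner") == "bob"),
]

job = ClassAd(
    "job",
    {"Owner": "alice"},
    requirements=lambda me, other: other.get("OpSys") == "LINUX" and other.get("Memory", 0) >= 1024,
    rank=lambda me, other: other.get("Memory", 0),  # prefer machines with more memory
)

chosen = best_match(job, machines)
print(chosen.name if chosen else "no match")
```

Here `node4` advertises the most memory but rejects the job through its own requirements, which is why the match has to be checked in both directions.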

13 The concept of personal Condor (diagram: your jobs are managed by a personal Condor on your workstation)

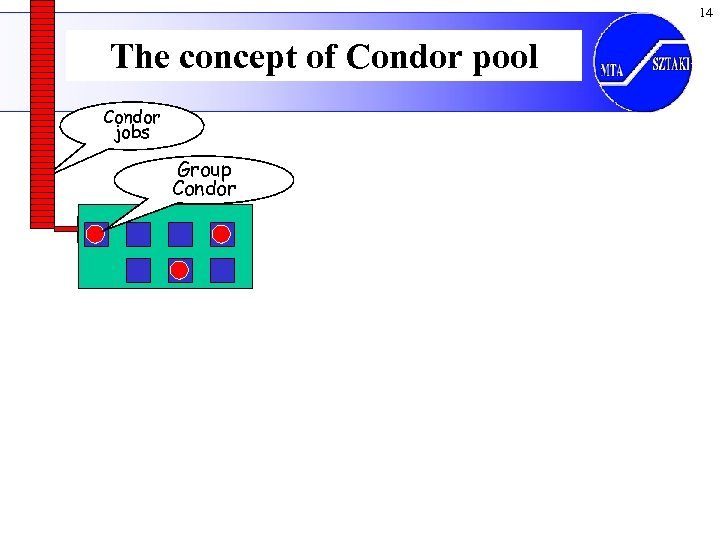

14 The concept of a Condor pool (diagram: jobs pass from the personal Condor on your workstation to a group Condor pool)

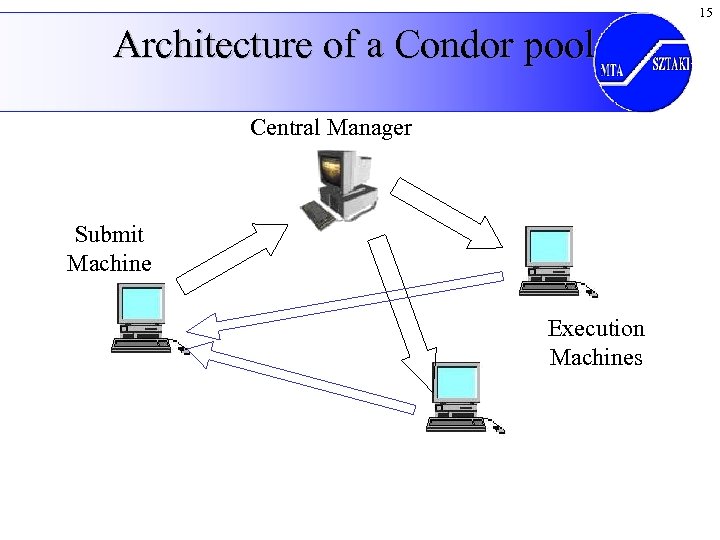

15 Architecture of a Condor pool (diagram: Central Manager, Submit Machine, Execution Machines)

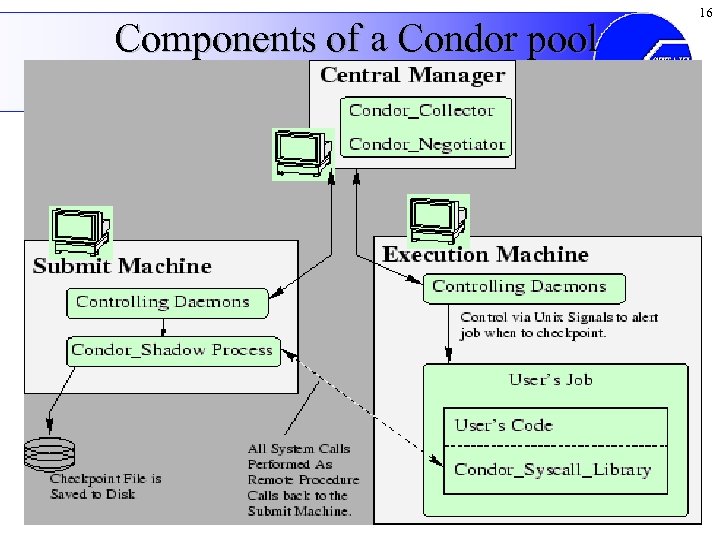

16 Components of a Condor pool (diagram)

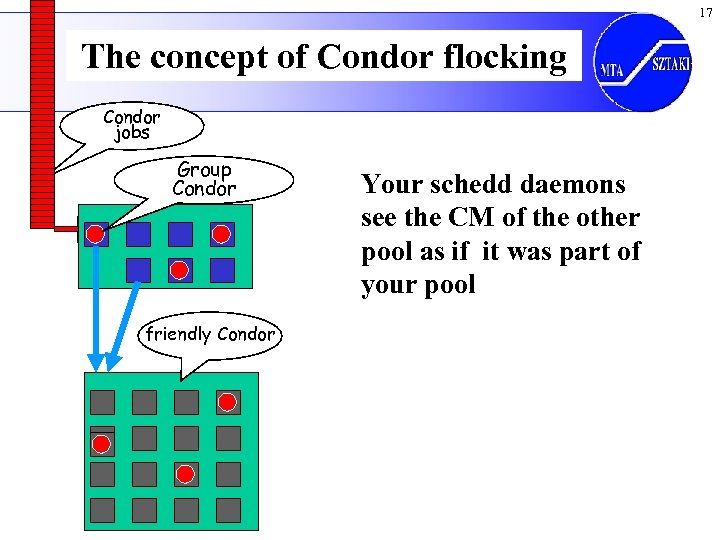

17 The concept of Condor flocking (diagram: jobs pass from the personal Condor on your workstation to the group Condor pool and on to a friendly Condor pool)
- Your schedd daemons see the Central Manager (CM) of the other pool as if it were part of your pool

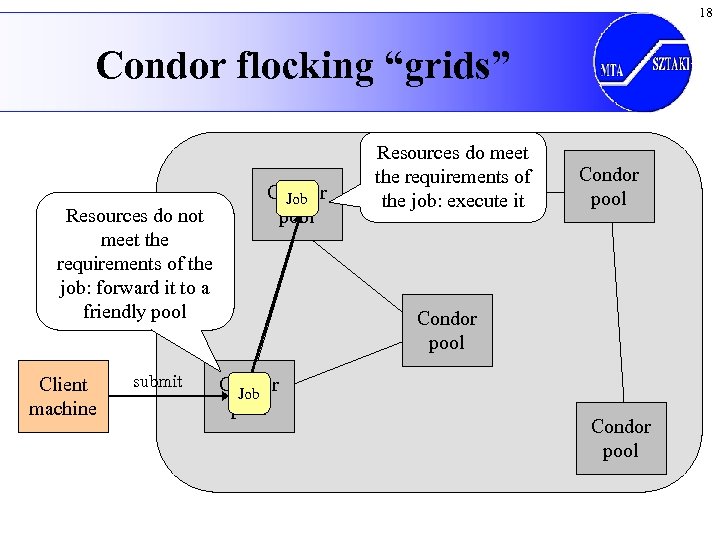

18 Condor flocking “grids” (diagram)
- The client machine submits a job to its Condor pool
- If the pool's resources meet the requirements of the job, the job is executed there
- If they do not, the job is forwarded to a friendly pool
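
The forwarding rule above can be sketched in Python under two simplifications: each pool is reduced to a hypothetical free-CPU counter instead of full ClassAd matchmaking, and the friend list is a fixed list configured in advance, mirroring the statically defined friendly relationships of Condor flocking. Only direct friends are tried in this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Pool:
    name: str
    free_cpus: int
    # Friendly pools are configured statically, in the order they are tried.
    friends: List["Pool"] = field(default_factory=list)

    def can_run(self, job: Dict[str, int]) -> bool:
        return self.free_cpus >= job["cpus"]

    def run(self, job: Dict[str, int]) -> str:
        self.free_cpus -= job["cpus"]
        return self.name


def submit(job: Dict[str, int], local: Pool) -> Optional[str]:
    """Run in the local pool if it can; otherwise flock to the first friendly
    pool that can. Returns the executing pool's name, or None if the job must
    stay queued. Only direct friends are tried (no friend-of-friend hops)."""
    if local.can_run(job):
        return local.run(job)
    for friend in local.friends:
        if friend.can_run(job):
            return friend.run(job)
    return None


home = Pool("wisconsin", free_cpus=2)
home.friends.append(Pool("georgia-tech", free_cpus=8))

print(submit({"cpus": 1}, home))   # fits locally
print(submit({"cpus": 4}, home))   # forwarded to the friendly pool
print(submit({"cpus": 16}, home))  # no pool can run it
```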

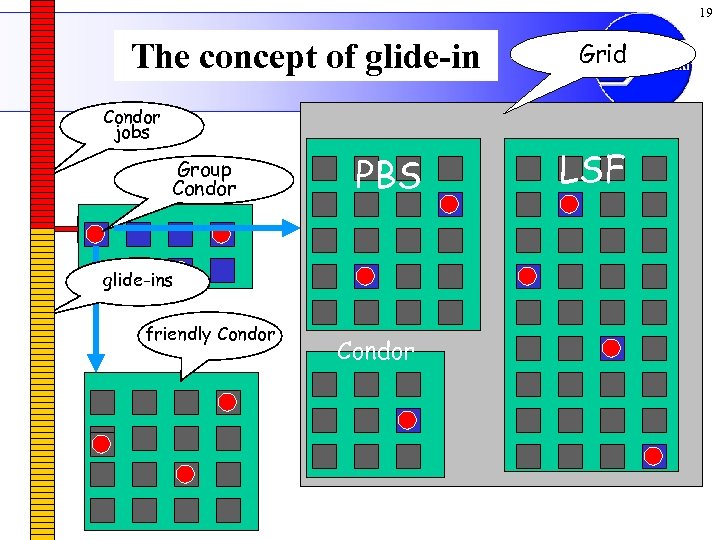

19 The concept of glide-in (diagram: beyond your personal Condor, the group Condor pool and friendly Condor pools, glide-ins extend Condor onto resources managed by PBS, LSF and the Grid)

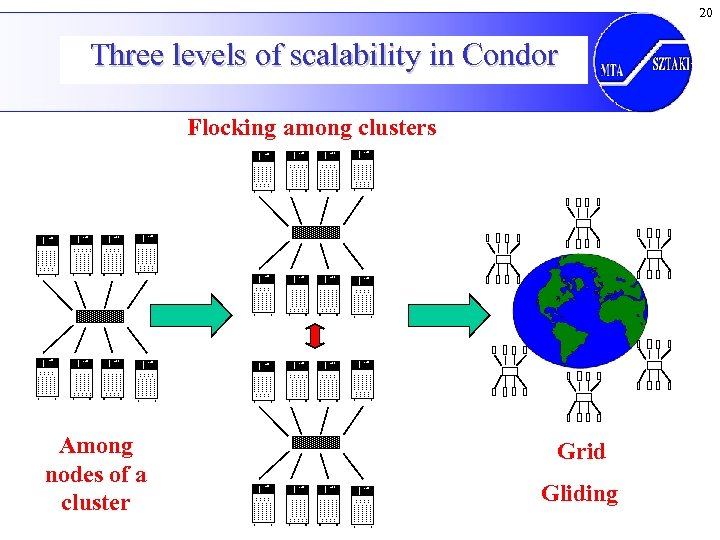

20 Three levels of scalability in Condor (diagram): among the nodes of a cluster, flocking among clusters, and gliding into the Grid

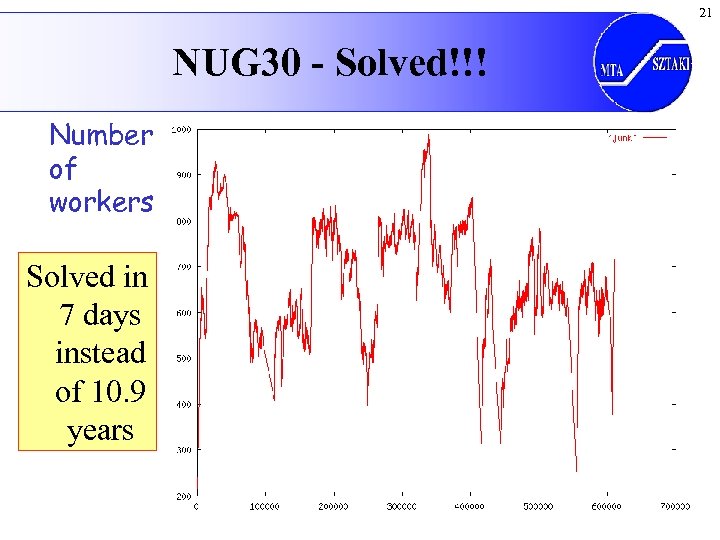

21 NUG30 solved! (chart: number of workers)
- Solved in 7 days instead of 10.9 years

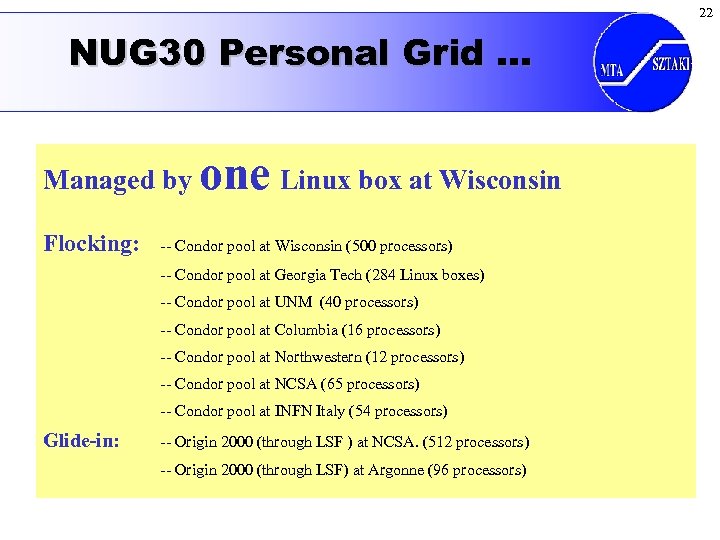

22 NUG30 Personal Grid …

Managed by one Linux box at Wisconsin
- Flocking:
  - Condor pool at Wisconsin (500 processors)
  - Condor pool at Georgia Tech (284 Linux boxes)
  - Condor pool at UNM (40 processors)
  - Condor pool at Columbia (16 processors)
  - Condor pool at Northwestern (12 processors)
  - Condor pool at NCSA (65 processors)
  - Condor pool at INFN Italy (54 processors)
- Glide-in:
  - Origin 2000 (through LSF) at NCSA (512 processors)
  - Origin 2000 (through LSF) at Argonne (96 processors)
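
The resources listed above can be tallied with a few lines of Python. Two assumptions are made: the 284 Georgia Tech Linux boxes are counted as one unit each, and the 10.9 years quoted for NUG30 is read as the equivalent single-machine time, so dividing it by 7 days gives only a rough effective speedup.

```python
# Resource counts copied from the slide.
flocked_pools = {
    "Wisconsin": 500,
    "Georgia Tech": 284,   # listed as Linux boxes; counted as one unit each (assumption)
    "UNM": 40,
    "Columbia": 16,
    "Northwestern": 12,
    "NCSA": 65,
    "INFN Italy": 54,
}
glide_ins = {
    "NCSA Origin 2000 (LSF)": 512,
    "Argonne Origin 2000 (LSF)": 96,
}

flocked_total = sum(flocked_pools.values())
glide_in_total = sum(glide_ins.values())
total = flocked_total + glide_in_total

# Rough effective speedup, assuming 10.9 years is the single-machine equivalent time.
DAYS_PER_YEAR = 365.25
speedup = 10.9 * DAYS_PER_YEAR / 7

print(f"flocking: {flocked_total}, glide-in: {glide_in_total}, total: {total}")
print(f"effective speedup: about {speedup:.0f}x")
```

This gives 971 units reached by flocking, 608 glide-in processors (1,579 in total) and an effective speedup of roughly 569. The number of listed resources is not the same as the number of workers active at any given moment.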

23 Problems with Condor flocking “grids”
- Friendly relationships are defined statically.
- Firewalls are not allowed between friendly pools.
- The client cannot choose resources (pools) directly.
- Private (non-standard) “Condor protocols” are used to connect friendly pools together.
- Not service-oriented

24 2nd Generation Grids: Resource-oriented Grids

25 The main goal of 2nd gen. Grids
- To enable a
  - geographically distributed community [of thousands]
  - to perform sophisticated, computationally intensive analyses
  - on large sets (petabytes) of data
- To provide
  - on-demand
  - dynamic resource aggregation
  - as virtual organizations
- Example virtual organizations:
  - Physics community (EDG, EGEE)
  - Climate community, etc.

26 Resource-intensive issues include:
- Harnessing data, storage, computing and network resources located in distinct administrative domains
- Respecting local and global policies governing what can be used for what
- Scheduling resources efficiently, again subject to local and global constraints
- Achieving high performance, with respect to both speed and reliability

27 Grid Protocols, Services and Tools
- Protocol-based access to resources
  - Mask local heterogeneities
  - Negotiate multi-domain security, policy
  - “Grid-enabled” resources speak Grid protocols
  - Multiple implementations are possible
- Broad deployment of protocols facilitates the creation of services that provide an integrated view of distributed resources
- Tools use protocols and services to enable specific classes of applications
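
The point of protocol-based access can be sketched in Python with a `typing.Protocol`: client code is written once against a uniform interface, while each site implements that interface differently on top of its local batch system. The interface name and both site classes are hypothetical, and the methods only build the local command strings (PBS `qsub`, LSF `bsub`) rather than executing them.

```python
from typing import List, Protocol


class GridJobService(Protocol):
    """Hypothetical uniform interface spoken by every Grid-enabled resource."""

    def submit(self, script: str, count: int) -> str: ...


class PBSSite:
    def submit(self, script: str, count: int) -> str:
        # Translate the uniform request into local PBS syntax.
        return f"qsub -l nodes={count} {script}"


class LSFSite:
    def submit(self, script: str, count: int) -> str:
        # Same request, different local resource manager.
        return f"bsub -n {count} {script}"


def submit_everywhere(sites: List[GridJobService], script: str, count: int) -> List[str]:
    # The client never branches on the site type: local heterogeneity is masked.
    return [site.submit(script, count) for site in sites]


for command in submit_everywhere([PBSSite(), LSFSite()], "job.sh", 2):
    print(command)
```

Adding a third kind of site only requires another class with a matching `submit` method; the client loop stays unchanged, which is the "multiple implementations are possible" point of the slide.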

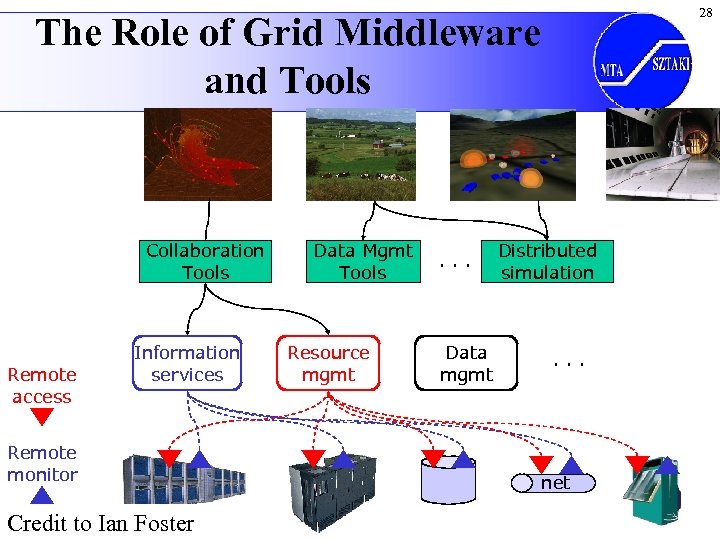

28 The Role of Grid Middleware and Tools (diagram: tools such as collaboration tools, data management tools and distributed simulation build on Grid services such as remote access, remote monitoring, information services, resource management and data management, over the network; credit to Ian Foster)

29 Progress in Grid Systems (same diagram as slide 3, with the 2nd generation, Globus, highlighted)

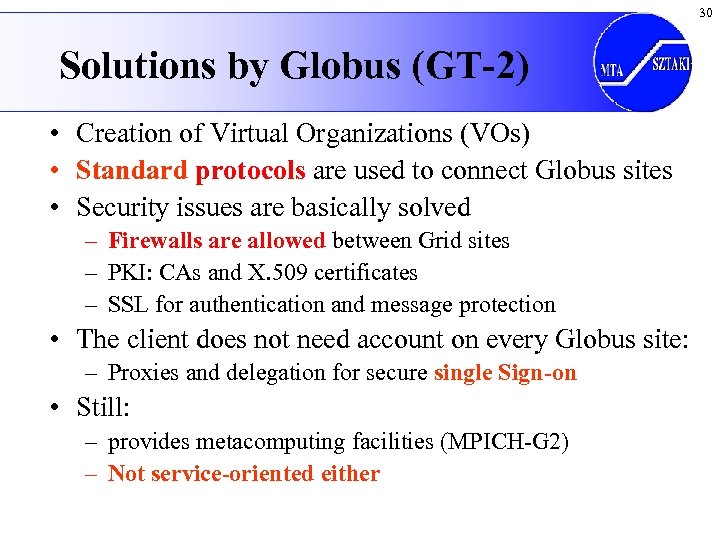

30 Solutions by Globus (GT-2)
- Creation of Virtual Organizations (VOs)
- Standard protocols are used to connect Globus sites
- Security issues are basically solved:
  - Firewalls are allowed between Grid sites
  - PKI: CAs and X.509 certificates
  - SSL for authentication and message protection
- The client does not need an account on every Globus site:
  - Proxies and delegation for secure single sign-on
- Still:
  - provides metacomputing facilities (MPICH-G2)
  - not service-oriented either
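
The idea behind proxies and delegation can be sketched as a certificate chain. The user's long-term certificate is issued by a trusted CA, the user signs a short-lived proxy once at sign-on, and that proxy can sign further proxies that are handed to remote jobs. The Python below is a structural sketch only: it checks issuer/subject links and lifetimes using legacy GT2-style `/CN=proxy` subject names, but it performs no cryptographic signature verification, and all DNs are hypothetical. Real GSI relies on X.509 proxy certificates verified through SSL/TLS libraries.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    not_after: datetime
    is_proxy: bool = False


def make_proxy(parent: Cert, lifetime: timedelta, now: datetime) -> Cert:
    """Issue a short-lived proxy (conceptually signed by the parent's key).

    The proxy's subject extends the parent's, and its lifetime never
    exceeds the parent's."""
    return Cert(
        subject=parent.subject + "/CN=proxy",
        issuer=parent.subject,
        not_after=min(parent.not_after, now + lifetime),
        is_proxy=True,
    )


def chain_ok(chain: List[Cert], trusted_ca: str, now: datetime) -> bool:
    """Structural check of [user cert, proxy, proxy, ...]; signatures are NOT verified."""
    if not chain or chain[0].is_proxy or chain[0].issuer != trusted_ca:
        return False
    for parent, child in zip(chain, chain[1:]):
        if not child.is_proxy or child.issuer != parent.subject:
            return False
        if not child.subject.startswith(parent.subject + "/"):
            return False
        if child.not_after > parent.not_after:
            return False
    return all(cert.not_after > now for cert in chain)


CA = "/O=Grid/CN=Example CA"  # hypothetical names
now = datetime(2006, 1, 1, 9, 0)
user = Cert("/O=Grid/CN=Alice", CA, now + timedelta(days=365))
proxy = make_proxy(user, timedelta(hours=12), now)      # created once at sign-on
delegated = make_proxy(proxy, timedelta(hours=2), now)  # handed to a remote job

print(chain_ok([user, proxy, delegated], CA, now))                        # valid
print(chain_ok([user, proxy, delegated], CA, now + timedelta(hours=13)))  # expired
```

Because every proxy is short-lived and derived from the previous link, a stolen delegated credential is only useful for a limited time, while the user types a passphrase only once.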

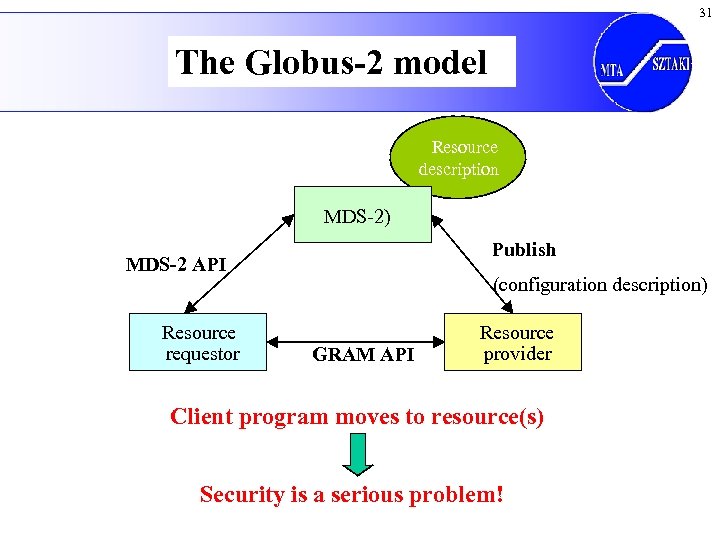

31 The Globus-2 model (diagram)
- The resource provider publishes its resource description (configuration description) to MDS-2
- The resource requestor queries MDS-2 through the MDS-2 API and submits jobs through the GRAM API
- The client program moves to the resource(s)
- Security is a serious problem!

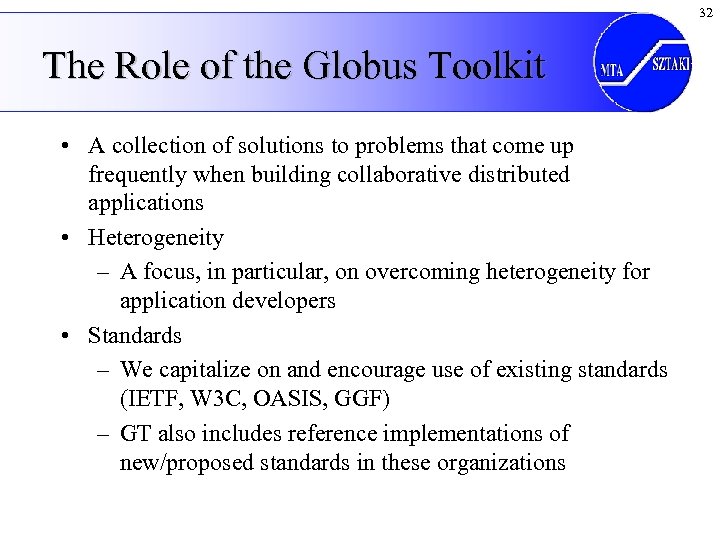

32 The Role of the Globus Toolkit
- A collection of solutions to problems that come up frequently when building collaborative distributed applications
- Heterogeneity
  - A focus, in particular, on overcoming heterogeneity for application developers
- Standards
  - We capitalize on and encourage the use of existing standards (IETF, W3C, OASIS, GGF)
  - GT also includes reference implementations of new/proposed standards in these organizations

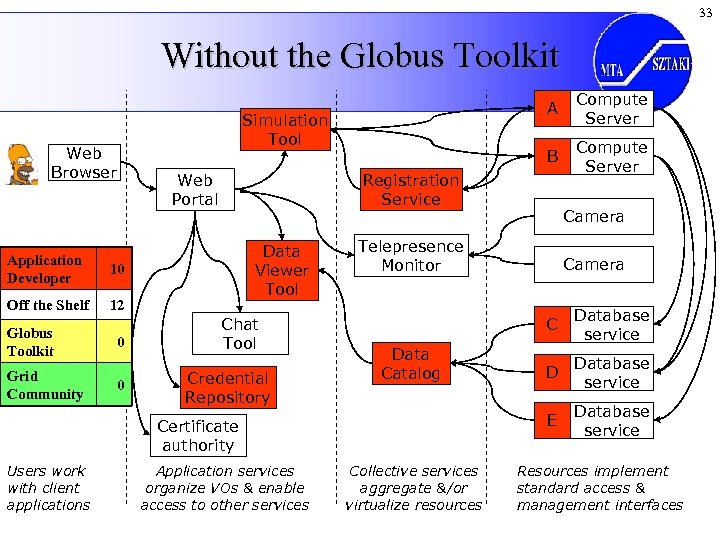

33 Without the Globus Toolkit (diagram)
- Layers: users work with client applications; application services organize VOs & enable access to other services; collective services aggregate &/or virtualize resources; resources implement standard access & management interfaces
- Components shown: web portal, web browser, data viewer tool, chat tool, simulation tool, telepresence monitor, credential repository, registration service, certificate authority, data catalog, compute servers, database services, cameras
- Components by origin: Globus Toolkit 0, Grid community 0; all others come from the application developer or off the shelf

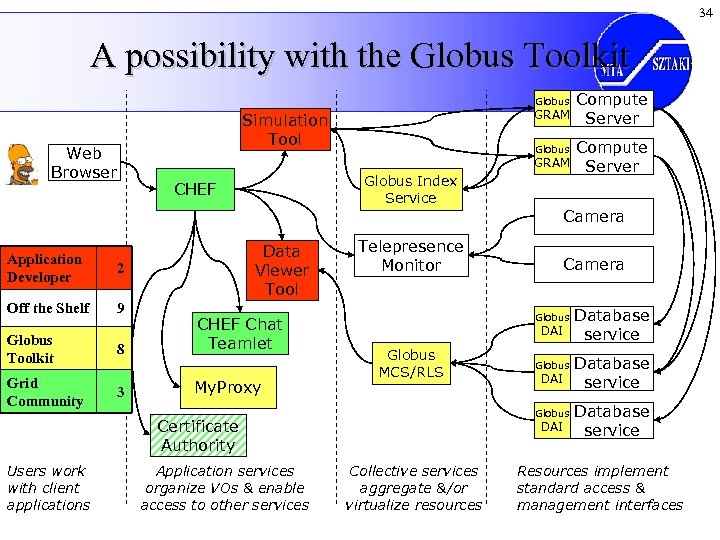

34 A possibility with the Globus Toolkit (diagram)
- Layers: users work with client applications; application services organize VOs & enable access to other services; collective services aggregate &/or virtualize resources; resources implement standard access & management interfaces
- Components shown: web browser, CHEF, CHEF Chat Teamlet, data viewer tool, simulation tool, telepresence monitor, MyProxy, Globus Certificate Authority, Globus Index Service, Globus MCS/RLS, Globus GRAM, Globus DAI, compute servers, database services, cameras
- Components by origin: Application Developer 2, Off the Shelf 9, Globus Toolkit 8, Grid Community 3

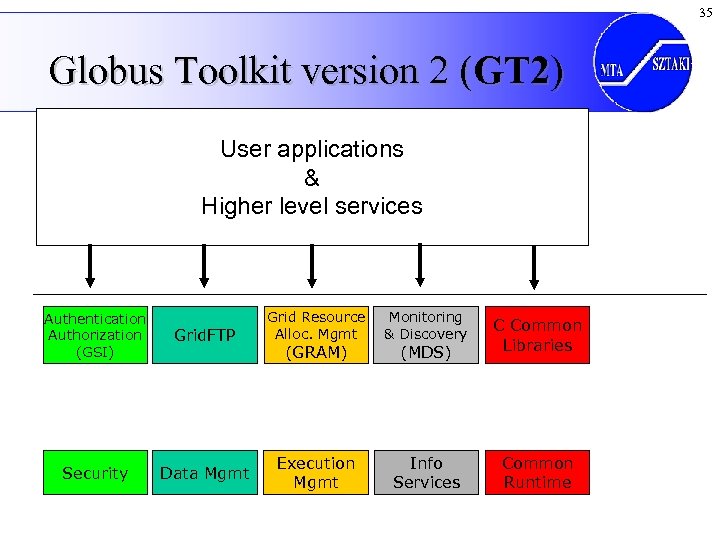

35 Globus Toolkit version 2 (GT2) (layered diagram)
- User applications & higher-level services, built on:
  - Security: Authentication, Authorization (GSI)
  - Data Management: GridFTP
  - Execution Management: Grid Resource Allocation & Management (GRAM)
  - Info Services: Monitoring & Discovery (MDS)
- Common Runtime: C Common Libraries

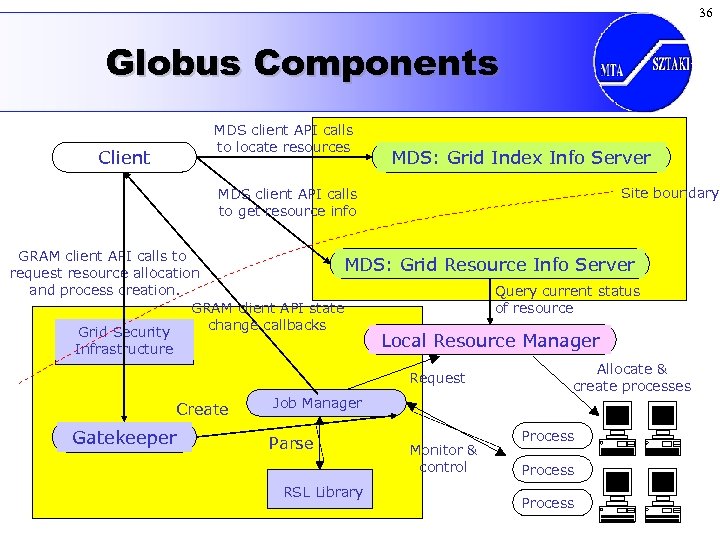

36 Globus Components (diagram)
- The client uses MDS client API calls to locate resources through the MDS Grid Index Info Server, and to get resource information from the Grid Resource Info Server, which queries the current status of the resource
- Across the site boundary, the client uses GRAM client API calls to request resource allocation and process creation; the request goes to the Gatekeeper, secured by the Grid Security Infrastructure
- The Gatekeeper creates a Job Manager, which parses the RSL request (RSL Library), asks the Local Resource Manager to allocate and create processes, and then monitors and controls them
- State changes are reported back to the client through GRAM client API callbacks
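
The Job Manager's RSL-parsing step can be illustrated with a minimal parser for the simplest conjunctive form of a GT2 RSL request, such as `&(executable="/bin/hostname")(count=2)`. This is a sketch covering only a subset, single-valued `attribute=value` relations; full RSL also supports other relational operators, multi-valued relations, multi-requests and variable substitution, none of which are handled here.

```python
import re
from typing import Dict

# One relation: (attribute = value), where value is "quoted" or a bare token.
_RELATION = re.compile(r'\(\s*(\w+)\s*=\s*("([^"]*)"|[^()\s]+)\s*\)')


def parse_simple_rsl(rsl: str) -> Dict[str, str]:
    """Parse a conjunctive request like &(executable="/bin/hostname")(count=2)
    into a dict. Only single-valued '=' relations are supported."""
    text = rsl.strip()
    if not text.startswith("&"):
        raise ValueError("expected a conjunctive request starting with '&'")
    body = text[1:].strip()
    result: Dict[str, str] = {}
    pos = 0
    while pos < len(body):
        m = _RELATION.match(body, pos)
        if m is None:
            raise ValueError(f"cannot parse RSL near: {body[pos:]!r}")
        # Group 3 holds the unquoted text of a quoted value; group 2 a bare token.
        value = m.group(3) if m.group(3) is not None else m.group(2)
        result[m.group(1)] = value
        pos = m.end()
        # Skip whitespace between relations.
        while pos < len(body) and body[pos].isspace():
            pos += 1
    return result


print(parse_simple_rsl('&(executable="/bin/hostname")(count=2)'))
```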

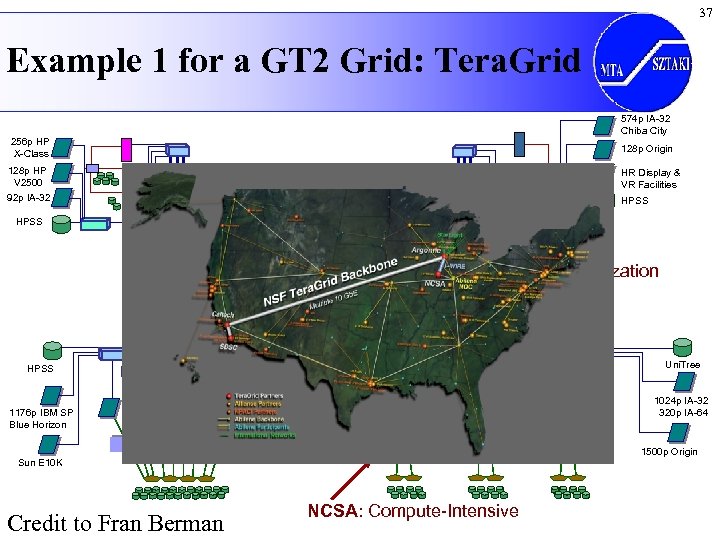

37 Example 1 for a GT2 Grid: TeraGrid (diagram; credit to Fran Berman)
- Caltech: Data collection and analysis applications
- ANL: Visualization
- SDSC: Data-oriented computing
- NCSA: Compute-intensive
- Systems shown: 574p IA-32 Chiba City, 256p HP X-Class, 128p Origin, 128p HP V2500, 92p IA-32, 1024p IA-32, 320p IA-64, 1176p IBM SP Blue Horizon, Sun E10K, 1500p Origin, HR display & VR facilities, HPSS and UniTree storage, Myrinet

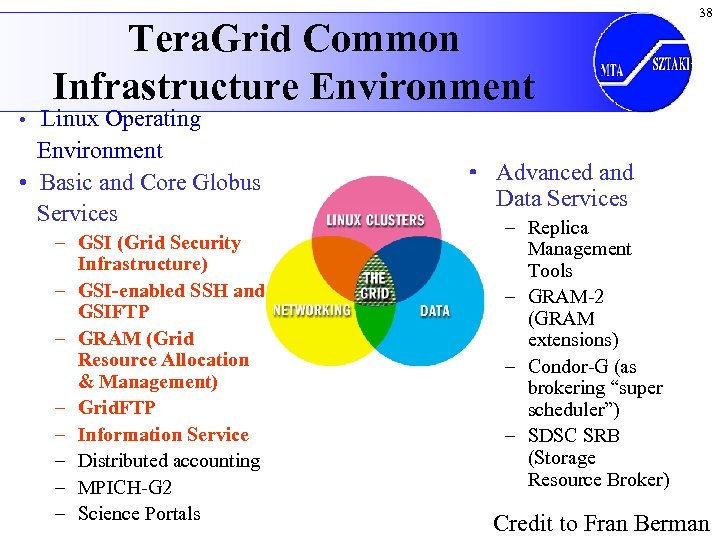

38 TeraGrid Common Infrastructure Environment
- Linux Operating Environment
- Basic and Core Globus Services
  - GSI (Grid Security Infrastructure)
  - GSI-enabled SSH and GSIFTP
  - GRAM (Grid Resource Allocation & Management)
  - GridFTP
  - Information Service
  - Distributed accounting
  - MPICH-G2
  - Science Portals
- Advanced and Data Services
  - Replica Management Tools
  - GRAM-2 (GRAM extensions)
  - Condor-G (as brokering “super scheduler”)
  - SDSC SRB (Storage Resource Broker)

Credit to Fran Berman

39 Example 2 for a GT2 Grid: LHC Grid and LCG-2
- LHC Grid
  - A homogeneous Grid developed by CERN
  - Restrictive policies (global policies overrule local policies)
  - A Grid dedicated to the Large Hadron Collider experiments
- LCG-2
  - A homogeneous Grid developed by CERN and the EDG and EGEE projects
  - Restrictive policies (global policies overrule local policies)
  - A non-dedicated Grid
  - Works 24 hours/day and has been used in EGEE and EGEE-related Grids (SEEGRID, BalticGrid, etc.)

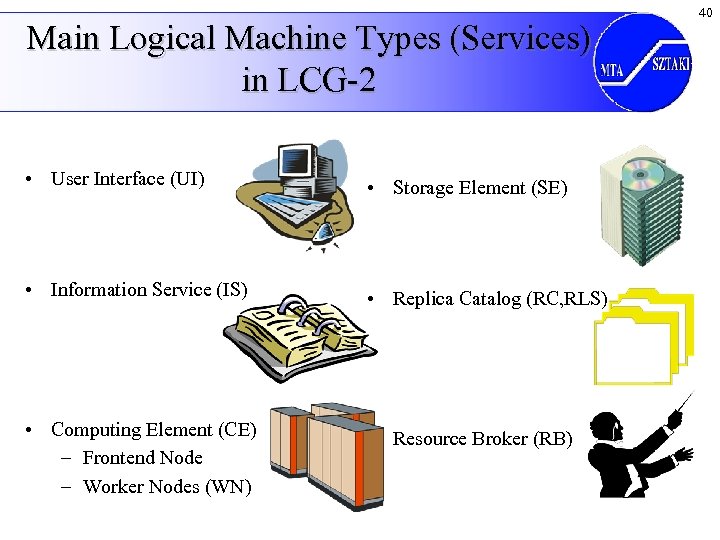

40 Main Logical Machine Types (Services) in LCG-2
- User Interface (UI)
- Storage Element (SE)
- Information Service (IS)
- Replica Catalog (RC, RLS)
- Computing Element (CE)
  - Frontend Node
  - Worker Nodes (WN)
- Resource Broker (RB)
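
How these services might cooperate when a job is submitted can be sketched as follows. The Resource Broker asks the Information Service which Computing Elements have free Worker Nodes, asks the Replica Catalog which Storage Elements hold the job's input file, and prefers a CE close to one of those replicas. The ranking policy, the data structures and all names below are hypothetical; the real LCG-2 broker matches job descriptions (JDL) against information-system data with its own rank expressions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class ComputingElement:
    name: str
    free_worker_nodes: int   # as published in the Information Service
    close_storage: Set[str]  # Storage Elements treated as "close" to this CE


def broker(input_file: str,
           information_service: List[ComputingElement],
           replica_catalog: Dict[str, Set[str]]) -> Optional[str]:
    """Choose a CE: keep CEs with free WNs, prefer those close to a replica of
    the input file, then the one with the most free WNs. None if none fit."""
    replicas = replica_catalog.get(input_file, set())
    candidates = [ce for ce in information_service if ce.free_worker_nodes > 0]
    if not candidates:
        return None
    best = max(candidates,
               key=lambda ce: (bool(ce.close_storage & replicas), ce.free_worker_nodes))
    return best.name


# Hypothetical snapshot of the Grid.
ces = [
    ComputingElement("ce.site-a", 50, {"se.site-a"}),
    ComputingElement("ce.site-b", 10, {"se.site-b"}),
    ComputingElement("ce.site-c", 0, {"se.site-c"}),  # fully busy
]
catalog = {"lfn:/grid/run42.dat": {"se.site-b", "se.site-c"}}

print(broker("lfn:/grid/run42.dat", ces, catalog))  # data locality wins
print(broker("lfn:/grid/other.dat", ces, catalog))  # no known replica: most free WNs
```

Sorting on the tuple `(has_local_replica, free_worker_nodes)` makes data locality the first criterion and load the tie-breaker; a real broker would weigh many more attributes.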

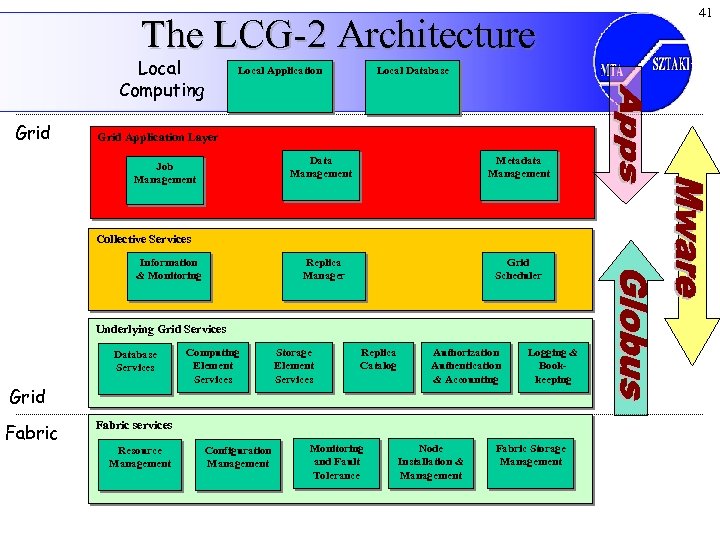

41 The LCG-2 Architecture (layered diagram)
- Local Computing: Local Application, Local Database
- Grid Application Layer: Data Management, Metadata Management, Job Management
- Collective Services: Information & Monitoring, Replica Manager, Grid Scheduler
- Underlying Grid Services: Database Services, Computing Element Services, Storage Element Services, Replica Catalog, Authorization, Authentication & Accounting, Logging & Bookkeeping
- Grid Fabric services: Resource Management, Configuration Management, Monitoring and Fault Tolerance, Node Installation & Management, Fabric Storage Management

42 3rd Generation Grids: Service-oriented Grids

OGSA (Open Grid Services Architecture) and WSRF (Web Services Resource Framework)

43 Progress in Grid Systems (same diagram as slide 3, with the 3rd generation, OGSA/OGSI and OGSA/WSRF, highlighted)

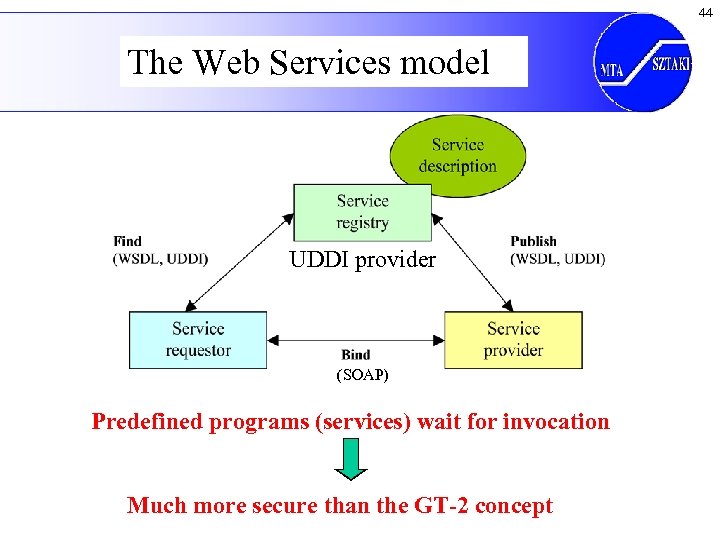

44 The Web Services model (diagram)
- Service providers are registered in UDDI, and clients invoke the services via SOAP
- Predefined programs (services) wait for invocation
- Much more secure than the GT-2 concept
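
In this model an invocation is an XML envelope sent to a predefined service. The sketch below builds a SOAP 1.1 request envelope with Python's standard `xml.etree.ElementTree`. The service namespace `urn:example:jobservice`, the `submitJob` operation and its parameter are hypothetical; in practice they would come from the service's WSDL description found through UDDI. The HTTP transport is omitted.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
ET.register_namespace("soapenv", SOAP_ENV)


def build_soap_request(service_ns: str, operation: str, params: dict) -> bytes:
    """Wrap one operation call and its parameters in a SOAP 1.1 envelope."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{service_ns}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{service_ns}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="utf-8")


request = build_soap_request("urn:example:jobservice", "submitJob",
                             {"executable": "/bin/hostname"})
print(request.decode("utf-8"))
```

Unlike the GT-2 model, nothing here ships a program to the remote site: the request only names an operation that the provider has already deployed.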

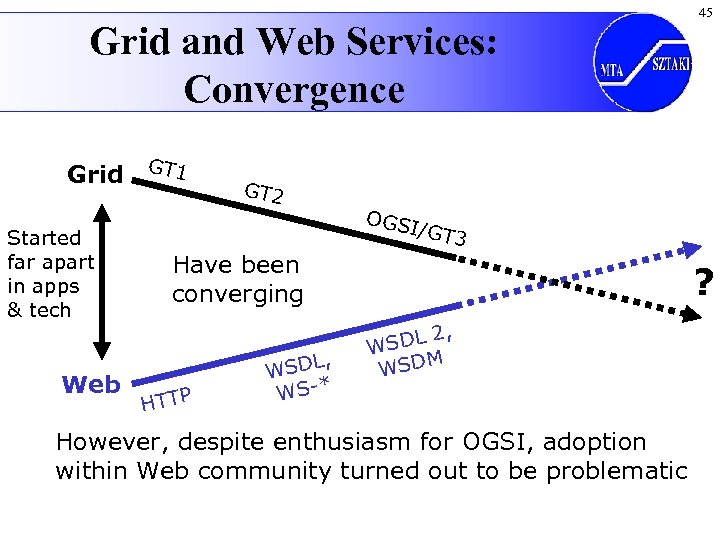

45 Grid and Web Services: Convergence (diagram: the Grid track, GT1, GT2, OGSI/GT3, and the Web track, HTTP, WSDL, WS-*, WSDL 2, WSDM, started far apart in applications and technology and have been converging)

However, despite enthusiasm for OGSI, adoption within the Web community turned out to be problematic.

46 Concerns
- Too much stuff in one specification
- Does not work well with existing Web services tooling
- Too object-oriented

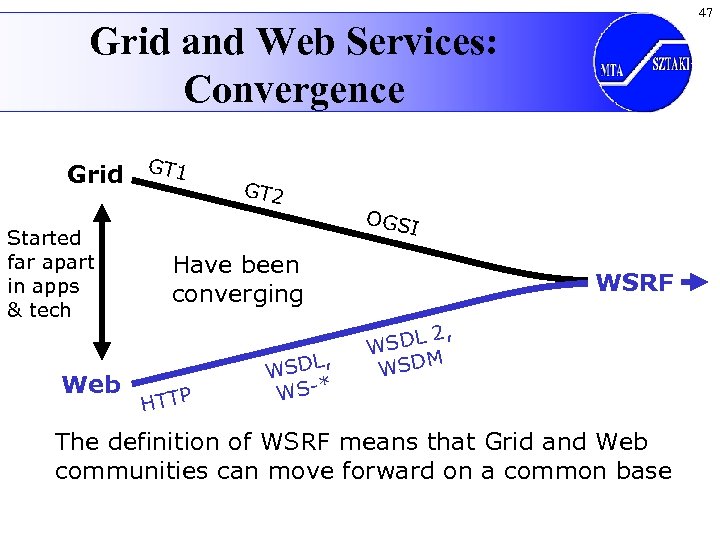

47 Grid and Web Services: Convergence (same diagram as slide 45, with the Grid and Web tracks now meeting at WSRF)

The definition of WSRF means that the Grid and Web communities can move forward on a common base.

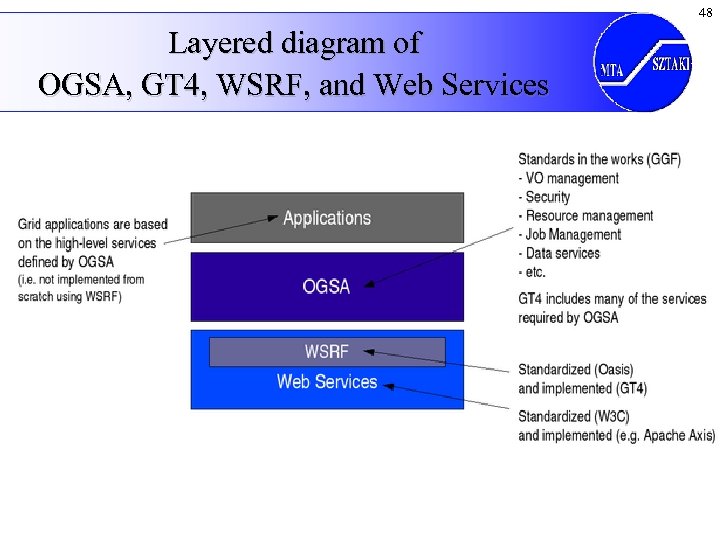

48 Layered diagram of OGSA, GT4, WSRF, and Web Services

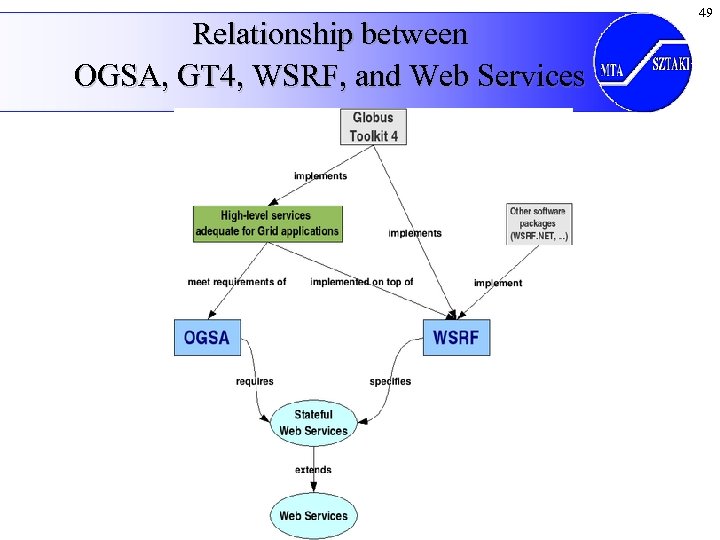

49 Relationship between OGSA, GT4, WSRF, and Web Services

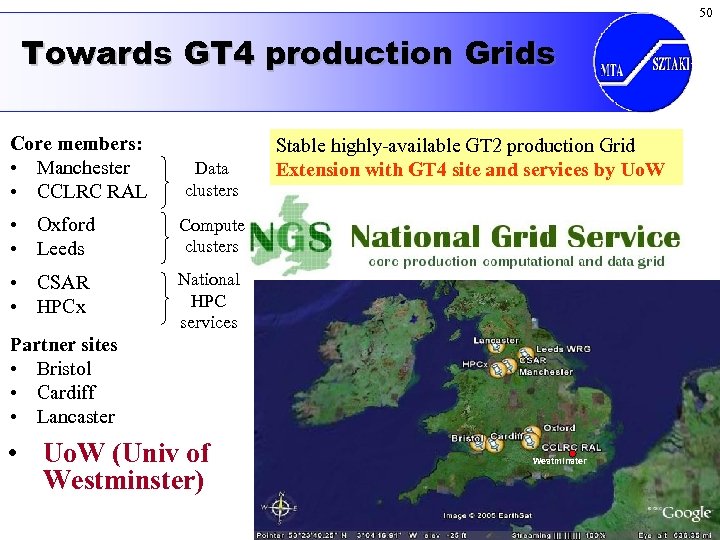

50 Towards GT4 production Grids (diagram)
- Core members: Manchester and CCLRC RAL (data clusters), Oxford and Leeds (compute clusters)
- National HPC services: CSAR, HPCx
- Partner sites: Bristol, Cardiff, Lancaster, UoW (Univ. of Westminster)
- A stable, highly available GT2 production Grid, extended with a GT4 site and services by UoW (Westminster)

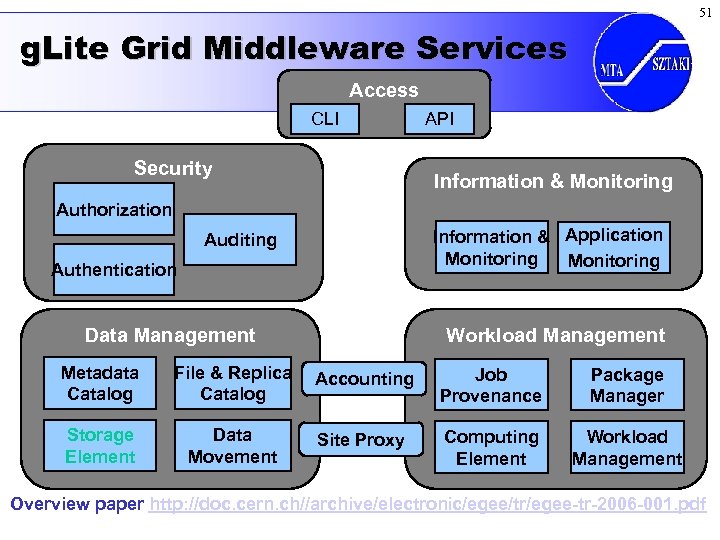

51 gLite Grid Middleware Services (diagram)
- Access: CLI, API
- Security: Authorization, Auditing, Authentication
- Information & Monitoring: Information & Monitoring, Application Monitoring
- Data Management: Metadata Catalog, File & Replica Catalog, Storage Element, Data Movement
- Workload Management: Accounting, Job Provenance, Package Manager, Site Proxy, Computing Element, Workload Management

Overview paper: http://doc.cern.ch//archive/electronic/egee/tr/egee-tr-2006-001.pdf

52 Conclusions
- Fast evolution of Grid systems and middleware:
  - GT1, GT2, OGSA, OGSI, GT3, WSRF, GT4, …
- Current production scientific Grid systems are built on 1st and 2nd gen. Grid technologies
- Enterprise Grid systems are emerging based on the new OGSA and WSRF concepts