b684d84a9829175477ef514b7950d5fe.ppt

- Number of slides: 43

Last update: 13 December 2007

Advanced databases – Inferring implicit/new knowledge from data(bases): Some thoughts about mining and privacy

Bettina Berendt, Katholieke Universiteit Leuven, Department of Computer Science
http://www.cs.kuleuven.be/~berendt/teaching/2007w/adb/

Agenda
- (Some) questions
- (Some) answers
- Outlook

Is this a man or a woman?

[Figure label: “clicked on”]

Is this the same person?

Who is this?

Who is this? (Sample from a search-query log)

Agenda
- (Some) questions
- (Some) answers
- Outlook

Gender prediction I

Data, data preparation and learning
- Blogspot.com: optional demographic annotation (male / female)
- 150,000 blogs:
  - 75,000 male entries / 75,000 female entries
  - size 200–4,000 characters / entry
  - post-processing: quality of automatic “gender separation”
- Naïve Bayes text classifier
  - 140,000 training set / 10,000 test set
- Accuracy: 71% (>> 50% baseline)

[Liu, H. & Mihalcea, R. (2007). Of men, women, and computers: Data-driven gender modeling for improved user interfaces. In Proc. of the International Conference on Weblogs and Social Media]
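The Naive Bayes text classifier on this slide can be sketched as follows. This is a minimal multinomial Naive Bayes with add-one smoothing and a plain whitespace tokenizer, both of which are assumptions; the tiny training set is invented and its words are neutral placeholders, not the characteristic features Liu & Mihalcea found.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lower-case whitespace tokenizer (a simplifying assumption)."""
    return text.lower().split()


def train_nb(docs):
    """docs: list of (text, label) pairs. Returns (priors, word counts per class, vocabulary)."""
    docs_per_class = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        docs_per_class[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    total = sum(docs_per_class.values())
    priors = {c: n / total for c, n in docs_per_class.items()}
    return priors, word_counts, vocab


def predict_nb(model, text):
    """Return the class with the highest log posterior, using add-one smoothing."""
    priors, word_counts, vocab = model
    best_class, best_score = None, -math.inf
    for c, prior in priors.items():
        n_words = sum(word_counts[c].values())
        score = math.log(prior)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[c][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_class, best_score = c, score
    return best_class


def accuracy(model, test_docs):
    """Share of test documents whose predicted label matches the true one."""
    return sum(predict_nb(model, t) == y for t, y in test_docs) / len(test_docs)


# Invented toy data; the words are placeholders, not findings from the paper.
TRAIN = [
    ("apple banana apple", "female"),
    ("banana cherry", "female"),
    ("kiwi lemon kiwi", "male"),
    ("lemon mango", "male"),
]
TEST = [("apple cherry", "female"), ("kiwi mango", "male")]
model = train_nb(TRAIN)
print(accuracy(model, TEST))  # 1.0 on this toy test set
```

On real blog data the same code would be trained on the 140,000 training entries and evaluated on the 10,000 held-out ones; the 50% baseline corresponds to always guessing one class on the balanced data.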

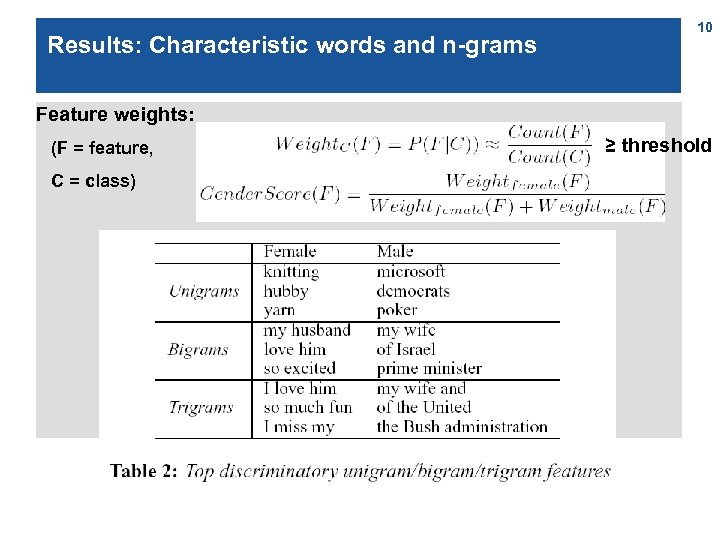

Results: Characteristic words and n-grams

Feature weights (F = feature, C = class), listed if ≥ threshold

Deploying the results – personalization can have different faces (1)

Deploying the results – personalization can have different faces (2)

Gender prediction II

[Figure label: “clicked on”]

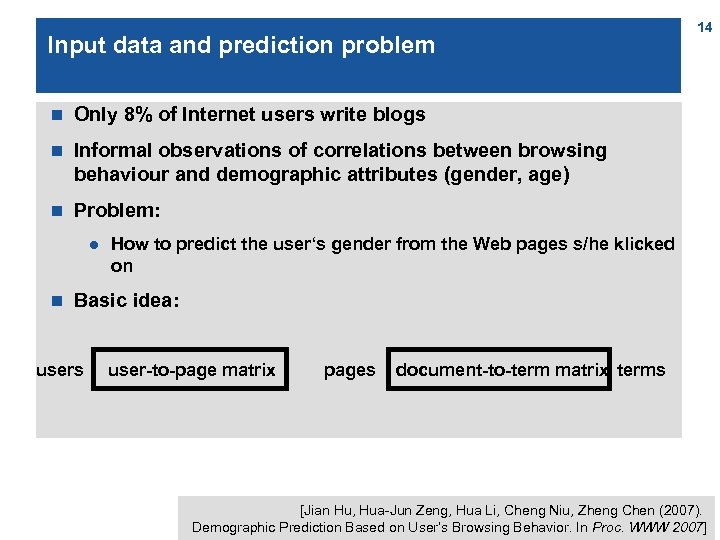

Input data and prediction problem
- Only 8% of Internet users write blogs
- Informal observations of correlations between browsing behaviour and demographic attributes (gender, age)
- Problem:
  - How to predict the user’s gender from the Web pages s/he clicked on?
- Basic idea: users → user-to-page matrix → pages → document-to-term matrix → terms

[Jian Hu, Hua-Jun Zeng, Hua Li, Cheng Niu, Zheng Chen (2007). Demographic Prediction Based on User’s Browsing Behavior. In Proc. WWW 2007]

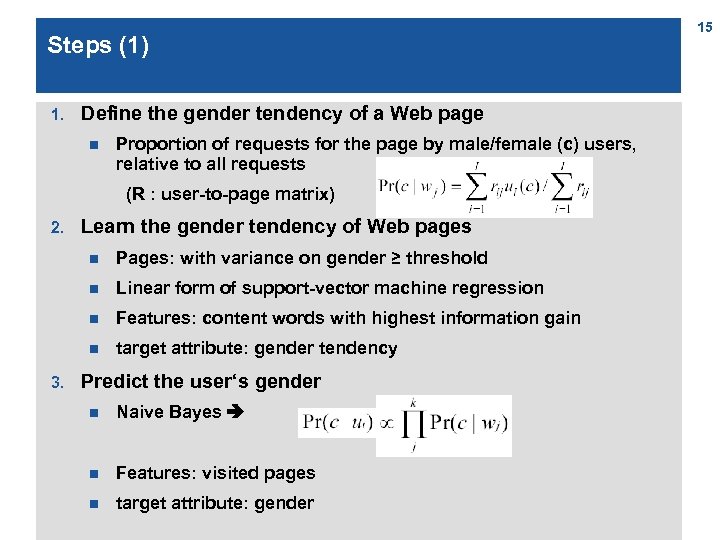

Steps (1)
1. Define the gender tendency of a Web page
   - Proportion of requests for the page by male/female (c) users, relative to all requests (R: user-to-page matrix)
2. Learn the gender tendency of Web pages
   - Linear form of support-vector machine regression
   - Features: content words with highest information gain
   - Pages: with variance on gender ≥ threshold
   - Target attribute: gender tendency
3. Predict the user’s gender
   - Naive Bayes
   - Features: visited pages
   - Target attribute: gender
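Step 1 and a simplified step 3 can be sketched as follows. Following the slide's definition, the tendency of page j is p(male | j) = Σ_{male users i} R_ij / Σ_i R_ij. The sketch skips step 2 (SVR over content words), so it can only score pages that were requested in the training data, and it combines the visited pages' tendencies by summing their log-odds. That is a naive-Bayes-style rule under an assumed equal prior for both genders, not necessarily the paper's exact formula; the clipping constant `eps` is hypothetical.

```python
import math


def gender_tendency(R, genders):
    """Step 1: share of each page's requests that come from male users.

    R[i][j] is the number of requests by user i for page j;
    genders[i] is "male" or "female". Pages nobody requested get None.
    """
    tendency = []
    for j in range(len(R[0])):
        total = sum(row[j] for row in R)
        male = sum(row[j] for row, g in zip(R, genders) if g == "male")
        tendency.append(male / total if total else None)
    return tendency


def predict_user_gender(visited_pages, tendency, eps=0.01):
    """Simplified step 3: sum the log-odds of the visited pages' tendencies.

    Treating pages as independent evidence with equal class priors is a
    naive-Bayes-style assumption; eps clips values away from 0 and 1.
    """
    log_odds = 0.0
    for j in visited_pages:
        p = tendency[j]
        if p is None:
            continue  # no evidence from pages never seen in training
        p = min(max(p, eps), 1 - eps)
        log_odds += math.log(p / (1 - p))
    if log_odds > 0:
        return "male"
    if log_odds < 0:
        return "female"
    return None  # no evidence either way


# Invented toy matrix: 4 users x 3 pages.
R = [[3, 0, 1],
     [2, 0, 0],
     [0, 4, 1],
     [0, 1, 0]]
GENDERS = ["male", "male", "female", "female"]
tendency = gender_tendency(R, GENDERS)
print(tendency)                               # [1.0, 0.0, 0.5]
print(predict_user_gender([0, 2], tendency))  # male
```

A page with tendency 0.5 (like page 2 here) contributes zero log-odds, which mirrors the idea of keeping only pages whose gender tendency varies enough to be informative.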

Steps (2)
4. Optimize the process by leveraging latent semantic structure
   - Apply LSI to the user-to-page matrix
   - Smooth the user-to-page matrix (0 → some small constant)
   - Smooth each predicted value by replacing it by a weighted average of its closest neighbours’ values
   - (and various other optimizations)
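The two smoothing ideas in step 4 can be sketched as follows; LSI itself (a truncated SVD of the user-to-page matrix) is left out to keep the example dependency-free. The constant `delta`, the neighbour count `k` and the use of cosine similarity as the weight are assumptions for illustration, not parameters taken from the paper.

```python
import math


def fill_zeros(R, delta=1e-3):
    """Replace zero entries of the user-to-page matrix by a small constant delta."""
    return [[v if v != 0 else delta for v in row] for row in R]


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def smooth_predictions(vectors, predictions, k=2):
    """Replace each prediction by the similarity-weighted average of the
    predictions of its k most similar other rows."""
    smoothed = []
    for i, v in enumerate(vectors):
        neighbours = sorted(
            ((cosine(v, w), j) for j, w in enumerate(vectors) if j != i),
            reverse=True,
        )[:k]
        weight = sum(s for s, _ in neighbours)
        if weight <= 0:
            smoothed.append(predictions[i])  # no similar neighbour: keep the value
        else:
            smoothed.append(sum(s * predictions[j] for s, j in neighbours) / weight)
    return smoothed


print(fill_zeros([[0, 2], [1, 0]], delta=0.5))  # [[0.5, 2], [1, 0.5]]
print(smooth_predictions([[1, 0], [1, 0], [0, 1]], [0.9, 0.7, 0.1], k=1))
# [0.7, 0.9, 0.1]
```

The third row has no similar neighbour, so its prediction is kept unchanged rather than averaged with unrelated rows.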

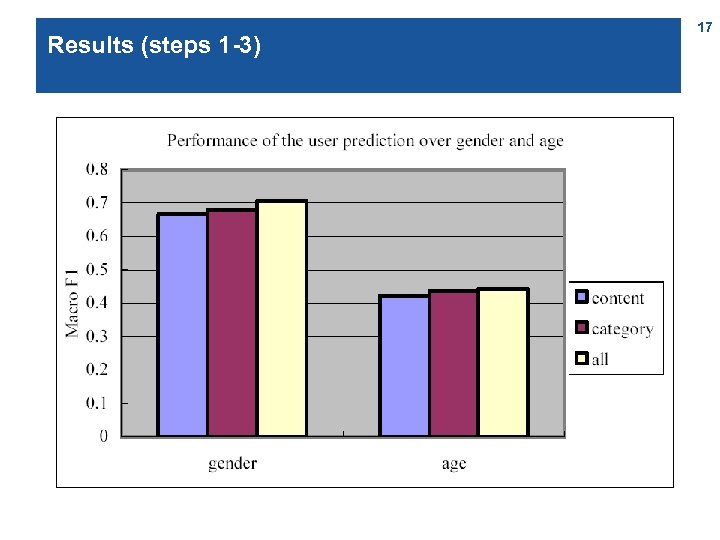

Results (steps 1–3)

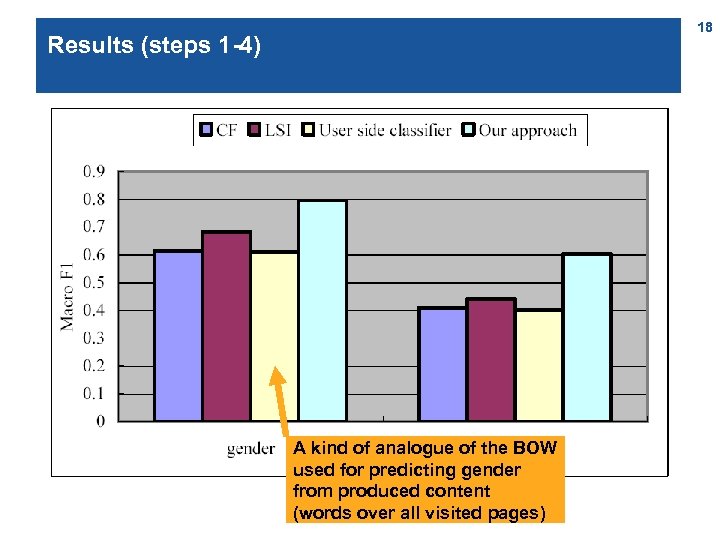

Results (steps 1–4)

A kind of analogue of the bag of words (BOW) used for predicting gender from produced content: here, the words over all visited pages.

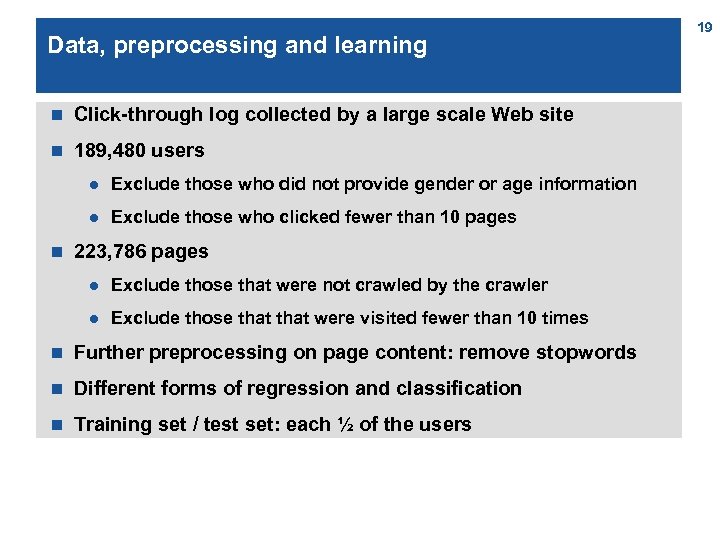

Data, preprocessing and learning
- Click-through log collected by a large-scale Web site
- 189,480 users
  - Exclude those who did not provide gender or age information
  - Exclude those who clicked fewer than 10 pages
- 223,786 pages
  - Exclude those that were not crawled by the crawler
  - Exclude those that were visited fewer than 10 times
- Further preprocessing on page content: remove stopwords
- Different forms of regression and classification
- Training set / test set: half of the users each
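The filtering rules above can be sketched as a single pass over a click log. The input layout (a list of (user, page) pairs, a profile dictionary, a set of crawled pages), the order in which the filters are applied, and the tiny stopword list are assumptions; the paper does not necessarily apply the filters this way.

```python
import random
from collections import Counter, defaultdict

STOPWORDS = {"a", "and", "in", "of", "the", "to"}  # tiny illustrative list


def filter_log(clicks, profiles, crawled, min_pages=10, min_visits=10):
    """clicks: list of (user, page) pairs; profiles: user -> dict that may hold
    "gender" and "age"; crawled: set of pages whose content was crawled."""
    pages_per_user = defaultdict(set)
    for user, page in clicks:
        pages_per_user[user].add(page)
    # Users: must have given gender and age and clicked >= min_pages distinct pages.
    users = {
        u for u, pages in pages_per_user.items()
        if profiles.get(u, {}).get("gender") is not None
        and profiles.get(u, {}).get("age") is not None
        and len(pages) >= min_pages
    }
    # Pages: must have been crawled and visited >= min_visits times in the log.
    visits = Counter(page for _, page in clicks)
    pages = {p for p, n in visits.items() if p in crawled and n >= min_visits}
    return [(u, p) for u, p in clicks if u in users and p in pages]


def remove_stopwords(text):
    """Lower-case, split on whitespace and drop stopwords."""
    return [w for w in text.lower().split() if w not in STOPWORDS]


def split_users(users, seed=0):
    """Random split of the users into two halves (training set, test set)."""
    shuffled = sorted(users)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return set(shuffled[:half]), set(shuffled[half:])


# Invented toy log; the thresholds are lowered from 10 to 2 for the example.
CLICKS = [("u1", "p1"), ("u1", "p2"), ("u2", "p1"), ("u3", "p1"), ("u3", "p2")]
PROFILES = {"u1": {"gender": "f", "age": 30}, "u2": {"gender": "m", "age": 40},
            "u3": {"age": 25}}
print(filter_log(CLICKS, PROFILES, {"p1", "p2"}, min_pages=2, min_visits=2))
# [('u1', 'p1'), ('u1', 'p2')]
print(remove_stopwords("The impact of data"))  # ['impact', 'data']
```

Here u2 is dropped for clicking too few pages and u3 for missing gender information; page visit counts are taken over the whole log before the user filter, which is one of the assumed choices.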

Re-identification

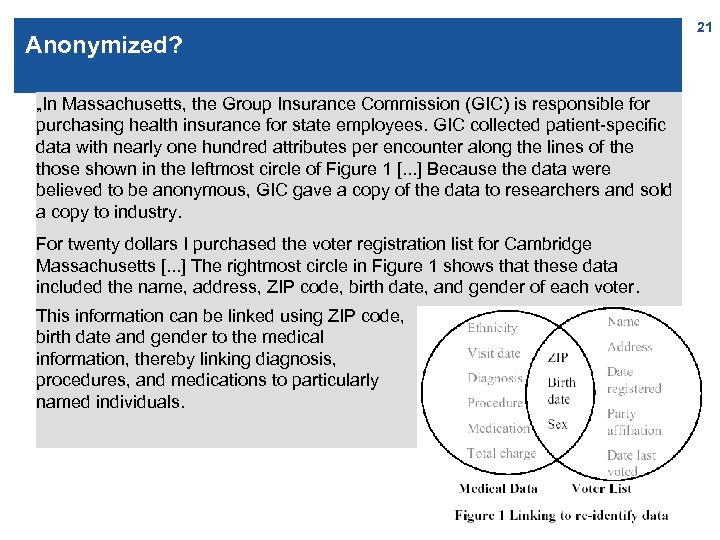

Anonymized?

“In Massachusetts, the Group Insurance Commission (GIC) is responsible for purchasing health insurance for state employees. GIC collected patient-specific data with nearly one hundred attributes per encounter along the lines of the those shown in the leftmost circle of Figure 1 [...] Because the data were believed to be anonymous, GIC gave a copy of the data to researchers and sold a copy to industry. For twenty dollars I purchased the voter registration list for Cambridge Massachusetts [...] The rightmost circle in Figure 1 shows that these data included the name, address, ZIP code, birth date, and gender of each voter. This information can be linked using ZIP code, birth date and gender to the medical information, thereby linking diagnosis, procedures, and medications to particularly named individuals.

![image 22](https://present5.com/presentation/b684d84a9829175477ef514b7950d5fe/image-22.jpg)

Results

“For example, [...] Governor Weld lived in Cambridge Massachusetts. According to the Cambridge Voter list, six people had his particular birth date; only three of them were men; and, he was the only one in his 5-digit ZIP code.”

“87% of the population in the United States had reported characteristics that likely made them unique based only on {5-digit ZIP, gender, date of birth}.”

- Based on that, Sweeney defined k-anonymity:
  - A relational table is k-anonymous if every sequence of values for an attribute set that can re-identify (i.e., that can be used for linking) occurs in at least k records.

L. Sweeney. k-anonymity: a model for protecting privacy. International Journal on Uncertainty, Fuzziness and Knowledge-based Systems, 10(5), 2002, 557–570.
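Sweeney's definition translates directly into a check over the quasi-identifier columns (the attributes that can be used for linking): group the rows by their quasi-identifier values and verify that every group has at least k members. The toy records and the generalization rule (truncating ZIP codes to three digits and birth dates to the year) are invented for illustration; they are not Sweeney's algorithm for achieving k-anonymity.

```python
from collections import Counter


def anonymity_level(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[a] for a in quasi_identifiers) for row in rows)
    return min(groups.values())


def is_k_anonymous(rows, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs in >= k rows."""
    return anonymity_level(rows, quasi_identifiers) >= k


def generalize(row):
    """Illustrative generalization: 3-digit ZIP prefix and birth year only."""
    return {**row, "zip": row["zip"][:3] + "**", "dob": row["dob"][:4]}


QI = ("zip", "dob", "sex")
ROWS = [  # invented records
    {"zip": "02138", "dob": "1950-01-15", "sex": "M", "diagnosis": "d1"},
    {"zip": "02139", "dob": "1950-06-02", "sex": "M", "diagnosis": "d2"},
    {"zip": "02141", "dob": "1962-11-20", "sex": "F", "diagnosis": "d3"},
    {"zip": "02142", "dob": "1962-04-05", "sex": "F", "diagnosis": "d4"},
]
print(is_k_anonymous(ROWS, QI, 2))                           # False
print(is_k_anonymous([generalize(r) for r in ROWS], QI, 2))  # True
```

In the raw table every {ZIP, birth date, sex} combination is unique (1-anonymous), which is exactly the situation the 87% figure describes; coarser values make the groups larger at the cost of precision.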

Data and data analysis
- Medical records on 135,000 state employees and their families
- The census data
- Matching on shared attributes
- (Note: This matching is generally not considered to be a data mining technique. However, k-anonymity has become an important goal of privacy-preserving data mining techniques.)
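The “matching on shared attributes” is essentially a join. A minimal sketch, assuming both tables are lists of dictionaries with hypothetical `zip`, `dob` and `sex` keys: a medical record counts as re-identified when its key combination matches exactly one voter. All records are invented.

```python
def link(medical, voters, keys=("zip", "dob", "sex")):
    """Join two tables on shared attributes; return (name, diagnosis) pairs
    for medical records whose key values match exactly one voter."""
    index = {}
    for voter in voters:
        index.setdefault(tuple(voter[k] for k in keys), []).append(voter)
    linked = []
    for record in medical:
        matches = index.get(tuple(record[k] for k in keys), [])
        if len(matches) == 1:  # unique match: the record is re-identified
            linked.append((matches[0]["name"], record["diagnosis"]))
    return linked


MEDICAL = [  # "anonymized": no names, but ZIP, birth date and sex are kept
    {"zip": "02138", "dob": "1950-01-15", "sex": "M", "diagnosis": "d1"},
    {"zip": "02139", "dob": "1960-02-02", "sex": "F", "diagnosis": "d2"},
]
VOTERS = [
    {"name": "Voter A", "zip": "02138", "dob": "1950-01-15", "sex": "M"},
    {"name": "Voter B", "zip": "02139", "dob": "1960-02-02", "sex": "F"},
    {"name": "Voter C", "zip": "02139", "dob": "1960-02-02", "sex": "F"},
]
print(link(MEDICAL, VOTERS))  # [('Voter A', 'd1')]
```

The second medical record matches two voters and therefore stays ambiguous; this is the protection that k-anonymity with k ≥ 2 is meant to guarantee for every record.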

Merging identities

Keeping identities apart – the basic setting
- Paper published by the MovieLens team (collaborative-filtering movie ratings), who were considering publishing a ratings dataset; see http://movielens.umn.edu/
- Public dataset: users mention films in forum posts
- Private dataset (may be released, e.g., for research purposes): users’ ratings
- Film IDs can easily be extracted from the posts
- Observation: Every user will talk about items from a sparse relation space (those – generally few – films s/he has seen)

[Frankowski, D., Cosley, D., Sen, S., Terveen, L., & Riedl, J. (2006). You are what you say: Privacy risks of public mentions. In Proc. SIGIR ’06]

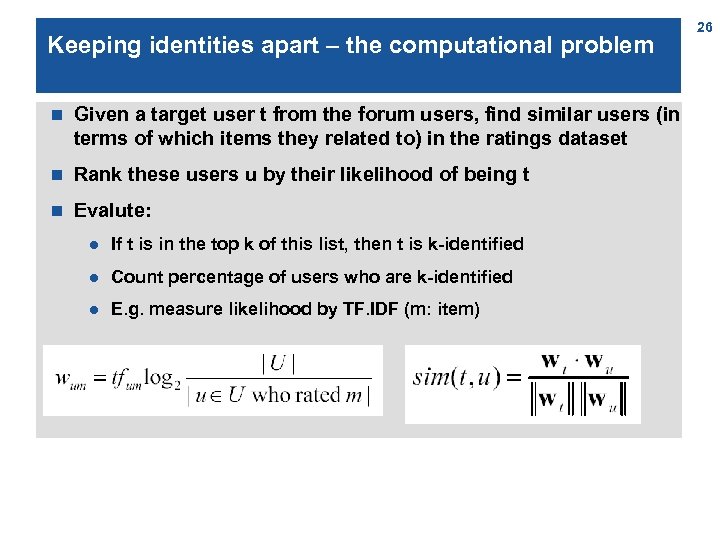

Keeping identities apart – the computational problem
- Given a target user t from the forum users, find similar users (in terms of which items they relate to) in the ratings dataset
- Rank these users u by their likelihood of being t
- Evaluate:
  - If t is in the top k of this list, then t is k-identified
  - Count the percentage of users who are k-identified
  - E.g., measure likelihood by TF.IDF (m: item)
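The ranking and evaluation steps can be sketched as follows. The paper's exact TF.IDF formula is not transcribed here, so the score below is an assumed IDF-style weighting: each item m shared by t and u contributes log(N / df(m)), where N is the number of users in the ratings dataset and df(m) the number of users who rated m, so rare films count more than popular ones. All data are invented.

```python
import math


def rank_candidates(target_items, ratings):
    """ratings: dict user -> set of rated items. Rank users by the summed IDF
    weight log(N / df(m)) of the items they share with the target."""
    n_users = len(ratings)
    df = {}
    for items in ratings.values():
        for m in items:
            df[m] = df.get(m, 0) + 1
    scores = {
        u: sum(math.log(n_users / df[m]) for m in target_items & items)
        for u, items in ratings.items()
    }
    # Highest score first; ties broken by user id so the ranking is deterministic.
    return sorted(scores, key=lambda u: (-scores[u], u))


def k_identified_share(mentions, ratings, truth, k):
    """mentions: forum user -> set of mentioned items; truth: forum user ->
    that person's id in the ratings data. Share of forum users in the top k."""
    hits = sum(
        truth[t] in rank_candidates(items, ratings)[:k]
        for t, items in mentions.items()
    )
    return hits / len(mentions)


RATINGS = {"u1": {"m1", "m2", "m3"}, "u2": {"m1", "m4"}, "u3": {"m1", "m2"}}
MENTIONS = {"f1": {"m1", "m3"}, "f2": {"m1"}}  # invented forum mentions
TRUTH = {"f1": "u1", "f2": "u3"}
print(rank_candidates(MENTIONS["f1"], RATINGS))        # ['u1', 'u2', 'u3']
print(k_identified_share(MENTIONS, RATINGS, TRUTH, 1))  # 0.5
```

Forum user f1 is 1-identified because the rare film m3 singles out u1, while f2 only mentions a film everybody rated and stays hidden; this illustrates why mentions from the sparse tail of the item space are the dangerous ones.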

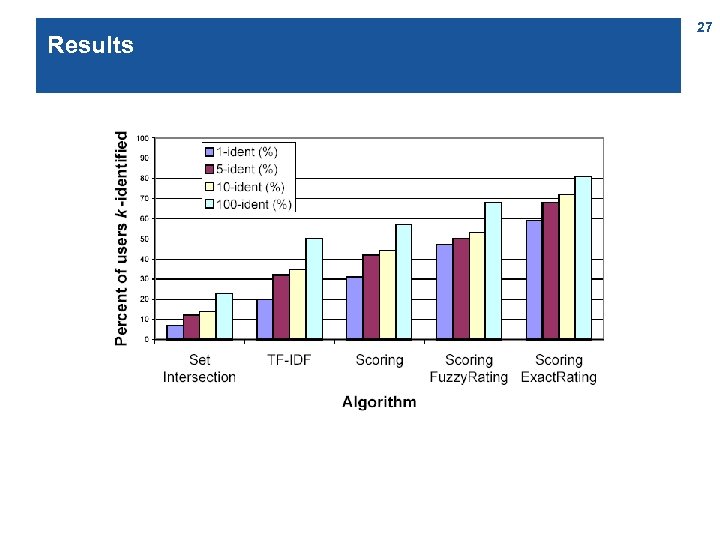

Results

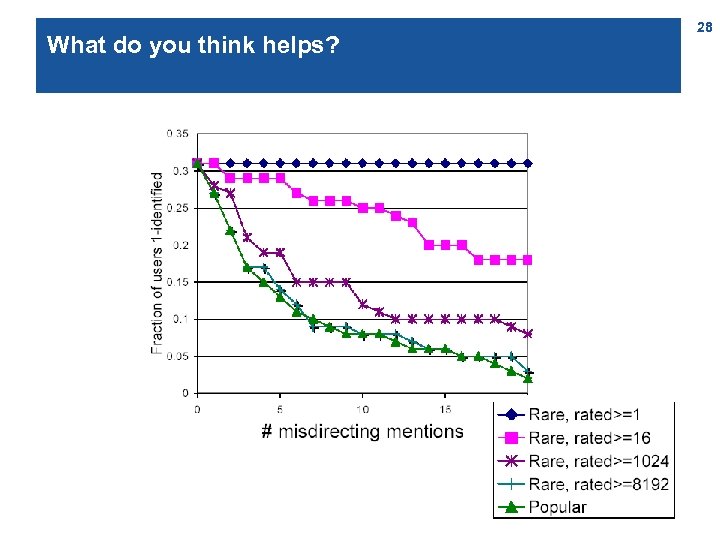

What do you think helps?

Data and data analysis
- 3,828 movie references made by 133 forum posters about 1,685 different movies
- 12,565,530 ratings from 140,132 users, on 8,957 items
- Extraction of movie references from the posts
- Different algorithms for finding “similar” users

Re-identification

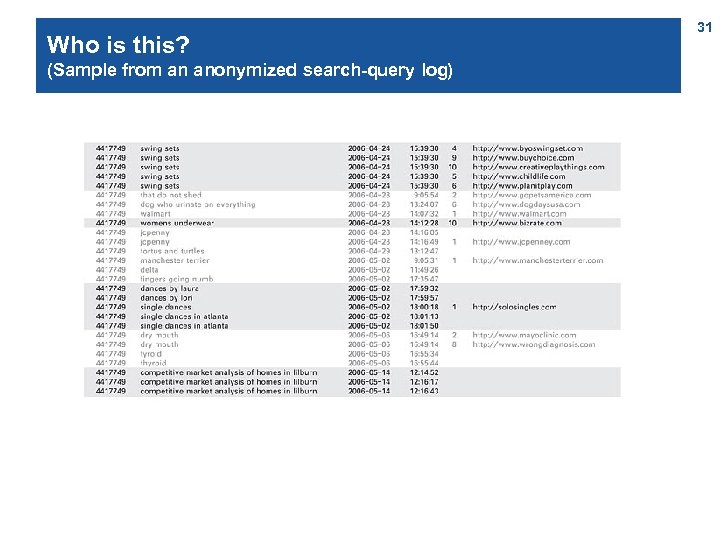

Who is this? (Sample from an anonymized search-query log)

Result (a 1-identified person)

[M. Barbaro and T. Zeller. A face is exposed for AOL Searcher No. 4417749. New York Times, 9 August 2006]

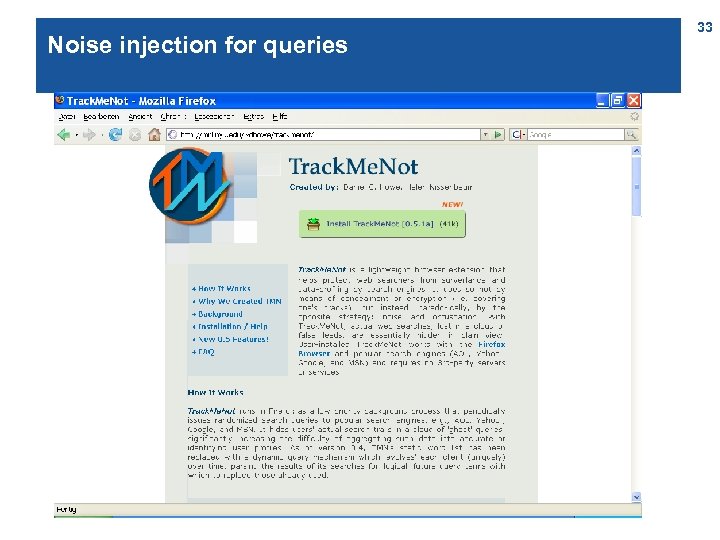

Noise injection for queries
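The slide's content is not transcribed, but the general idea of noise injection can be illustrated: each real query is sent together with decoy queries in random order, so that the log no longer shows which queries express the user's interests. This is a minimal sketch of one assumed design (decoys drawn at random from a fixed pool); the function name, parameters and queries are hypothetical.

```python
import random


def inject_noise(real_queries, decoy_pool, decoys_per_query=3, seed=None):
    """Send each real query together with decoys drawn from decoy_pool,
    shuffled so an observer of the log cannot tell which one is real."""
    rng = random.Random(seed)
    stream = []
    for query in real_queries:
        batch = [query] + rng.sample(decoy_pool, decoys_per_query)
        rng.shuffle(batch)
        stream.extend(batch)
    return stream


POOL = ["weather tomorrow", "pasta recipe", "train times", "football scores"]
print(inject_noise(["symptoms of flu"], POOL, decoys_per_query=2, seed=1))
```

With d decoys per query, an observer guessing at random picks the real query with probability 1/(d+1).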

Agenda
- (Some) questions
- (Some) answers
- Outlook

Trends in Web mining
- More data types (e.g., multimedia)
- More varied semantics (e.g., social networks: documents, people, ...)
- More action types (usage becomes reading and writing) and richer data (texts instead of queries)
- Ubiquity
  - Of data and devices (e.g., mobile)
  - Of people
- Privacy-preserving data mining
- More users of KD

Other uses of data mining / KD: Policing

Rodney Munroe, chief of the Richmond, Va. police service, accepted the 2007 Business Intelligence Award for Excellence from Gartner, a leading information technology research and advisory company [...]. The award usually goes to innovative business applications, but judges were impressed with how Munroe turned analytics into a formidable crime-fighting tool. When Munroe took over as chief two years ago, his department was drowning in crime and data. Police had a mass of data from 911 calls and crime reports; what they didn’t have was a way to connect the dots and see a pattern of behaviour. Using some sophisticated software and hardware they started overlaying crime reports with other data, such as weather, traffic, sports events and paydays for large employers. The data was analyzed three times a day and something interesting emerged: Robberies spiked on paydays near cheque cashing storefronts in specific neighbourhoods. Other clusters also became apparent, and pretty soon police were deploying resources in advance and predicting where crime was most likely to occur.

Ian Harvey. Fighting crime with databases. Aug. 6, 2007. http://www.cbc.ca/news/background/tech/data-mining.html

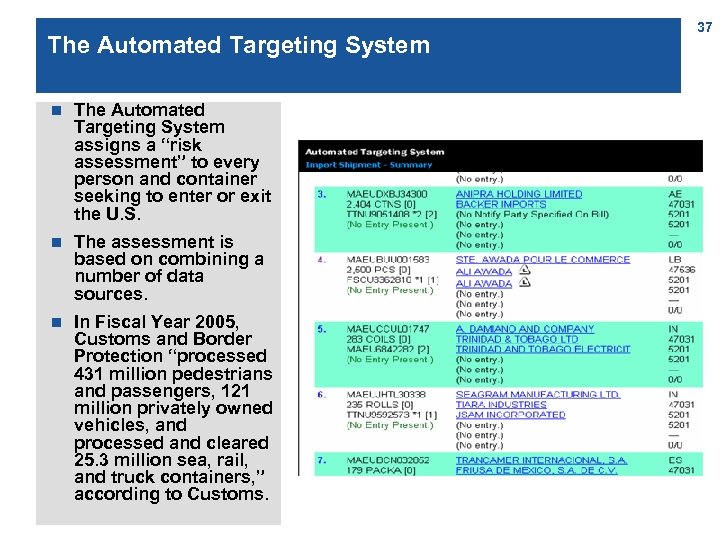

The Automated Targeting System
- The Automated Targeting System assigns a “risk assessment” to every person and container seeking to enter or exit the U.S.
- The assessment is based on combining a number of data sources.
- In Fiscal Year 2005, Customs and Border Protection “processed 431 million pedestrians and passengers, 121 million privately owned vehicles, and processed and cleared 25.3 million sea, rail, and truck containers,” according to Customs.

Department of Homeland Security – announcement of changes to the ATS, 7 August 2007

“ATS assists U.S. Customs and Border Protection (CBP) frontline officers in frustrating the ability of terrorists to gain entry into the United States, enforcing all import and export laws, and facilitating legitimate trade and travel across our borders. [...] the department received several hundred comments [...], many of which concerned ATS-P, the passenger screening module used by CBP officers. The department responded to these comments by revising the SORN. Notable revisions to the SORN include:

- ATS-P will retain the information for a far shorter period of time. Under the revised SORN, the retention period is 15 years (7 years active and 8 years dormant), a significant decrease from the proposed 40-year period.
- Under ATS-P, the purposes for which Passenger Name Record data (PNR) may be used have been narrowed.
- The updated SORN implements the department’s mixed system policy, which administratively extends the protections of the Privacy Act of 1974 to non-U.S. persons by providing access and redress to their PNR data.

As well, ATS-P treats all passengers equally. ATS does not profile by race, ethnicity or arbitrary assumptions. The department does not collect information on race, ethnicity, religion, or orientation, or make decisions based on such information, and to the extent such information may be provided by a carrier, the department filters that information out. Further, ATS-P does not use a score to determine an individual’s risk level. Rather, ATS-P compares PNR and Advanced Passenger Information System data with law enforcement records and threat-based scenarios for use by law enforcement officials to intercept high-risk travelers, identify persons of concern, and identify patterns of suspicious activity, which may be used to identify other high risk travelers previously unknown to law enforcement. The scenarios are drawn from previous and current law enforcement and intelligence information. Importantly, ATS does not replace human decision making. It is a decision-making support tool for use by trained law enforcement officials. [...]”

http://www.dhs.gov/xnews/releases/pr_1186178812301.shtm

“My data belong to me”?! – or: my mined profile, part 2; or: external effects I

“My data belong to me”?! – or: external effects II
- Friendship is generally symmetric.
- If A wants to hide her friendships, but B shows that “A is my friend”, then B has disclosed private information of A.
- (More elaborate problems follow from this ...)

For a discussion, see Preibusch, S., Hoser, B., Gürses, S., & Berendt, B. (2007). Ubiquitous social networks – opportunities and challenges for privacy-aware user modelling. In Proceedings of the Workshop on Data Mining for User Modelling at UM 2007, Corfu, Greece, June 2007.
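The external effect can be made concrete: because friendship is symmetric, A's hidden friend list can be partly reconstructed from other users' public lists. A minimal sketch with invented users; the function name is hypothetical.

```python
def inferred_friends(person, public_lists):
    """Friendship is symmetric: everyone who publicly lists `person` as a
    friend reveals that friendship, even if `person` hides her own list."""
    return {
        other for other, friends in public_lists.items()
        if person in friends and other != person
    }


# A hides her list; B, C and D publish theirs (invented example).
PUBLIC_LISTS = {"B": {"A", "C"}, "C": {"B"}, "D": {"A"}}
print(sorted(inferred_friends("A", PUBLIC_LISTS)))  # ['B', 'D']
```

A's own privacy setting plays no role in this computation: only the choices of B and D determine what is disclosed about her.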

Scope for action for you!
- as a researcher
- as a netizen
- as a citizen

Next lecture: Application example – What is the impact of genetically modified organisms?

References and background reading
- Liu, H. & Mihalcea, R. (2007). Of men, women, and computers: Data-driven gender modeling for improved user interfaces. In Proc. of the International Conference on Weblogs and Social Media. http://www.icwsm.org/papers/paper3.html
- Hu, J., Zeng, H.-J., Li, H., Niu, C., & Chen, Z. (2007). Demographic Prediction Based on User’s Browsing Behavior. In Proc. WWW 2007, May 8–12, 2007, Banff, Alberta, Canada. http://www2007.org/papers/paper686.pdf
- Sweeney, L. (2002). k-anonymity: a model for protecting privacy. International Journal on Uncertainty, Fuzziness and Knowledge-based Systems, 10(5), 557–570. http://privacy.cs.cmu.edu/people/sweeney/kanonymity.html
- Frankowski, D., Cosley, D., Sen, S., Terveen, L. G., & Riedl, J. (2006). You are what you say: privacy risks of public mentions. In SIGIR 2006: Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, Washington, USA, August 6–11, 2006 (pp. 565–572). ACM. http://www-users.cs.umn.edu/~dfrankow/files/privacy-sigir2006.pdf
- Barbaro, M., & Zeller, T. (2006). A face is exposed for AOL searcher no. 4417749. New York Times, 9 August 2006. http://www.nytimes.com/2006/08/09/technology/09aol.html
- Jonas, J., & Harper, J. (2006). Effective Counterterrorism and the Limited Role of Predictive Data Mining. Policy Analysis No. 584, Dec. 11, 2006. http://www.cato.org/pubs/pa584.pdf
