360a8585ab63cc66a20c7c0cae7de250.ppt

- Number of slides: 21

1. Describe how the techniques described in ICS 207 could be used to spot students who cheat on take-home tests. What enhancements would be necessary if, instead of copying word for word, students replaced some words with synonyms? Discuss the precision/recall trade-off in this case. If the penalty for copying were severe (e.g., expulsion from the university rather than no credit for the copied work), would that affect how the system might work?

• Store each answer to each question. (To improve precision, create a separate index per question.)
• Compute TF-IDF weights of terms.
  – Treat each answer as a query and find the most similar answers using cosine similarity.
  – Return the N most similar pairs, or all pairs above a certain similarity threshold.
  – (Or cluster the documents.)
• Have the professor or TA judge the results. High recall catches all cheaters but raises many false alarms. High precision raises few false alarms but lets more cheaters escape punishment.
• Severe punishment may decrease the probability of cheating, but it also increases the importance of not having a false alarm.
• Consider using LSA or a thesaurus to deal with synonyms, but be careful. Consider using phrases of 2-3 words.
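The pairwise comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: whitespace tokenization, raw term frequency times log inverse document frequency as the TF-IDF variant, and a hypothetical `threshold` of 0.8. The function names are made up for this sketch and are not from the course material.

```python
import math
from collections import Counter
from itertools import combinations


def tfidf_vectors(answers):
    """Map each answer (a string) to a dict of term -> TF-IDF weight."""
    tokenized = [a.lower().split() for a in answers]
    n_docs = len(tokenized)
    # Document frequency: number of answers that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def suspicious_pairs(answers, threshold=0.8):
    """Return (i, j, similarity) for answer pairs at or above the threshold."""
    vectors = tfidf_vectors(answers)
    pairs = []
    for i, j in combinations(range(len(answers)), 2):
        sim = cosine(vectors[i], vectors[j])
        if sim >= threshold:
            pairs.append((i, j, sim))
    # Most similar pairs first, so the grader reviews the worst cases first.
    return sorted(pairs, key=lambda p: p[2], reverse=True)
```

Raising `threshold` trades recall for precision: fewer pairs reach the professor or TA, and fewer of them are false alarms.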

2. Give an example of a stemming algorithm. Why are such techniques used as part of IR systems? Describe how the technique is included as part of the indexing, query interpretation and matching processes.

Porter stemmer: finds root forms of terms, so that variants of a word map to the same index term.
(.*[AEIOU].*)ED -> \1 // removes the past-tense -ed ending
• Stem documents before indexing
• Stem queries before retrieval
• Matching: no change
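As a rough illustration, the single -ed rule quoted above can be written as a regular expression in Python. This is not the full Porter stemmer, which has many more rules and conditions; `strip_ed` and `stem_text` are hypothetical names used only for this sketch.

```python
import re

# The one rule from the slide: strip a trailing "ed" only when the
# remaining stem contains a vowel.
ED_RULE = re.compile(r"^(.*[aeiou].*)ed$")


def strip_ed(word):
    """Apply the -ed rule to one word; return the word unchanged otherwise."""
    word = word.lower()
    match = ED_RULE.match(word)
    return match.group(1) if match else word


def stem_text(text):
    """Stem every whitespace-separated token of a document or a query."""
    return [strip_ed(token) for token in text.split()]
```

Because the same `stem_text` is applied to documents at indexing time and to queries at retrieval time, the matching step itself needs no change.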

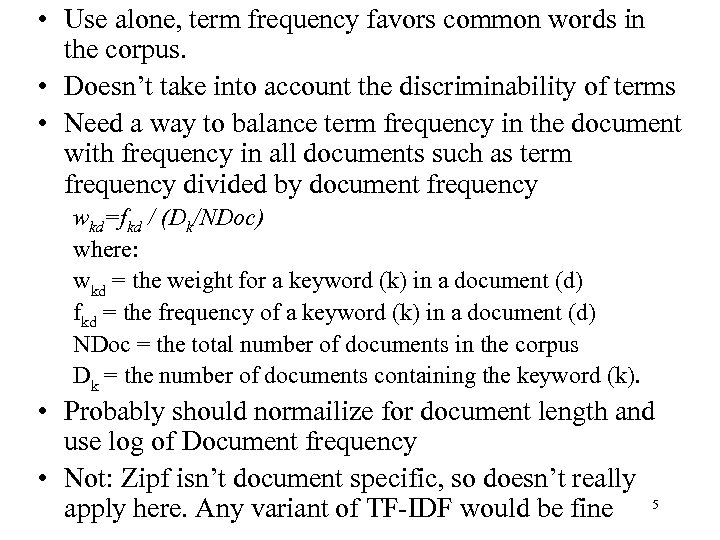

3. Why is the simple frequency of an index term's occurrence in a document insufficient as a predictor of its utility? Give an example of a particular index term and a particular corpus that demonstrates this. Give the formula for one term weighting scheme, and identify these two factors in the formula.

• Used alone, term frequency favors words that are common throughout the corpus.
• It doesn’t take into account the discriminability of terms.
• Need a way to balance term frequency in the document with frequency in all documents, such as term frequency divided by document frequency:

$$w_{kd} = \frac{f_{kd}}{D_k / N_{Doc}}$$

where:
  – $w_{kd}$ = the weight for a keyword (k) in a document (d)
  – $f_{kd}$ = the frequency of a keyword (k) in a document (d)
  – $N_{Doc}$ = the total number of documents in the corpus
  – $D_k$ = the number of documents containing the keyword (k)
• Probably should normalize for document length and use the log of the document frequency.
• Note: Zipf isn’t document specific, so it doesn’t really apply here. Any variant of TF-IDF would be fine.
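A small Python sketch of the weighting formula above, with made-up counts to show why raw frequency misleads. The function and parameter names mirror the symbols in the formula; the log variant is one common TF-IDF form and is an assumption here, not necessarily the one the course intends.

```python
import math


def term_weight(f_kd, d_k, n_doc):
    """w_kd = f_kd / (D_k / NDoc): term frequency over document-frequency ratio."""
    return f_kd / (d_k / n_doc)


def log_term_weight(f_kd, d_k, n_doc):
    """A common log-damped variant: f_kd * log(NDoc / D_k)."""
    return f_kd * math.log(n_doc / d_k)


# Hypothetical corpus of 1000 documents.
# "the" occurs 4 times in the document and appears in every document.
weight_the = term_weight(4, 1000, 1000)
# "plasma" occurs only 2 times but appears in just 10 documents.
weight_plasma = term_weight(2, 10, 1000)
```

With these illustrative counts, "plasma" outweighs "the" even though "the" is more frequent in the document, and the log variant gives "the" a weight of zero.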

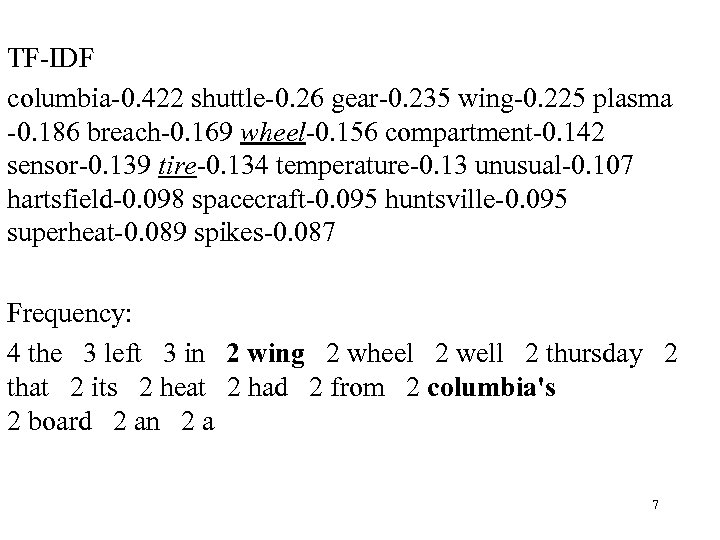

Superheated air almost certainly seeped through a breach in space shuttle Columbia's left wing and possibly its wheel compartment during the craft's fiery descent from orbit, an investigative board has found. Investigators also disclosed Thursday that Columbia had begun to have trouble, including spikes in temperature readings in its left wing, while it was well off the California coast, much farther west than initially thought. Sensors had noticed an unusual heat buildup of about 30 degrees inside Columbia's left wheel well minutes before the spacecraft disintegrated over Texas on Feb. 1. But the accident investigating board said Thursday that heat damage from a missing tile would not be sufficient to cause the unusual temperature increases. Instead, the board, making its first significant conclusion in the case, determined that the temperature spikes were caused by the presence inside Columbia of plasma, or superheated air caused by the craft's re-entry into the Earth's atmosphere, with temperatures of roughly 2,000 degrees. It said investigators were studying where a breach might have occurred to allow plasma to seep inside the wheel compartment or elsewhere in Columbia's left wing. "We won't speculate about what it means or how important it is," said retired Adm. Harold Gehman, who heads the investigating board. "We will keep the process open. We won't get into a guessing game at this time." The board did not specify whether such a breach could be the result of a structural tear in Columbia's aluminum frame or a hole from debris striking the spacecraft. The board also did not indicate when the breach occurred during the shuttle's 16-day mission. The board visited the Marshall Space Flight Center in Huntsville, Ala., on Friday to spend two days learning about work performed on the shuttle there. Investigators previously have focused on an unusually large chunk of foam insulation that broke off Columbia's external fuel tank on liftoff on Jan. 16.
Video footage showed it struck the shuttle's left wing, including its toughened leading edge and thermal tiles covering the landing gear door. Gehman also said in Huntsville that rigid foam insulation that fell off the external tank on liftoff was still a possible factor. The announcement focused renewed attention on possible catastrophic failures inside the wheel compartment that may have contributed to the Feb. 1 breakup that killed seven astronauts. Officials are not sure where a breach might have opened in Columbia's skin, James Hartsfield, a spokesman for the National Aeronautics and Space Administration, said. But he said the leading edge or elsewhere on the left wing, the fuselage or the left landing gear door were prime candidates. "Any of those could be potential causes for the temperature change we saw," Hartsfield said. "They do not and have not pinpointed any general location as to where that plasma flow would have to originate." In an unusual plea for assistance, NASA urged the public to share with them any photographs or videotapes of Columbia's descent from California to eastern Texas. Some members of the public already have handed over images, "but more material will help the investigation of the Columbia accident," the agency said. The board's announcement didn't surprise those experts who long have believed that a mysterious failure of sensors within Columbia's left wing indicated that super-hot plasma had penetrated the shuttle. "I think there was a substantial hole in the wing," said Steven P. Schneider, an associate professor at Purdue University's Aerospace Sciences Laboratory. "That would not be at all surprising. All the sensors in the wing failed or gave bad readings" by the time ground controllers lost contact with Columbia, he said. The board also dismissed suggestions that Columbia's left landing gear was improperly lowered as it raced through Earth's atmosphere at more than 12,000 miles per hour.
NASA disclosed earlier Thursday that a sensor indicated the gear was down just 26 seconds before Columbia's destruction. If Columbia's gear was lowered at that speed -- and in those searing temperatures as the shuttle descended over Texas from about 40 miles up -- the heat and rushing air would have sheared off Columbia's tires and led quickly to the spacecraft's tumbling destruction, experts said. Officials said they were confident that the unusual sensor reading was wrong. Tires are supposed to remain raised until the shuttle is about 200 feet over the runway and flying at 345 mph. Two other sensors in the same wheel compartment indicated the gear was still properly raised, they said. While Columbia's piloting computers began almost simultaneously firing thrusters in an attempt to keep the shuttle's wings level, officials said, a mysterious disruption in the air flowing near the left wing was not serious enough to suggest the shuttle's gear might be down. The investigating board concluded that its research "does not support the scenario of an early deployment of the left gear." NASA also confirmed that searchers near Hemphill, Texas, about 140 miles northeast of Houston, recovered what is believed to be one of Columbia's radial tires. A spokesman was not immediately sure which of the shuttle's tires was found. The tire was blackened and had a massive split across its tread, but it was not known whether the tire was damaged aboard Columbia or when it struck the ground.

TF-IDF: columbia 0.422, shuttle 0.26, gear 0.235, wing 0.225, plasma 0.186, breach 0.169, wheel 0.156, compartment 0.142, sensor 0.139, tire 0.134, temperature 0.13, unusual 0.107, hartsfield 0.098, spacecraft 0.095, huntsville 0.095, superheat 0.089, spikes 0.087

Frequency: the 4, left 3, in 3, wing 2, wheel 2, well 2, thursday 2, that 2, its 2, heat 2, had 2, from 2, columbia's 2, board 2, an 2, a 2

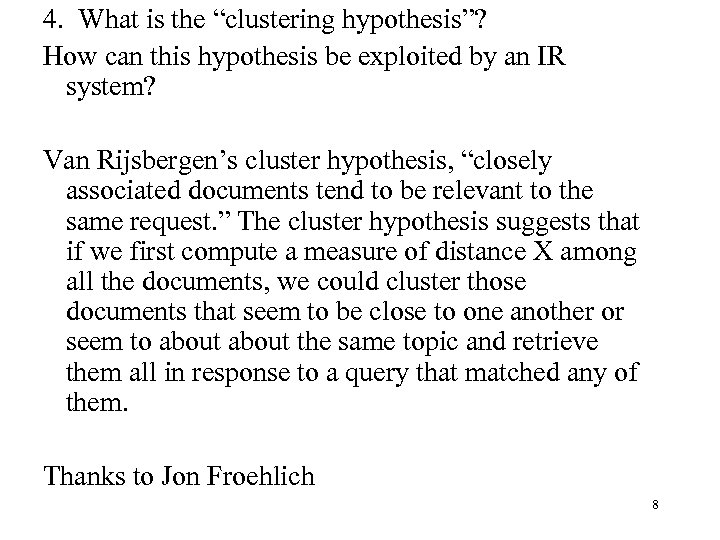

4. What is the “clustering hypothesis”? How can this hypothesis be exploited by an IR system?

Van Rijsbergen’s cluster hypothesis: “closely associated documents tend to be relevant to the same request.” The cluster hypothesis suggests that if we first compute a measure of distance X among all the documents, we could cluster those documents that seem to be close to one another, or seem to be about the same topic, and retrieve them all in response to a query that matched any of them.

Thanks to Jon Froehlich

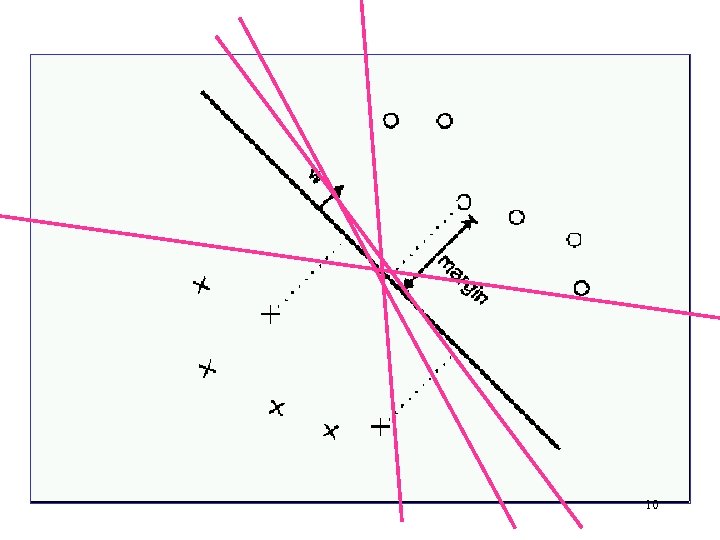

5. How does a linear support vector machine differ from a perceptron in classification? Why is this difference important?

A linear support vector machine finds the optimal dividing line by maximizing the margin between the positive and negative examples. A perceptron finds any separating line. The SVM has less variance across different training sets and initial conditions, and the optimal solution more often generalizes better than an arbitrary one.


6. Define precision and recall precisely. What foolproof method could an IR system use to achieve perfect recall? What would you expect the precision of this method to be?

Retr = the set of retrieved documents
Rel = the set of relevant documents
N = number of documents

$$\text{Recall} = \frac{|Retr \cap Rel|}{|Rel|} \qquad \text{Precision} = \frac{|Retr \cap Rel|}{|Retr|}$$

Return all documents to get perfect recall. The precision of this method is $|Rel| / N$, which can be close to 0.

Note: define the terms used in your formulae.
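The definitions above translate directly into set operations. The following Python sketch uses a hypothetical collection of 100 document IDs with 4 relevant ones to show what happens when every document is returned.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for two collections of document IDs."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # |Retr ∩ Rel|
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical collection: document IDs 0..99, of which 4 are relevant.
collection = range(100)
relevant = {3, 7, 42, 99}

# "Return everything": recall is perfect, precision is |Rel| / N.
precision, recall = precision_recall(collection, relevant)
```

Here `recall` is 1.0 and `precision` is 4/100, matching the $|Rel| / N$ answer.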

7. What is a reasonable heuristic for identifying the outstanding papers in an area of research with which you are unfamiliar?

The documents with the most citations.

8. Use any general web search system to determine whether it is safe to take the drugs Viagra and Phentermine at the same time. What query did you use at first? What was your final query? Why isn’t a general-purpose search engine ideally suited for this problem? Would relevance feedback help here? Would relevance feedback be effective if it only used positive feedback in this case?

Lots of answers.
• “Viagra Phentermine” returns a billion online pharmacies; adding “safety” or “interaction” doesn’t help.
• Subtracting terms (-sale, -buy, -purchase, -order) gets closer. Negative feedback could help rule these out.
• Searching for just one drug helps, because there isn’t an interaction, and documents tend to list what is not safe instead of what is safe.
• “drug interactions” gets you to a specialized site with a database instead of a search engine.
• It’s easier to find something that exists than to verify that it doesn’t with search engine technology.

9. What problems does Latent Semantic Analysis address? Would this approach be better suited for queries of an encyclopedia or a newspaper? Why?

It helps with synonyms such as “car” and “automobile” or “rental” and “leasing”, and with homonyms such as “tire”. It’s expensive to index but cheap to retrieve, so it is better for collections that don’t change much, such as an encyclopedia.

10. Pick a topic that interests you. Find an authority for that topic. Find a hub. Argue that the site is a hub or an authority based on the number of links to or from that site. Hint: in Google the query “link:http://www.uci.edu” will find web sites that link to http://www.uci.edu

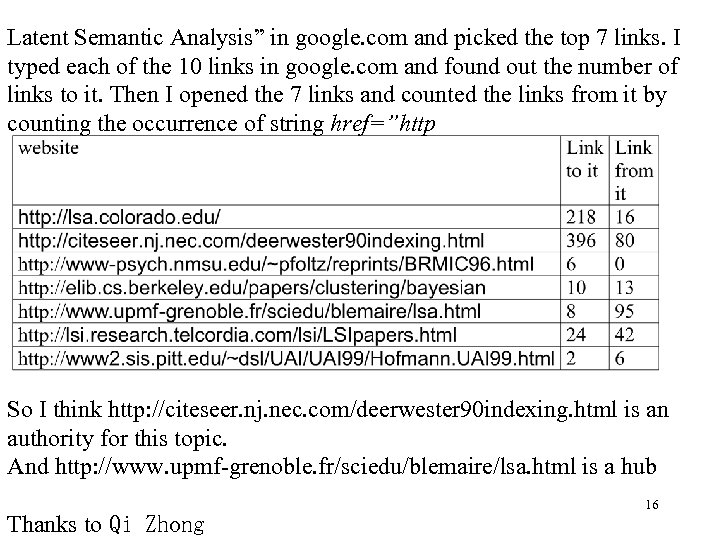

I typed “Latent Semantic Analysis” in google.com and picked the top 7 links. I typed each of the 10 links in google.com and found out the number of links to it. Then I opened the 7 links and counted the links from each by counting the occurrences of the string href=”http. So I think http://citeseer.nj.nec.com/deerwester90indexing.html is an authority for this topic, and http://www.upmf-grenoble.fr/sciedu/blemaire/lsa.html is a hub.

Thanks to Qi Zhong
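The counting argument above can be sketched in Python as simple in-link and out-link tallies over a list of (source, target) pairs. This is a simplification, not the iterative hubs-and-authorities computation, and the page names in the example are placeholders.

```python
from collections import Counter


def link_counts(edges):
    """Count in-links and out-links per page from (source, target) pairs."""
    in_links = Counter(target for _source, target in edges)
    out_links = Counter(source for source, _target in edges)
    return in_links, out_links


# Placeholder link graph: "h" points to many pages, "c" is pointed to by many.
edges = [("a", "c"), ("b", "c"), ("h", "a"), ("h", "b"), ("h", "c")]
in_links, out_links = link_counts(edges)

likely_authority = in_links.most_common(1)[0][0]  # most links to it
likely_hub = out_links.most_common(1)[0][0]       # most links from it
```

A page with many in-links is a candidate authority, and a page with many out-links to such pages is a candidate hub.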

11. Would a rule-based learner or collaborative filtering be more appropriate for filtering your email? Why?

Rule-based: it learns from YOUR behavior.
Collaborative: compares you to others, but you are the only one reading your mail, so it can’t help filter. (It might do okay at SPAM since many people read that, but SPAM is in the eye of the beholder; some people do want to help smuggle $17M from Nigeria.)

12. I’m interested in going to the machine learning conference this year. I typed “Machine learning conference” into Google and it returned the following results:
• 1998 International Machine Learning Conference
• ICML-2001
• ICML 2002 - Sydney
• ICML-2000
• ECML/PKDD-2002
• ICML-99
• ECML/PKDD-2001 Home
• ICML 2003
• International Conferences on Machine Learning and Applications

However, most people are more interested in the future conference than in past conferences. Why is the 2003 conference ninth instead of first?
a. More links to the older conference: 172 vs. 162.
b. Possibly more important links to it.

13. How could this happen, i.e., why did Google rank Microsoft first for the query “Go to hell”? (It is no longer first.) "Micro$oft" gets you to Microsoft even though the home page obviously doesn’t contain the term "Micro$oft" or “Go to hell”.

Microsoft tops Google hell search rankings
By John Lettice. Posted: 18/09/2002 at 17:30 GMT
Weirdisms in Google's ranking system are sent to entertain us occasionally, and as we've had this one from a couple of people today, we suspect it's new. But don't blame us if it's not, we lead sheltered lives sometimes. Currently, if you type "go to hell" into Google, then Microsoft Corporation, Where do you want to go today? comes first.

• Some people created web pages with the link text being “Go to Hell” and the destination Microsoft, e.g., <a href=http://www.microsoft.com>Go To Hell</a>
• Microsoft has many links pointing to it, so its PageRank score is high.
• Google indexes terms in the link as well as terms in the page.
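The effect of indexing link text can be illustrated with a toy inverted index in Python. This sketch assumes whitespace tokenization and boolean AND matching, and it does not model PageRank or any real search engine's ranking; `build_index`, `search`, and the example.org page are hypothetical.

```python
from collections import defaultdict


def build_index(pages, links):
    """Build term -> set of URLs.

    pages: dict of url -> page text
    links: list of (source_url, anchor_text, target_url)
    """
    index = defaultdict(set)
    for url, text in pages.items():
        for term in text.lower().split():
            index[term].add(url)
    # Anchor-text terms are credited to the page the link points to.
    for _source, anchor_text, target in links:
        for term in anchor_text.lower().split():
            index[term].add(target)
    return index


def search(index, query):
    """Return URLs indexed under every query term (boolean AND)."""
    terms = query.lower().split()
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


pages = {
    "http://www.microsoft.com": "where do you want to go today",
    "http://example.org/rant": "my opinions",  # hypothetical linking page
}
links = [("http://example.org/rant", "Go To Hell", "http://www.microsoft.com")]
index = build_index(pages, links)
```

With this index, `search(index, "go to hell")` matches the Microsoft home page even though the word "hell" never appears in its own text.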

Final comments
• This practice exam is a random sample of questions drawn from the distribution of possible exam questions. Topics not on this exam, but in the study guide for papers and the study guide for the text, can appear on the final exam.
• Pay attention to instructions: USE THE SUBJECT indicated, “Re: Practice Exam for ICS 207”, so I can find it easily.
• How long did it take?
• Final exam emailed at 6 AM PST March 16, due 11:59 PM March 17, and posted on the web site.
• Final report format email by March 9: 5 pages max
