875d9e9a1da3d8b8f6343130066d2270.ppt

- Количество слайдов: 29

1 COMP 3503 Inductive Decision Trees with Daniel L. Silver

1 COMP 3503 Inductive Decision Trees with Daniel L. Silver

2 Agenda q Explanatory/Descriptive Modeling q Inductive Decision Tree Theory q The Weka IDT System q Weka IDT Tutorial

2 Agenda q Explanatory/Descriptive Modeling q Inductive Decision Tree Theory q The Weka IDT System q Weka IDT Tutorial

3 Explanatory/Descriptive Modeling

3 Explanatory/Descriptive Modeling

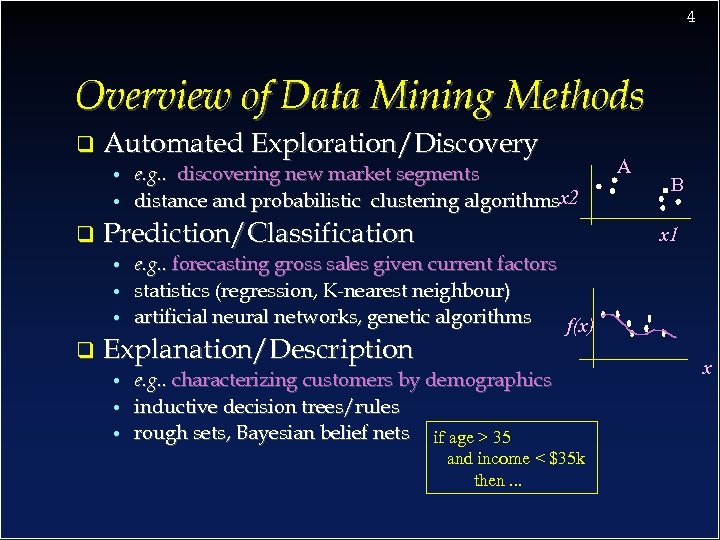

4 Overview of Data Mining Methods q Automated Exploration/Discovery • • q Prediction/Classification • • • q e. g. . discovering new market segments distance and probabilistic clustering algorithmsx 2 • • B x 1 e. g. . forecasting gross sales given current factors statistics (regression, K-nearest neighbour) artificial neural networks, genetic algorithms f(x) Explanation/Description • A e. g. . characterizing customers by demographics inductive decision trees/rules rough sets, Bayesian belief nets if age > 35 and income < $35 k then. . . x

4 Overview of Data Mining Methods q Automated Exploration/Discovery • • q Prediction/Classification • • • q e. g. . discovering new market segments distance and probabilistic clustering algorithmsx 2 • • B x 1 e. g. . forecasting gross sales given current factors statistics (regression, K-nearest neighbour) artificial neural networks, genetic algorithms f(x) Explanation/Description • A e. g. . characterizing customers by demographics inductive decision trees/rules rough sets, Bayesian belief nets if age > 35 and income < $35 k then. . . x

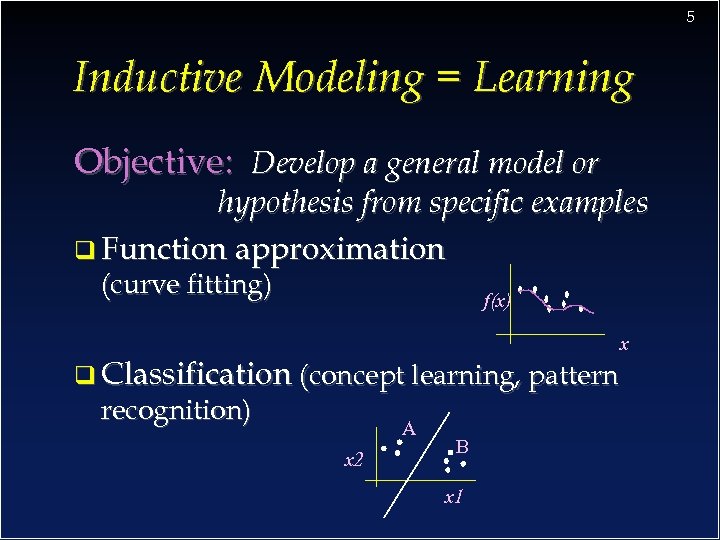

5 Inductive Modeling = Learning Objective: Develop a general model or hypothesis from specific examples q Function approximation (curve fitting) f(x) q Classification (concept learning, pattern recognition) A x 2 B x 1 x

5 Inductive Modeling = Learning Objective: Develop a general model or hypothesis from specific examples q Function approximation (curve fitting) f(x) q Classification (concept learning, pattern recognition) A x 2 B x 1 x

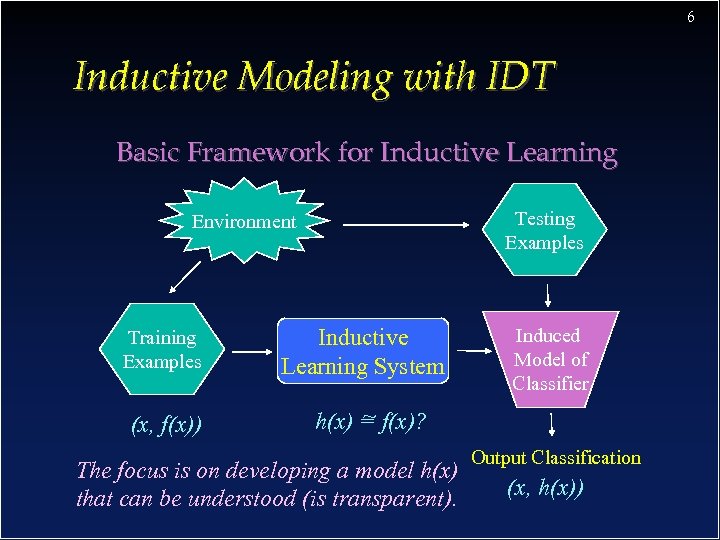

6 Inductive Modeling with IDT Basic Framework for Inductive Learning Testing Examples Environment Training Examples (x, f(x)) Inductive Learning System Induced Model of Classifier ~ h(x) = f(x)? The focus is on developing a model h(x) that can be understood (is transparent). Output Classification (x, h(x))

6 Inductive Modeling with IDT Basic Framework for Inductive Learning Testing Examples Environment Training Examples (x, f(x)) Inductive Learning System Induced Model of Classifier ~ h(x) = f(x)? The focus is on developing a model h(x) that can be understood (is transparent). Output Classification (x, h(x))

7 Inductive Decision Tree Theory

7 Inductive Decision Tree Theory

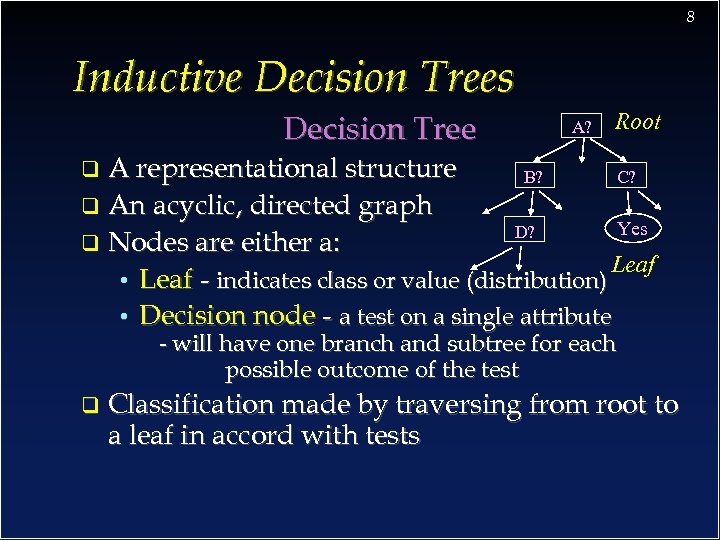

8 Inductive Decision Trees Decision Tree A? Root A representational structure B? C? q An acyclic, directed graph Yes D? q Nodes are either a: Leaf • Leaf - indicates class or value (distribution) • Decision node - a test on a single attribute q - will have one branch and subtree for each possible outcome of the test q Classification made by traversing from root to a leaf in accord with tests

8 Inductive Decision Trees Decision Tree A? Root A representational structure B? C? q An acyclic, directed graph Yes D? q Nodes are either a: Leaf • Leaf - indicates class or value (distribution) • Decision node - a test on a single attribute q - will have one branch and subtree for each possible outcome of the test q Classification made by traversing from root to a leaf in accord with tests

9 Inductive Decision Trees (IDTs) A Long and Diverse History Independently developed in the 60’s and 70’s by researchers in. . . Statistics: L. Breiman & J. Friedman - CART (Classification and Regression Trees) Pattern Recognition: Uof Michigan - AID, closest to G. V. Kass - CHAID (Chi-squared Scenario Automated Interaction Detection) AI and Info. Theory: R. Quinlan - ID 3, C 4. 5 (Iterative Dichotomizer) q

9 Inductive Decision Trees (IDTs) A Long and Diverse History Independently developed in the 60’s and 70’s by researchers in. . . Statistics: L. Breiman & J. Friedman - CART (Classification and Regression Trees) Pattern Recognition: Uof Michigan - AID, closest to G. V. Kass - CHAID (Chi-squared Scenario Automated Interaction Detection) AI and Info. Theory: R. Quinlan - ID 3, C 4. 5 (Iterative Dichotomizer) q

10 Inducing a Decision Tree Given: Set of examples with Pos. & Neg. classes Problem: Generate a Decision Tree model to classify a separate (validation) set of examples with minimal error Approach: Occam’s Razor - produce the simplest model that is consistent with the training examples -> narrow, short tree. Every traverse should be as short as possible Formally: Finding the absolute simplest tree is intractable, but we can at least try our best

10 Inducing a Decision Tree Given: Set of examples with Pos. & Neg. classes Problem: Generate a Decision Tree model to classify a separate (validation) set of examples with minimal error Approach: Occam’s Razor - produce the simplest model that is consistent with the training examples -> narrow, short tree. Every traverse should be as short as possible Formally: Finding the absolute simplest tree is intractable, but we can at least try our best

11 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 1: Grow the tree from the top down. Place the most important variable test at the root of each successive subtree The most important variable: • • • the variable (predictor) that gains the most ground in classifying the set of training examples the variable that has the most significant relationship to the response variable to which the response is most dependent or least independent

11 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 1: Grow the tree from the top down. Place the most important variable test at the root of each successive subtree The most important variable: • • • the variable (predictor) that gains the most ground in classifying the set of training examples the variable that has the most significant relationship to the response variable to which the response is most dependent or least independent

12 Inducing a Decision Tree Importance of a predictor variable q CHAID/CART • Chi-squared [or F (Fisher)] statistic is used to test the independence between the catagorical [or continuous] response variable and each predictor variable • The lowest probability (p-value) from the test determines the most important predictor (p-values are first corrected by the Bonferroni adjustment) q C 4. 5 (section 4. 3 of WFH, and PDF slides) • Theoretic Information Gain is computed for each predictor and one with the highest Gain is chosen

12 Inducing a Decision Tree Importance of a predictor variable q CHAID/CART • Chi-squared [or F (Fisher)] statistic is used to test the independence between the catagorical [or continuous] response variable and each predictor variable • The lowest probability (p-value) from the test determines the most important predictor (p-values are first corrected by the Bonferroni adjustment) q C 4. 5 (section 4. 3 of WFH, and PDF slides) • Theoretic Information Gain is computed for each predictor and one with the highest Gain is chosen

13 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 2: To be fair to predictors variables that have only 2 values, divide variables with multiple values into similar groups or segments which are then treated as separated variables (CART/CHAID only) q The p-values from the Chi-squared or F statistic is used to determine variable/value combinations which are most similar in terms of their relationship to the response variable

13 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 2: To be fair to predictors variables that have only 2 values, divide variables with multiple values into similar groups or segments which are then treated as separated variables (CART/CHAID only) q The p-values from the Chi-squared or F statistic is used to determine variable/value combinations which are most similar in terms of their relationship to the response variable

14 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 3: Prevent overfitting the tree to the training data so that it generalizes well to a validation set by: Stopping: Prevent the split on a predictor variable if it is above a level of statistical significance - simply make it a leaf (CHAID) Pruning: After a complex tree has been grown, replace a split (subtree) with a leaf if the predicted validation error is no worse than the more complex tree (CART, C 4. 5)

14 Inducing a Decision Tree How do we produce an optimal tree? Heuristic (strategy) 3: Prevent overfitting the tree to the training data so that it generalizes well to a validation set by: Stopping: Prevent the split on a predictor variable if it is above a level of statistical significance - simply make it a leaf (CHAID) Pruning: After a complex tree has been grown, replace a split (subtree) with a leaf if the predicted validation error is no worse than the more complex tree (CART, C 4. 5)

15 Inducing a Decision Tree Stopping (pre-pruning) means a choice of level of significance (CART). . If the probability (p-value) of the statistic is less than the chosen level of significance then a split is allowed q Typically the significance level is set to: • 0. 05 which provides 95% confidence • 0. 01 which provides 99% confidence q

15 Inducing a Decision Tree Stopping (pre-pruning) means a choice of level of significance (CART). . If the probability (p-value) of the statistic is less than the chosen level of significance then a split is allowed q Typically the significance level is set to: • 0. 05 which provides 95% confidence • 0. 01 which provides 99% confidence q

16 Inducing a Decision Tree Stopping means a minimum number of examples at a leaf node (C 4. 5 = J 48). . M factor = minimum number of examples allowed at a leave node q M =2 is default q

16 Inducing a Decision Tree Stopping means a minimum number of examples at a leaf node (C 4. 5 = J 48). . M factor = minimum number of examples allowed at a leave node q M =2 is default q

17 Inducing a Decision Tree Pruning means reducing the complexity of a tree. . (C 4. 5 = J 48). . C factor = confidence in the data used to train the tree q C = 25% is default q If there is 25% confidence that a pruned branch will generate < or = training errors on a test set then prune it. q p. 196 WFH, PDF slides q

17 Inducing a Decision Tree Pruning means reducing the complexity of a tree. . (C 4. 5 = J 48). . C factor = confidence in the data used to train the tree q C = 25% is default q If there is 25% confidence that a pruned branch will generate < or = training errors on a test set then prune it. q p. 196 WFH, PDF slides q

18 The Weka IDT System Weka Simple. CART creates a tree-based classification model q The target or response variable must be categorical (multiple classes allowed) q Uses the Chi-Squared test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

18 The Weka IDT System Weka Simple. CART creates a tree-based classification model q The target or response variable must be categorical (multiple classes allowed) q Uses the Chi-Squared test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

19 The Weka IDT System Weka J 48 creates a tree-based classification model = Ross Quinlan’s orginal C 4. 5 algorithm q The target or response variable must be categorical q Uses information gain test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

19 The Weka IDT System Weka J 48 creates a tree-based classification model = Ross Quinlan’s orginal C 4. 5 algorithm q The target or response variable must be categorical q Uses information gain test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

20 The Weka IDT System Weka M 5 P creates a tree-based classification model = also by Ross Quinlan q The target or response variable must be continuous q Uses information gain test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

20 The Weka IDT System Weka M 5 P creates a tree-based classification model = also by Ross Quinlan q The target or response variable must be continuous q Uses information gain test for significance q Prunes the tree by using a test/tuning set q Copyright (c), 2002 All Rights Reserved

21 IDT Training

21 IDT Training

22 IDT Training How do you ensure that a decision tree has been well trained? Objective: To achieve good generalization accuracy on new examples/cases q Establish a maximum acceptable error rate q Train the tree using a method to prevent overfitting – stopping / pruning q Validate the trained network against a separate test set q

22 IDT Training How do you ensure that a decision tree has been well trained? Objective: To achieve good generalization accuracy on new examples/cases q Establish a maximum acceptable error rate q Train the tree using a method to prevent overfitting – stopping / pruning q Validate the trained network against a separate test set q

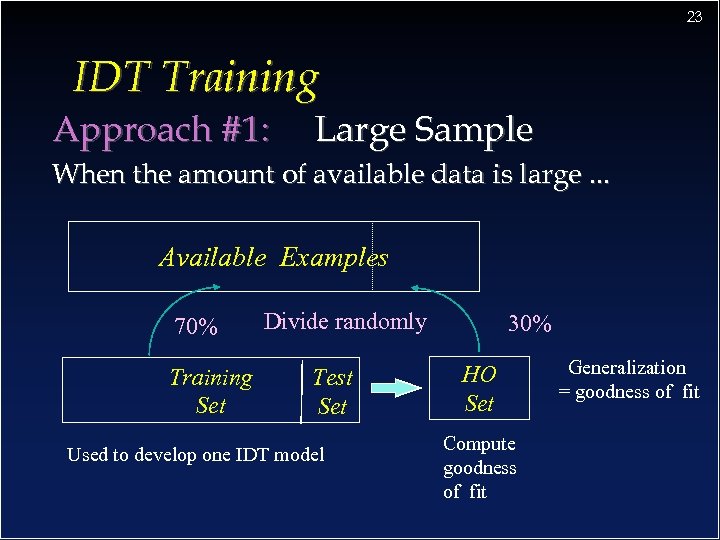

23 IDT Training Approach #1: Large Sample When the amount of available data is large. . . Available Examples 70% Training Set Divide randomly Test Set Used to develop one IDT model 30% HO Set Compute goodness of fit Generalization = goodness of fit

23 IDT Training Approach #1: Large Sample When the amount of available data is large. . . Available Examples 70% Training Set Divide randomly Test Set Used to develop one IDT model 30% HO Set Compute goodness of fit Generalization = goodness of fit

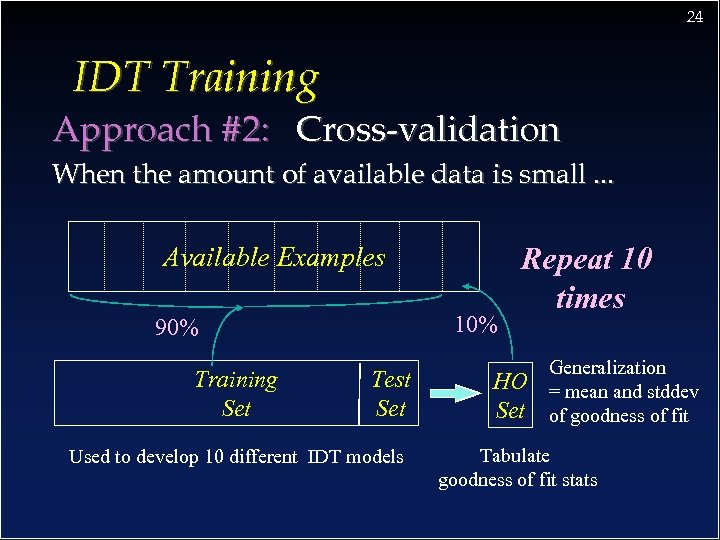

24 IDT Training Approach #2: Cross-validation When the amount of available data is small. . . Available Examples 10% 90% Training Set Test Set Used to develop 10 different IDT models Repeat 10 times HO Set Generalization = mean and stddev of goodness of fit Tabulate goodness of fit stats

24 IDT Training Approach #2: Cross-validation When the amount of available data is small. . . Available Examples 10% 90% Training Set Test Set Used to develop 10 different IDT models Repeat 10 times HO Set Generalization = mean and stddev of goodness of fit Tabulate goodness of fit stats

25 IDT Training How do you select between two induced decision trees ? A statistical test of hypothesis is required to ensure that a significant difference exists between the fit of two IDT models q If Large Sample method has been used then apply Mc. Nemar’s test* or diff. of proportions q If Cross-validation then use a paired t test for difference of two proportions q *We assume a classification problem, if this is function approximation then use paired t test for difference of means

25 IDT Training How do you select between two induced decision trees ? A statistical test of hypothesis is required to ensure that a significant difference exists between the fit of two IDT models q If Large Sample method has been used then apply Mc. Nemar’s test* or diff. of proportions q If Cross-validation then use a paired t test for difference of two proportions q *We assume a classification problem, if this is function approximation then use paired t test for difference of means

26 Pros and Cons of IDTs Cons: q Only one response variable at a time q Different significance tests required for nominal and continuous responses q Can have difficulties with noisy data q Discriminate functions are often suboptimal due to orthogonal decision hyperplanes

26 Pros and Cons of IDTs Cons: q Only one response variable at a time q Different significance tests required for nominal and continuous responses q Can have difficulties with noisy data q Discriminate functions are often suboptimal due to orthogonal decision hyperplanes

27 Pros and Cons of IDTs Pros: Proven modeling method for 20 years q Provides explanation and prediction q Ability to learn arbitrary functions q Handles unknown values well q Rapid training and recognition speed q Has inspired many inductive learning algorithms using statistical regression q

27 Pros and Cons of IDTs Pros: Proven modeling method for 20 years q Provides explanation and prediction q Ability to learn arbitrary functions q Handles unknown values well q Rapid training and recognition speed q Has inspired many inductive learning algorithms using statistical regression q

The IDT Application Development Process Guidelines for inducting decision trees 1. IDTs are good method to start with 2. Get a suitable training set 3. Use a sensible coding for input variables 4. Develop the simplest tree by adjusting tuning parameters (significance level) 5. Use a method to prevent over-fitting 6. Determine confidence in generalization through cross-validation 28

The IDT Application Development Process Guidelines for inducting decision trees 1. IDTs are good method to start with 2. Get a suitable training set 3. Use a sensible coding for input variables 4. Develop the simplest tree by adjusting tuning parameters (significance level) 5. Use a method to prevent over-fitting 6. Determine confidence in generalization through cross-validation 28

29 THE END danny. silver@acadiau. ca

29 THE END danny. silver@acadiau. ca