- Number of slides: 181

Cluster Resources Training

© Cluster Resources, Inc.

Outline

1. Moab Overview
2. Deployment
3. Diagnostics and Troubleshooting
4. Integration
5. Scheduling Behaviour
6. Resource Access
7. Grid Computing
8. Accounting
9. Transitioning from LCRM
10. End Users

1. Moab Introduction

- Overview of the Modern Cluster Evolution
- Cluster Productivity Losses
- Moab Workload Manager Architecture
- What Moab Does Not Do

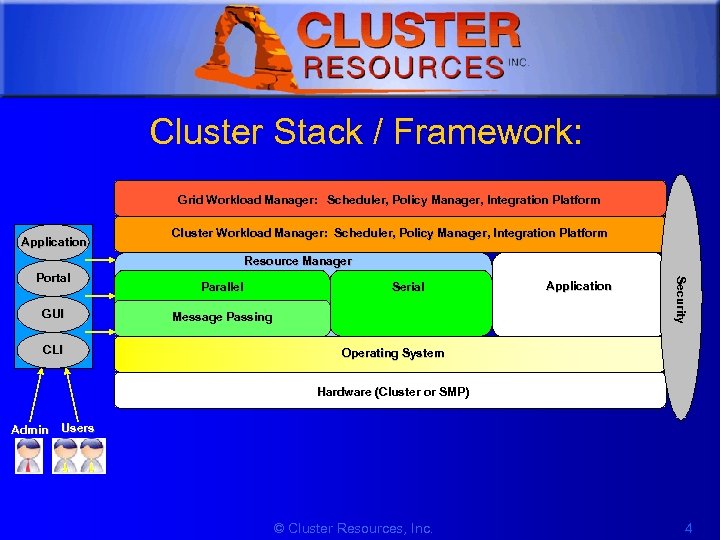

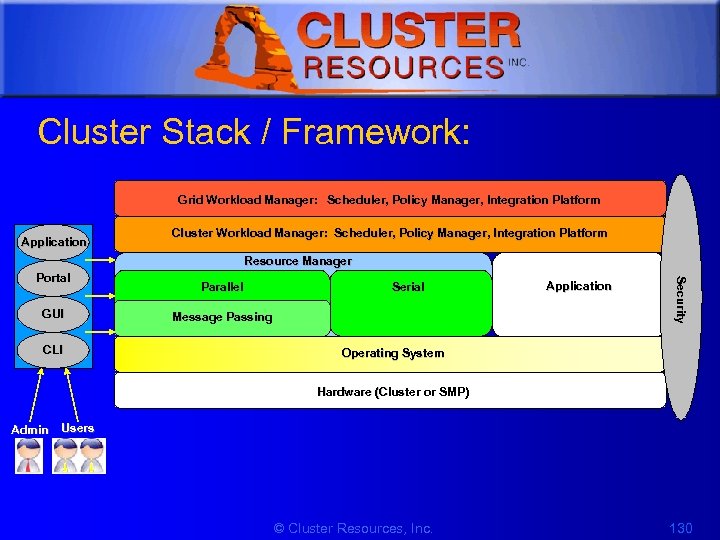

Cluster Stack / Framework (diagram). Components shown: Grid Workload Manager and Cluster Workload Manager (each a Scheduler, Policy Manager, and Integration Platform); Resource Manager; Application (Parallel, Serial); Message Passing; Security; GUI, CLI, and Portal access for Admins and Users; Operating System; Hardware (Cluster or SMP).

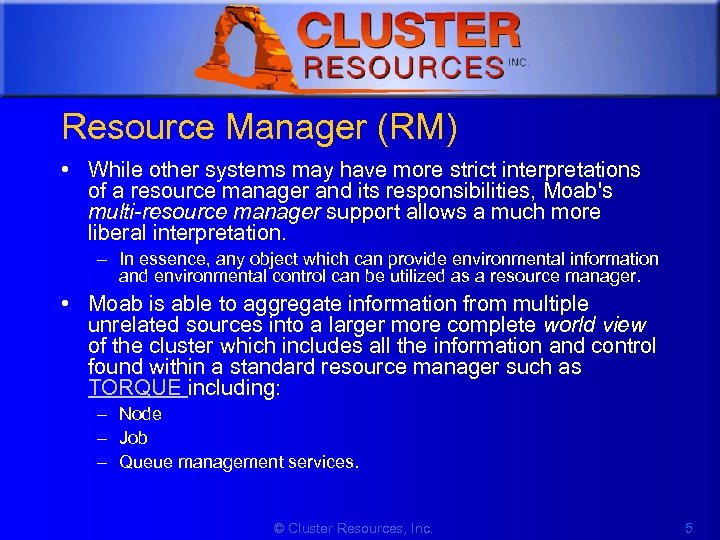

Resource Manager (RM)

- While other systems may interpret a resource manager and its responsibilities more strictly, Moab's multi-resource-manager support allows a much more liberal interpretation.
  - In essence, any object that can provide environmental information and environmental control can be used as a resource manager.
- Moab can aggregate information from multiple unrelated sources into a larger, more complete world view of the cluster. This view includes all the information and control found in a standard resource manager such as TORQUE, including node, job, and queue management services.
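
The liberal definition above can be pictured as a small interface: anything that reports state (information) and accepts actions (control) can fill the resource-manager role. This is a hypothetical Python model for illustration only; `ResourceManager`, `query`, `control`, and the toy `LicenseServer` are invented names, not part of Moab or TORQUE.

```python
from abc import ABC, abstractmethod
from typing import Dict


class ResourceManager(ABC):
    """Hypothetical interface: anything that reports state and accepts control."""

    @abstractmethod
    def query(self) -> Dict[str, int]:
        """Return environmental information (resource name -> available amount)."""

    @abstractmethod
    def control(self, action: str, amount: int) -> bool:
        """Apply a control action and report whether it succeeded."""


class LicenseServer(ResourceManager):
    """Toy license pool acting as a resource manager (illustrative only)."""

    def __init__(self, total: int) -> None:
        self.total = total
        self.in_use = 0

    def query(self) -> Dict[str, int]:
        return {"licenses": self.total - self.in_use}

    def control(self, action: str, amount: int) -> bool:
        if action == "checkout" and self.in_use + amount <= self.total:
            self.in_use += amount
            return True
        if action == "release" and amount <= self.in_use:
            self.in_use -= amount
            return True
        return False  # unknown action, or the request cannot be satisfied


lic = LicenseServer(total=4)
print(lic.control("checkout", 3), lic.query())  # True {'licenses': 1}
```

Because the scheduler side only depends on `query` and `control`, a batch system, a license server, or a remote site could all be plugged in the same way.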

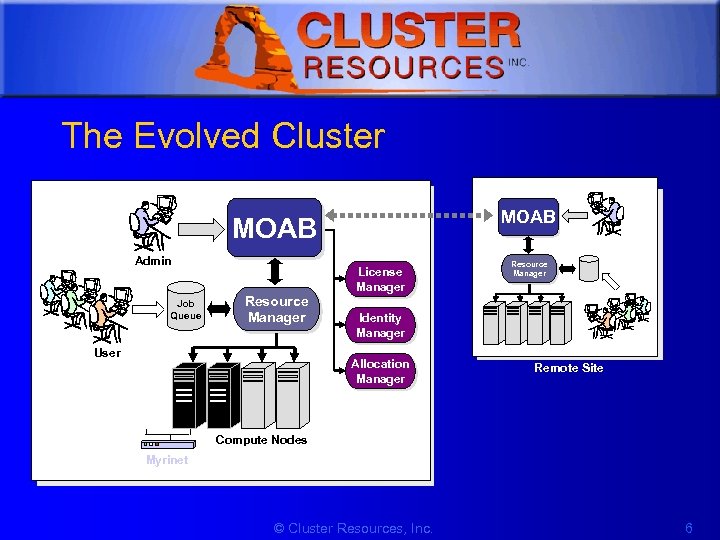

The Evolved Cluster (diagram). Components shown: Moab, Admin, User, Job Queue, Resource Managers, License Manager, Identity Manager, Allocation Manager, Remote Site, Compute Nodes, and a Myrinet interconnect.

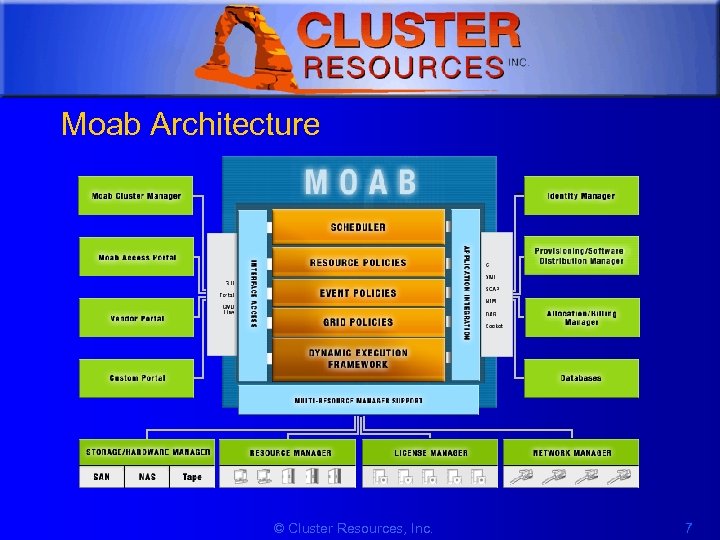

Moab Architecture

What Moab Does

- Optimizes Resource Utilization with Intelligent Scheduling and Advance Reservations
- Unifies Cluster Management across Varied Resources and Services
- Dynamically Adjusts Workload to Enforce Policies and Service Level Agreements
- Automates Diagnosis and Failure Response

What Moab Does Not Do

- Does not do resource management (usually)
- Does not install the system (usually)
- Not a storage manager
- Not a license manager
- Does not do message passing

2. Deployment

- Installation
- Configuration
- Testing

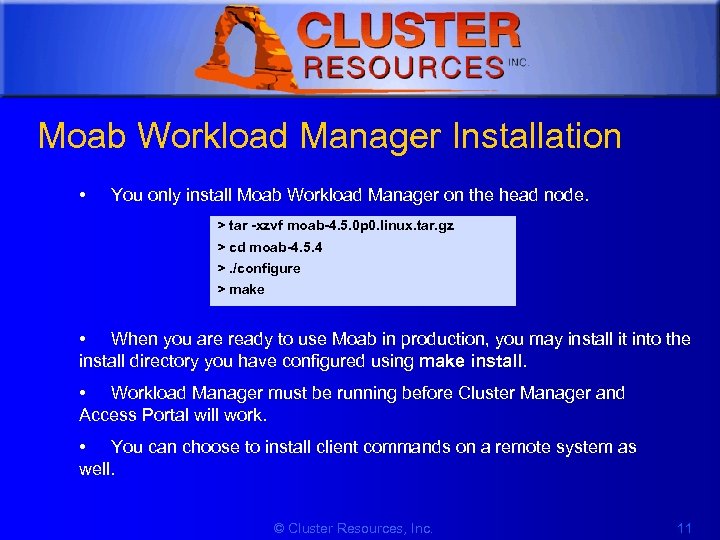

Moab Workload Manager Installation

- Moab Workload Manager only needs to be installed on the head node:
  - `tar -xzvf moab-4.5.0p0.linux.tar.gz`
  - `cd moab-4.5.4`
  - `./configure`
  - `make`
- When you are ready to use Moab in production, run `make install` to install it into the install directory you configured.
- Moab Workload Manager must be running before Moab Cluster Manager and Moab Access Portal will work.
- Client commands can optionally be installed on a remote system as well.

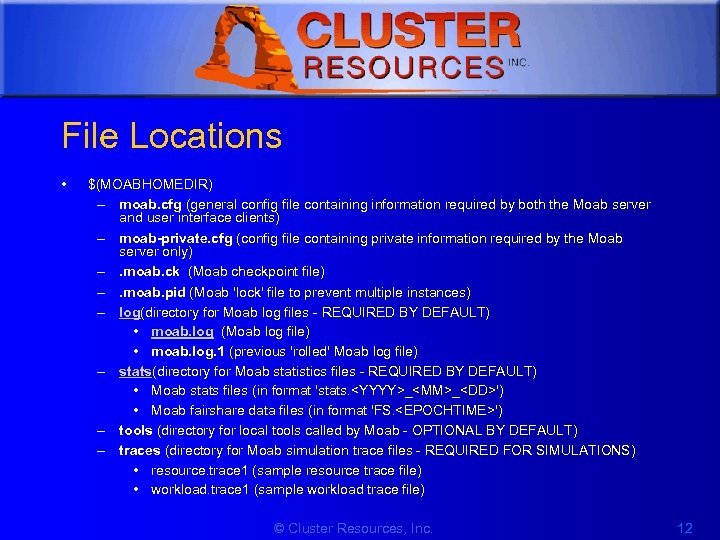

File Locations

- $(MOABHOMEDIR)
  - moab.cfg (general config file containing information required by both the Moab server and user interface clients)
  - moab-private.cfg (config file containing private information required by the Moab server only)
  - .moab.ck (Moab checkpoint file)
  - .moab.pid (Moab 'lock' file to prevent multiple instances)
  - log (directory for Moab log files - REQUIRED BY DEFAULT)
    - moab.log (Moab log file)
    - moab.log.1 (previous 'rolled' Moab log file)
  - stats (directory for Moab statistics files - REQUIRED BY DEFAULT)
    - Moab stats files (in format 'stats.

  - spool (directory for temporary Moab files - REQUIRED FOR ADVANCED FEATURES)
  - contrib (directory containing contributed code in the areas of GUIs, algorithms, policies, etc.)
- $(MOABINSTDIR)
  - bin (directory for installed Moab executables)
    - moab (Moab scheduler executable)
    - mclient (Moab user interface client executable)
- /etc/moab.cfg (optional file used to override the default '$(MOABHOMEDIR)' setting. It should contain the string 'MOABHOMEDIR $(DIRECTORY)' to override the built-in '$(MOABHOMEDIR)' setting.)
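
The /etc/moab.cfg override rule can be modeled as: if the file exists and contains a `MOABHOMEDIR <dir>` line, use that directory, otherwise fall back to the built-in value. A minimal sketch under assumptions: `resolve_moab_home` is a hypothetical helper, the `/opt/moab` default is only an illustrative guess, and Moab's own parsing of the file may differ.

```python
import os


def resolve_moab_home(override_file: str = "/etc/moab.cfg",
                      builtin_default: str = "/opt/moab") -> str:
    """Return MOABHOMEDIR, honoring an optional override file (hypothetical model)."""
    if os.path.isfile(override_file):
        with open(override_file) as fh:
            for line in fh:
                fields = line.split()
                # Look for a line of the form: MOABHOMEDIR /some/directory
                if len(fields) >= 2 and fields[0] == "MOABHOMEDIR":
                    return fields[1]
    # No override file, or no MOABHOMEDIR line in it: use the built-in setting.
    return builtin_default
```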

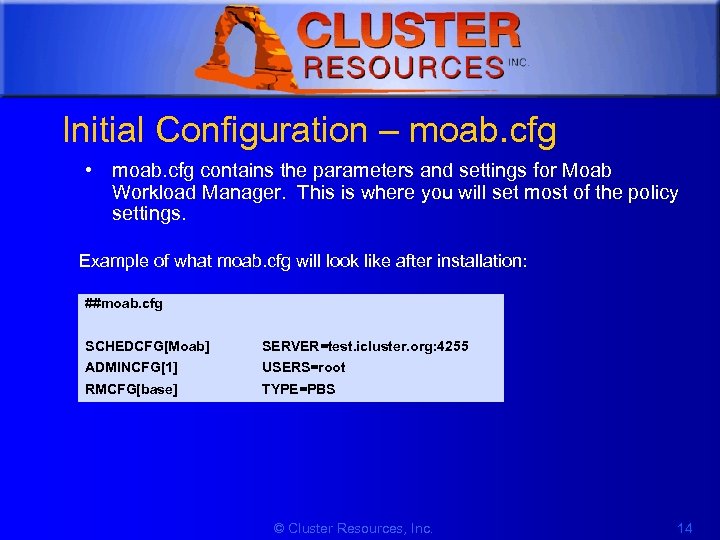

Initial Configuration – moab.cfg

moab.cfg contains the parameters and settings for Moab Workload Manager; this is where you will set most of the policy settings. Example of what moab.cfg will look like after installation:

    ##moab.cfg
    SCHEDCFG[Moab] SERVER=test.icluster.org:4255
    ADMINCFG[1]    USERS=root
    RMCFG[base]    TYPE=PBS
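
The lines in the example follow a `PARAMETER[INDEX] ATTRIBUTE=VALUE` pattern. The sketch below splits such lines into tuples to make that structure explicit. It is a hypothetical reader for illustration, not Moab's parser; it assumes `#` starts a comment and that values contain no spaces or `#`.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical pattern for lines such as "SCHEDCFG[Moab] SERVER=host:4255".
LINE_RE = re.compile(r"^(?P<param>[A-Z]+)(?:\[(?P<index>[^\]]*)\])?\s+(?P<rest>.+)$")


def parse_moab_cfg(text: str) -> List[Tuple[str, Optional[str], Dict[str, str]]]:
    """Split moab.cfg-style lines into (parameter, index, attributes)."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding blanks
        if not line:
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue  # not in PARAM[INDEX] ATTR=VALUE form
        attrs = {}
        for token in match.group("rest").split():
            key, sep, value = token.partition("=")
            attrs[key] = value if sep else ""
        entries.append((match.group("param"), match.group("index"), attrs))
    return entries


sample = """##moab.cfg
SCHEDCFG[Moab] SERVER=test.icluster.org:4255
ADMINCFG[1] USERS=root
RMCFG[base] TYPE=PBS
"""
for entry in parse_moab_cfg(sample):
    print(entry)
```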

Supported Platforms/Environments

- Resource Managers – TORQUE, OpenPBS, PBSPro, LSF, LoadLeveler, SLURM, BProc, clubMASK, S3, WIKI
- Operating Systems – Red Hat, SUSE, Fedora, Debian, FreeBSD, (+ all known variants of Linux), AIX, IRIX, HP-UX, OS X, OSF/Tru64, SunOS, Solaris, (+ all known variants of UNIX)
- Hardware – Intel x86, Intel IA-32, Intel IA-64, AMD x86, AMD Opteron, SGI Altix, HP, IBM SP, IBM xSeries, IBM pSeries, IBM iSeries, Mac G4 and G5

Basic Parameters

- SCHEDCFG – specifies how the Moab server will execute and communicate with client requests.
  - Example: `SCHEDCFG[orion] SERVER=cw.psu.edu`
- ADMINCFG – Moab provides role-based security through multiple levels of admin access.
  - Example: the following enables users greg and thomas as level 1 admins: `ADMINCFG[1] USERS=greg,thomas`
  - NOTE: Moab may only be launched by the primary admin user ID.
- RMCFG – for Moab to interact properly with a resource manager, the interface to that resource manager must be defined.
  - Example: to interface with a TORQUE resource manager, the following may be used: `RMCFG[torque1] TYPE=pbs`
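
Role-based admin access means each user can be mapped to an admin level. The sketch below looks up the level granted by ADMINCFG-style entries. It assumes that a lower level number means more privilege (level 1 being full admin access) and that the most privileged listing wins; `admin_level` and the `helpdesk` user are hypothetical, for illustration only.

```python
from typing import Dict, List, Optional


def admin_level(user: str, admincfg: Dict[int, List[str]]) -> Optional[int]:
    """Return the most privileged (lowest-numbered) level listing `user`, or None."""
    levels = [level for level, users in admincfg.items() if user in users]
    return min(levels) if levels else None


# ADMINCFG[1] USERS=greg,thomas ; level 3 entry is a hypothetical example.
admins = {1: "greg,thomas".split(","), 3: ["helpdesk"]}
print(admin_level("thomas", admins))  # 1
print(admin_level("alice", admins))   # None
```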

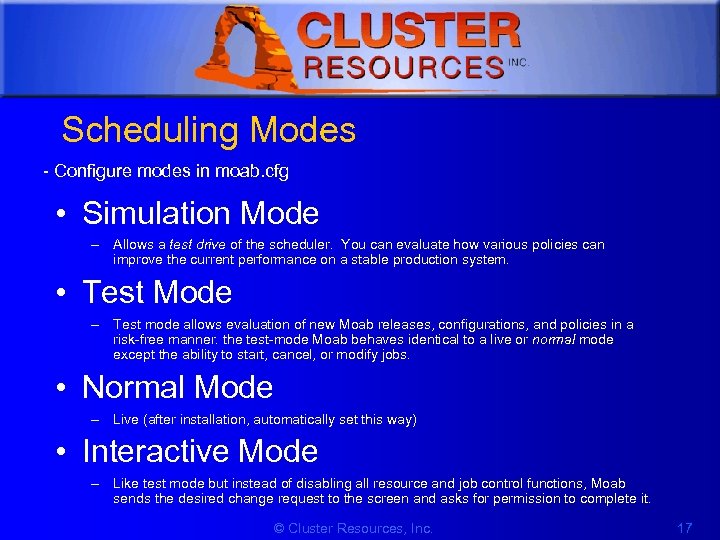

Scheduling Modes – configured in moab.cfg

- Simulation Mode – allows a test drive of the scheduler. You can evaluate how various policies could improve current performance on a stable production system.
- Test Mode – allows evaluation of new Moab releases, configurations, and policies in a risk-free manner. In test mode, Moab behaves identically to live (normal) mode except that it cannot start, cancel, or modify jobs.
- Normal Mode – live operation (the default setting after installation).
- Interactive Mode – like test mode, but instead of disabling all resource and job control functions, Moab sends each desired change request to the screen and asks for permission to complete it.
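
The difference between the live, test, and interactive modes is essentially what happens to a change request (start, cancel, or modify a job). A hypothetical model of that decision follows; `make_executor` and the returned strings are invented for illustration, and simulation mode is left out because it replays workload rather than acting on live requests.

```python
from typing import Callable


def make_executor(mode: str, confirm: Callable[[str], bool] = lambda req: False):
    """Return a handler that decides what to do with a job/resource change request.

    Hypothetical model of the modes described above:
      NORMAL      -> perform the change
      TEST        -> report the change but never perform it
      INTERACTIVE -> ask `confirm`, and perform the change only if it approves
    """
    def execute(request: str) -> str:
        if mode == "NORMAL":
            return f"done: {request}"
        if mode == "TEST":
            return f"would do: {request}"
        if mode == "INTERACTIVE":
            return f"done: {request}" if confirm(request) else f"skipped: {request}"
        raise ValueError(f"unsupported mode: {mode}")
    return execute


test_mode = make_executor("TEST")
print(test_mode("start job 42"))  # would do: start job 42
```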

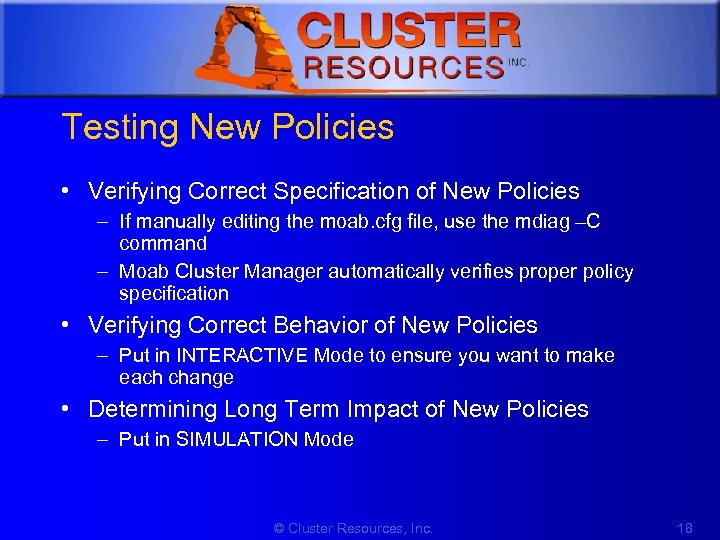

Testing New Policies

- Verifying correct specification of new policies
  - If manually editing the moab.cfg file, use the `mdiag -C` command.
  - Moab Cluster Manager automatically verifies proper policy specification.
- Verifying correct behavior of new policies
  - Run Moab in INTERACTIVE mode so that you must confirm each change.
- Determining the long-term impact of new policies
  - Run Moab in SIMULATION mode.

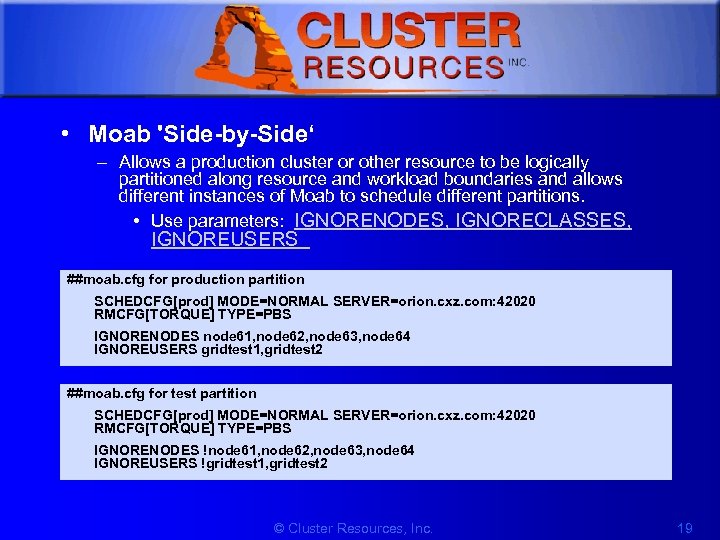

Moab 'Side-by-Side'

- Allows a production cluster or other resource to be logically partitioned along resource and workload boundaries, with different instances of Moab scheduling different partitions.
- Uses the parameters IGNORENODES, IGNORECLASSES, and IGNOREUSERS.

Example configuration for the two instances:

    ##moab.cfg for production partition
    SCHEDCFG[prod] MODE=NORMAL SERVER=orion.cxz.com:42020
    RMCFG[TORQUE]  TYPE=PBS
    IGNORENODES    node61,node62,node63,node64
    IGNOREUSERS    gridtest1,gridtest2

    ##moab.cfg for test partition
    SCHEDCFG[test] MODE=NORMAL SERVER=orion.cxz.com:42030
    RMCFG[TORQUE]  TYPE=PBS
    IGNORENODES    !node61,node62,node63,node64
    IGNOREUSERS    !gridtest1,gridtest2
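
The two IGNORENODES values are meant to split the nodes into disjoint partitions. The sketch below checks that split, assuming (as the example suggests) that a plain list names nodes to ignore while a leading `!` means "ignore every node except these". `scheduled_nodes` and the node57 to node64 inventory are hypothetical.

```python
from typing import List, Set


def scheduled_nodes(all_nodes: List[str], ignore_spec: str) -> Set[str]:
    """Nodes an instance would schedule, given an IGNORENODES-style value."""
    negate = ignore_spec.startswith("!")          # assumed: '!' inverts the list
    listed = set(ignore_spec.lstrip("!").split(","))
    if negate:
        return {n for n in all_nodes if n in listed}
    return {n for n in all_nodes if n not in listed}


nodes = [f"node{i}" for i in range(57, 65)]  # node57 .. node64
prod = scheduled_nodes(nodes, "node61,node62,node63,node64")
test_part = scheduled_nodes(nodes, "!node61,node62,node63,node64")
print(sorted(prod))       # ['node57', 'node58', 'node59', 'node60']
print(sorted(test_part))  # ['node61', 'node62', 'node63', 'node64']
```

Under this reading every node belongs to exactly one instance, which is the property a side-by-side setup relies on.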

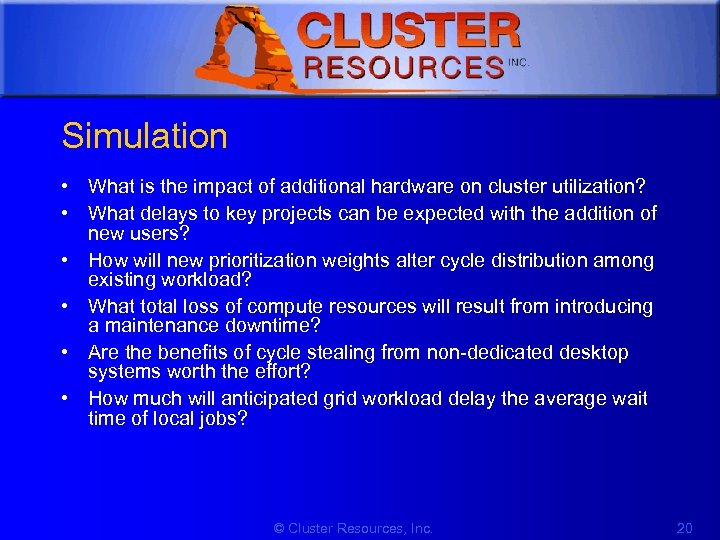

Simulation

- What is the impact of additional hardware on cluster utilization?
- What delays to key projects can be expected with the addition of new users?
- How will new prioritization weights alter cycle distribution among existing workload?
- What total loss of compute resources will result from introducing a maintenance downtime?
- Are the benefits of cycle stealing from non-dedicated desktop systems worth the effort?
- How much will anticipated grid workload delay the average wait time of local jobs?

Scheduling Iterations

1. Update State Information
2. Refresh Reservations
3. Schedule Reserved Jobs
4. Schedule Priority Jobs
5. Backfill Jobs
6. Update Statistics
7. Handle User Requests
8. Perform Next Scheduling Cycle
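
The "Schedule Priority Jobs" and "Backfill Jobs" steps can be illustrated with a deliberately simplified model: start jobs in priority order while they fit, reserve the earliest start time for the first job that does not, then start lower-priority jobs that fit now and finish before that reservation. This is a generic conservative backfill sketch, not Moab's actual algorithm; it ignores node features, other reservations, and policies, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    nodes: int
    walltime: int   # wallclock limit in seconds
    priority: int


def schedule_iteration(now: int, total_nodes: int,
                       running: List[Tuple[int, int]],
                       queue: List[Job]) -> Tuple[List[str], int]:
    """One simplified pass over the queue.

    `running` holds (end_time, nodes) for active jobs and is updated in place.
    Returns the names of jobs started and the start time of the reservation
    made for the first blocked job (-1 if none was needed or possible).
    """
    free = total_nodes - sum(nodes for _, nodes in running)
    started: List[str] = []
    ordered = sorted(queue, key=lambda j: j.priority, reverse=True)
    for index, job in enumerate(ordered):
        if job.nodes <= free:                      # schedule priority jobs
            free -= job.nodes
            running.append((now + job.walltime, job.nodes))
            started.append(job.name)
            continue
        # First job that cannot start: reserve the earliest time enough nodes free up.
        reservation_start, avail = -1, free
        for end_time, nodes in sorted(running):
            avail += nodes
            if avail >= job.nodes:
                reservation_start = end_time
                break
        # Backfill: lower-priority jobs that fit now and finish before the reservation.
        for other in ordered[index + 1:]:
            if other.nodes <= free and now + other.walltime <= reservation_start:
                free -= other.nodes
                running.append((now + other.walltime, other.nodes))
                started.append(other.name)
        return started, reservation_start
    return started, -1


queue = [Job("A", 6, 50, 10), Job("B", 2, 80, 5), Job("C", 2, 200, 1)]
print(schedule_iteration(0, 8, [(100, 4)], queue))  # (['B'], 100)
```

Job A cannot start until the running 4-node job ends at t=100, so it gets a reservation there; B fits in the idle nodes and ends by t=80, so it is backfilled, while C would run past t=100 and must wait.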

Job Flow

1. Determine Basic Job Feasibility
2. Prioritize Jobs
3. Enforce Configured Throttling Policies
4. Determine Resource Availability
5. Allocate Resources to Job
6. Launch Job
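
The job-flow steps can be read as a pipeline of filters. A minimal sketch, assuming a single throttling policy (a hypothetical per-user limit on jobs launched in this pass) and nodes as the only resource; `QueuedJob` and `job_flow` are invented names, and "launch" just records the decision.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class QueuedJob:
    name: str
    user: str
    nodes: int
    priority: int


def job_flow(queue: List[QueuedJob], free_nodes: int, total_nodes: int,
             max_jobs_per_user: int) -> List[str]:
    """Walk the job-flow steps above and return the names of jobs launched."""
    # 1. Basic feasibility: a job can never run if it wants more nodes than exist.
    feasible = [j for j in queue if j.nodes <= total_nodes]
    # 2. Prioritize (highest priority first).
    feasible.sort(key=lambda j: j.priority, reverse=True)
    launched: List[str] = []
    per_user: Counter = Counter()
    for job in feasible:
        # 3. Throttling policy (hypothetical per-user job limit).
        if per_user[job.user] >= max_jobs_per_user:
            continue
        # 4. Resource availability.
        if job.nodes > free_nodes:
            continue
        # 5. Allocate resources and 6. launch (here: record the decision).
        free_nodes -= job.nodes
        per_user[job.user] += 1
        launched.append(job.name)
    return launched


q = [QueuedJob("j1", "alice", 4, 10), QueuedJob("j2", "alice", 2, 9),
     QueuedJob("j3", "bob", 64, 8), QueuedJob("j4", "bob", 2, 1)]
print(job_flow(q, free_nodes=8, total_nodes=16, max_jobs_per_user=1))  # ['j1', 'j4']
```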

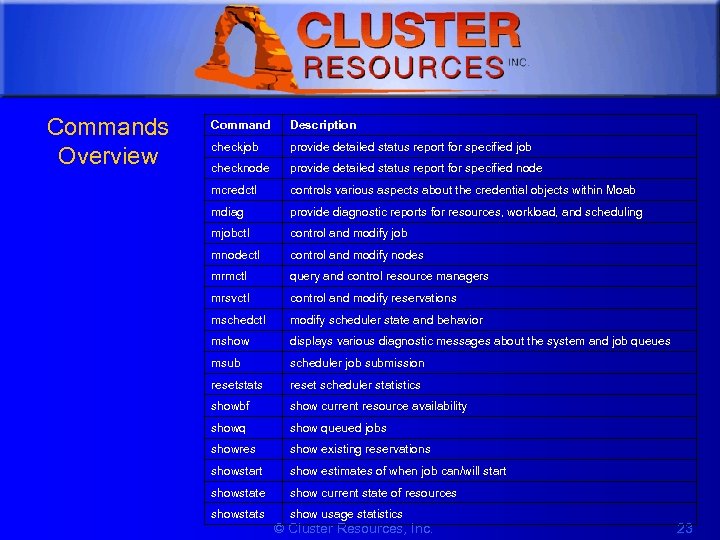

Commands Overview

| Command | Description |
|---|---|
| checkjob | provide detailed status report for specified job |
| checknode | provide detailed status report for specified node |
| mcredctl | control various aspects of the credential objects within Moab |
| mdiag | provide diagnostic reports for resources, workload, and scheduling |
| mjobctl | control and modify jobs |
| mnodectl | control and modify nodes |
| mrmctl | query and control resource managers |
| mrsvctl | control and modify reservations |
| mschedctl | modify scheduler state and behavior |
| mshow | display various diagnostic messages about the system and job queues |
| msub | scheduler job submission |
| resetstats | reset scheduler statistics |
| showbf | show current resource availability |
| showq | show queued jobs |
| showres | show existing reservations |
| showstart | show estimates of when a job can/will start |
| showstate | show current state of resources |
| showstats | show usage statistics |

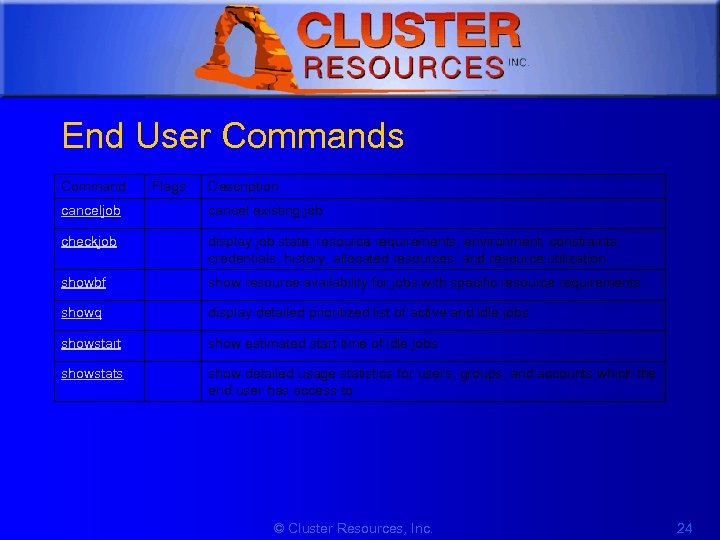

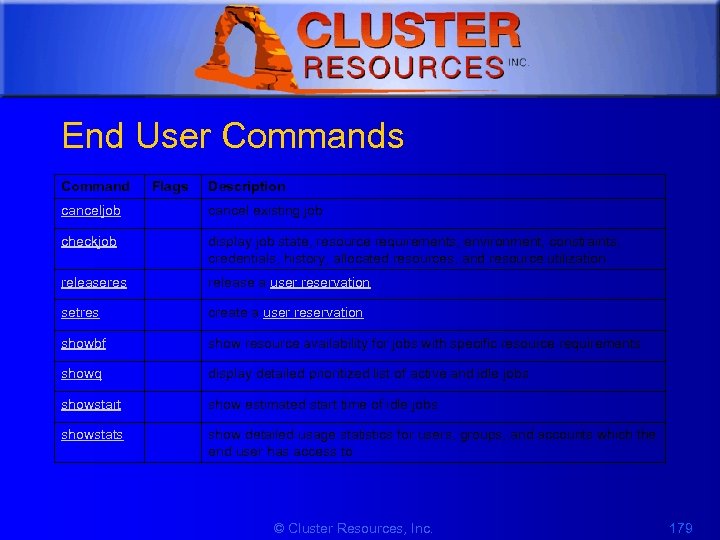

End User Commands

| Command | Description |
|---|---|
| canceljob | cancel existing job |
| checkjob | display job state, resource requirements, environment, constraints, credentials, history, allocated resources, and resource utilization |
| showbf | show resource availability for jobs with specific resource requirements |
| showq | display detailed prioritized list of active and idle jobs |
| showstart | show estimated start time of idle jobs |
| showstats | show detailed usage statistics for users, groups, and accounts to which the end user has access |

Scheduling Objects

Moab functions by manipulating five primary, elementary objects:
- Jobs
- Nodes
- Reservations
- QoS structures
- Policies

Jobs

- Job information is provided to the Moab scheduler by a resource manager (such as LoadLeveler, PBS, Wiki, or LSF).
- Job attributes include:
  - ownership of the job
  - job state
  - amount and type of resources required by the job
  - wallclock limit
- A job consists of one or more requirements, each of which requests a number of resources of a given type.
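
The attributes listed above map naturally onto a small record type, with a job holding a list of requirements. A hypothetical data model for illustration; the class names, the "Idle" state string, and the `matlab_license` resource type are assumptions, not Moab's internal structures.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Requirement:
    """One requirement: `count` units of a single resource type."""
    resource_type: str
    count: int


@dataclass
class JobRecord:
    owner: str
    state: str                # e.g. "Idle" or "Running"
    wallclock_limit: int      # seconds
    requirements: List[Requirement] = field(default_factory=list)

    def totals(self) -> Dict[str, int]:
        """Sum the requested amount of each resource type over all requirements."""
        out: Dict[str, int] = {}
        for req in self.requirements:
            out[req.resource_type] = out.get(req.resource_type, 0) + req.count
        return out


job = JobRecord("alice", "Idle", 3600,
                [Requirement("procs", 8), Requirement("matlab_license", 1),
                 Requirement("procs", 4)])
print(job.totals())  # {'procs': 12, 'matlab_license': 1}
```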

Nodes

- Within Moab, a node is a collection of resources with a particular set of associated attributes.
- A node is defined as one or more CPUs, together with associated memory, and possibly other compute resources such as local disk, swap, network adapters, software licenses, etc.
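
Treating a node as "CPUs plus memory plus other resources" makes the basic fit test a per-resource comparison. A minimal sketch with hypothetical names (`Node`, `can_host`, `disk_gb`); real node matching also involves features, state, and policies that are not modeled here.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Node:
    name: str
    procs: int
    memory_mb: int
    other: Dict[str, int] = field(default_factory=dict)  # e.g. {"disk_gb": 80}

    def can_host(self, procs: int, memory_mb: int, **other: int) -> bool:
        """True if this node alone has at least the requested amount of every resource."""
        if procs > self.procs or memory_mb > self.memory_mb:
            return False
        # Resources the node does not report count as zero.
        return all(self.other.get(name, 0) >= amount for name, amount in other.items())


n = Node("node017", procs=2, memory_mb=4096, other={"disk_gb": 80})
print(n.can_host(2, 2048, disk_gb=40))  # True
print(n.can_host(4, 2048))              # False
```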

Advance Reservations

- An object which dedicates a block of specific resources for a particular use.
- Each reservation consists of a list of resources, an access control list, and a time range for which this access control list will be enforced.
- The reservation prevents the listed resources from being used in a way not described by the access control list during the time range specified.
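
The three parts of a reservation (resources, access control list, time range) combine into a single check. A minimal sketch, assuming the ACL is just a set of user names (real ACLs can also name groups, accounts, classes, and QoS) and half-open time intervals; `Reservation` and `permits` are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Reservation:
    nodes: FrozenSet[str]          # resources the reservation covers
    allowed_users: FrozenSet[str]  # simplified access control list
    start: int                     # epoch seconds, inclusive
    end: int                       # epoch seconds, exclusive

    def permits(self, user: str, node: str, t_start: int, t_end: int) -> bool:
        """Can `user` use `node` over [t_start, t_end) without violating this reservation?"""
        overlaps = t_start < self.end and self.start < t_end
        if node not in self.nodes or not overlaps:
            return True  # outside the reserved resources or time range
        return user in self.allowed_users


rsv = Reservation(frozenset({"node61", "node62"}), frozenset({"gridtest1"}), 1000, 2000)
print(rsv.permits("alice", "node61", 1500, 1600))  # False
```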

Resource Managers

- Moab can be configured to manage more than one resource manager simultaneously, even resource managers of different types.
- Moab aggregates information from the RMs to fully manage workload, resources, and cluster policies.
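
Aggregating several resource managers amounts to merging their per-node reports into one view. A minimal sketch using a simple last-report-wins merge; that precedence rule, the function name, and the sample attributes are assumptions for illustration, not how Moab resolves conflicts.

```python
from typing import Dict, List


def aggregate(reports: List[Dict[str, Dict[str, str]]]) -> Dict[str, Dict[str, str]]:
    """Merge per-node attribute reports from several resource managers.

    Each report maps node name -> attributes. Later reports add to (and, on
    conflicting keys, override) earlier ones.
    """
    world: Dict[str, Dict[str, str]] = {}
    for report in reports:
        for node, attrs in report.items():
            world.setdefault(node, {}).update(attrs)
    return world


batch_rm = {"node01": {"state": "Idle", "procs": "2"}}
license_rm = {"node01": {"matlab": "1"}, "node02": {"matlab": "0"}}
print(aggregate([batch_rm, license_rm]))
```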

3. Troubleshooting and Diagnostics

- Object Messages
- Diagnostic Commands
- Admin Notification
- Logging
- Tracking System Failures
- Checkpointing
- Debuggers

http://www.clusterresources.com/products/mwm/moabdocs/14.0troubleshootingandsysmaintenance.shtml

Object Messages

- Messages can hold information regarding failures and key events.
- Messages possess event time, owner, expiration time, and event count information.
- Resource managers and peer services can attach messages to objects.
- Admins can attach messages.
- Multiple messages per object are supported.
- Messages are persistent.
- http://www.clusterresources.com/products/mwm/moabdocs/commands/mschedctl.shtml
- http://www.clusterresources.com/products/mwm/moabdocs/14.3messagebuffer.shtml

Diagnostics

Moab's diagnostic commands present detailed state information, which can be used to:
- identify scheduling problems
- summarize performance
- evaluate current operation, reporting on any unexpected or potentially erroneous conditions
- where possible, correct detected problems if desired

mdiag

- Displays object state/health
- Displays object configuration
  - attributes, resources, policies
- Displays object history and performance
- Displays object failures and messages

http://www.clusterresources.com/products/mwm/moabdocs/commands/mdiag.shtml

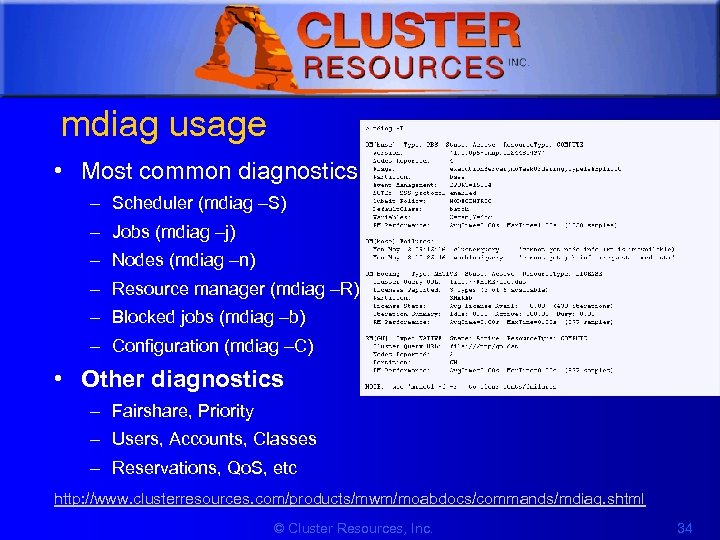

mdiag usage

- Most common diagnostics
  - Scheduler (`mdiag -S`)
  - Jobs (`mdiag -j`)
  - Nodes (`mdiag -n`)
  - Resource manager (`mdiag -R`)
  - Blocked jobs (`mdiag -b`)
  - Configuration (`mdiag -C`)
- Other diagnostics
  - Fairshare, priority
  - Users, accounts, classes
  - Reservations, QoS, etc.

http://www.clusterresources.com/products/mwm/moabdocs/commands/mdiag.shtml
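
When these diagnostics are run from scripts (for example, a periodic health check), a small wrapper keeps the flag choices in one place. A sketch that uses only the flags listed above; the target names, `mdiag_command`, and `run_mdiag` are hypothetical, and `run_mdiag` assumes `mdiag` is on the PATH of a host that can reach the Moab server.

```python
import subprocess
from typing import List

# Flags as listed on the slide above; the dictionary keys are hypothetical labels.
MDIAG_FLAGS = {
    "scheduler": "-S",
    "jobs": "-j",
    "nodes": "-n",
    "rm": "-R",
    "blocked": "-b",
    "config": "-C",
}


def mdiag_command(target: str) -> List[str]:
    """Build the argument vector for an mdiag report on the given target."""
    return ["mdiag", MDIAG_FLAGS[target]]


def run_mdiag(target: str) -> str:
    """Run mdiag and return its standard output (raises if mdiag fails)."""
    result = subprocess.run(mdiag_command(target), capture_output=True,
                            text=True, check=True)
    return result.stdout


print(mdiag_command("blocked"))  # ['mdiag', '-b']
```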

mdiag details

- Performs numerous internal health and consistency checks
  - race conditions, object configuration inconsistencies, possible external failures
- Not just for failures
- Provides status, configuration, and current performance
- Enables Moab to act as an information service (`--flags=xml`)
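
Using Moab as an information service means consuming the XML report from another program. Because the exact XML schema is not shown here, the sketch below assumes nothing beyond well-formed XML: it counts the direct children of the root element by tag name. `summarize_xml` is a hypothetical helper; feeding it the output of an mdiag call with `--flags=xml` is the intended, but untested, usage.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def summarize_xml(document: str) -> Counter:
    """Count the direct child elements of the root element, by tag name."""
    root = ET.fromstring(document)
    return Counter(child.tag for child in root)
```

For example, the stdout captured from `subprocess.run(["mdiag", "-n", "--flags=xml"], capture_output=True, text=True)` could be passed straight to `summarize_xml`.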

Job Troubleshooting

To determine why a particular job will not start, several commands can be helpful:
- `checkjob -v`
  - checkjob evaluates the ability of a job to start immediately. Tests include resource access, node state, and job constraints (i.e., startdate, taskspernode, QOS, etc.). Additional command-line flags may be specified to provide further information.
  - `-l`

Other Diagnostics

- The checkjob and checknode commands show:
  - why a job cannot start
  - which nodes can be available
  - information regarding recent events impacting the current job
  - node state

Issues with Client Commands

- Use Moab's built-in client logging, e.g. `showq --loglevel=9`, or
- check the Moab log files.

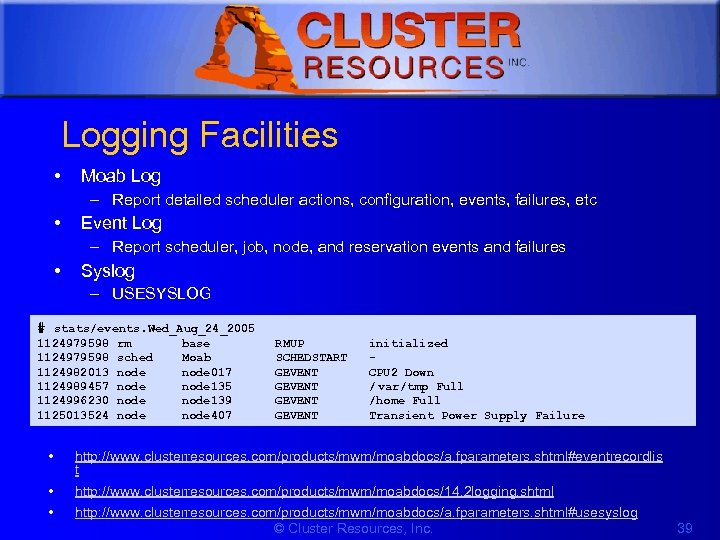

Logging Facilities

- Moab Log – reports detailed scheduler actions, configuration, events, failures, etc.
- Event Log – reports scheduler, job, node, and reservation events and failures.
- Syslog – enabled with the USESYSLOG parameter.

Event log example from stats/events.Wed_Aug_24_2005 (record timestamps: 1124979598, 1124982013, 1124989457, 1124996230, 1125013524):

    rm base RMUP initialized
    sched Moab SCHEDSTART
    node 017 GEVENT CPU2 Down
    node 135 GEVENT /var/tmp Full
    node 139 GEVENT /home Full
    node 407 GEVENT Transient Power Supply Failure

- http://www.clusterresources.com/products/mwm/moabdocs/a.fparameters.shtml#eventrecordlist
- http://www.clusterresources.com/products/mwm/moabdocs/14.2logging.shtml
- http://www.clusterresources.com/products/mwm/moabdocs/a.fparameters.shtml#usesyslog
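
The event records appear to follow a `<epoch time> <object type> <object id> <event> <details...>` layout. The parser below is a sketch under that assumed layout, inferred from the example rather than from a format specification; `Event` and `parse_event` are hypothetical names, and the sample record pairs the first timestamp with the first event purely for illustration.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class Event(NamedTuple):
    time: datetime
    obj_type: str
    obj_id: str
    event: str
    details: str


def parse_event(line: str) -> Event:
    """Parse '<epoch> <object type> <object id> <event> <details...>' (assumed layout)."""
    epoch, obj_type, obj_id, event, *rest = line.split()
    return Event(datetime.fromtimestamp(int(epoch), tz=timezone.utc),
                 obj_type, obj_id, event, " ".join(rest))


e = parse_event("1124979598 rm base RMUP initialized")
print(e.obj_type, e.obj_id, e.event, e.details)  # rm base RMUP initialized
```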

Logging Basics

- LOGDIR – indicates the directory for log files
- LOGFILE – indicates the path name of the log file
- LOGFILEMAXSIZE – indicates the maximum size of the log file before rolling
- LOGFILEROLLDEPTH – indicates the maximum number of log files to maintain
- LOGLEVEL – indicates the verbosity of logging
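
LOGFILEMAXSIZE and LOGFILEROLLDEPTH together describe log rolling: once the log reaches a size limit it is renamed (moab.log becomes moab.log.1, as in the File Locations list) and only a limited number of old copies are kept. The sketch below is an assumed model of that scheme, not Moab's code; in particular, whether the roll depth counts the live log is an assumption. After rolling, the writer would start a fresh log file.

```python
import os


def roll_logs(log_path: str, max_size: int, roll_depth: int) -> bool:
    """Roll log_path once it reaches max_size bytes; return True if it rolled.

    Assumed scheme: log.(N-1) -> log.N, ..., log -> log.1, so at most
    roll_depth old copies (log.1 .. log.<roll_depth>) are kept.
    """
    if roll_depth < 1:
        raise ValueError("roll_depth must be at least 1")
    if not os.path.exists(log_path) or os.path.getsize(log_path) < max_size:
        return False
    oldest = f"{log_path}.{roll_depth}"
    if os.path.exists(oldest):
        os.remove(oldest)                   # drop the copy that falls off the end
    for n in range(roll_depth - 1, 0, -1):  # shift newer copies up by one
        src = f"{log_path}.{n}"
        if os.path.exists(src):
            os.replace(src, f"{log_path}.{n + 1}")
    os.replace(log_path, f"{log_path}.1")   # current log becomes log.1
    return True
```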

Function Level Information

In source and debug releases, each subroutine is logged, along with all printable parameters:

    ##moab.log
    MPolicy.Check(orion.322,2,Reason)

Status Information

Information about internal status is logged at all LOGLEVELs. Critical internal status is indicated at low LOGLEVELs, while less critical and more verbose status information is logged at higher LOGLEVELs.

    ##moab.log
    INFO: job orion.4228 rejected (max user jobs)
    INFO: job fr4n01.923.0 rejected (maxjobperuser policy failure)
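
The LOGLEVEL rule described above (critical messages at low levels, verbose detail at high levels) is a threshold filter: a message is written only if its level does not exceed the configured LOGLEVEL. A toy model; `LevelLog` and the levels assigned to the two sample messages are hypothetical.

```python
from typing import List


class LevelLog:
    """Toy log that keeps a message only if its level <= the configured LOGLEVEL."""

    def __init__(self, loglevel: int) -> None:
        self.loglevel = loglevel
        self.lines: List[str] = []

    def log(self, level: int, message: str) -> None:
        if level <= self.loglevel:  # low level = more critical, always kept first
            self.lines.append(message)


log = LevelLog(loglevel=3)
log.log(1, "INFO: job orion.4228 rejected (max user jobs)")  # hypothetical level 1
log.log(7, "MPolicy.Check(orion.322,2,Reason)")              # hypothetical level 7
print(log.lines)  # ['INFO: job orion.4228 rejected (max user jobs)']
```

Raising LOGLEVEL to 7 or higher in this model would also keep the function-level trace line.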

Scheduler Warnings
• Warnings are logged when the scheduler detects an unexpected value or receives an unexpected result from a system call or subroutine.
##moab.log
WARNING: cannot open fairshare data file '/opt/moab/stats/FS.87000'

Scheduler Alerts
• Alerts are logged when the scheduler detects unexpected events that may indicate problems in other systems or objects.
##moab.log
ALERT: job orion.72 cannot run. deferring job for 360 Seconds

Scheduler Errors
• Errors are logged when the scheduler detects problems that impact its ability to properly schedule the cluster.
##moab.log
ERROR: cannot connect to Loadleveler API

Searching Moab Logs
• Major failures are reported via the mdiag -S command, but they can also be uncovered by searching the logs with grep:
> grep -E "WARNING|ALERT|ERROR" moab.log
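
A per-severity tally is sometimes more useful than a raw list of matching lines, and the same search can be sketched in Python to produce one. The sketch assumes the `TAG:` prefix seen in the log excerpts above. It is therefore slightly stricter than the grep pattern, which matches the words anywhere in a line. `summarize` is a hypothetical helper.

```python
import re
import sys
from collections import Counter
from typing import Iterable

# Same severities as: grep -E "WARNING|ALERT|ERROR" moab.log,
# but only when the word is used as a "TAG:" prefix as in the excerpts above.
SEVERITY_RE = re.compile(r"\b(WARNING|ALERT|ERROR):")


def summarize(lines: Iterable[str]) -> Counter:
    """Count log lines per severity tag."""
    counts: Counter = Counter()
    for line in lines:
        match = SEVERITY_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "moab.log"
    with open(path, errors="replace") as log:
        for severity, n in summarize(log).most_common():
            print(f"{severity}: {n}")
```

Pass the log path as the only argument. If no path is given, the script reads `moab.log` in the current directory.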

Event Logs
• Major events are reported to both the Moab log file and the Moab event log. By default, the event log is maintained in the statistics directory and rolls daily, using the naming convention:
– events.WWW_MMM_DD_YYYY (e.g. events.Fri_Aug_19_2005)
##event log format
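
Scripts that process the previous day's event log need to rebuild this file name. The sketch below uses fixed English abbreviations and a zero-padded two-digit day. The example only shows a two-digit day, so padding for days 1 to 9 is an assumption. `event_log_name` is a hypothetical helper.

```python
import datetime

# Fixed English abbreviations, so the result does not depend on the process locale.
DAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")
MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def event_log_name(day: datetime.date) -> str:
    """Return events.WWW_MMM_DD_YYYY for the given date (zero-padded day assumed)."""
    return "events.{}_{}_{:02d}_{}".format(
        DAYS[day.weekday()], MONTHS[day.month - 1], day.day, day.year)


if __name__ == "__main__":
    yesterday = datetime.date.today() - datetime.timedelta(days=1)
    print(event_log_name(yesterday))
```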

Enabling Syslog
• In addition to the log file, the Moab Scheduler can report events it determines to be critical to the UNIX syslog facility via the daemon facility, using priorities ranging from INFO to ERR.
• The verbosity of this logging is not affected by the LOGLEVEL parameter. In addition to errors and critical events, user commands that affect the state of jobs, nodes, or the scheduler may also be logged to syslog.
• Moab syslog messages are reported using the INFO, NOTICE, and ERR syslog priorities.

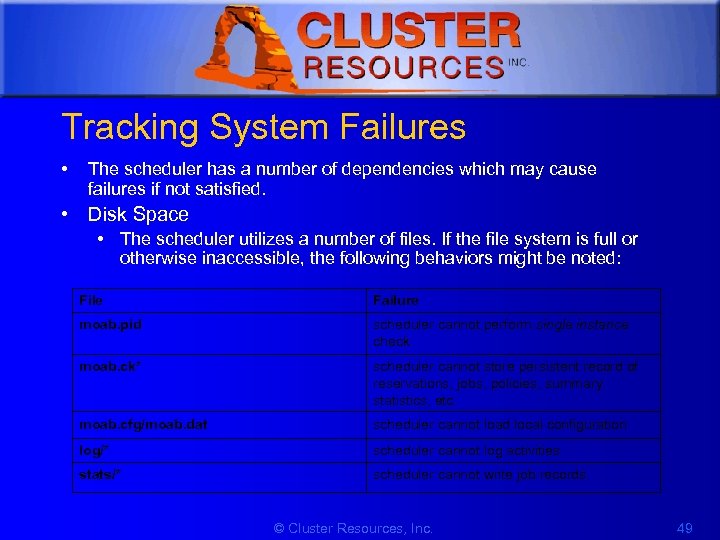

Tracking System Failures
• The scheduler has a number of dependencies which may cause failures if not satisfied.
• Disk Space – the scheduler uses a number of files. If the file system is full or otherwise inaccessible, the following behaviors might be noted (file: failure):
– moab.pid: scheduler cannot perform single instance check
– moab.ck*: scheduler cannot store persistent record of reservations, jobs, policies, summary statistics, etc.
– moab.cfg/moab.dat: scheduler cannot load local configuration
– log/*: scheduler cannot log activities
– stats/*: scheduler cannot write job records
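
A pre-flight check like the one below can catch a full or read-only file system before the scheduler runs into it. The directory list is a hypothetical example, since only `/opt/moab/stats` appears in this section. The 100 MB free-space threshold is an arbitrary placeholder that each site should tune.

```python
import os
import shutil
from typing import Dict, Iterable

# Hypothetical Moab directories; adjust to the site's installation.
DEFAULT_PATHS = ("/opt/moab", "/opt/moab/log", "/opt/moab/stats")


def check_paths(paths: Iterable[str],
                min_free_bytes: int = 100 * 1024 * 1024) -> Dict[str, str]:
    """Return {path: problem} for each directory that is missing, unwritable, or low on space."""
    problems: Dict[str, str] = {}
    for path in paths:
        if not os.path.isdir(path):
            problems[path] = "missing"          # absent, or not a directory
        elif not os.access(path, os.W_OK):
            problems[path] = "not writable"
        elif shutil.disk_usage(path).free < min_free_bytes:
            problems[path] = "low free space"
    return problems


if __name__ == "__main__":
    for path, problem in check_paths(DEFAULT_PATHS).items():
        print(f"{path}: {problem}")
```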

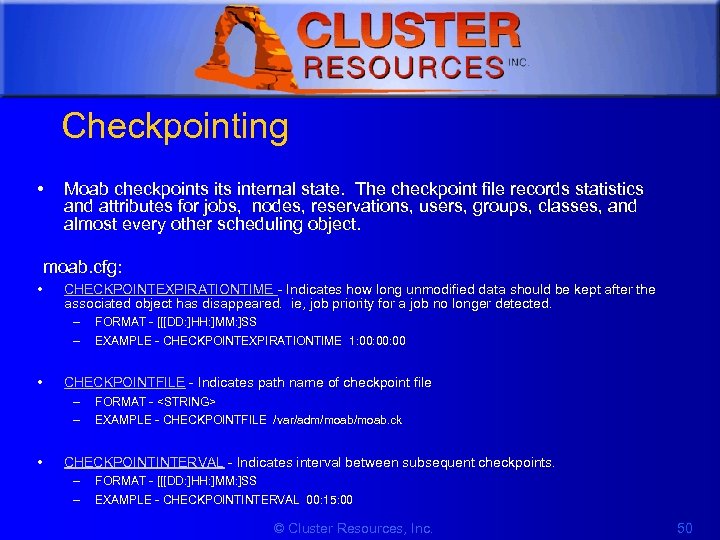

Checkpointing
• Moab checkpoints its internal state. The checkpoint file records statistics and attributes for jobs, nodes, reservations, users, groups, classes, and almost every other scheduling object.
moab.cfg:
• CHECKPOINTEXPIRATIONTIME - Indicates how long unmodified data should be kept after the associated object has disappeared (e.g., the priority of a job that is no longer detected).
– FORMAT - [[[DD:]HH:]MM:]SS
– EXAMPLE - CHECKPOINTEXPIRATIONTIME 1:00:00
• CHECKPOINTFILE - Indicates path name of checkpoint file
– FORMAT -
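
Parameters such as CHECKPOINTEXPIRATIONTIME take durations in the [[[DD:]HH:]MM:]SS form. The sketch below converts that form to seconds, which can help when checking or generating configuration values. It is a hypothetical helper and does not range-check individual fields. For example, it accepts `00:90:00`, which Moab itself may or may not allow.

```python
def parse_moab_duration(text: str) -> int:
    """Convert a [[[DD:]HH:]MM:]SS string to seconds, e.g. '1:00:00' -> 3600."""
    fields = [field.strip() for field in text.split(":")]
    if not 1 <= len(fields) <= 4 or not all(field.isdigit() for field in fields):
        raise ValueError(f"not a [[[DD:]HH:]MM:]SS duration: {text!r}")
    # Fields are read right to left: seconds, minutes, hours, days.
    multipliers = (1, 60, 3600, 86400)
    return sum(int(value) * mult
               for value, mult in zip(reversed(fields), multipliers))


if __name__ == "__main__":
    print(parse_moab_duration("1:00:00"))  # the CHECKPOINTEXPIRATIONTIME example
```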

4. Integration
• High Availability
• License Managers
• Identity Managers
• Allocation Managers
• Site Specific Integration (Native RM)

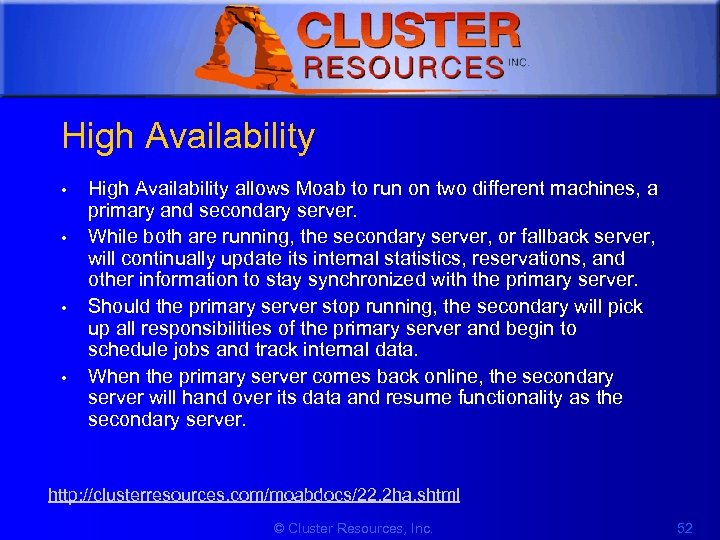

High Availability
• High Availability allows Moab to run on two different machines, a primary and a secondary server. While both are running, the secondary server, or fallback server, continually updates its internal statistics, reservations, and other information to stay synchronized with the primary server.
• Should the primary server stop running, the secondary server picks up all responsibilities of the primary server and begins to schedule jobs and track internal data. When the primary server comes back online, the secondary server hands its data back and resumes its role as the secondary server.
http://clusterresources.com/moabdocs/22.2ha.shtml

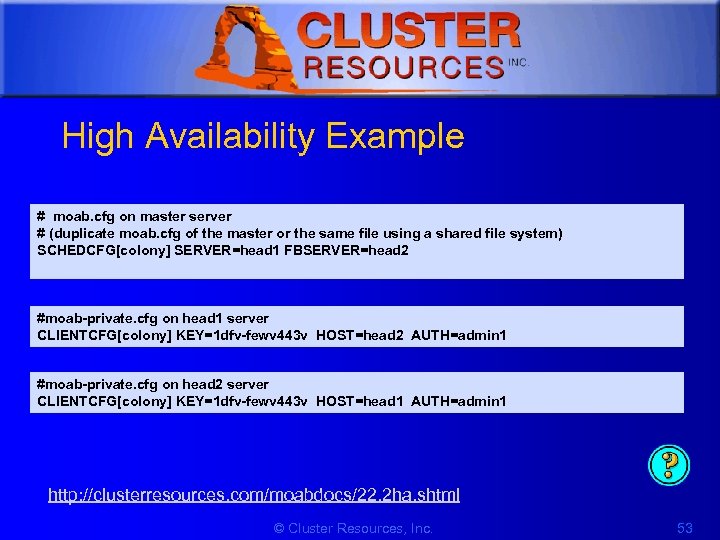

High Availability Example
# moab.cfg on master server
# (duplicate moab.cfg of the master or the same file using a shared file system)
SCHEDCFG[colony] SERVER=head1 FBSERVER=head2
# moab-private.cfg on head1 server
CLIENTCFG[colony] KEY=1dfv-fewv443v HOST=head2 AUTH=admin1
# moab-private.cfg on head2 server
CLIENTCFG[colony] KEY=1dfv-fewv443v HOST=head1 AUTH=admin1
http://clusterresources.com/moabdocs/22.2ha.shtml
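
In the example, both moab-private.cfg files share one KEY, and each HOST names the peer server. A small consistency check can catch copy-paste mistakes between the two files. The sketch handles only the simple `PARAM[INDEX] ATTR=VALUE ...` form shown above, with no quoting or continuation lines. `parse_cfg_line` and `check_ha_pair` are hypothetical helpers, and the KEY value is taken from the example.

```python
import re
from typing import Dict, Tuple

# PARAM[INDEX] followed by whitespace-separated ATTR=VALUE pairs.
LINE_RE = re.compile(r"^(\w+)\[([^\]]+)\]\s+(.*)$")


def parse_cfg_line(line: str) -> Tuple[str, str, Dict[str, str]]:
    """Split 'PARAM[INDEX] A=1 B=2' into ('PARAM', 'INDEX', {'A': '1', 'B': '2'})."""
    match = LINE_RE.match(line.strip())
    if not match:
        raise ValueError(f"not an indexed parameter line: {line!r}")
    attrs: Dict[str, str] = {}
    for token in match.group(3).split():
        key, sep, value = token.partition("=")
        if not sep:
            raise ValueError(f"expected ATTR=VALUE, got {token!r}")
        attrs[key] = value
    return match.group(1), match.group(2), attrs


def check_ha_pair(head1_line: str, head2_line: str, host1: str, host2: str) -> None:
    """Raise ValueError unless both CLIENTCFG lines share a KEY and name each other as HOST."""
    _, index1, attrs1 = parse_cfg_line(head1_line)
    _, index2, attrs2 = parse_cfg_line(head2_line)
    if index1 != index2:
        raise ValueError("scheduler names differ")
    if attrs1.get("KEY") != attrs2.get("KEY"):
        raise ValueError("KEY mismatch")
    if attrs1.get("HOST") != host2 or attrs2.get("HOST") != host1:
        raise ValueError("HOST entries do not point at the peer server")
```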

Enabling High Availability Features
• Moab runs on two machines, a primary and a secondary server
– The secondary server, or fallback server, continually updates its internal statistics, reservations, and other information to stay synchronized with the primary server, and takes over scheduling should the primary server fail

Configuring High Availability
moab.cfg
SCHEDCFG[mycluster] SERVER=primaryhostname:3000
SCHEDCFG[mycluster] FBSERVER=secondaryhostname
• Both the SERVER and FBSERVER are of the format:

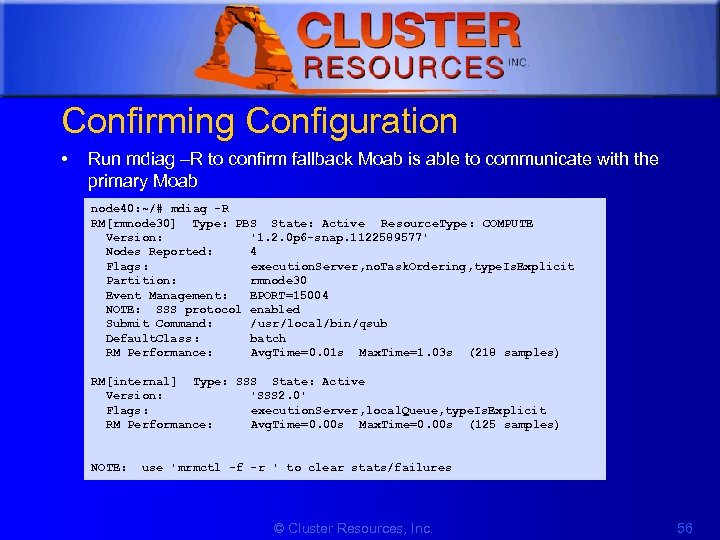

Confirming Configuration
• Run mdiag -R to confirm the fallback Moab is able to communicate with the primary Moab
node40:~/# mdiag -R
RM[rmnode30] Type: PBS State: Active ResourceType: COMPUTE
Version: '1.2.0p6-snap.1122589577'
Nodes Reported: 4
Flags: executionServer,noTaskOrdering,typeIsExplicit
Partition: rmnode30
Event Management: EPORT=15004
NOTE: SSS protocol enabled
Submit Command: /usr/local/bin/qsub
DefaultClass: batch
RM Performance: AvgTime=0.01s MaxTime=1.03s (218 samples)
RM[internal] Type: SSS State: Active
Version: 'SSS2.0'
Flags: executionServer,localQueue,typeIsExplicit
RM Performance: AvgTime=0.00s MaxTime=0.00s (125 samples)
NOTE: use 'mrmctl -f -r ' to clear stats/failures

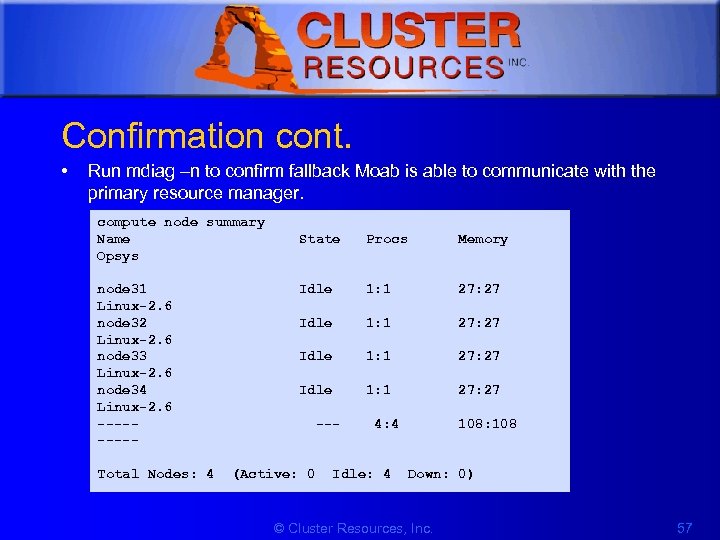

Confirmation cont.
• Run mdiag -n to confirm the fallback Moab is able to communicate with the primary resource manager.
compute node summary
Name     State   Procs   Memory    Opsys
node31   Idle    1:1     27:27     Linux-2.6
node32   Idle    1:1     27:27     Linux-2.6
node33   Idle    1:1     27:27     Linux-2.6
node34   Idle    1:1     27:27     Linux-2.6
-----    ---     4:4     108:108   -----
Total Nodes: 4 (Active: 0 Idle: 4 Down: 0)
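
When this confirmation step is scripted, the node rows can be counted by state. The sketch below assumes the five-column layout of the sample output (name, state, procs, memory, OS). Real `mdiag -n` output may include more columns or different formatting, so treat `count_node_states` as a hypothetical starting point.

```python
from collections import Counter
from typing import Dict


def count_node_states(summary: str) -> Dict[str, int]:
    """Count nodes per state in 'mdiag -n'-style text (layout assumed from the sample)."""
    counts: Counter = Counter()
    for line in summary.splitlines():
        fields = line.split()
        # Data rows: NAME STATE PROCS MEMORY OPSYS, e.g. "node31 Idle 1:1 27:27 Linux-2.6".
        # The title, header, dashed totals row, and "Total Nodes" line fail these checks.
        if len(fields) == 5 and ":" in fields[2] and not fields[0].startswith("-"):
            counts[fields[1]] += 1
    return dict(counts)
```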

License Management
• Moab supports both node-locked and floating license models and even allows mixing the two models simultaneously
• Methods for determining license availability
– Local Consumable Resources
– Resource Manager Based Consumable Resources
– Interfacing to an External License Manager
• Requesting Licenses within Jobs
#qsub
> qsub -l nodes=2,software=blast cmdscript.txt

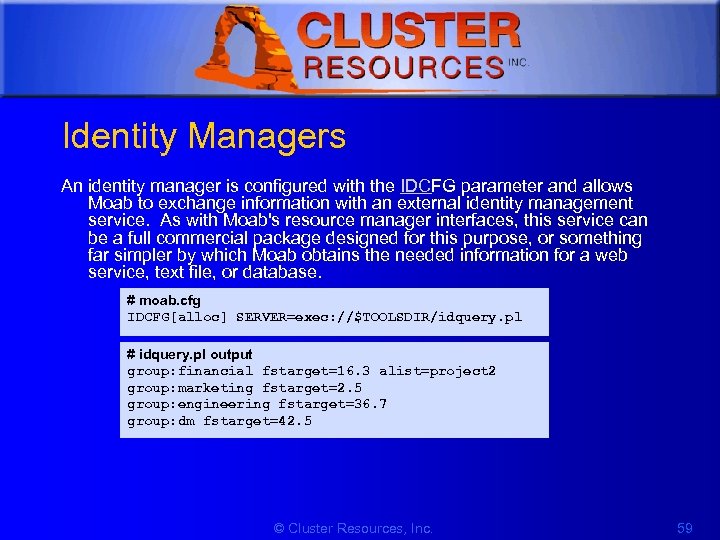

Identity Managers
An identity manager is configured with the IDCFG parameter and allows Moab to exchange information with an external identity management service. As with Moab's resource manager interfaces, this service can be a full commercial package designed for this purpose, or something far simpler by which Moab obtains the needed information from a web service, text file, or database.
# moab.cfg
IDCFG[alloc] SERVER=exec://$TOOLSDIR/idquery.pl
# idquery.pl output
group:financial fstarget=16.3 alist=project2
group:marketing fstarget=2.5
group:engineering fstarget=36.7
group:dm fstarget=42.5
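
The exec:// URL suggests that Moab runs the configured program and reads its standard output, so an identity query script only needs to print one line per object. The sketch below is a hypothetical Python stand-in for idquery.pl. It reproduces the output format shown above from an in-memory table, whereas a real script would fetch the data from the site's directory service or database.

```python
from typing import Dict, List

# Hypothetical site data; a real script would query a directory service or database.
GROUPS: Dict[str, Dict[str, str]] = {
    "financial": {"fstarget": "16.3", "alist": "project2"},
    "marketing": {"fstarget": "2.5"},
    "engineering": {"fstarget": "36.7"},
    "dm": {"fstarget": "42.5"},
}


def format_records(groups: Dict[str, Dict[str, str]]) -> List[str]:
    """Render one 'group:NAME attr=value ...' line per group, as in the idquery.pl output."""
    lines = []
    for name, attrs in groups.items():
        fields = " ".join(f"{key}={value}" for key, value in attrs.items())
        lines.append(f"group:{name} {fields}".rstrip())
    return lines


if __name__ == "__main__":
    # Assumed: Moab reads this program's standard output when IDCFG points at it via exec://.
    print("\n".join(format_records(GROUPS)))
```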

Allocation Management
• Overview
• Gold Capabilities/Features
• Allocation Manager Example

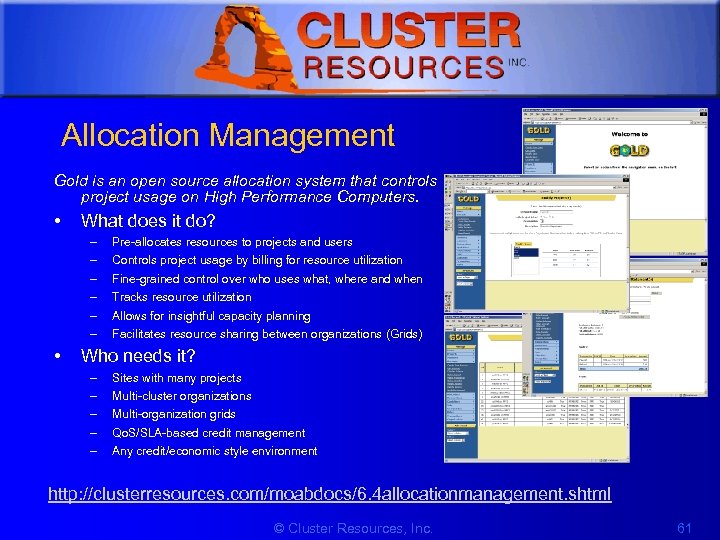

Allocation Management
Gold is an open source allocation system that controls project usage on High Performance Computers.
• What does it do?
– Pre-allocates resources to projects and users
– Controls project usage by billing for resource utilization
– Fine-grained control over who uses what, where and when
– Tracks resource utilization
– Allows for insightful capacity planning
– Facilitates resource sharing between organizations (Grids)
• Who needs it?
– Sites with many projects
– Multi-cluster organizations
– Multi-organization grids
– QoS/SLA-based credit management
– Any credit/economic style environment
http://clusterresources.com/moabdocs/6.4allocationmanagement.shtml

Gold Features
• Enforces long-term usage limits
• Uses Reservations to Enforce Allocations
• Online Bank (Dynamic Charging)
• Journaled Account History
• Promotes Resource Sharing between Organizations (Grids)
• Facilitates capacity planning
http://www.emsl.pnl.gov/docs/mscf/gold
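
One way to picture "Uses Reservations to Enforce Allocations" is as a hold-then-charge cycle. Before a job starts, its estimated cost is reserved against the account. When the job finishes, the hold is released and the actual usage is charged. The toy class below illustrates only that idea. It is not Gold's API or data model, and all names are hypothetical.

```python
from typing import Dict


class Account:
    """Toy allocation account: hold an estimate before a job starts, charge actual use after."""

    def __init__(self, balance: float) -> None:
        self.balance = balance                # credits deposited
        self.holds: Dict[str, float] = {}     # job id -> credits reserved

    def available(self) -> float:
        """Credits not already spent or held for running jobs."""
        return self.balance - sum(self.holds.values())

    def reserve(self, job_id: str, estimate: float) -> bool:
        """Hold the estimated cost; refuse the job if unheld credits are insufficient."""
        if estimate > self.available():
            return False
        self.holds[job_id] = estimate
        return True

    def charge(self, job_id: str, actual: float) -> None:
        """Release the job's hold and debit what it actually used."""
        self.holds.pop(job_id, None)
        self.balance -= actual
```

Because `available()` subtracts outstanding holds, two concurrent jobs cannot both spend the same credits. Preventing that double spend is the purpose of reserving up front.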

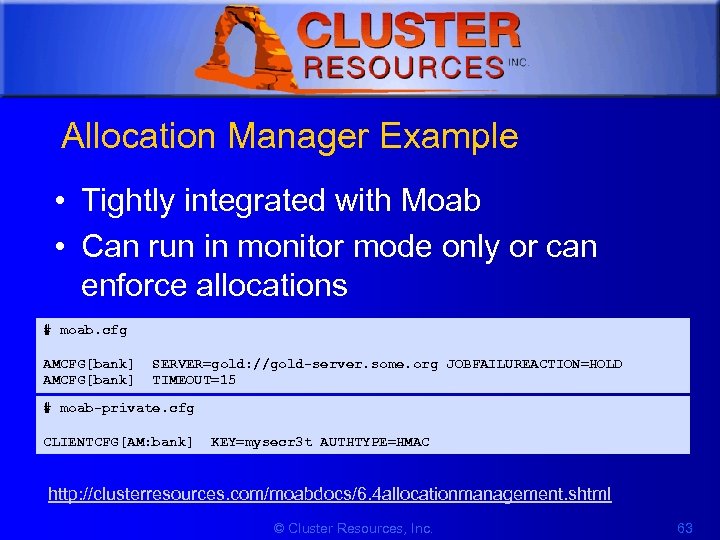

Allocation Manager Example
• Tightly integrated with Moab
• Can run in monitor mode only or can enforce allocations
# moab.cfg
AMCFG[bank] SERVER=gold://gold-server.some.org JOBFAILUREACTION=HOLD
AMCFG[bank] TIMEOUT=15
# moab-private.cfg
CLIENTCFG[AM:bank] KEY=mysecr3t AUTHTYPE=HMAC
http://clusterresources.com/moabdocs/6.4allocationmanagement.shtml

Other Allocation Management Options
• QBank
• Moab’s native interface

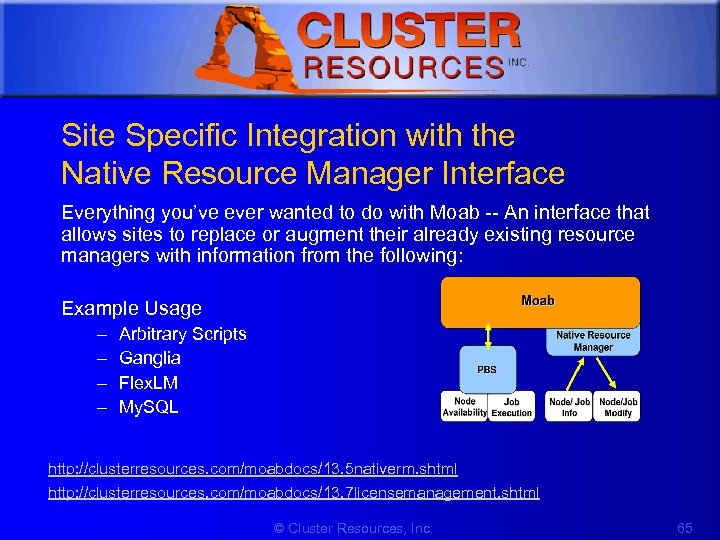

Site Specific Integration with the Native Resource Manager Interface
• Everything you’ve ever wanted to do with Moab: an interface that allows sites to replace or augment their existing resource managers with information from sources such as the following:
• Example Usage
– Arbitrary Scripts
– Ganglia
– FlexLM
– MySQL
http://clusterresources.com/moabdocs/13.5nativerm.shtml
http://clusterresources.com/moabdocs/13.7licensemanagement.shtml

Native Resource Manager Example
# moab.cfg
# interface w/TORQUE
RMCFG[torque] TYPE=PBS
# interface w/flexLM
RMCFG[flexLM] TYPE=NATIVE RTYPE=license
RMCFG[flexLM] CLUSTERQUERYURL=exec:///$HOME/tools/license.mon.flexlm.pl
# integrate local node health check script data
RMCFG[local] TYPE=NATIVE
RMCFG[local] CLUSTERQUERYURL=file:///opt/moab/localtools/healthcheck.dat

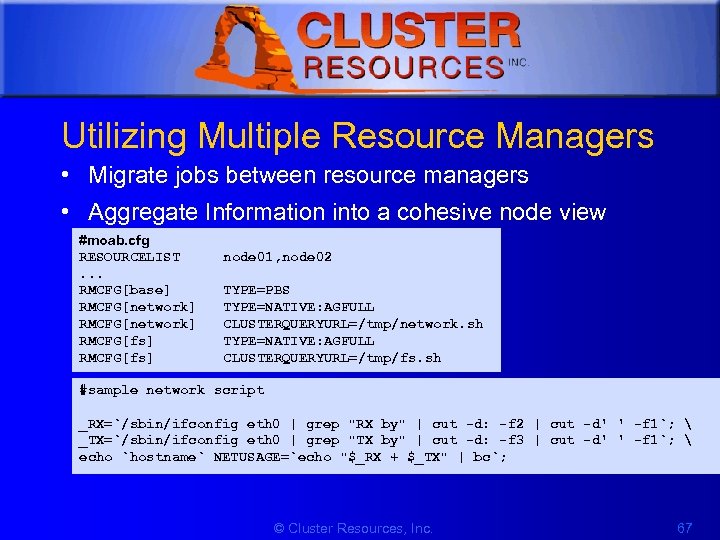

Utilizing Multiple Resource Managers
• Migrate jobs between resource managers
• Aggregate information into a cohesive node view
#moab.cfg
RESOURCELIST node01,node02
...
RMCFG[base] TYPE=PBS
RMCFG[network] TYPE=NATIVE:AGFULL CLUSTERQUERYURL=/tmp/network.sh
RMCFG[fs] TYPE=NATIVE:AGFULL CLUSTERQUERYURL=/tmp/fs.sh
#sample network script
_RX=`/sbin/ifconfig eth0 | grep "RX by" | cut -d: -f 2 | cut -d' ' -f 1`;
_TX=`/sbin/ifconfig eth0 | grep "TX by" | cut -d: -f 3 | cut -d' ' -f 1`;
echo `hostname` NETUSAGE=`echo "$_RX + $_TX" | bc`;
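
The sample script depends on the older `RX bytes:N ... TX bytes:M` ifconfig layout, which may not match every system's net-tools release. On Linux, the same NETUSAGE value can also be computed from /proc/net/dev. The Python sketch below reads the receive and transmit byte counters (the first and ninth numbers on each interface row) and prints the same `<hostname> NETUSAGE=<value>` line. `net_usage` is a hypothetical helper, and `eth0` is carried over from the example.

```python
import socket
from typing import Optional


def net_usage(proc_net_dev: str, iface: str = "eth0") -> Optional[int]:
    """Return RX bytes + TX bytes for iface from /proc/net/dev text, or None if absent."""
    for line in proc_net_dev.splitlines():
        if ":" not in line:
            continue                      # the two header lines contain no colon
        name, counters = line.split(":", 1)
        if name.strip() != iface:
            continue
        fields = counters.split()
        # Field 0 is received bytes; field 8 is transmitted bytes.
        return int(fields[0]) + int(fields[8])
    return None


if __name__ == "__main__":
    with open("/proc/net/dev") as dev:
        total = net_usage(dev.read())
    if total is not None:
        print(f"{socket.gethostname()} NETUSAGE={total}")
```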

5. Scheduling Behaviour
• Job Priority
• Fairshare
• Usage Limits
• Optimizing the Scheduler

Credentials
• Certain job attributes (such as user, group, account, class, and QoS) describe entities the job belongs to and can be used to associate policies with jobs.
• Every Job has credentials
– Users (The only mandatory credential)
– Groups (Standard Unix group or arbitrary collection of users)
– Accounts (Associated with projects and billing)
– Class (Associated with RM queues)
– Quality Of Service (QoS) (Policy overrides, resource access, service targets, charge rates)
• All Credentials can have Usage Limits, Fairshare Targets, Priorities, Usage History, Credential Access Lists / Defaults
http://clusterresources.com/moabdocs/3.5credoverview.shtml

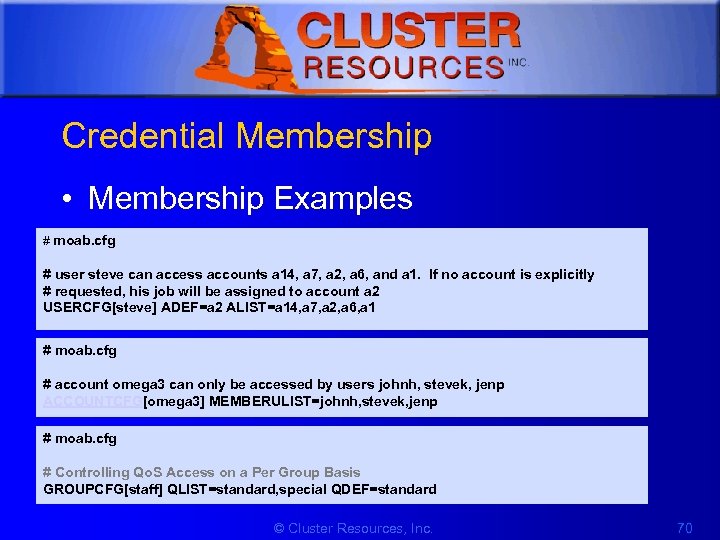

Credential Membership
• Membership Examples
# moab.cfg
# user steve can access accounts a14, a7, a2, a6, and a1. If no account is explicitly
# requested, his job will be assigned to account a2
USERCFG[steve] ADEF=a2 ALIST=a14,a7,a2,a6,a1
# moab.cfg
# account omega3 can only be accessed by users johnh, stevek, jenp
ACCOUNTCFG[omega3] MEMBERULIST=johnh,stevek,jenp
# moab.cfg
# Controlling QoS Access on a Per Group Basis
GROUPCFG[staff] QLIST=standard,special QDEF=standard
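
The comments above describe how ADEF and ALIST interact. The sketch below models that rule for user steve. `resolve_account` is hypothetical. Raising `PermissionError` for a disallowed account is a stand-in, because this section does not say exactly how Moab reports such a request.

```python
from typing import List, Optional


def resolve_account(requested: Optional[str], alist: List[str], adef: str) -> str:
    """Return ADEF when no account is requested, else the requested account if it is in ALIST."""
    if requested is None:
        return adef
    if requested not in alist:
        raise PermissionError(f"account {requested!r} not in access list {alist}")
    return requested


# Values from USERCFG[steve] above.
STEVE_ALIST = ["a14", "a7", "a2", "a6", "a1"]
STEVE_ADEF = "a2"
```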

Fairness
• Definition:
– giving all users equal access to compute resources
– incorporating historical resource usage, political issues, and job value
• Moab provides a comprehensive and flexible set of tools for addressing the many and varied fairness management needs.
http://clusterresources.com/moabdocs/6.0managingfairness.shtml

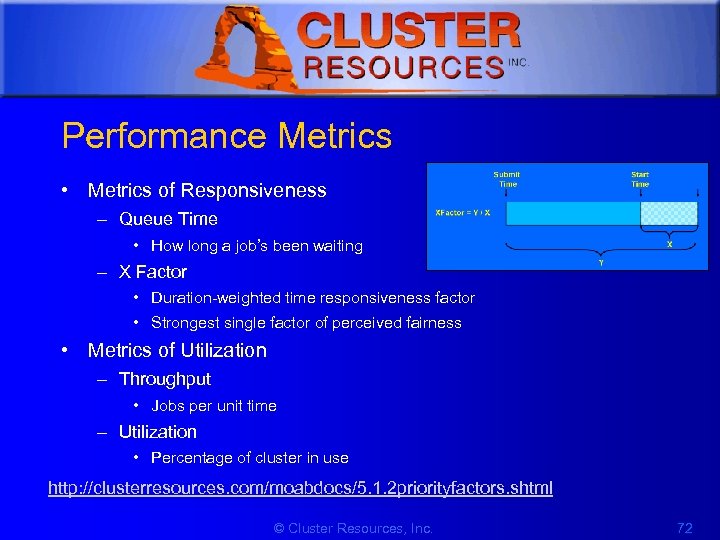

Performance Metrics
• Metrics of Responsiveness
– Queue Time: how long a job has been waiting
– X Factor: duration-weighted time responsiveness factor; strongest single factor of perceived fairness
• Metrics of Utilization
– Throughput: jobs per unit time
– Utilization: percentage of cluster in use
http://clusterresources.com/moabdocs/5.1.2priorityfactors.shtml
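
The X Factor (expansion factor) is commonly defined as (queue time + requested walltime) / requested walltime, so the same wait weighs more heavily on a short job than on a long one. The sketch below uses that textbook form. Moab's exact formula, including any minimum-walltime floor, is described in the priority factors documentation linked above and may differ in detail.

```python
def xfactor(queue_seconds: float, walltime_limit_seconds: float) -> float:
    """Expansion factor: (queue time + requested walltime) / requested walltime."""
    if walltime_limit_seconds <= 0:
        raise ValueError("walltime limit must be positive")
    return (queue_seconds + walltime_limit_seconds) / walltime_limit_seconds


if __name__ == "__main__":
    one_hour = 3600
    print(xfactor(one_hour, 600))     # 10-minute job
    print(xfactor(one_hour, 36000))   # 10-hour job
```

A one-hour wait gives a 10-minute job an X Factor of 7.0 but a 10-hour job only 1.1. This is why X Factor tracks perceived fairness better than raw queue time does.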

General Fairness Strategies
• Maximize Scheduler Options -- Do Not Overspecify
• Keep It Simple – Do Not Address Hypothetical Issues
• Seek To Adjust User Behaviour, Not Limit User Options
• Allow Users to Specify Required Service Level
• Monitor Cluster Performance Regularly
• Tune Policies As Needed

Priority
• 2-tier prioritization structure
• Independent component and subcomponent weights/caps
• Components include service, target, fairshare, resource, usage, job attribute, and credential
• Negative priority jobs may be blocked
• Tuning facility available with mdiag -p
http://clusterresources.com/moabdocs/5.1jobprioritization.shtml
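
In the 2-tier structure, each component (service, credential, and so on) has its own weight, and each subcomponent inside it has another. The minimal sketch below assumes priority = sum over components of (component weight × sum over subcomponents of (subcomponent weight × value)). It ignores caps and per-credential weight overrides. The dictionary keys are hypothetical labels that echo parameters such as SERVWEIGHT, CREDWEIGHT, and XFACTORWEIGHT, and the units of the raw values are not specified here.

```python
from typing import Dict

Weights = Dict[str, float]


def job_priority(component_weights: Weights,
                 subcomponent_weights: Dict[str, Weights],
                 values: Dict[str, Weights]) -> float:
    """Two-tier priority: sum of component_weight * sum(sub_weight * sub_value)."""
    total = 0.0
    for component, comp_weight in component_weights.items():
        sub_weights = subcomponent_weights.get(component, {})
        sub_values = values.get(component, {})
        subtotal = sum(weight * sub_values.get(name, 0.0)
                       for name, weight in sub_weights.items())
        total += comp_weight * subtotal
    return total


# Hypothetical labels mirroring SERVWEIGHT/CREDWEIGHT and their subcomponent weights.
COMPONENTS = {"SERV": 1, "CRED": 1}
SUBCOMPONENTS = {"SERV": {"XFACTOR": 10, "QUEUETIME": 1000},
                 "CRED": {"USER": 1, "CLASS": 2}}
```

The sample weights match the Credential Priority Example in this section. Under these weights, a batch job from user john with an X Factor of 1.5 and a queue-time value of 2 scores 10×1.5 + 1000×2 + 200 + 2×15 = 2245.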

Job Prioritization – Component Overview
• Service
– Level of service delivered or anticipated
– Includes queue time, xfactor, bypass, policy violation
• Target
– Desired service level
– Provides exponential factor growth
– Includes target queue time, target xfactor
• Credential
– Based on credential priorities
– Includes user, group, account, QoS, and class
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#service
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#target
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#cred

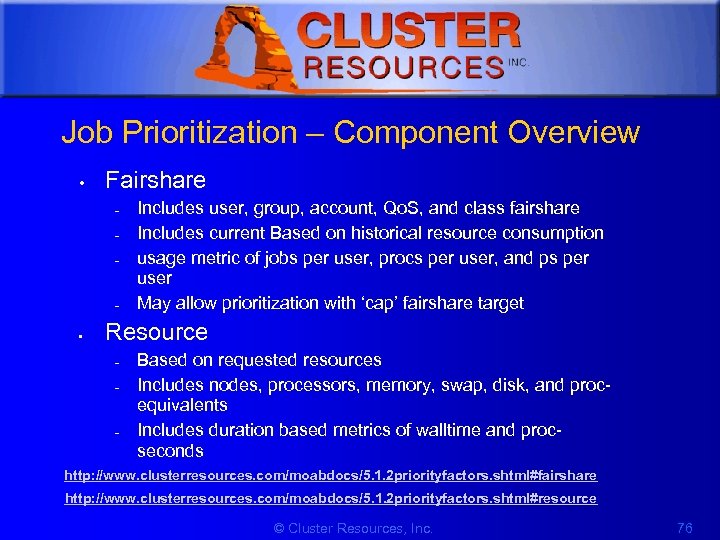

Job Prioritization – Component Overview
• Fairshare
– Based on historical resource consumption
– Includes user, group, account, QoS, and class fairshare
– Includes current usage metrics of jobs per user, procs per user, and processor-seconds (PS) per user
– May allow prioritization with 'cap' fairshare target
• Resource
– Based on requested resources
– Includes nodes, processors, memory, swap, disk, and procequivalents
– Includes duration based metrics of walltime and procseconds
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#fairshare
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#resource

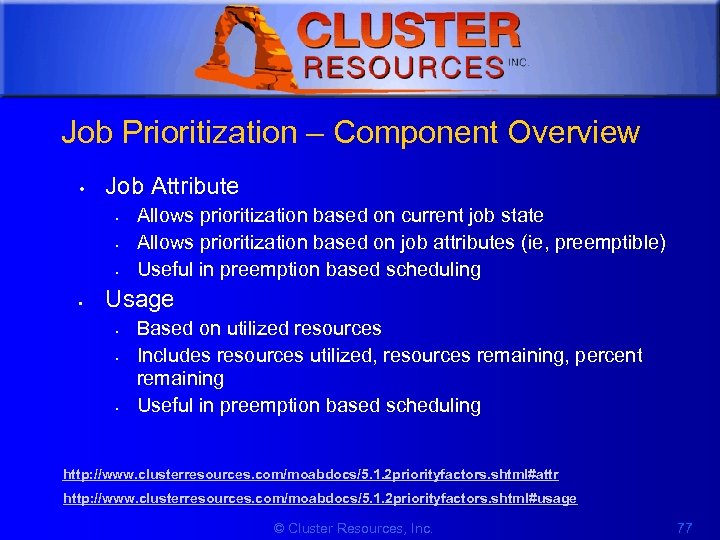

Job Prioritization – Component Overview
• Job Attribute
– Allows prioritization based on current job state
– Allows prioritization based on job attributes (e.g., preemptible)
– Useful in preemption based scheduling
• Usage
– Based on utilized resources
– Includes resources utilized, resources remaining, percent remaining
– Useful in preemption based scheduling
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#attr
http://www.clusterresources.com/moabdocs/5.1.2priorityfactors.shtml#usage

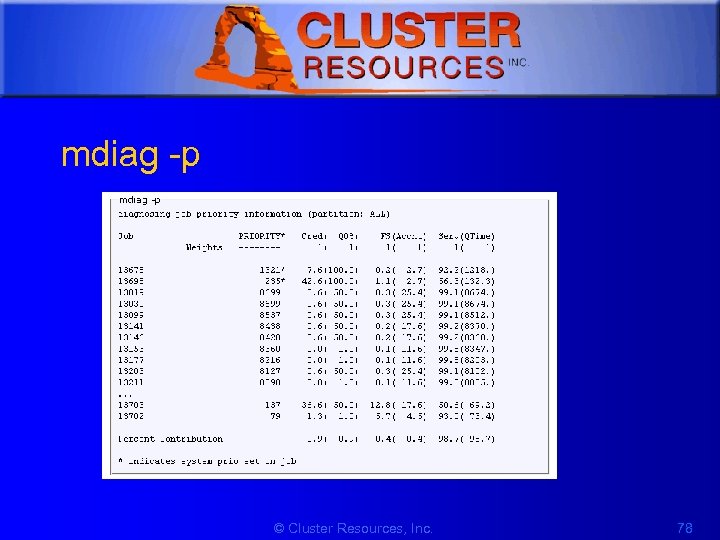

mdiag -p

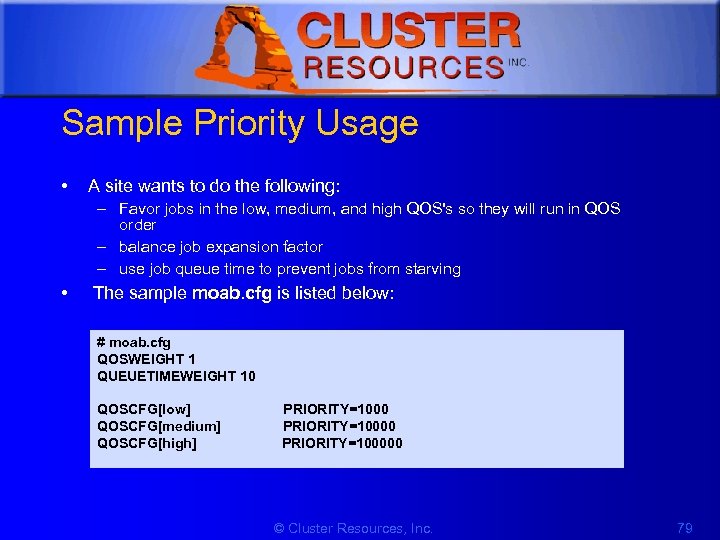

Sample Priority Usage
• A site wants to do the following:
– favor jobs in the low, medium, and high QOSs so they run in QOS order
– balance job expansion factor
– use job queue time to prevent jobs from starving
• The sample moab.cfg is listed below:
# moab.cfg
QOSWEIGHT 1
QUEUETIMEWEIGHT 10
QOSCFG[low] PRIORITY=1000
QOSCFG[medium] PRIORITY=10000
QOSCFG[high] PRIORITY=100000
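
With these settings, the QOS term separates low from medium by 9,000 points and medium from high by 90,000 points, while each unit of queue time adds 10 points. The sketch below works out how much longer a low-QOS job must wait than a medium-QOS job before the two tie. It assumes that the queue-time factor is measured in minutes and that unset component weights default to 1. The sample configuration sets no XFACTORWEIGHT, so the expansion-factor goal is not reflected here.

```python
# Values from the sample moab.cfg above.
QOSWEIGHT = 1
QUEUETIMEWEIGHT = 10
QOS_PRIORITY = {"low": 1000, "medium": 10000, "high": 100000}


def priority(qos: str, queue_minutes: float) -> float:
    """QOS credential term plus queue-time service term (component weights assumed to be 1)."""
    return QOSWEIGHT * QOS_PRIORITY[qos] + QUEUETIMEWEIGHT * queue_minutes


def catch_up_minutes(lower: str, higher: str) -> float:
    """Extra queue time at which a `lower`-QOS job ties a `higher`-QOS job that has waited less."""
    return QOSWEIGHT * (QOS_PRIORITY[higher] - QOS_PRIORITY[lower]) / QUEUETIMEWEIGHT
```

Under these assumptions, a low-QOS job overtakes a freshly submitted medium-QOS job only after waiting more than 900 minutes (15 hours). Queue time therefore prevents starvation without disturbing QOS order for ordinary waits.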

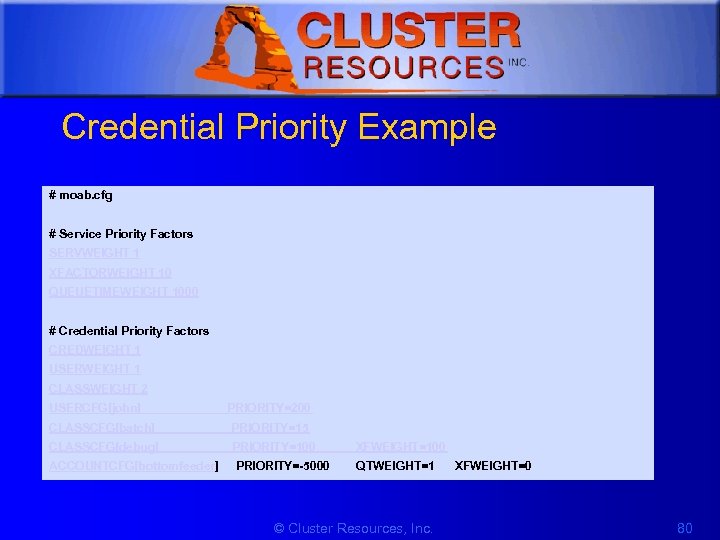

Credential Priority Example

    # moab.cfg
    # Service Priority Factors
    SERVWEIGHT               1
    XFACTORWEIGHT            10
    QUEUETIMEWEIGHT          1000
    # Credential Priority Factors
    CREDWEIGHT               1
    USERWEIGHT               1
    CLASSWEIGHT              2
    USERCFG[john]            PRIORITY=200
    CLASSCFG[batch]          PRIORITY=15
    CLASSCFG[debug]          PRIORITY=100 XFWEIGHT=100
    ACCOUNTCFG[bottomfeeder] PRIORITY=-5000 QTWEIGHT=1 XFWEIGHT=0
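
The credential part of this example can be evaluated as a weighted sum of the per-credential PRIORITY values. This sketch covers only the user and class terms: it assumes credentials without a PRIORITY setting contribute 0, and it leaves out the account term (the example sets no ACCOUNTWEIGHT) as well as the per-credential XFWEIGHT/QTWEIGHT overrides. `cred_priority` and the user `alice` are hypothetical.

```python
# Weights and per-credential priorities from the example moab.cfg.
CREDWEIGHT = 1
USERWEIGHT = 1
CLASSWEIGHT = 2
USER_PRIORITY = {"john": 200}                 # USERCFG[john] PRIORITY=200
CLASS_PRIORITY = {"batch": 15, "debug": 100}  # CLASSCFG[...] PRIORITY=...


def cred_priority(user: str, job_class: str) -> int:
    """Credential component from the user and class terms only (simplified)."""
    user_term = USERWEIGHT * USER_PRIORITY.get(user, 0)
    class_term = CLASSWEIGHT * CLASS_PRIORITY.get(job_class, 0)
    return CREDWEIGHT * (user_term + class_term)


if __name__ == "__main__":
    print(cred_priority("john", "debug"))   # 400
    print(cred_priority("alice", "batch"))  # 30
```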

1 Credential Priority Example # moab. cfg # Service Priority Factors SERVWEIGHT 1 XFACTORWEIGHT 10 QUEUETIMEWEIGHT 1000 # Credential Priority Factors CREDWEIGHT 1 USERWEIGHT 1 CLASSWEIGHT 2 USERCFG[john] PRIORITY=200 CLASSCFG[batch] PRIORITY=15 CLASSCFG[debug] PRIORITY=100 XFWEIGHT=100 ACCOUNTCFG[bottomfeeder] PRIORITY=-5000 QTWEIGHT=1 XFWEIGHT=0 © Cluster Resources, Inc. 80

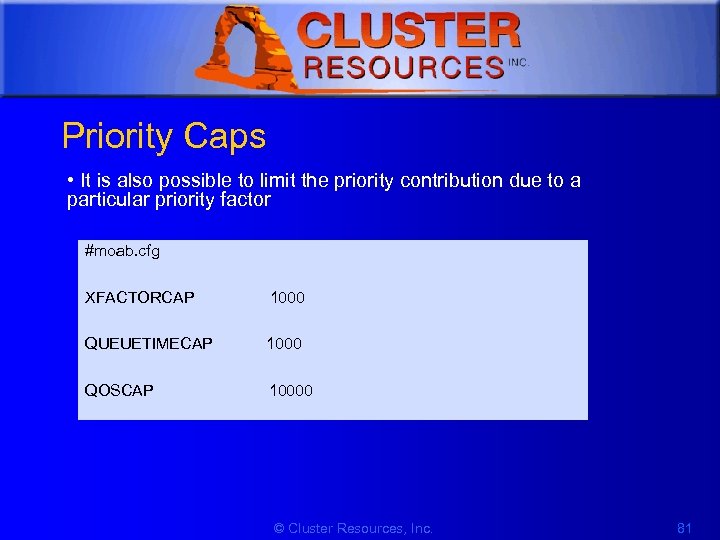

Priority Caps
• It is also possible to limit the priority contribution of a particular priority factor:

    # moab.cfg
    XFACTORCAP     1000
    QUEUETIMECAP   1000
    QOSCAP         10000
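
A sketch of how caps bound the final priority, assuming each cap clamps the weighted contribution of its factor from above. The factor keys and `total_priority` are hypothetical names chosen for illustration; consult the priority factor documentation for exactly how Moab applies caps (for example, to negative contributions).

```python
# Caps from the slide's moab.cfg, keyed by hypothetical factor names.
CAPS = {"xfactor": 1000, "queuetime": 1000, "qos": 10000}


def capped(factor: str, contribution: float) -> float:
    """Clamp a weighted factor contribution to its configured cap, if any."""
    cap = CAPS.get(factor)
    return contribution if cap is None else min(contribution, cap)


def total_priority(contributions: dict) -> float:
    """Sum the capped contributions of all factors."""
    return sum(capped(name, value) for name, value in contributions.items())


if __name__ == "__main__":
    # A job queued 500 minutes under QUEUETIMEWEIGHT 10 would contribute 5000,
    # but QUEUETIMECAP holds it to 1000; the uncapped fairshare term passes through.
    print(total_priority({"queuetime": 5000, "qos": 100000, "fairshare": 250}))  # 11250
```

Under this model, QOSCAP 10000 combined with the QOS priorities from the Sample Priority Usage slide (with QOSWEIGHT 1) would clamp the high QOS (100000) to the same 10000 as medium, so caps need to be chosen with the weights in mind.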

1 Priority Caps • It is also possible to limit the priority contribution due to a particular priority factor #moab. cfg XFACTORCAP 1000 QUEUETIMECAP 1000 QOSCAP 10000 © Cluster Resources, Inc. 81

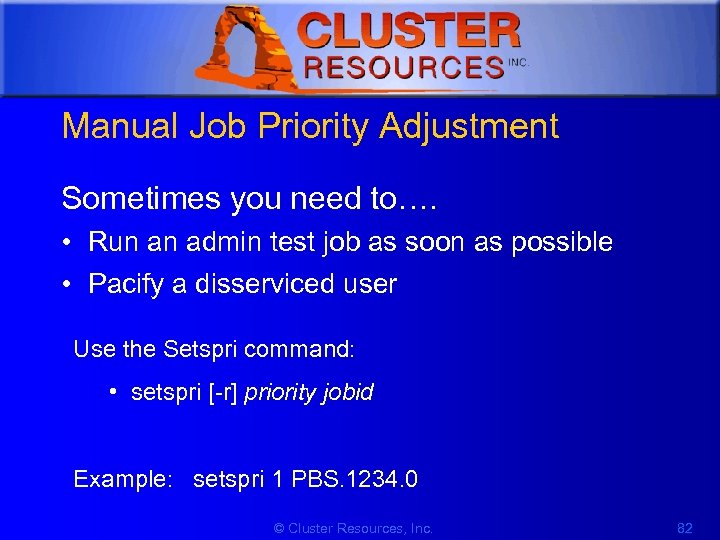

Manual Job Priority Adjustment
Sometimes you need to:
• Run an admin test job as soon as possible
• Placate a user whose jobs have been poorly served
Use the setspri command:
• setspri [-r] priority jobid
Example: setspri 1 PBS.1234.0

1 Manual Job Priority Adjustment Sometimes you need to…. • Run an admin test job as soon as possible • Pacify a disserviced user Use the Setspri command: • setspri [-r] priority jobid Example: setspri 1 PBS. 1234. 0 © Cluster Resources, Inc. 82

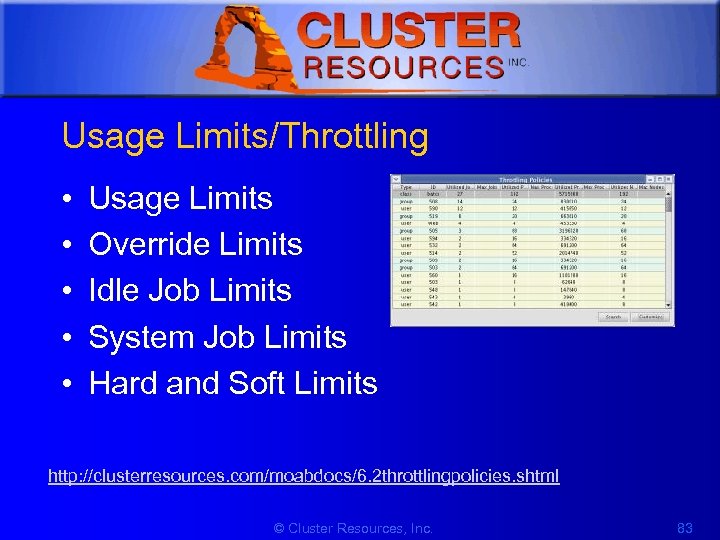

Usage Limits/Throttling
• Usage Limits
• Override Limits
• Idle Job Limits
• System Job Limits
• Hard and Soft Limits
http://clusterresources.com/moabdocs/6.2throttlingpolicies.shtml

Usage Limit Example

    # moab.cfg
    USERCFG[steve]    MAXJOB=2 MAXNODE=30
    GROUPCFG[staff]   MAXJOB=5
    CLASSCFG[DEFAULT] MAXNODE=16
    CLASSCFG[batch]   MAXNODE=32

    # moab.cfg
    # allow class batch to run up to 3 simultaneous jobs and
    # allow any user to use up to 8 total nodes within class batch
    CLASSCFG[batch] MAXJOB=3 MAXNODE[USER]=8
    # allow users steve and bob to use up to 3 and 4 total processors respectively within class fast
    CLASSCFG[fast]  MAXPROC[USER:steve]=3 MAXPROC[USER:bob]=4
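
Each credential's limit is checked independently, so a job can start only if it breaks none of the limits that apply to it. The sketch models just the MAXJOB limits from the first example; the `(type, name)` credential tuples and `can_start` are hypothetical, and node or processor limits would be checked the same way against node or processor counts.

```python
from collections import Counter

# MAXJOB limits from the first example, keyed by (credential type, name).
MAXJOB = {("user", "steve"): 2, ("group", "staff"): 5}


def can_start(job_creds, running_jobs) -> bool:
    """True if one more job with `job_creds` stays within every MAXJOB limit.

    job_creds:    list of (type, name) credentials of the candidate job
    running_jobs: list of credential lists, one per running job
    """
    active = Counter(cred for creds in running_jobs for cred in creds)
    return all(active[cred] + 1 <= MAXJOB[cred]
               for cred in job_creds if cred in MAXJOB)


if __name__ == "__main__":
    steve = [("user", "steve"), ("group", "staff"), ("class", "batch")]
    bob = [("user", "bob"), ("group", "staff"), ("class", "batch")]
    print(can_start(steve, [steve]))                   # True: 1 of 2 user jobs in use
    print(can_start(steve, [steve, steve]))            # False: user limit of 2 reached
    print(can_start(bob, [steve, steve] + [bob] * 3))  # False: group limit of 5 reached
```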

1 Usage Limit Example # moab. cfg USERCFG[steve] GROUPCFG[staff] CLASSCFG[DEFAULT] CLASSCFG[batch] MAXJOB=2 MAXNODE=30 MAXJOB=5 MAXNODE=16 MAXNODE=32 # moab. cfg # allow class batch to run up the 3 simultaneous jobs and # allow any user to use up to 8 total nodes within class batch CLASSCFG[batch] MAXJOB=3 MAXNODE[USER]=8 # allow users steve and bob to use up to 3 and 4 total processors respectively within class CLASSCFG[fast] MAXPROC[USER: steve]=3 MAXPROC[USER: bob]=4 © Cluster Resources, Inc. 84

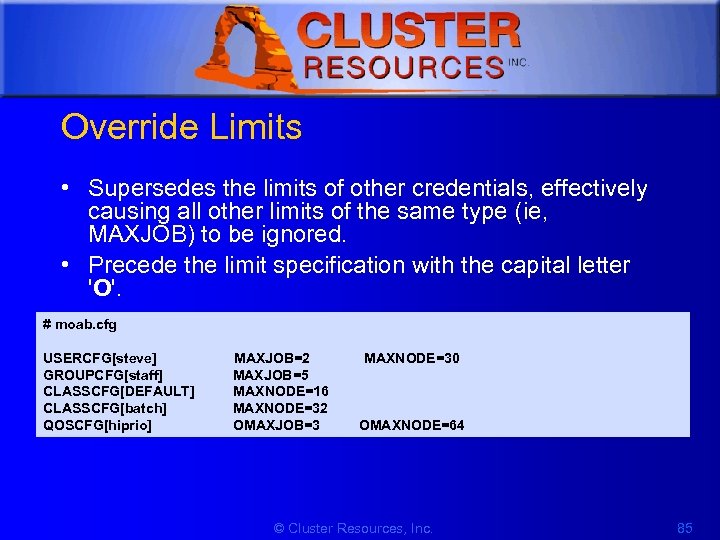

Override Limits
• An override limit supersedes the limits of other credentials, effectively causing all other limits of the same type (e.g., MAXJOB) to be ignored.
• To specify one, precede the limit name with the capital letter 'O'.

    # moab.cfg
    USERCFG[steve]    MAXJOB=2 MAXNODE=30
    GROUPCFG[staff]   MAXJOB=5
    CLASSCFG[DEFAULT] MAXNODE=16
    CLASSCFG[batch]   MAXNODE=32
    QOSCFG[hiprio]    OMAXJOB=3 OMAXNODE=64
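
A minimal sketch of the override rule, assuming the job runs under QOS hiprio: when an override limit applies, it replaces the user and group MAXJOB limits instead of adding another check. `governing_limits` and the credential tuples are hypothetical; the sketch only decides which limits govern a job, not how jobs are counted against them.

```python
from typing import Dict, List, Tuple

Cred = Tuple[str, str]  # (credential type, name), e.g. ("user", "steve")

# MAXJOB-type limits from the slide's moab.cfg.
MAXJOB: Dict[Cred, int] = {("user", "steve"): 2, ("group", "staff"): 5}
OMAXJOB: Dict[Cred, int] = {("qos", "hiprio"): 3}


def governing_limits(job_creds: List[Cred]) -> Dict[Cred, int]:
    """Return the job-count limits that apply to a job with these credentials."""
    for cred in job_creds:
        if cred in OMAXJOB:
            # An override limit supersedes every other limit of the same type.
            return {cred: OMAXJOB[cred]}
    return {cred: MAXJOB[cred] for cred in job_creds if cred in MAXJOB}


if __name__ == "__main__":
    print(governing_limits([("user", "steve"), ("group", "staff")]))
    # {('user', 'steve'): 2, ('group', 'staff'): 5}
    print(governing_limits([("user", "steve"), ("group", "staff"), ("qos", "hiprio")]))
    # {('qos', 'hiprio'): 3}
```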

1 Override Limits • Supersedes the limits of other credentials, effectively causing all other limits of the same type (ie, MAXJOB) to be ignored. • Precede the limit specification with the capital letter 'O'. # moab. cfg USERCFG[steve] MAXJOB=2 MAXNODE=30 GROUPCFG[staff] MAXJOB=5 CLASSCFG[DEFAULT] MAXNODE=16 CLASSCFG[batch] MAXNODE=32 QOSCFG[hiprio] OMAXJOB=3 OMAXNODE=64 © Cluster Resources, Inc. 85

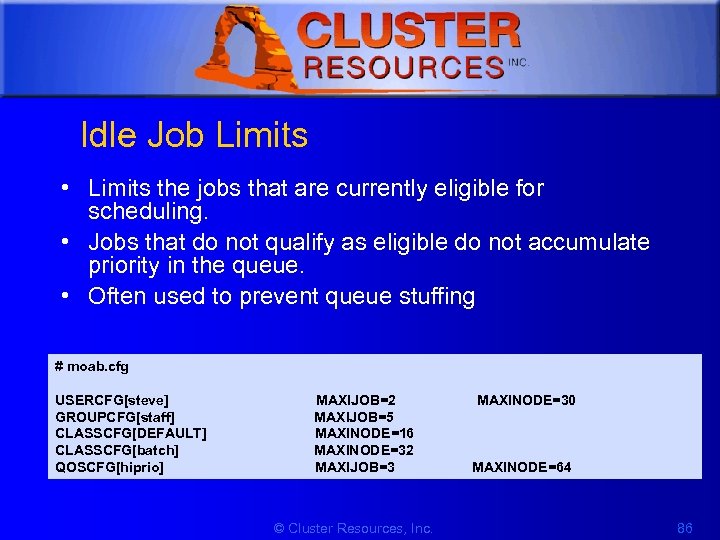

Idle Job Limits
• Limit how many idle jobs are eligible for scheduling at any one time.
• Jobs that do not qualify as eligible do not accumulate priority in the queue.
• Often used to prevent queue stuffing.

    # moab.cfg
    USERCFG[steve]    MAXIJOB=2 MAXINODE=30
    GROUPCFG[staff]   MAXIJOB=5
    CLASSCFG[DEFAULT] MAXINODE=16
    CLASSCFG[batch]   MAXINODE=32
    QOSCFG[hiprio]    MAXIJOB=3 MAXINODE=64
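
The sketch splits idle jobs into eligible and blocked sets under per-user MAXIJOB limits. It assumes eligibility is granted in priority order and models only user-level limits; `split_idle` is a hypothetical helper.

```python
from typing import Dict, List, Optional, Tuple

IdleJob = Tuple[str, str, int]  # (job_id, user, priority)


def split_idle(idle: List[IdleJob], maxijob: Dict[str, int]) -> Tuple[List[str], List[str]]:
    """Split idle jobs into (eligible, blocked) under per-user MAXIJOB limits."""
    eligible: List[str] = []
    blocked: List[str] = []
    counted: Dict[str, int] = {}
    # Assumption: eligibility is granted in priority order, highest first.
    for job_id, user, _prio in sorted(idle, key=lambda j: j[2], reverse=True):
        limit: Optional[int] = maxijob.get(user)
        if limit is None or counted.get(user, 0) < limit:
            eligible.append(job_id)
            counted[user] = counted.get(user, 0) + 1
        else:
            # Blocked jobs do not accumulate queue-time priority.
            blocked.append(job_id)
    return eligible, blocked


if __name__ == "__main__":
    idle = [("s1", "steve", 50), ("s2", "steve", 40), ("s3", "steve", 30),
            ("s4", "steve", 20), ("b1", "bob", 10)]
    print(split_idle(idle, {"steve": 2}))  # (['s1', 's2', 'b1'], ['s3', 's4'])
```

With MAXIJOB=2, a user who submits 100 jobs still has only two competing for priority, which is what blunts queue stuffing.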

1 Idle Job Limits • Limits the jobs that are currently eligible for scheduling. • Jobs that do not qualify as eligible do not accumulate priority in the queue. • Often used to prevent queue stuffing # moab. cfg USERCFG[steve] MAXIJOB=2 MAXINODE=30 GROUPCFG[staff] MAXIJOB=5 CLASSCFG[DEFAULT] MAXINODE=16 CLASSCFG[batch] MAXINODE=32 QOSCFG[hiprio] MAXIJOB=3 MAXINODE=64 © Cluster Resources, Inc. 86

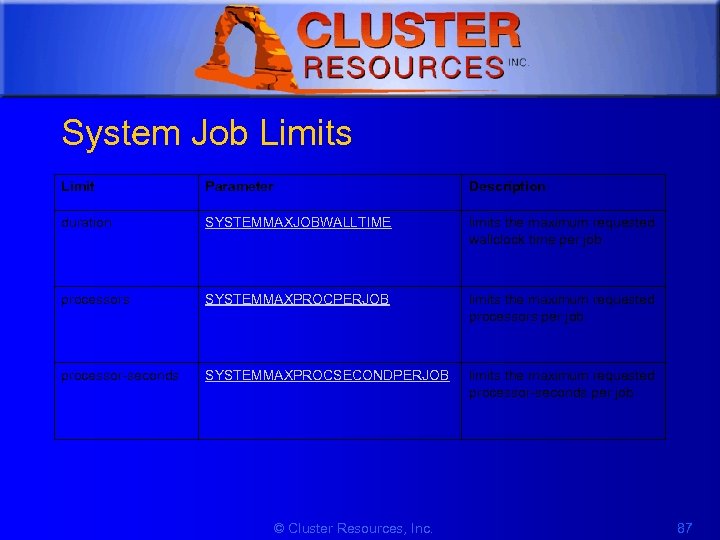

System Job Limits

| Limit | Parameter | Description |
|---|---|---|
| duration | SYSTEMMAXJOBWALLTIME | limits the maximum requested wallclock time per job |
| processors | SYSTEMMAXPROCPERJOB | limits the maximum requested processors per job |
| processor-seconds | SYSTEMMAXPROCSECONDPERJOB | limits the maximum requested processor-seconds per job |
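
A sketch of a submission-time check against the three system limits. The slide gives the parameter names but no values, so the limits below are hypothetical; processor-seconds are taken as processors requested times wallclock time requested.

```python
# Hypothetical site values; the slide names the parameters but gives no values.
SYSTEMMAXJOBWALLTIME = 24 * 3600             # seconds
SYSTEMMAXPROCPERJOB = 256                    # processors
SYSTEMMAXPROCSECONDPERJOB = 256 * 12 * 3600  # processor-seconds


def violated_limits(procs: int, walltime_s: int) -> list:
    """Return the names of the system job limits a request would exceed."""
    violated = []
    if walltime_s > SYSTEMMAXJOBWALLTIME:
        violated.append("SYSTEMMAXJOBWALLTIME")
    if procs > SYSTEMMAXPROCPERJOB:
        violated.append("SYSTEMMAXPROCPERJOB")
    # processor-seconds = processors requested x wallclock time requested
    if procs * walltime_s > SYSTEMMAXPROCSECONDPERJOB:
        violated.append("SYSTEMMAXPROCSECONDPERJOB")
    return violated


if __name__ == "__main__":
    # Within both the processor and walltime limits, but too large in total.
    print(violated_limits(256, 24 * 3600))  # ['SYSTEMMAXPROCSECONDPERJOB']
```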

Hard and Soft Limits
• Balance both fairness and utilization
• Each limit is given as a soft,hard pair (e.g., MAXJOB=2,4)

    # moab.cfg
    USERCFG[steve]    MAXJOB=2,4 MAXNODE=15,30
    GROUPCFG[staff]   MAXJOB=2,5
    CLASSCFG[DEFAULT] MAXNODE=16,32
    CLASSCFG[batch]   MAXNODE=12,32
    QOSCFG[hiprio]    MAXJOB=3,5 MAXNODE=32,64
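
Roughly, the soft,hard pairs let a site enforce the tighter limit while jobs compete and relax it when resources would otherwise sit idle. The two-pass sketch below is a simplified model of that idea, assuming per-user MAXJOB limits only and a fixed pool of free nodes; `start_jobs` is hypothetical and is not Moab's algorithm.

```python
from typing import Dict, List, Tuple

Job = Tuple[str, str, int]  # (job_id, user, nodes), highest priority first


def start_jobs(queue: List[Job], free_nodes: int,
               soft: Dict[str, int], hard: Dict[str, int]) -> List[str]:
    """Start jobs in two passes: first within soft limits, then up to hard limits."""
    started: List[str] = []
    running: Dict[str, int] = {}
    for limits in (soft, hard):
        for job_id, user, nodes in queue:
            if job_id in started or nodes > free_nodes:
                continue
            # Users without a configured limit are unconstrained in this model.
            if running.get(user, 0) < limits.get(user, float("inf")):
                started.append(job_id)
                running[user] = running.get(user, 0) + 1
                free_nodes -= nodes
    return started


if __name__ == "__main__":
    queue = [("s1", "steve", 4), ("s2", "steve", 4), ("s3", "steve", 4), ("b1", "bob", 4)]
    # USERCFG[steve] MAXJOB=2,4: s3 waits for the second pass and runs only
    # because nodes are left over once bob's job has started.
    print(start_jobs(queue, 16, {"steve": 2}, {"steve": 4}))  # ['s1', 's2', 'b1', 's3']
    print(start_jobs(queue, 12, {"steve": 2}, {"steve": 4}))  # ['s1', 's2', 'b1']
```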

1 Hard and Soft Limits • Balance both fairness and utilization #moab. cfg USERCFG[steve] MAXJOB=2, 4 MAXNODE=15, 30 GROUPCFG[staff] MAXJOB=2, 5 CLASSCFG[DEFAULT] MAXNODE=16, 32 CLASSCFG[batch] MAXNODE=12, 32 QOSCFG[hiprio] MAXJOB=3, 5 MAXNODE=32, 64 © Cluster Resources, Inc. 88

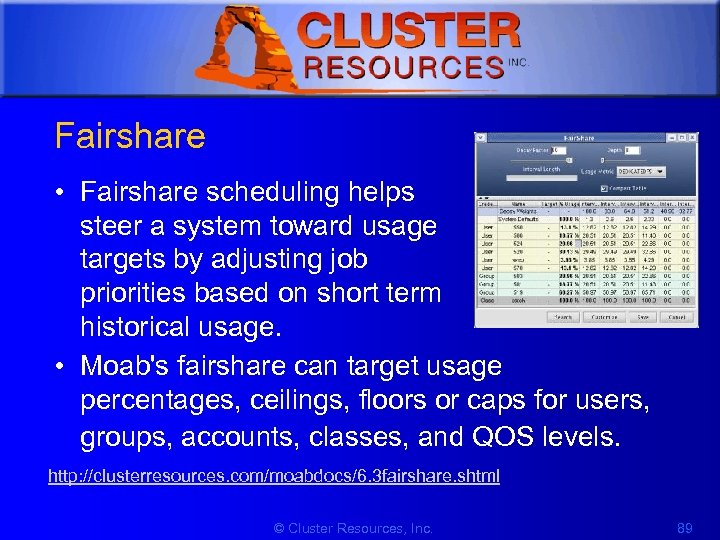

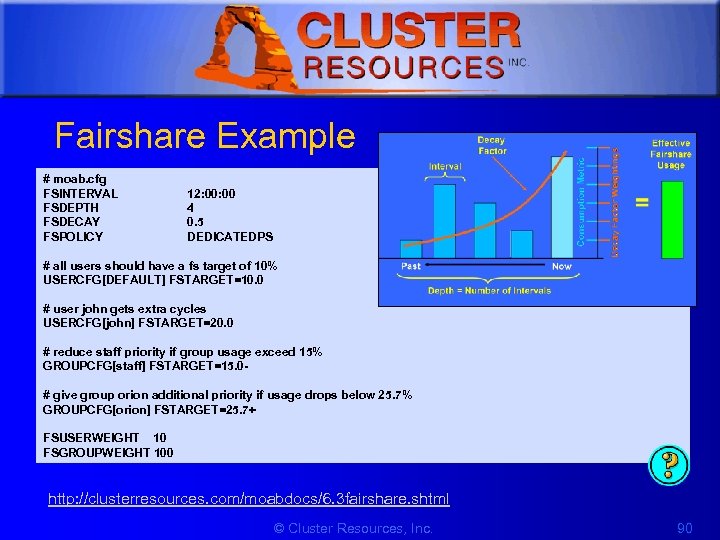

Fairshare
• Fairshare scheduling helps steer a system toward usage targets by adjusting job priorities based on short-term historical usage.
• Moab's fairshare can target usage percentages, ceilings, floors, or caps for users, groups, accounts, classes, and QOS levels.
http://clusterresources.com/moabdocs/6.3fairshare.shtml

Fairshare Example

    # moab.cfg
    FSINTERVAL       12:00
    FSDEPTH          4
    FSDECAY          0.5
    FSPOLICY         DEDICATEDPS
    # all users should have a fs target of 10%
    USERCFG[DEFAULT] FSTARGET=10.0
    # user john gets extra cycles
    USERCFG[john]    FSTARGET=20.0
    # reduce staff priority if group usage exceeds 15%
    GROUPCFG[staff]  FSTARGET=15.0
    # give group orion additional priority if usage drops below 25.7%
    GROUPCFG[orion]  FSTARGET=25.7+
    FSUSERWEIGHT     10
    FSGROUPWEIGHT    100

http://clusterresources.com/moabdocs/6.3fairshare.shtml
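
The decay settings can be read as a weighted average over the last FSDEPTH windows, with each older FSINTERVAL window counted FSDECAY times as much as the next newer one. The sketch below is a simplified model: the usage-percentage formula and treating the priority adjustment as weight × (target − usage) are assumptions, the floor behaviour follows the orion comment, and the ceiling branch assumes a '-' suffix that this example does not use. Function names are hypothetical.

```python
FSDECAY = 0.5  # from the example
FSDEPTH = 4    # from the example


def fs_usage_pct(cred_usage, total_usage, decay=FSDECAY, depth=FSDEPTH):
    """Decay-weighted share (percent) of total usage charged to one credential.

    Both lists hold per-FSINTERVAL usage, most recent window first.
    """
    weights = [decay ** i for i in range(depth)]
    used = sum(w * u for w, u in zip(weights, cred_usage))
    total = sum(w * t for w, t in zip(weights, total_usage))
    return 100.0 * used / total if total else 0.0


def fs_priority(target, usage_pct, weight, mode="target"):
    """Weighted distance from target; floors only boost, ceilings only penalize."""
    delta = target - usage_pct
    if mode == "floor":      # e.g. FSTARGET=25.7+
        delta = max(delta, 0.0)
    elif mode == "ceiling":  # assumed '-' suffix, e.g. FSTARGET=15.0-
        delta = min(delta, 0.0)
    return weight * delta


if __name__ == "__main__":
    # john used 30% of the newest window and 10% of each of the three before it.
    john = fs_usage_pct([30, 10, 10, 10], [100, 100, 100, 100])
    print(round(john, 2))                           # 20.67
    print(round(fs_priority(20.0, john, 10), 2))    # -6.67 (FSUSERWEIGHT 10)
    print(fs_priority(25.7, 30.0, 100, "floor"))    # 0.0: orion is above its floor
```

With FSDECAY 0.5, usage from four windows back counts only one eighth as much as the newest window, so a burst of heavy use fades out of the fairshare calculation within a few intervals.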

1 Fairshare Example # moab. cfg FSINTERVAL FSDEPTH FSDECAY FSPOLICY 12: 00 4 0. 5 DEDICATEDPS # all users should have a fs target of 10% USERCFG[DEFAULT] FSTARGET=10. 0 # user john gets extra cycles USERCFG[john] FSTARGET=20. 0 # reduce staff priority if group usage exceed 15% GROUPCFG[staff] FSTARGET=15. 0# give group orion additional priority if usage drops below 25. 7% GROUPCFG[orion] FSTARGET=25. 7+ FSUSERWEIGHT 10 FSGROUPWEIGHT 100 http: //clusterresources. com/moabdocs/6. 3 fairshare. shtml © Cluster Resources, Inc. 90

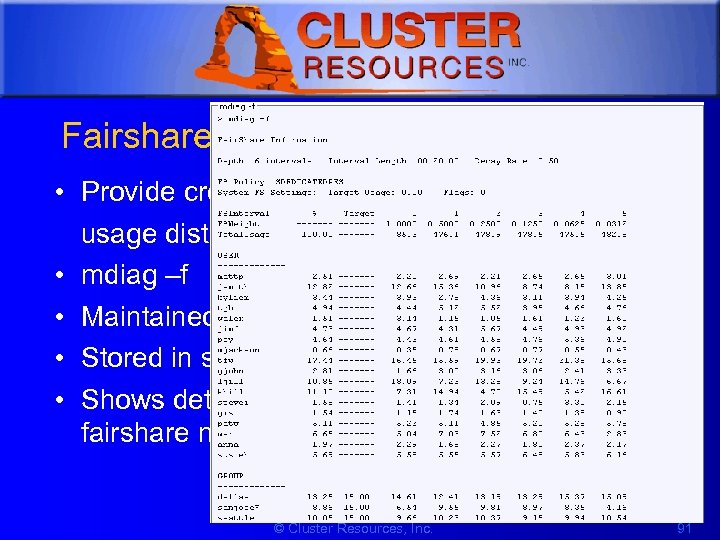

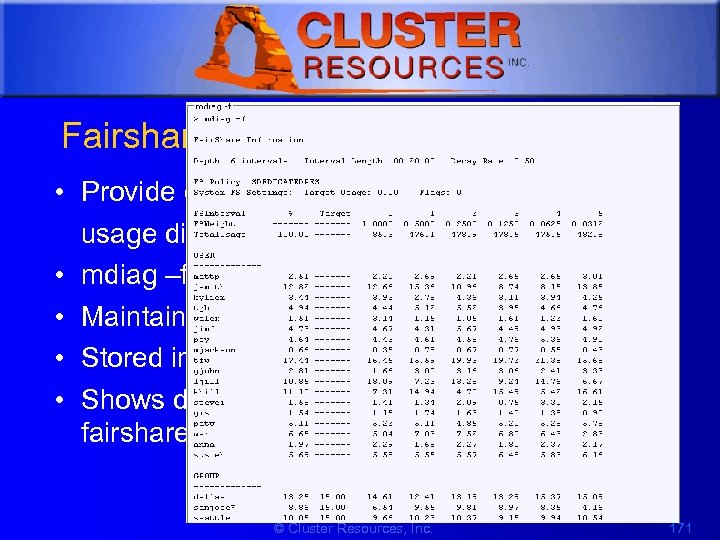

Fairshare Stats
• Provide credential-based usage distributions over time
• Viewed with mdiag -f
• Maintained for all credentials
• Stored in stats/FS.${epochtime}
• Show detailed time-distribution usage by fairshare metric

Optimization is maximizing performance while fully addressing all mission objectives. True optimization includes aspects of policy selection, increased availability, user training, and other factors.
• Identifying Policy Bottlenecks
• Identifying Resource Fragmentation
• Preemption
• Malleable/Dynamic Jobs
• Backfill

1 Optimization is maximizing performance while fully addressing all mission objectives. True optimization includes aspects of policy selection, increased availability, user training, and other factors. • • • Identifying Policy Bottlenecks Identifying Resource Fragmentation Preemption Malleable/Dynamic Jobs Backfill © Cluster Resources, Inc. 92

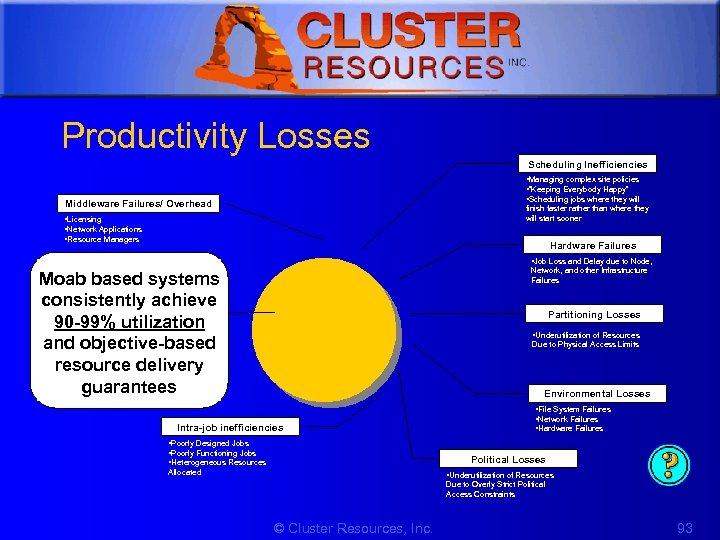

Productivity Losses
• Scheduling Inefficiencies
  – Managing complex site policies
  – “Keeping Everybody Happy”
  – Scheduling jobs where they will finish faster rather than where they will start sooner
• Middleware Failures/Overhead
  – Licensing
  – Network Applications
  – Resource Managers
• Hardware Failures
  – Job loss and delay due to node, network, and other infrastructure failures
• Partitioning Losses
  – Underutilization of resources due to physical access limits
• Environmental Losses
  – File system failures
  – Network failures
  – Hardware failures
• Intra-job Inefficiencies
  – Poorly designed jobs
  – Poorly functioning jobs
  – Heterogeneous resources
• Political Losses
  – Underutilization of resources due to overly strict political access constraints
(Diagram labels: Allocated, Remaining Productivity)
Moab-based systems consistently achieve 90-99% utilization and objective-based resource delivery guarantees.

Identifying Policy Bottlenecks
• Most optimizations are enabled by default
• Sources of Bottlenecks
  – Usage Limits, Fairshare Caps
  – Eval Steps
    • Verify priority (are the most important jobs getting access to resources first?)
http://clusterresources.com/moabdocs/commands/mdiag-priority.shtml

Identifying Policy Bottlenecks (contd)
• Sources of Bottlenecks (contd)
  – Eval Steps (contd)
    • Check job blockage
    • Adjust limits, caps, and priority as needed
    • If needed, use simulation to determine the performance impact of changes
http://clusterresources.com/moabdocs/commands/mdiag-queues.shtml

Identifying Resource Fragmentation
• Fragmentation can stem from queues, reservations, partitions, OSs, architectures, etc.
• Recommend changes: use node sets, soften reservations, use time-based reservations, etc.
• Train users to eliminate user-specified fragmentation

Preemption
• Addresses the conflict between high cluster utilization and guarantees for important jobs
• Preemption allows the scheduler to 'retract' some scheduling decisions to address newly submitted workload
• QoS-based preemption allows the scheduler to use preemption only when targets cannot be satisfied in other ways
http://www.clusterresources.com/products/mwm/docs/8.4preemption.shtml
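
A simplified sketch of a preemption decision in that spirit: a job that fits in the free nodes starts without retracting anything, and only a preemptor job may reclaim nodes from preemptible jobs. Choosing the lowest-priority preemptible jobs first and the `(job_id, nodes, priority, preemptible)` tuple layout are assumptions; `plan_preemption` is hypothetical and says nothing about how Moab suspends, requeues, or cancels the victims.

```python
from typing import List, Optional, Tuple

# (job_id, nodes, priority, preemptible) for each running job.
Running = Tuple[str, int, int, bool]


def plan_preemption(need: int, free: int, running: List[Running],
                    preemptor: bool) -> Optional[List[str]]:
    """Jobs to preempt so a new job can start: [] if none needed, None if impossible."""
    if need <= free:
        return []  # fits already; no scheduling decision has to be retracted
    if not preemptor:
        return None  # only preemptor jobs may reclaim resources
    victims: List[str] = []
    available = free
    # Assumption: retract the lowest-priority preemptible jobs first.
    for job_id, nodes, _prio, preemptible in sorted(running, key=lambda r: r[2]):
        if not preemptible:
            continue
        victims.append(job_id)
        available += nodes
        if available >= need:
            return victims
    return None


if __name__ == "__main__":
    running = [("r1", 4, 100, True), ("r2", 8, 50, True), ("r3", 8, 10, False)]
    print(plan_preemption(8, 2, running, preemptor=True))  # ['r2']
```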

Malleable/Dynamic Jobs
• Moab adjusts jobs to utilize available resources and fill holes
• Moab adjusts both job size and job duration
• Only supported with resource managers that support dynamic job modification (e.g., TORQUE) or with msub
http://www.clusterresources.com/products/mwm/docs/22.4dynamicjobs.shtml

Backfill
• Allows a scheduler to make better use of available resources by running jobs out of order
• The scheduler prioritizes the jobs in the queue according to a number of factors and then orders them into a highest-priority-first (or priority FIFO) sorted list
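
The slide covers the ordering step; the backfill step itself can be sketched as EASY-style backfill, one common variant chosen here for illustration rather than Moab's own backfill policy. Jobs start in priority order until one does not fit; that job gets a reservation at the earliest time enough nodes free up (`shadow`), and lower-priority jobs may start now only if they finish before `shadow` or fit in the nodes the reservation leaves spare. Treating walltimes as exact end times is an assumption.

```python
from typing import List, Optional, Tuple

Job = Tuple[str, int, int]  # (job_id, nodes requested, walltime limit)
Run = Tuple[int, int]       # (nodes in use, expected end time)


def backfill(queue: List[Job], free: int, running: List[Run], now: int = 0) -> List[str]:
    """Return ids of jobs to start now; `queue` is in priority order, highest first."""
    started: List[str] = []
    ends = list(running)
    shadow: Optional[int] = None  # reserved start time of the first blocked job
    spare = 0                     # nodes still free at `shadow` once that job starts

    for job_id, nodes, wall in queue:
        if shadow is None:
            if nodes <= free:
                # Head of the queue fits: start it in priority order.
                started.append(job_id)
                free -= nodes
                ends.append((nodes, now + wall))
                continue
            # First blocked job: find the earliest time enough nodes free up.
            # (If it can never fit, shadow stays None and the next job is tried.)
            avail = free
            for n, end in sorted(ends, key=lambda r: r[1]):
                avail += n
                if avail >= nodes:
                    shadow, spare = end, avail - nodes
                    break
            continue
        # Backfill: a lower-priority job may start only if it cannot delay the
        # reservation, i.e. it ends by `shadow` or fits in the spare nodes.
        if nodes <= free and (now + wall <= shadow or nodes <= spare):
            started.append(job_id)
            free -= nodes
            if now + wall > shadow:
                spare -= nodes
    return started


if __name__ == "__main__":
    # 10-node machine, 8 nodes busy until t=100. A needs all 10 nodes, so it is
    # reserved for t=100; short job B backfills, long job D would delay A.
    print(backfill([("A", 10, 50), ("D", 2, 200), ("B", 2, 50)], 2, [(8, 100)]))  # ['B']
```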
