
- Number of slides: 115

05398 Introduction to Communication, Lectures 3-5: The Transport Layer (Chapter 3 – Transport)

Prof. Amir Herzberg

Based on foils by Kurose & Ross ©, see: http://www.aw.com/kurose-ross/

My site: http://amirherzberg.com

Computer Networking: A Top Down Approach Featuring the Internet, 3rd edition. Jim Kurose, Keith Ross. Addison-Wesley, July 2005.


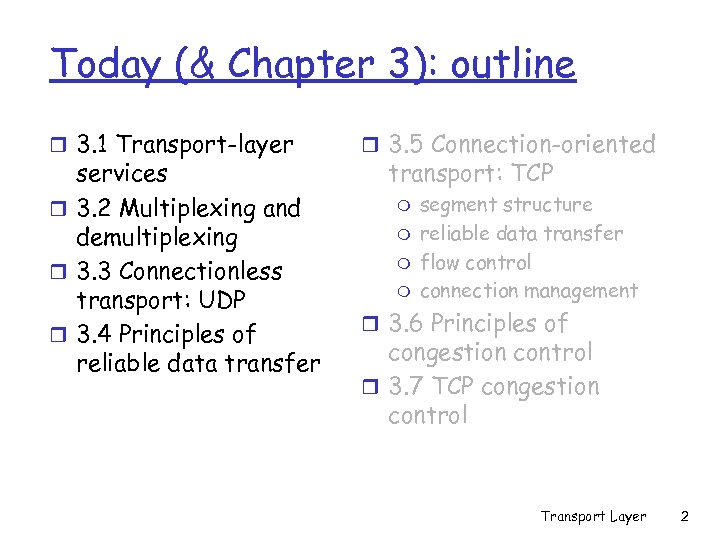

Today (& Chapter 3): outline

- 3.1 Transport-layer services
- 3.2 Multiplexing and demultiplexing
- 3.3 Connectionless transport: UDP
- 3.4 Principles of reliable data transfer
- 3.5 Connection-oriented transport: TCP
  - segment structure
  - reliable data transfer
  - flow control
  - connection management
- 3.6 Principles of congestion control
- 3.7 TCP congestion control


Processes & communication

Process: program running within a host.

- Within the same host, two processes communicate using inter-process communication (IPC)
  - Not in this course
- Processes in different hosts communicate by exchanging messages
  - In this course

Client process: process that initiates communication

Server process: process that waits to be contacted; always on


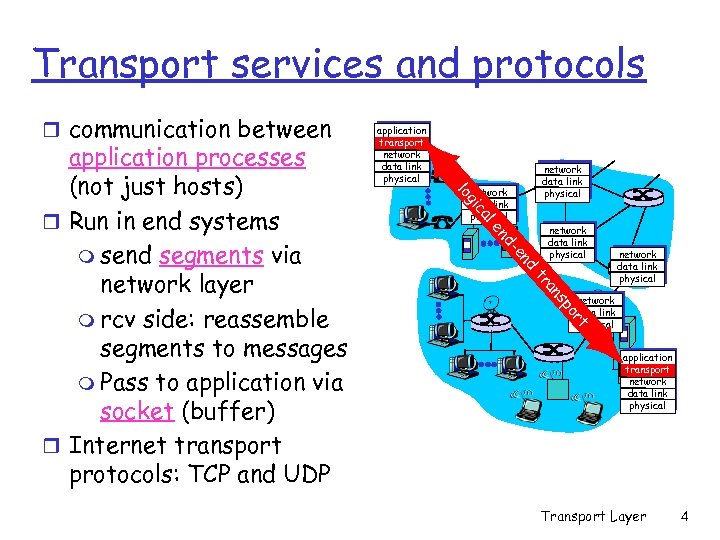

Transport services and protocols

- Communication between application processes (not just hosts)
- Run in end systems
  - send segments via network layer
  - rcv side: reassemble segments to messages
  - pass to application via socket (buffer)
- Internet transport protocols: TCP and UDP

[Figure: logical end-end transport between end systems; protocol stacks labeled application, transport, network, data link, physical]


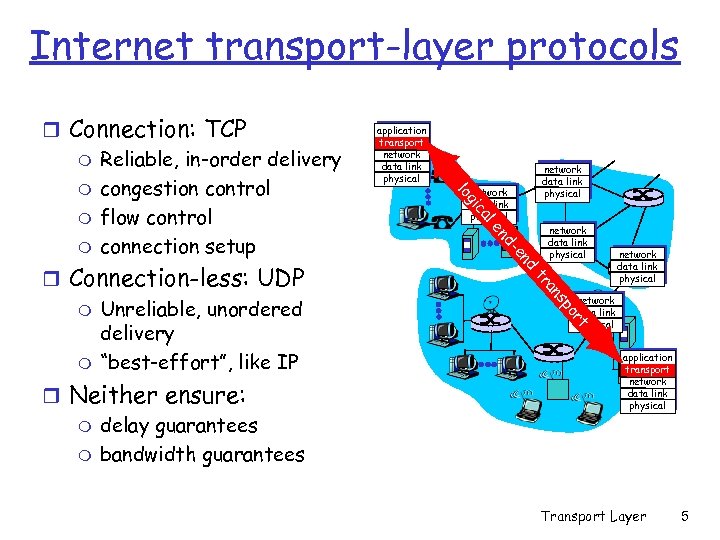

Internet transport-layer protocols

- Connection: TCP
  - Reliable, in-order delivery
  - congestion control
  - flow control
  - connection setup
- Connection-less: UDP
  - Unreliable, unordered delivery
  - “best-effort”, like IP
- Neither ensures:
  - delay guarantees
  - bandwidth guarantees

[Figure: logical end-end transport between end systems; protocol stacks labeled application, transport, network, data link, physical]


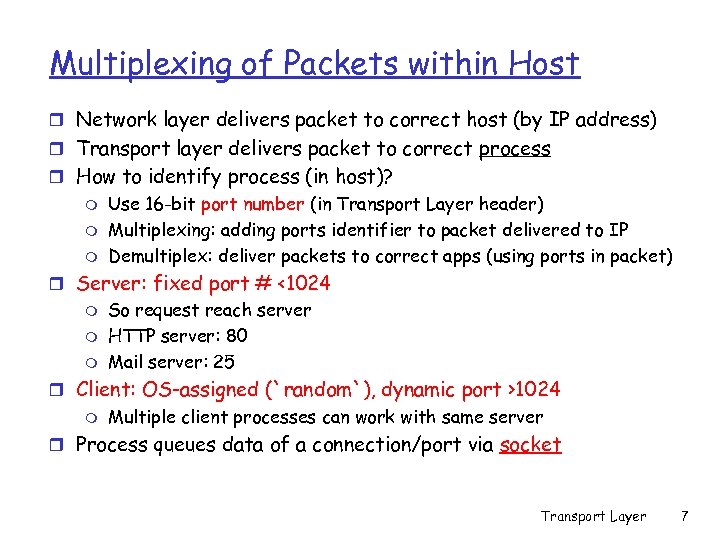

Multiplexing of Packets within Host

- Network layer delivers packet to correct host (by IP address)
- Transport layer delivers packet to correct process
- How to identify process (in host)?
  - Use 16-bit port number (in Transport Layer header)
  - Multiplexing: adding port identifiers to packet delivered to IP
  - Demultiplexing: deliver packets to correct apps (using ports in packet)
- Server: fixed port # <1024
  - So requests reach the server
  - HTTP server: 80
  - Mail server: 25
- Client: OS-assigned (`random`), dynamic port ≥1024
  - Multiple client processes can work with same server
- Process queues data of a connection/port via socket


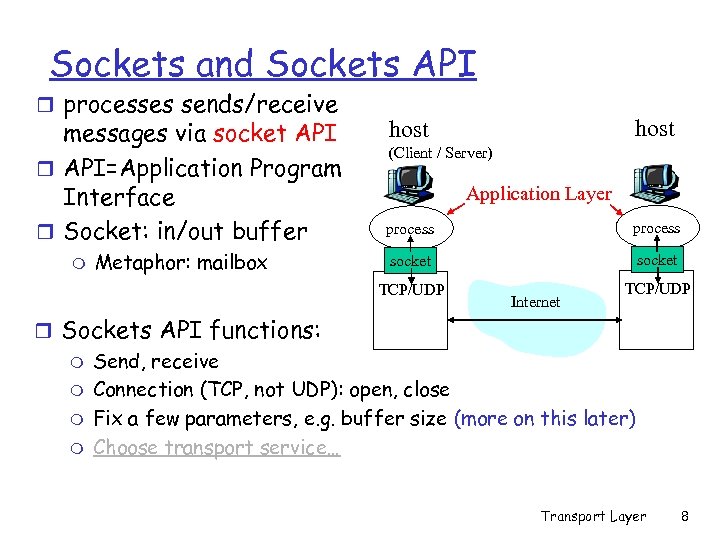

Sockets and Sockets API

- Processes send/receive messages via the socket API
- API = Application Program Interface
- Socket: in/out buffer
  - Metaphor: mailbox
- Sockets API functions:
  - Send, receive
  - Connection (TCP, not UDP): open, close
  - Fix a few parameters, e.g. buffer size (more on this later)
  - Choose transport service…

[Figure: host (client / server): application-layer process, socket, TCP/UDP, Internet, TCP/UDP]


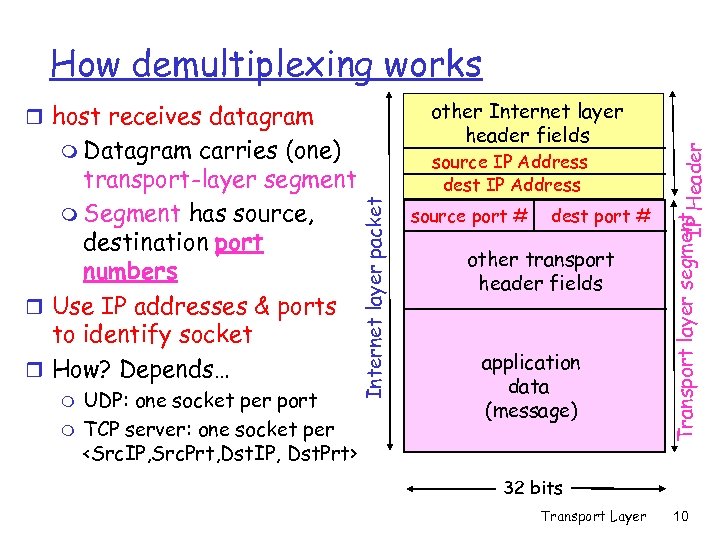

[Figure: datagram with other Internet layer header fields]

- Host receives datagram that carries (one) transport-layer segment
  - Segment has source, destination port numbers
- Use IP addresses & ports to identify socket
- How? Depends…
  - UDP: one socket per port
  - TCP server: one socket per


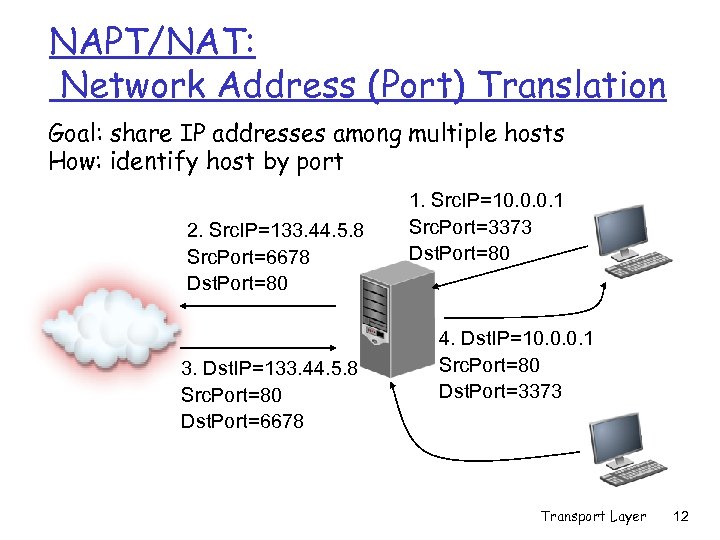

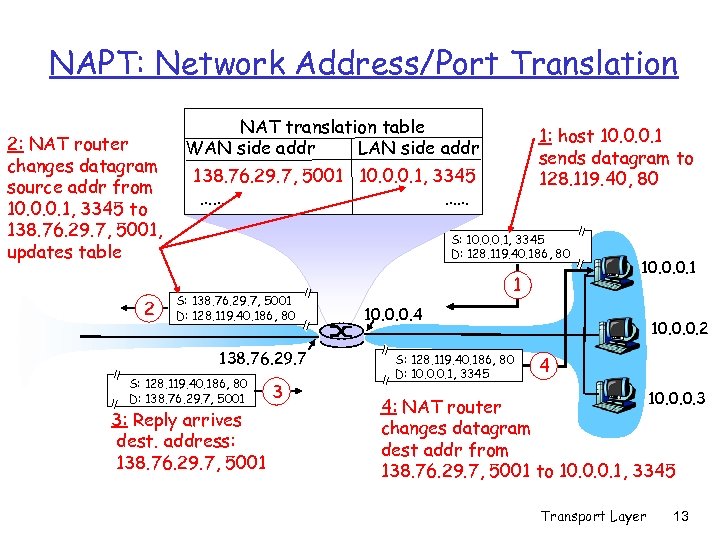

NAPT/NAT: Network Address (Port) Translation

Goal: share IP addresses among multiple hosts

How: identify host by port

1. Src.IP=10.0.0.1, Src.Port=3373, Dst.Port=80
2. Src.IP=133.44.5.8, Src.Port=6678, Dst.Port=80
3. Dst.IP=133.44.5.8, Src.Port=80, Dst.Port=6678
4. Dst.IP=10.0.0.1, Src.Port=80, Dst.Port=3373


NAPT: Network Address/Port Translation

1. Host 10.0.0.1 sends datagram to 128.119.40.186, 80
   - S: 10.0.0.1, 3345; D: 128.119.40.186, 80
2. NAT router changes datagram source addr from 10.0.0.1, 3345 to 138.76.29.7, 5001, updates table
   - S: 138.76.29.7, 5001; D: 128.119.40.186, 80
3. Reply arrives, dest. address: 138.76.29.7, 5001
   - S: 128.119.40.186, 80; D: 138.76.29.7, 5001
4. NAT router changes datagram dest addr from 138.76.29.7, 5001 to 10.0.0.1, 3345
   - S: 128.119.40.186, 80; D: 10.0.0.1, 3345

NAT translation table

| WAN side addr | LAN side addr |
| --- | --- |
| 138.76.29.7, 5001 | 10.0.0.1, 3345 |
| …… | …… |

[Figure: NAT router (WAN side 138.76.29.7) with LAN-side addresses 10.0.0.1, 10.0.0.2, 10.0.0.3, 10.0.0.4]
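
The four translation steps can be sketched as a pair of table lookups. This is a minimal illustration only: the `Napt` class, the `Endpoint` record, and the sequential WAN-port allocation starting at 5001 (chosen to match the slide's example) are assumptions, and a real NAT router also tracks protocol, entry timeouts, and more.

```java
import java.util.HashMap;
import java.util.Map;

/** Minimal NAPT sketch: maps (LAN address, LAN port) to a WAN-side port and back. */
class Napt {
    /** An (IP address, port) pair as it appears in a datagram header. */
    record Endpoint(String ip, int port) {}

    private final String wanIp;
    private int nextWanPort; // hypothetical allocation policy: hand out ports sequentially
    private final Map<Endpoint, Integer> lanToWan = new HashMap<>();
    private final Map<Integer, Endpoint> wanToLan = new HashMap<>();

    Napt(String wanIp, int firstWanPort) {
        this.wanIp = wanIp;
        this.nextWanPort = firstWanPort;
    }

    /** Steps 1-2: rewrite the source of an outgoing datagram, adding a table entry if needed. */
    Endpoint translateOutgoing(Endpoint lanSource) {
        Integer wanPort = lanToWan.get(lanSource);
        if (wanPort == null) {
            wanPort = nextWanPort++;
            lanToWan.put(lanSource, wanPort);
            wanToLan.put(wanPort, lanSource);
        }
        return new Endpoint(wanIp, wanPort);
    }

    /** Steps 3-4: find the LAN destination for an incoming reply; null if no entry exists. */
    Endpoint translateIncoming(int wanDestPort) {
        return wanToLan.get(wanDestPort);
    }

    public static void main(String[] args) {
        Napt nat = new Napt("138.76.29.7", 5001);
        Endpoint out = nat.translateOutgoing(new Endpoint("10.0.0.1", 3345));
        System.out.println("outgoing source rewritten to: " + out);
        System.out.println("reply forwarded to: " + nat.translateIncoming(out.port()));
    }
}
```

A reply to a WAN port with no table entry returns `null` here; what a router does with such a datagram is outside this sketch.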


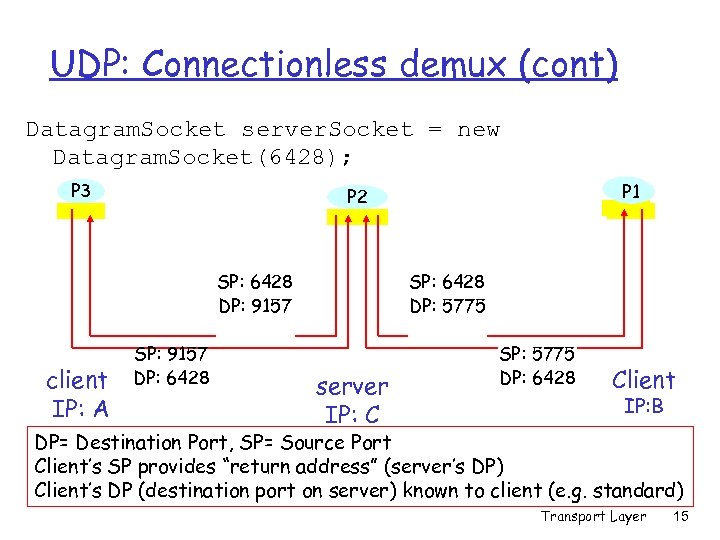

UDP: Connectionless demultiplexing

- Create sockets with port numbers:
  - `DatagramSocket mySocket1 = new DatagramSocket(9111);`
  - `DatagramSocket mySocket2 = new DatagramSocket();`
- UDP socket identified by destination port (and IP)
- When host receives UDP segment:
  - checks destination port number in segment
  - directs UDP segment to socket with that port number
- Regardless of source IP and port


UDP: Connectionless demux (cont)

`DatagramSocket serverSocket = new DatagramSocket(6428);`

[Figure: client IP A, server IP C, client IP B; processes P1, P2, P3]

- Client A to server C: SP: 9157, DP: 6428; reply: SP: 6428, DP: 9157
- Client B to server C: SP: 5775, DP: 6428; reply: SP: 6428, DP: 5775

DP = Destination Port, SP = Source Port

- Client’s SP provides “return address” (server’s DP)
- Client’s DP (destination port on server) known to client (e.g. standard)
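
The connectionless demultiplexing rule can be observed with real `DatagramSocket` objects on the loopback interface. The sketch below is an illustration under assumptions: the class and method names are hypothetical, and port 0 (OS-chosen) replaces the slide's fixed port 6428 so the example does not collide with a port already in use. Two client sockets with different source ports send to one server port, and the single server socket receives both datagrams.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Two client sockets send to one server socket; that one socket receives both datagrams. */
class UdpDemuxDemo {

    /** Returns the source port of each datagram the server received (its "return address"). */
    static List<Integer> sourcePortsSeenByServer() {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        List<Integer> sourcePorts = new ArrayList<>();
        // Port 0 asks the OS to pick any free port.
        try (DatagramSocket server = new DatagramSocket(0, loopback);
             DatagramSocket clientA = new DatagramSocket(0, loopback);
             DatagramSocket clientB = new DatagramSocket(0, loopback)) {
            server.setSoTimeout(2000); // give up instead of blocking forever if a datagram is lost
            byte[] data = "hello".getBytes(StandardCharsets.UTF_8);
            for (DatagramSocket client : List.of(clientA, clientB)) {
                // Both clients use the same destination port: the server's.
                client.send(new DatagramPacket(data, data.length, loopback, server.getLocalPort()));
            }
            for (int i = 0; i < 2; i++) {
                DatagramPacket in = new DatagramPacket(new byte[64], 64);
                server.receive(in); // same socket, whatever the source IP and port
                sourcePorts.add(in.getPort());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sourcePorts;
    }

    public static void main(String[] args) {
        System.out.println("source ports seen by server: " + sourcePortsSeenByServer());
    }
}
```

The source port read from each received packet is what the server would use as the destination port of a reply.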


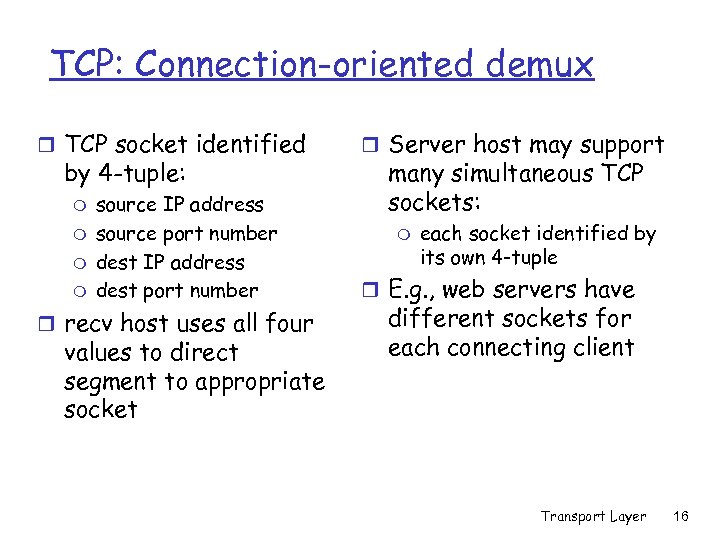

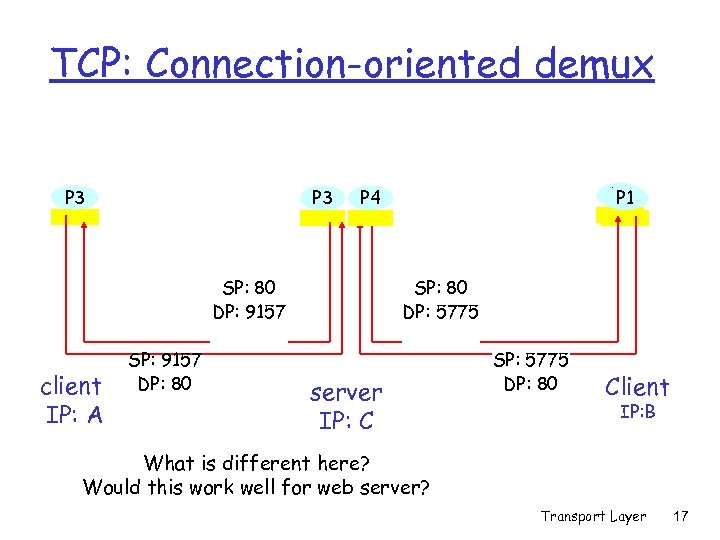

TCP: Connection-oriented demux

- TCP socket identified by 4-tuple:
  - source IP address
  - source port number
  - dest IP address
  - dest port number
- Receiving host uses all four values to direct segment to appropriate socket
- Server host may support many simultaneous TCP sockets:
  - each socket identified by its own 4-tuple
- E.g., web servers have different sockets for each connecting client
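
Demultiplexing on the full 4-tuple can be sketched as a map lookup keyed by all four header values. This is an illustration, not how an operating system actually stores its connection table; `TcpDemux`, `FourTuple`, and the socket labels are hypothetical names, and the addresses A, B, C follow the slides.

```java
import java.util.HashMap;
import java.util.Map;

/** Sketch of connection-oriented demultiplexing: one socket per 4-tuple. */
class TcpDemux {
    record FourTuple(String srcIp, int srcPort, String dstIp, int dstPort) {}

    // Socket names are hypothetical labels standing in for real socket objects.
    private final Map<FourTuple, String> sockets = new HashMap<>();

    /** Registers the socket that serves one established connection. */
    void open(FourTuple connection, String socketName) {
        sockets.put(connection, socketName);
    }

    /** Returns the socket for an arriving segment, or null if no connection matches. */
    String demux(FourTuple segmentHeader) {
        return sockets.get(segmentHeader);
    }

    public static void main(String[] args) {
        TcpDemux server = new TcpDemux();
        server.open(new FourTuple("A", 9157, "C", 80), "socket-1");
        server.open(new FourTuple("B", 5775, "C", 80), "socket-2");
        // Same destination port 80, but different sockets because the sources differ.
        System.out.println(server.demux(new FourTuple("A", 9157, "C", 80)));
        System.out.println(server.demux(new FourTuple("B", 5775, "C", 80)));
    }
}
```

Unlike the UDP case, two segments with the same destination port reach different sockets whenever their source IP or source port differs.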


TCP: Connection-oriented demux

[Figure: client IP A, server IP C, client IP B; processes P1, P3, P4]

- Client A to server C: SP: 9157, DP: 80; reply: SP: 80, DP: 9157
- Client B to server C: SP: 5775, DP: 80; reply: SP: 80, DP: 5775

What is different here? Would this work well for a web server?


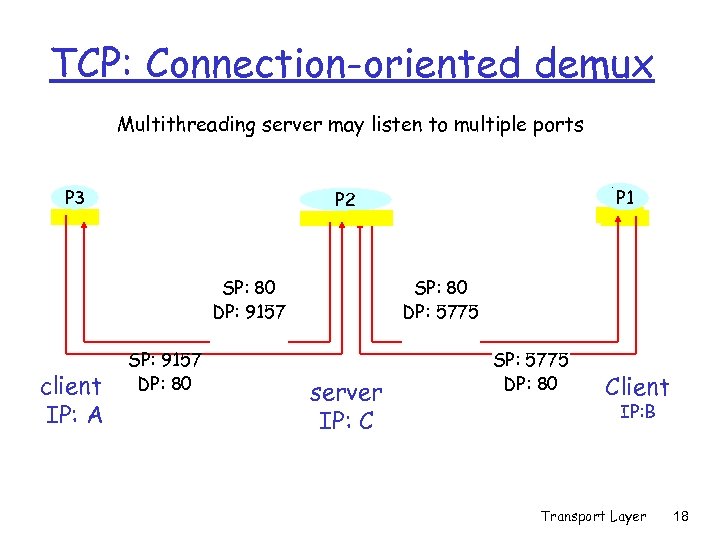

TCP: Connection-oriented demux

Multithreading server may listen to multiple ports

[Figure: client IP A, server IP C, client IP B; processes P1, P2, P3]

- Client A to server C: SP: 9157, DP: 80; reply: SP: 80, DP: 9157
- Client B to server C: SP: 5775, DP: 80; reply: SP: 80, DP: 5775


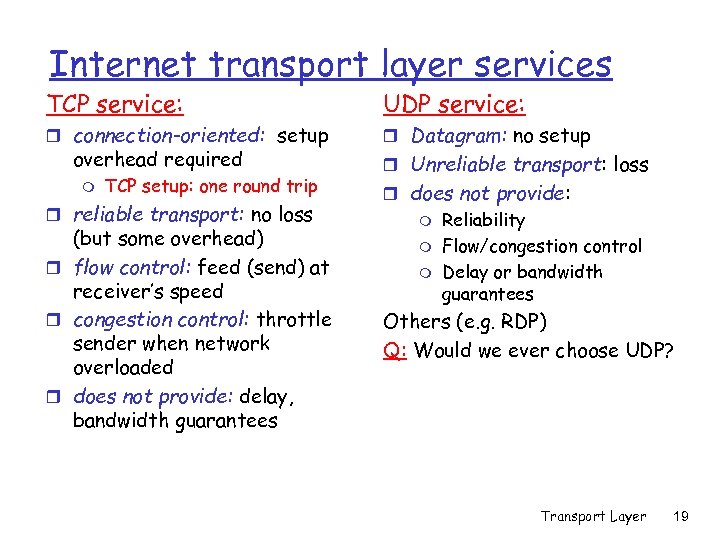

Internet transport layer services

TCP service:

- connection-oriented: setup required
  - TCP setup: one round trip
- reliable transport: no loss (but some overhead)
- flow control: feed (send) at receiver’s speed
- congestion control: throttle sender when network overloaded
- does not provide: delay, bandwidth guarantees

UDP service:

- Datagram: no setup overhead
- Unreliable transport: loss
- does not provide:
  - Reliability
  - Flow/congestion control
  - Delay or bandwidth guarantees

Others (e.g. RDP)

Q: Would we ever choose UDP?


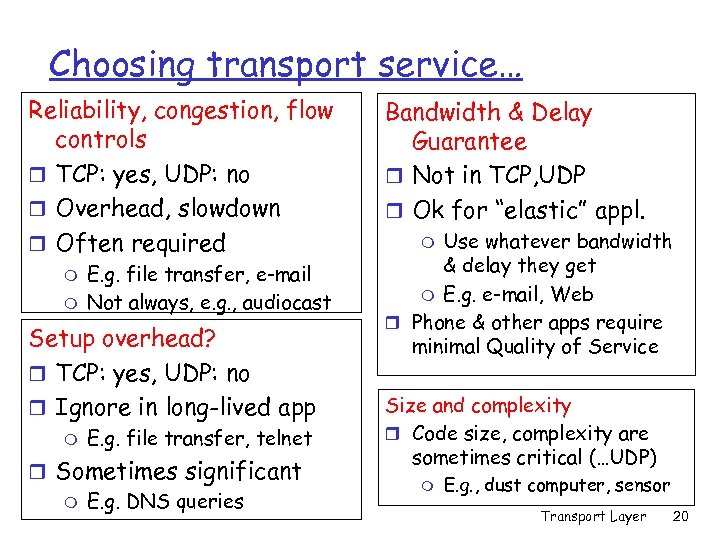

Choosing transport service…

Reliability, congestion, flow controls

- TCP: yes, UDP: no
- Overhead, slowdown
- Often required
  - E.g. file transfer, e-mail
  - Not always, e.g., audiocast

Setup overhead?

- TCP: yes, UDP: no
- Ignore in long-lived app
  - E.g. file transfer, telnet
- Sometimes significant
  - E.g. DNS queries

Bandwidth & Delay Guarantee

- Not in TCP, UDP
- Ok for “elastic” applications: use whatever bandwidth & delay they get
  - E.g. e-mail, Web
- Phone & other apps require minimal Quality of Service

Size and complexity

- Code size, complexity are sometimes critical (…UDP)
  - E.g., dust computer, sensor


Chapter 3 outline

- 3.1 Transport-layer services
- 3.2 Multiplexing and demultiplexing
- 3.3 Connectionless transport: UDP
- 3.4 Principles of reliable data transfer
- 3.5 Connection-oriented transport: TCP
  - segment structure
  - reliable data transfer
  - flow control
  - connection management
- 3.6 Principles of congestion control
- 3.7 TCP congestion control


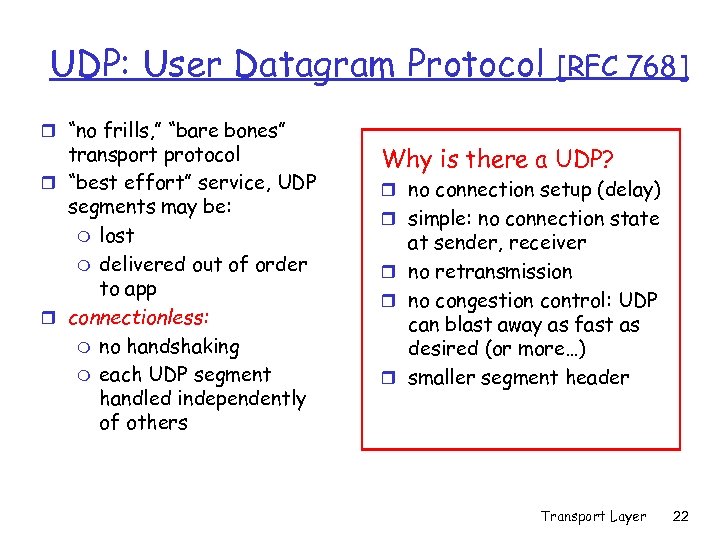

UDP: User Datagram Protocol [RFC 768]

- “no frills,” “bare bones” transport protocol
- “best effort” service, UDP segments may be:
  - lost
  - delivered out of order to app
- connectionless:
  - no handshaking
  - each UDP segment handled independently of others

Why is there a UDP?

- no connection setup (delay)
- simple: no connection state at sender, receiver
- no retransmission
- no congestion control: UDP can blast away as fast as desired (or more…)
- smaller segment header


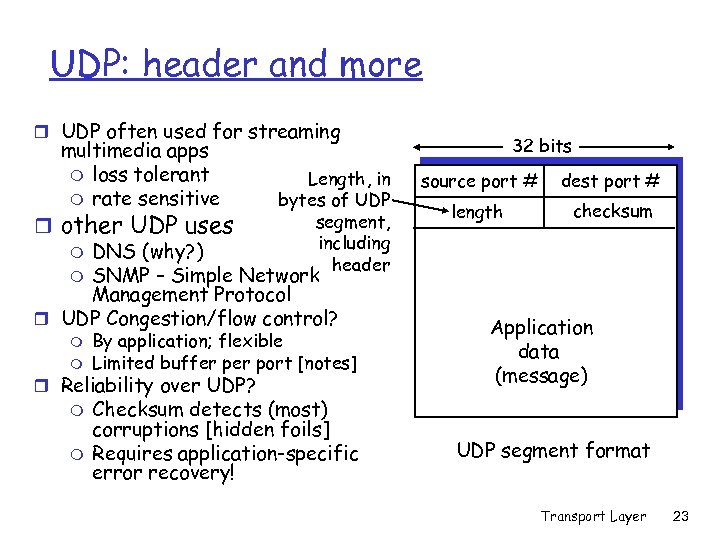

UDP: header and more

- UDP often used for streaming multimedia apps
  - loss tolerant
  - rate sensitive
- other UDP uses
  - DNS (why?)
  - SNMP – Simple Network Management Protocol
- UDP congestion/flow control?
  - By application; flexible
  - Limited buffer port [notes]
- Reliability over UDP?
  - Checksum detects (most) corruptions [hidden foils]
  - Requires application-specific error recovery!

UDP segment format (each row is 32 bits):

- source port # | dest port #
- length | checksum
- Application data (message)

Length: in bytes, of UDP segment, including header
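
The segment format can be made concrete by packing the four 16-bit header fields ahead of the payload. This is a sketch under assumptions: `UdpSegment`, `build`, and `field` are hypothetical names, and the checksum is left at 0 rather than computed (in UDP over IPv4, an all-zero checksum field means the sender generated no checksum, per RFC 768).

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** Sketch of the UDP segment layout: four 16-bit header fields, then application data. */
class UdpSegment {
    static final int HEADER_BYTES = 8;

    /** Builds a segment; the checksum field is left as 0 (not computed in this sketch). */
    static byte[] build(int srcPort, int dstPort, byte[] payload) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_BYTES + payload.length); // big-endian by default
        buf.putShort((short) srcPort);
        buf.putShort((short) dstPort);
        buf.putShort((short) (HEADER_BYTES + payload.length)); // length includes the header
        buf.putShort((short) 0);                               // checksum placeholder
        buf.put(payload);
        return buf.array();
    }

    /** Reads header field number index (0 = source port, 1 = dest port, 2 = length, 3 = checksum). */
    static int field(byte[] segment, int index) {
        return ByteBuffer.wrap(segment).getShort(2 * index) & 0xFFFF; // mask to read as unsigned
    }

    public static void main(String[] args) {
        byte[] segment = build(9157, 6428, "hi".getBytes(StandardCharsets.UTF_8));
        System.out.println("source port: " + field(segment, 0));
        System.out.println("dest port:   " + field(segment, 1));
        System.out.println("length:      " + field(segment, 2));
    }
}
```

The mask with `0xFFFF` matters because Java's `short` is signed while port numbers above 32767 are valid.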


Chapter 3 outline

- 3.1 Transport-layer services
- 3.2 Multiplexing and demultiplexing
- 3.3 Connectionless transport: UDP
- 3.4 Principles of reliable data transfer
- 3.5 Connection-oriented transport: TCP
  - segment structure
  - reliable data transfer
  - flow control
  - connection management
- 3.6 Principles of congestion control
- 3.7 TCP congestion control


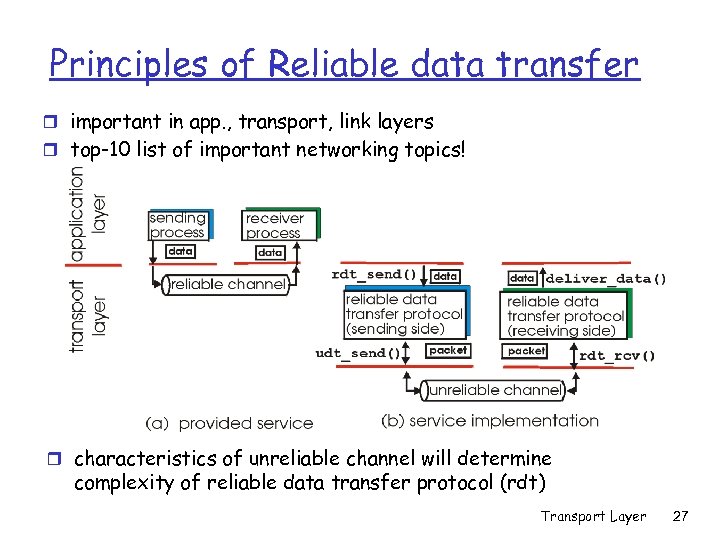

Principles of Reliable data transfer

- important in app., transport, link layers
- top-10 list of important networking topics!
- characteristics of unreliable channel will determine complexity of reliable data transfer protocol (rdt)


RDT Protocol: Events

- Finite state machine
- Inputs:
  - `rdt_send(m)` – message m from application
  - `rdt_rcv(p)` – packet p from (unreliable) channel
- Outputs:
  - `udt_send(p)` – packet p to (unreliable) channel
  - `deliver_data(m)` – message m to application
  - `ready()` – to receive another message from application
- Execution:
  - Sequence of input, output events
  - Outputs defined by protocol


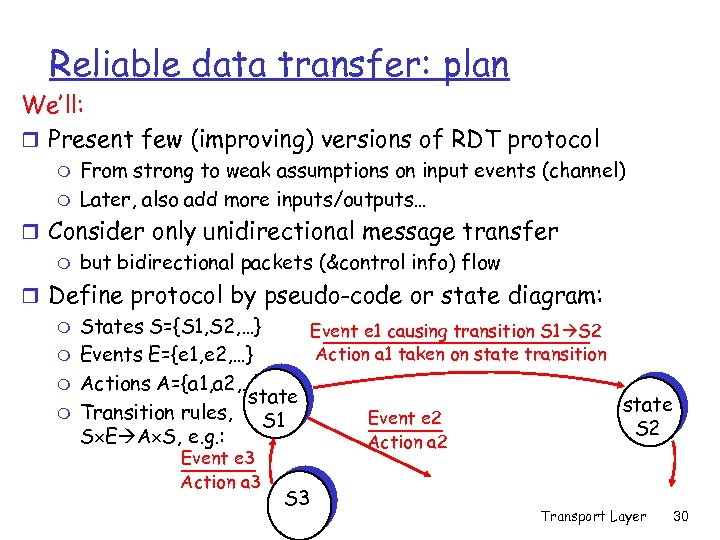

Reliable data transfer: plan

We’ll:

- Present a few (improving) versions of RDT protocol
  - From strong to weak assumptions on input events (channel)
  - Later, also add more inputs/outputs…
- Consider only unidirectional message transfer
  - but bidirectional packets (& control info) flow
- Define protocol by pseudo-code or state diagram:
  - States S={S1, S2, …}
  - Events E={e1, e2, …}
  - Actions A={a1, a2, …}
  - Transition rules, $S \times E \rightarrow A \times S$, e.g.:

[Figure: state diagram with states S1, S2, S3. Each arrow is labeled with the event causing the transition (e.g. event e1 causing transition S1 → S2) and the action taken on the state transition (e.g. action a1); further arrows are labeled event e2 / action a2 and event e3 / action a3]


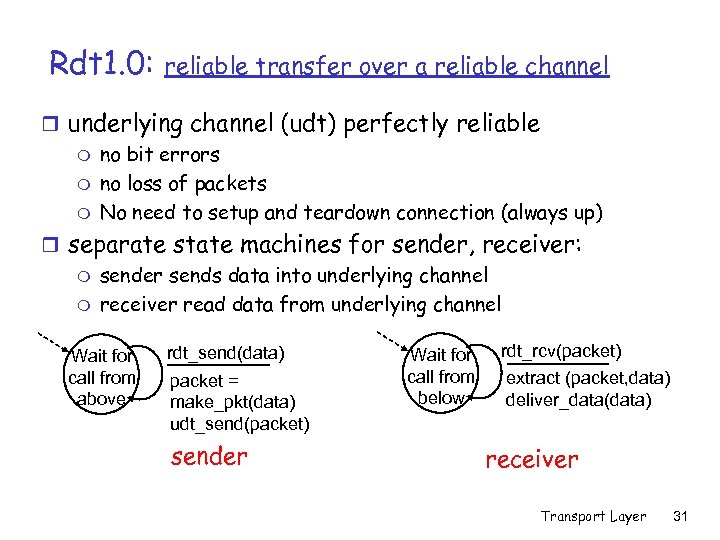

Rdt 1.0: reliable transfer over a reliable channel

- underlying channel (udt) perfectly reliable
  - no bit errors
  - no loss of packets
  - no need to set up and tear down connection (always up)
- separate state machines for sender, receiver:
  - sender sends data into underlying channel
  - receiver reads data from underlying channel

Sender FSM:

- State “Wait for call from above”: on `rdt_send(data)`: `packet = make_pkt(data)`; `udt_send(packet)`

Receiver FSM:

- State “Wait for call from below”: on `rdt_rcv(packet)`: `extract(packet, data)`; `deliver_data(data)`


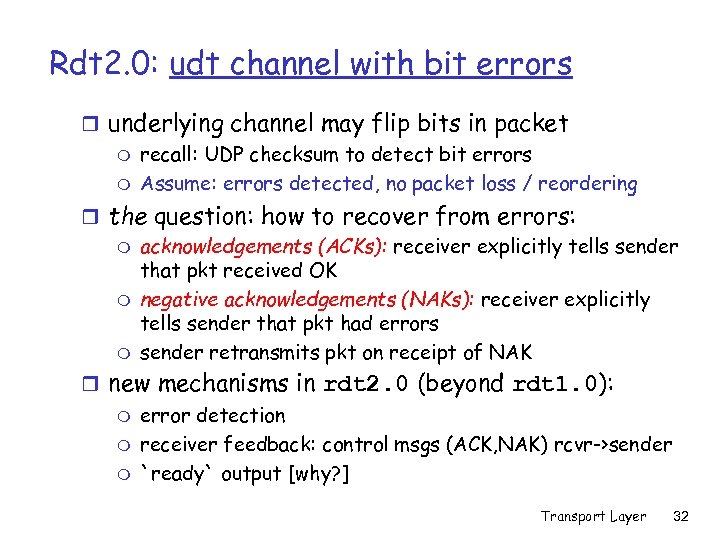

Rdt 2.0: udt channel with bit errors

- underlying channel may flip bits in packet
  - recall: UDP checksum to detect bit errors
  - Assume: errors detected, no packet loss / reordering
- the question: how to recover from errors:
  - acknowledgements (ACKs): receiver explicitly tells sender that pkt received OK
  - negative acknowledgements (NAKs): receiver explicitly tells sender that pkt had errors
  - sender retransmits pkt on receipt of NAK
- new mechanisms in rdt 2.0 (beyond rdt 1.0):
  - error detection
  - receiver feedback: control msgs (ACK, NAK) rcvr->sender
  - `ready` output [why?]


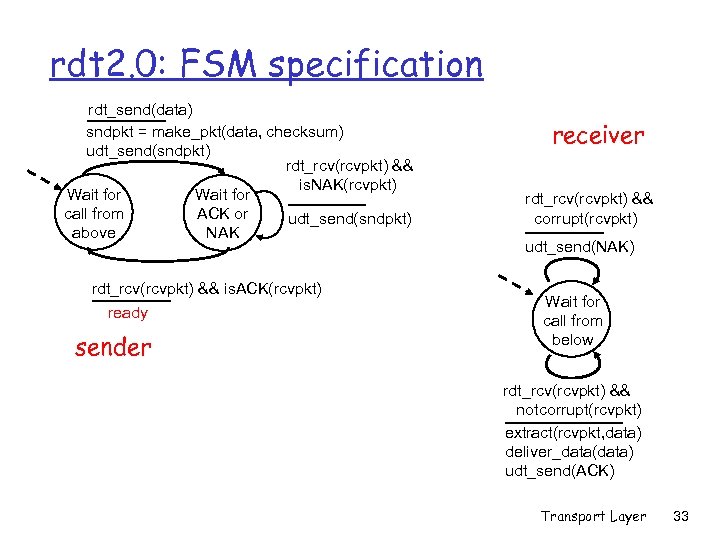

rdt 2.0: FSM specification

Sender:

- State “Wait for call from above”:
  - on `rdt_send(data)`: `sndpkt = make_pkt(data, checksum)`; `udt_send(sndpkt)`; go to “Wait for ACK or NAK”
- State “Wait for ACK or NAK”:
  - on `rdt_rcv(rcvpkt) && isNAK(rcvpkt)`: `udt_send(sndpkt)`; stay in this state
  - on `rdt_rcv(rcvpkt) && isACK(rcvpkt)`: `ready`; go to “Wait for call from above”

Receiver:

- State “Wait for call from below”:
  - on `rdt_rcv(rcvpkt) && corrupt(rcvpkt)`: `udt_send(NAK)`
  - on `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt)`: `extract(rcvpkt, data)`; `deliver_data(data)`; `udt_send(ACK)`


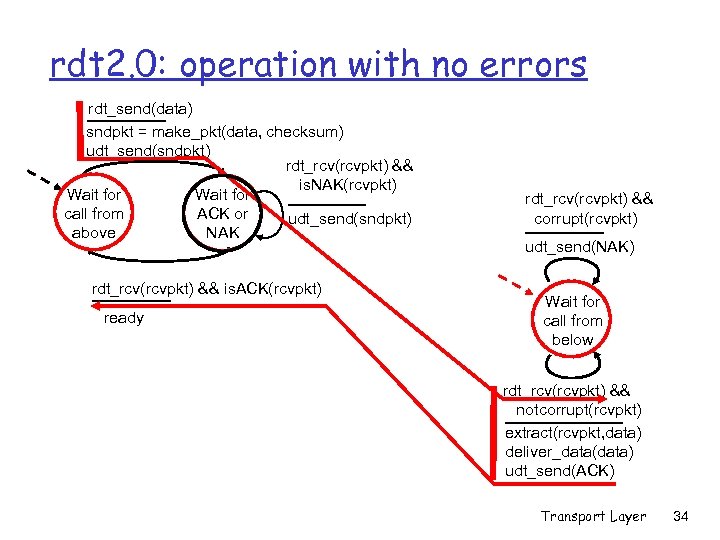

rdt 2.0: operation with no errors

[Figure: the rdt 2.0 FSM. Sender: `rdt_send(data)` → `sndpkt = make_pkt(data, checksum)`, `udt_send(sndpkt)`; `rdt_rcv(rcvpkt) && isNAK(rcvpkt)` → `udt_send(sndpkt)`; `rdt_rcv(rcvpkt) && isACK(rcvpkt)` → `ready`. Receiver: `rdt_rcv(rcvpkt) && corrupt(rcvpkt)` → `udt_send(NAK)`; `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt)` → `extract(rcvpkt, data)`, `deliver_data(data)`, `udt_send(ACK)`]


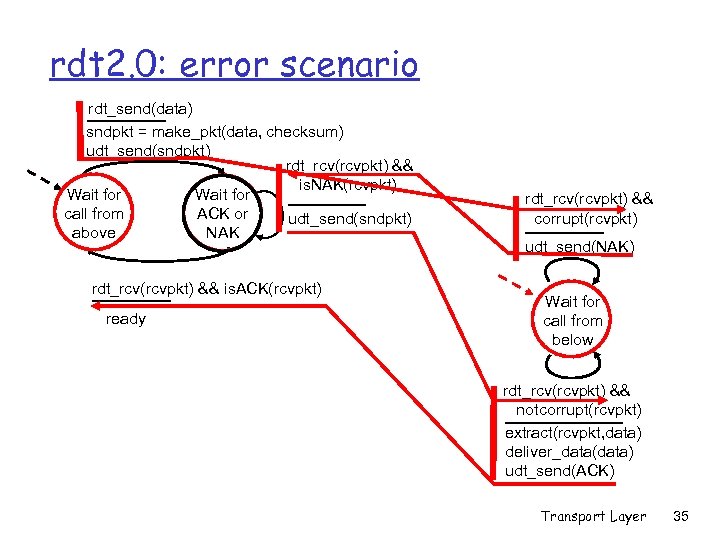

rdt 2.0: error scenario

[Figure: the rdt 2.0 FSM. Sender: `rdt_send(data)` → `sndpkt = make_pkt(data, checksum)`, `udt_send(sndpkt)`; `rdt_rcv(rcvpkt) && isNAK(rcvpkt)` → `udt_send(sndpkt)`; `rdt_rcv(rcvpkt) && isACK(rcvpkt)` → `ready`. Receiver: `rdt_rcv(rcvpkt) && corrupt(rcvpkt)` → `udt_send(NAK)`; `rdt_rcv(rcvpkt) && notcorrupt(rcvpkt)` → `extract(rcvpkt, data)`, `deliver_data(data)`, `udt_send(ACK)`]
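
Both scenarios of the rdt 2.0 FSM (no errors, and a corrupted data packet) can be replayed in a small single-threaded simulation. This sketch makes several assumptions that are not in the slides: the names `Rdt20`, `Packet`, `Result`, and `transfer`; a toy checksum (sum of character codes); and a channel that damages exactly the transmissions listed in `corruptedSends`. As in rdt 2.0 itself, ACKs and NAKs are assumed to arrive intact.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Single-threaded replay of the rdt 2.0 sender and receiver over a bit-error channel. */
class Rdt20 {
    record Packet(String data, int checksum) {}
    record Result(List<String> delivered, int transmissions) {}

    /** Toy error-detection code for this sketch: 16-bit sum of character codes. */
    static int checksum(String data) {
        int sum = 0;
        for (char c : data.toCharArray()) sum += c;
        return sum & 0xFFFF;
    }

    static boolean corrupt(Packet p) {
        return checksum(p.data()) != p.checksum();
    }

    /** corruptedSends holds the 0-based indices of udt_send calls that the channel damages. */
    static Result transfer(List<String> messages, Set<Integer> corruptedSends) {
        List<String> delivered = new ArrayList<>();
        int sends = 0;
        for (String data : messages) {                       // sender: rdt_send(data)
            Packet sndpkt = new Packet(data, checksum(data)); // make_pkt(data, checksum)
            boolean waitingForAckOrNak = true;
            while (waitingForAckOrNak) {
                // udt_send(sndpkt): the channel may alter the data but not drop the packet
                Packet rcvpkt = corruptedSends.contains(sends++)
                        ? new Packet(sndpkt.data() + "?", sndpkt.checksum())
                        : sndpkt;
                boolean ack = !corrupt(rcvpkt);              // receiver replies ACK or NAK
                if (ack) delivered.add(rcvpkt.data());       // extract + deliver_data
                waitingForAckOrNak = !ack;                   // NAK: loop again and retransmit
            }
        }
        return new Result(delivered, sends);
    }

    public static void main(String[] args) {
        System.out.println(transfer(List.of("a", "b"), Set.of()));  // no errors
        System.out.println(transfer(List.of("a", "b"), Set.of(1))); // first send of "b" corrupted
    }
}
```

In the error case the corrupted packet triggers one NAK and one retransmission, so two messages cost three transmissions.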


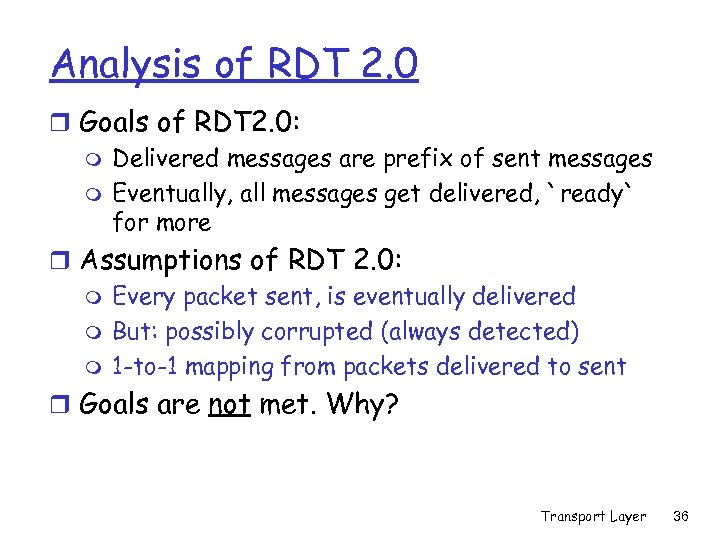

Analysis of RDT 2.0

- Goals of RDT 2.0:
  - Delivered messages are a prefix of sent messages
  - Eventually, all messages get delivered, `ready` for more
- Assumptions of RDT 2.0:
  - Every packet sent is eventually delivered
  - But: possibly corrupted (always detected)
  - 1-to-1 mapping from packets delivered to sent
- Goals are not met. Why?


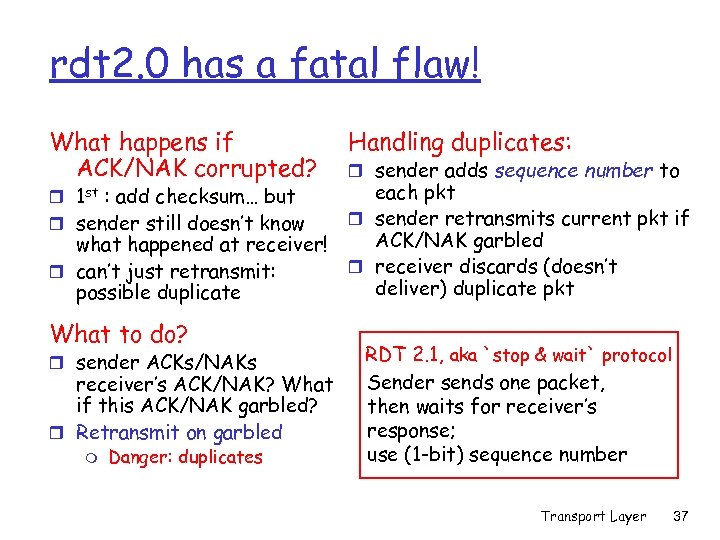

rdt 2.0 has a fatal flaw!

What happens if ACK/NAK corrupted?

- 1st: add checksum… but
- sender still doesn’t know what happened at receiver!
- can’t just retransmit: possible duplicate

What to do?

- sender ACKs/NAKs receiver’s ACK/NAK? What if this ACK/NAK garbled?
- Retransmit on garbled
  - Danger: duplicates

Handling duplicates:

- sender adds sequence number to each pkt
- sender retransmits current pkt if ACK/NAK garbled
- receiver discards (doesn’t deliver) duplicate pkt

RDT 2.1, aka `stop & wait` protocol: sender sends one packet, then waits for receiver’s response; uses (1-bit) sequence number
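
The duplicate-handling rules can be sketched as a stop-and-wait simulation with a 1-bit sequence number. This is not the lecture's RDT 2.1 pseudo-code; it is an illustration with hypothetical names (`Rdt21`, `Result`, `transfer`), where `corruptData` and `corruptFeedback` list the rounds in which the channel garbles the data packet or the ACK/NAK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Stop-and-wait with a 1-bit sequence number; data packets and ACK/NAKs may be garbled. */
class Rdt21 {
    record Result(List<String> delivered, int transmissions) {}

    /** Each round is one data transmission plus the receiver's feedback for it. */
    static Result transfer(List<String> messages, Set<Integer> corruptData,
                           Set<Integer> corruptFeedback) {
        List<String> delivered = new ArrayList<>();
        int senderSeq = 0;    // sequence bit the sender puts on the current packet
        int expectedSeq = 0;  // sequence bit the receiver is waiting for
        int round = 0;
        for (String message : messages) {
            boolean acked = false;
            while (!acked) {
                int r = round++;
                // Receiver side
                boolean feedbackIsAck;
                if (corruptData.contains(r)) {
                    feedbackIsAck = false;             // corrupted packet: NAK
                } else {
                    if (senderSeq == expectedSeq) {    // new packet: deliver it
                        delivered.add(message);
                        expectedSeq ^= 1;
                    }                                  // duplicate: discard, but still ACK
                    feedbackIsAck = true;
                }
                // Sender side: a garbled ACK/NAK is handled like a NAK (retransmit)
                acked = feedbackIsAck && !corruptFeedback.contains(r);
            }
            senderSeq ^= 1;                            // move on to the next message
        }
        return new Result(delivered, round);
    }

    public static void main(String[] args) {
        // The ACK for the first packet is garbled, so "a" is sent twice but delivered once.
        System.out.println(transfer(List.of("a", "b"), Set.of(), Set.of(0)));
    }
}
```

Without the sequence-bit comparison, the retransmission caused by the garbled ACK would be delivered a second time.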


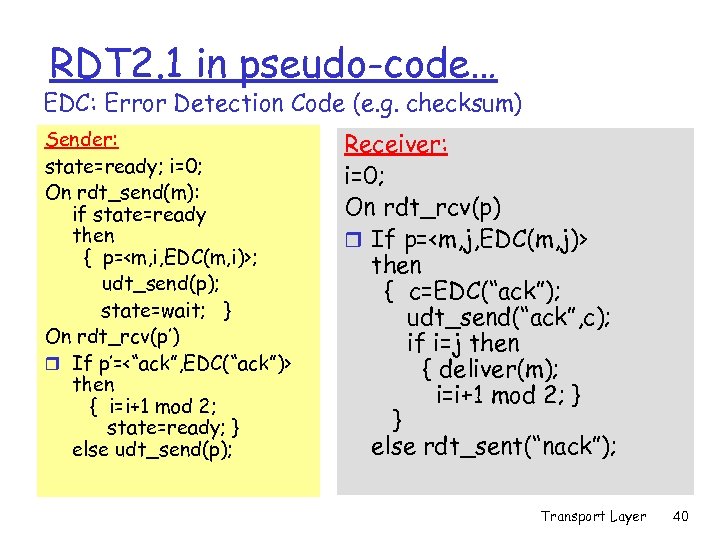

RDT 2.1 in pseudo-code…

EDC: Error Detection Code (e.g. checksum)

Sender: state=ready; i=0; On rdt_send(m): if state=ready then { p=


![Analysis of RDT 2.1 (sketch)](https://present5.com/presentation/4699b155894bd7a24a2f1872aaae267a/image-33.jpg)

Analysis of RDT 2.1 [sketch]

- Goals, Assumptions: same as RDT 2.0
  - Every packet sent is eventually delivered
  - But: possibly corrupted (always detected)
  - 1-to-1 mapping from packets delivered to sent
- Define `legitimate` states: [E=Even, O=Odd]
  1. RE/RO: Ready (to receive E/O message); channel empty
  2. ME/MO: Wait for E/O Ack; channel has new E/O message
  3. AE/AO: Wait for E/O Ack; channel has E/O Ack
  4. MER/MOR: Wait for E/O Ack; channel has E/O message which was already delivered
  5. WEAO: Wait for E Ack but channel has O Ack
  6. WOAE: Wait for O Ack but channel has E Ack
- Prove by induction: system stays in legitimate state!


![Analysis of RDT 2.1 (sketch, cont’)](https://present5.com/presentation/4699b155894bd7a24a2f1872aaae267a/image-34.jpg)

Analysis of RDT 2.1 [sketch, cont’]

- Claim: system always in legitimate state
- Base: initial state is (legitimate) RE
- Consider moves from legitimate states:
  1. RE/RO: only to ME/MO
  2. ME/MO: If received Ok, move to AE/AO; otherwise, to WEAO/WOAE
  3. AE/AO: If received Ok, move to RO/RE; otherwise, to MER/MOR
  4. MER/MOR: same as ME/MO!
  5. WEAO/WOAE: move to MER/MOR
     - If received correctly… and also if corrupted!
- System always stays in legit state!! □


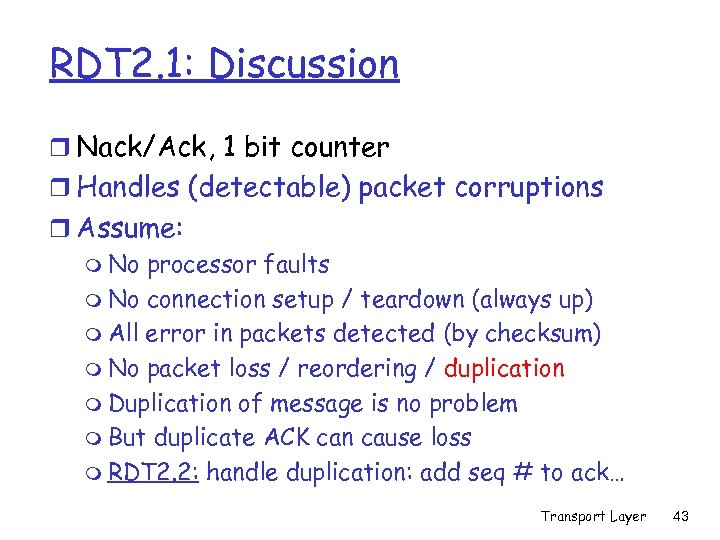

RDT 2.1: Discussion

- Nack/Ack, 1-bit counter
- Handles (detectable) packet corruptions
- Assume:
  - No processor faults
  - No connection setup / teardown (always up)
  - All errors in packets detected (by checksum)
  - No packet loss / reordering / duplication
  - Duplication of message is no problem
  - But duplicate ACK can cause loss
  - RDT 2.2: handle duplication: add seq # to ack…


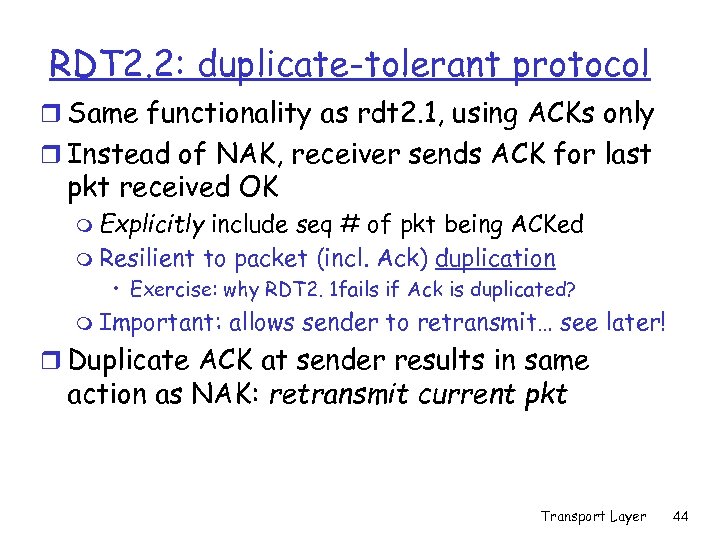

RDT 2.2: duplicate-tolerant protocol

- Same functionality as rdt 2.1, using ACKs only
- Instead of NAK, receiver sends ACK for the last pkt received OK
  - Explicitly include seq # of the pkt being ACKed
  - Resilient to packet (incl. ACK) duplication
    - Exercise: why does RDT 2.1 fail if an ACK is duplicated?
  - Important: allows sender to retransmit… see later!
- A duplicate ACK at the sender results in the same action as a NAK: retransmit current pkt
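The NAK-free behaviour above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `make_receiver` and `sender_should_retransmit` are hypothetical, the channel never loses packets (as in rdt 2.x), and corruption is passed in as a flag instead of being computed from a checksum.

```python
def make_receiver():
    """Build an rdt 2.2-style receiver (illustrative sketch, not the slide's code).

    The returned function takes (seq, payload, corrupt) and returns
    (ack_seq, delivered_payload_or_None).
    """
    # last_ok starts at 1 so that a bad first packet is answered with ACK 1,
    # which the sender (waiting for ACK 0) treats like a NAK.
    state = {"expected": 0, "last_ok": 1}

    def handle(seq, payload, corrupt=False):
        if not corrupt and seq == state["expected"]:
            state["last_ok"] = seq
            state["expected"] ^= 1          # flip the 1-bit counter
            return seq, payload             # ACK this packet and deliver it
        # Corrupt or duplicate packet: re-send the ACK of the last good packet.
        return state["last_ok"], None

    return handle


def sender_should_retransmit(current_seq, ack_seq, corrupt=False):
    """A corrupted ACK or an ACK for the other sequence bit acts like a NAK."""
    return corrupt or ack_seq != current_seq
```

For example, a receiver that has accepted packet 0 answers a corrupted packet 1 with ACK 0, and `sender_should_retransmit(1, 0)` then tells the sender to resend packet 1.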

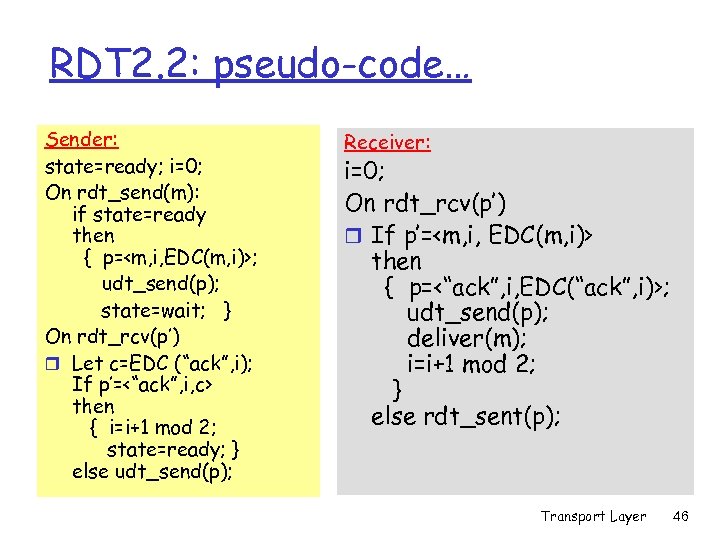

RDT 2.2: pseudo-code…

Sender: state=ready; i=0; On rdt_send(m): if state=ready then { p=

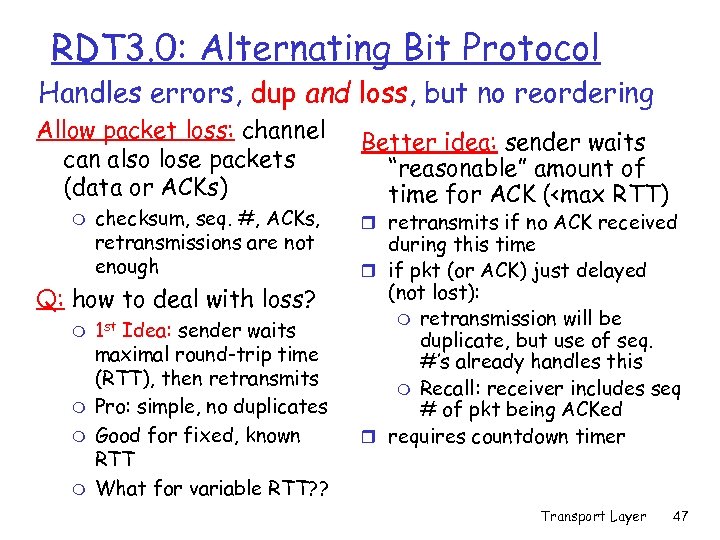

RDT 3.0: Alternating Bit Protocol

Handles errors, duplication and loss, but not reordering.

- Allow packet loss: the channel can also lose packets (data or ACKs)
  - checksum, seq. #, ACKs, retransmissions are not enough
- Q: how to deal with loss?
  - 1st idea: sender waits the maximal round-trip time (RTT), then retransmits
    - Pro: simple, no duplicates
    - Good for a fixed, known RTT
    - What about a variable RTT?
  - Better idea: sender waits a "reasonable" amount of time for ACK (

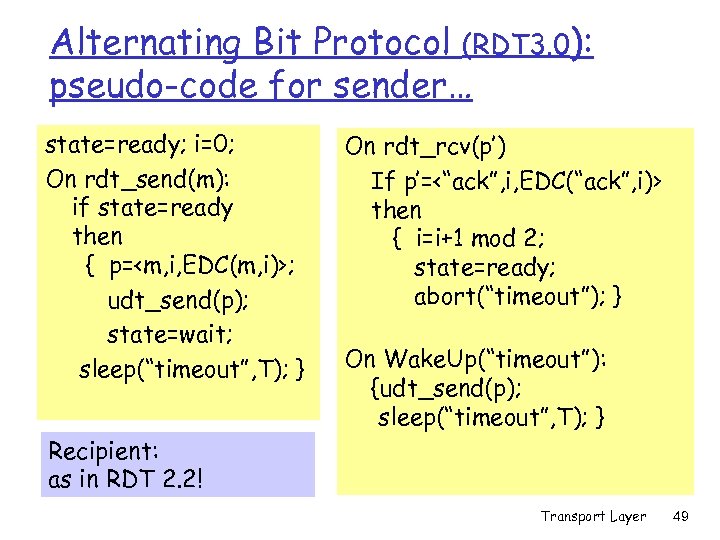

Alternating Bit Protocol (RDT 3.0): pseudo-code for sender…

state=ready; i=0; On rdt_send(m): if state=ready then { p=
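Since the sender pseudo-code on this slide is cut off, the following Python simulation is offered only as a rough sketch of the alternating bit idea, not as a reconstruction of the slide. It assumes a FIFO channel that may lose data packets and ACKs with probability `loss_rate`, models the timeout simply as "try again", and uses hypothetical names throughout.

```python
import random


def alternating_bit_transfer(messages, loss_rate=0.3, seed=1):
    """Simulate stop-and-wait with a 1-bit sequence number over a lossy FIFO channel.

    Returns (delivered, transmissions). loss_rate must be below 1.
    """
    rng = random.Random(seed)
    delivered = []      # what the receiver hands to the application
    expected = 0        # receiver: sequence bit it is waiting for
    transmissions = 0   # data packets put on the channel, incl. retransmissions

    for index, msg in enumerate(messages):
        bit = index % 2             # sender alternates 0, 1, 0, 1, ...
        acked = False
        while not acked:
            transmissions += 1
            if rng.random() < loss_rate:
                continue            # data packet lost: timer expires, retransmit
            if bit == expected:     # new packet: deliver it and flip the bit
                delivered.append(msg)
                expected ^= 1
            # Otherwise it is a duplicate: not delivered, but still re-ACKed.
            if rng.random() < loss_rate:
                continue            # ACK lost: timer expires, retransmit
            acked = True            # ACK for `bit` arrived: next message
    return delivered, transmissions
```

Whatever the loss pattern, the receiver delivers each message exactly once and in order; only the number of transmissions grows with the loss rate.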

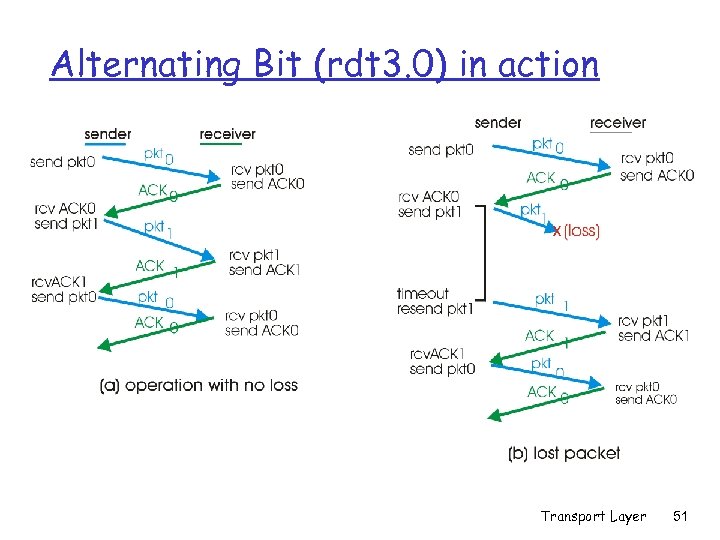

Alternating Bit (rdt 3.0) in action

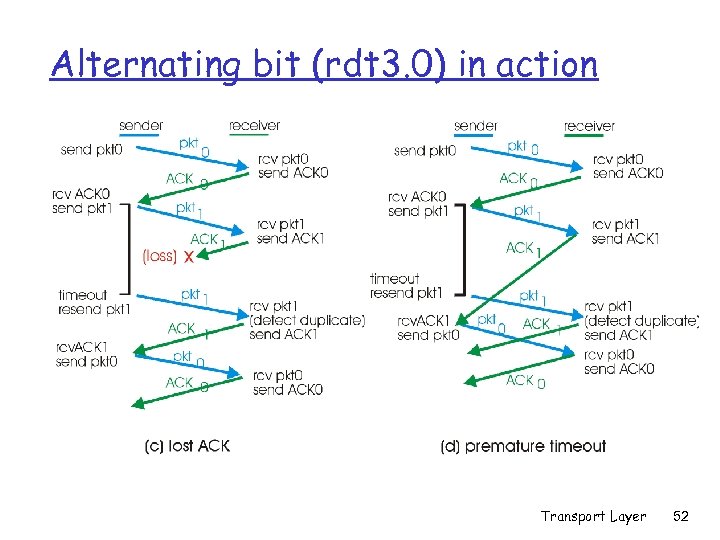

Alternating bit (rdt 3.0) in action

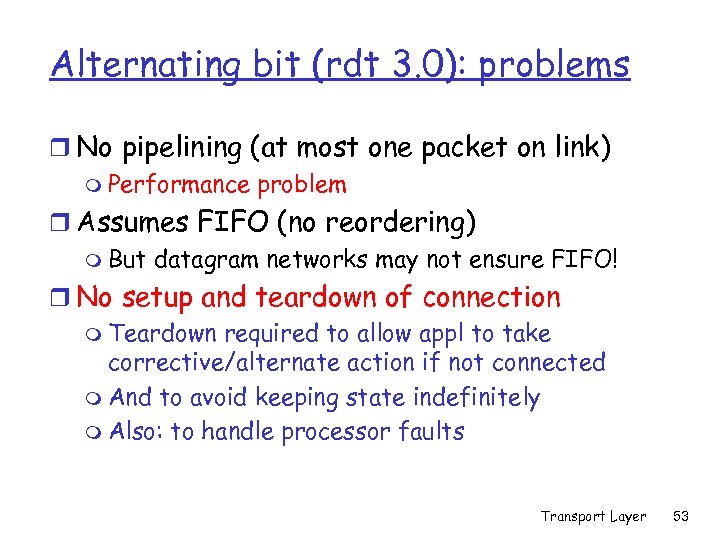

Alternating bit (rdt 3.0): problems

- No pipelining (at most one packet on the link)
  - Performance problem
- Assumes FIFO (no reordering)
  - But datagram networks may not ensure FIFO!
- No setup and teardown of connection
  - Teardown is required to allow the application to take corrective/alternate action if not connected
  - And to avoid keeping state indefinitely
  - Also: to handle processor faults

Rest of Transport Layer…

- TCP Overview
- TCP Reliability
- TCP Timeouts
- TCP Flow Control
- 3.6 Principles of congestion control
- 3.7 TCP congestion control
- TCP Fairness

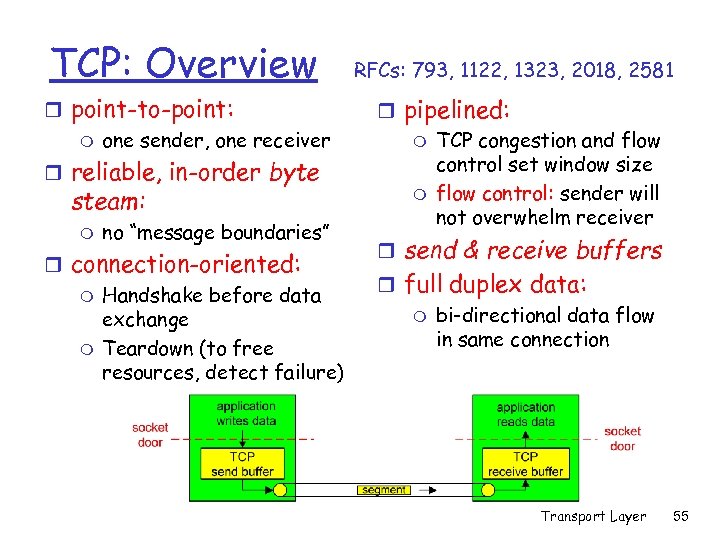

TCP: Overview

RFCs: 793, 1122, 1323, 2018, 2581

- point-to-point:
  - one sender, one receiver
- reliable, in-order byte stream:
  - no "message boundaries"
- connection-oriented:
  - Handshake before data exchange
  - Teardown (to free resources, detect failure)
- pipelined:
  - TCP congestion and flow control set window size
  - flow control: sender will not overwhelm receiver
- send & receive buffers
- full duplex data:
  - bi-directional data flow in same connection

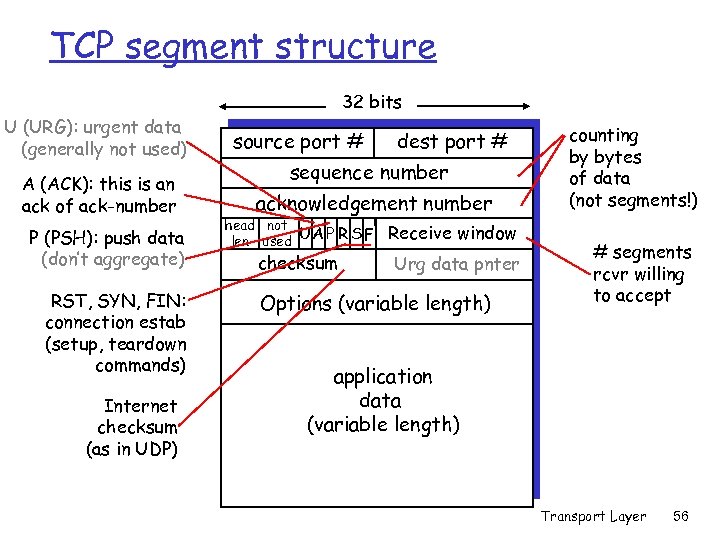

TCP segment structure

Header layout (each row is 32 bits wide):

1. source port # | dest port #
2. sequence number
3. acknowledgement number
4. head len | not used | U A P R S F | Receive window
5. checksum | Urg data pointer
6. Options (variable length)
7. application data (variable length)

Notes on the fields:

- sequence number, acknowledgement number: counting by bytes of data (not segments!)
- U (URG): urgent data (generally not used)
- A (ACK): this is an ack of ack-number
- P (PSH): push data (don't aggregate)
- RST, SYN, FIN: connection establishment (setup, teardown commands)
- checksum: Internet checksum (as in UDP)
- Receive window: # bytes rcvr willing to accept

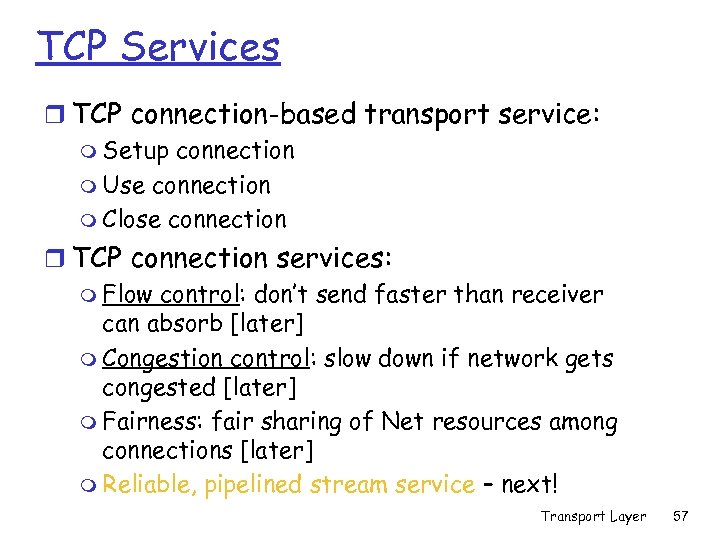

TCP Services

- TCP connection-based transport service:
  - Setup connection
  - Use connection
  - Close connection
- TCP connection services:
  - Flow control: don't send faster than receiver can absorb [later]
  - Congestion control: slow down if network gets congested [later]
  - Fairness: fair sharing of Net resources among connections [later]
  - Reliable, pipelined stream service: next!

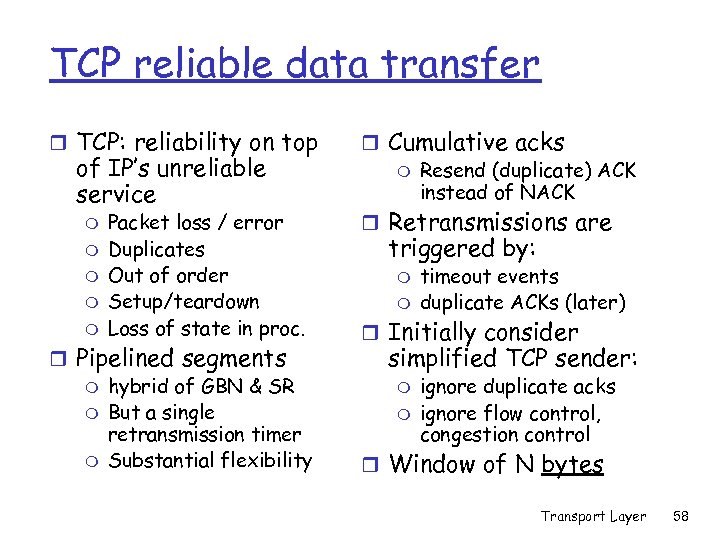

TCP reliable data transfer

- TCP: reliability on top of IP's unreliable service
  - Packet loss / error
  - Duplicates
  - Out of order
  - Setup/teardown
  - Loss of state in proc.
- Pipelined segments
  - hybrid of GBN & SR
  - But a single retransmission timer
  - Substantial flexibility
- Cumulative ACKs
  - Resend (duplicate) ACK instead of NACK
- Retransmissions are triggered by:
  - timeout events
  - duplicate ACKs (later)
- Initially consider a simplified TCP sender:
  - ignore duplicate ACKs
  - ignore flow control, congestion control
- Window of N bytes

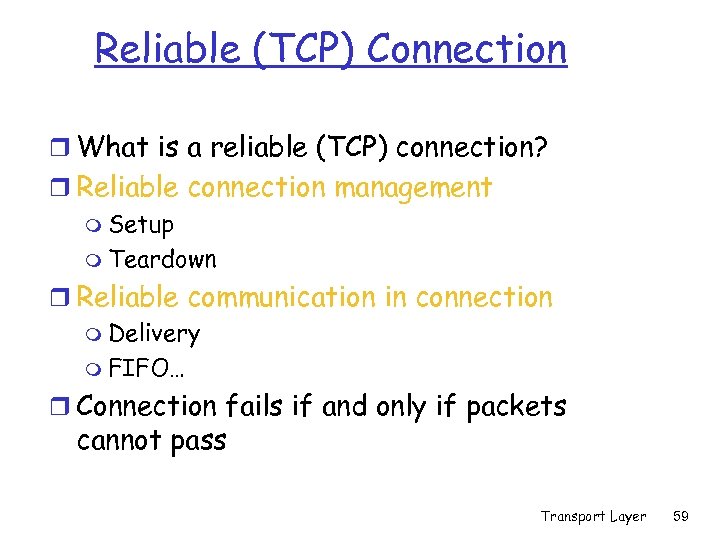

Reliable (TCP) Connection

- What is a reliable (TCP) connection?
- Reliable connection management
  - Setup
  - Teardown
- Reliable communication in connection
  - Delivery
  - FIFO…
- Connection fails if and only if packets cannot pass

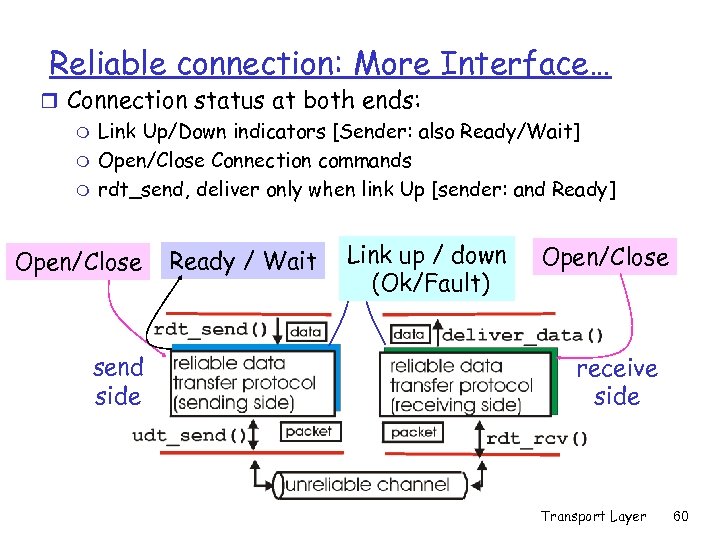

Reliable connection: More Interface…

- Connection status at both ends:
  - Link Up/Down indicators [Sender: also Ready/Wait]
  - Open/Close Connection commands
  - rdt_send, deliver only when link Up [sender: and Ready]

[Figure: send side and receive side, each with Open/Close commands and Link up / down (Ok/Fault) indicators; the send side also shows Ready / Wait.]

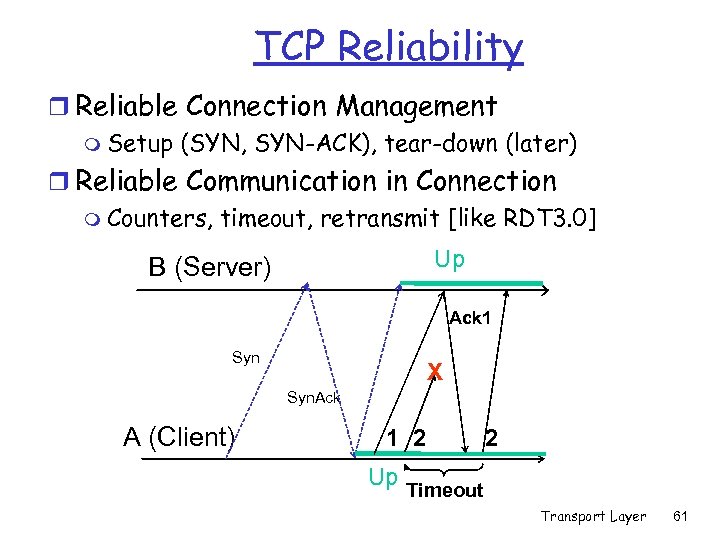

TCP Reliability

- Reliable Connection Management
  - Setup (SYN, SYN-ACK), tear-down (later)
- Reliable Communication in Connection
  - Counters, timeout, retransmit [like RDT 3.0]

[Figure: timeline between A (Client) and B (Server) showing Syn, Syn-Ack and Ack, a lost packet (X), a timeout, and both sides reaching Up.]

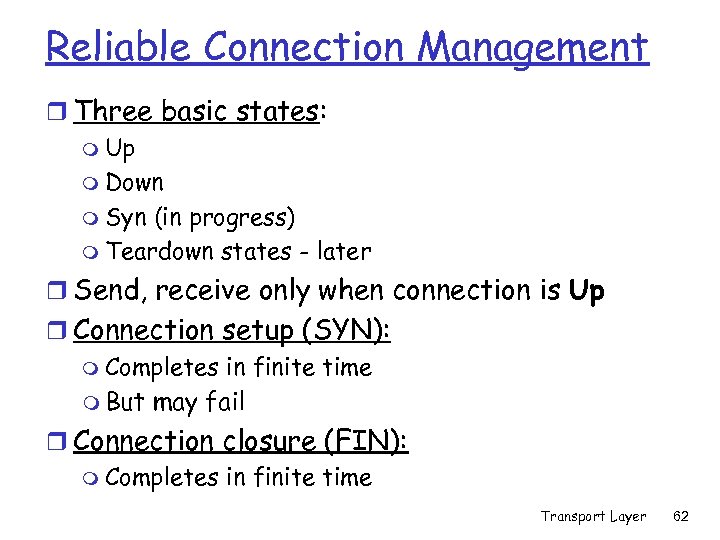

Reliable Connection Management

- Three basic states:
  - Up
  - Down
  - Syn (in progress)
  - Teardown states: later
- Send, receive only when connection is Up
- Connection setup (SYN):
  - Completes in finite time
  - But may fail
- Connection closure (FIN):
  - Completes in finite time

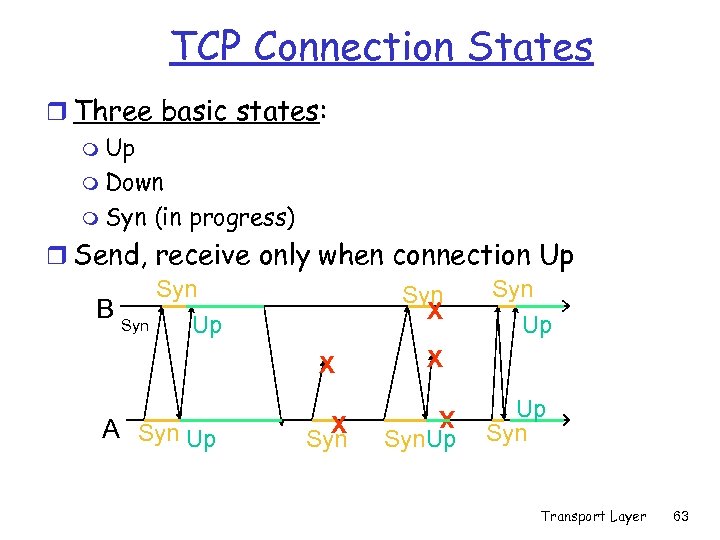

TCP Connection States

- Three basic states:
  - Up
  - Down
  - Syn (in progress)
- Send, receive only when connection is Up

[Figure: timelines of A and B alternating between Syn and Up periods; lost packets are marked X.]

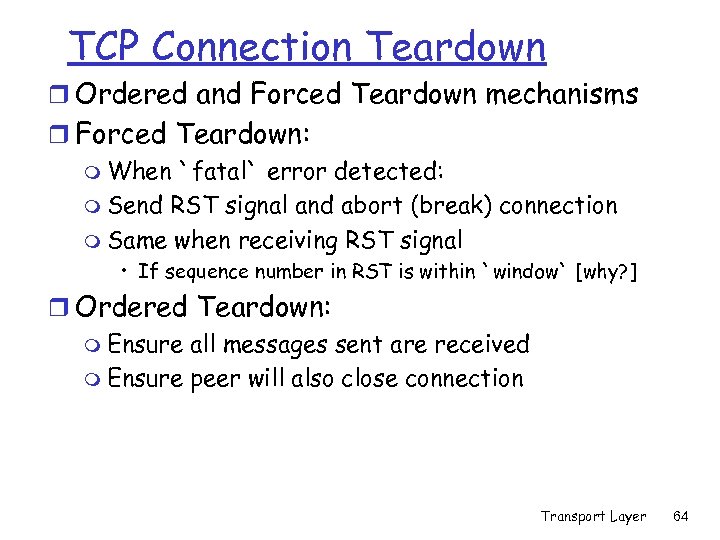

TCP Connection Teardown

- Ordered and Forced Teardown mechanisms
- Forced Teardown:
  - When a `fatal` error is detected:
  - Send RST signal and abort (break) connection
  - Same when receiving RST signal
    - If the sequence number in the RST is within the `window` [why?]
- Ordered Teardown:
  - Ensure all messages sent are received
  - Ensure peer will also close connection

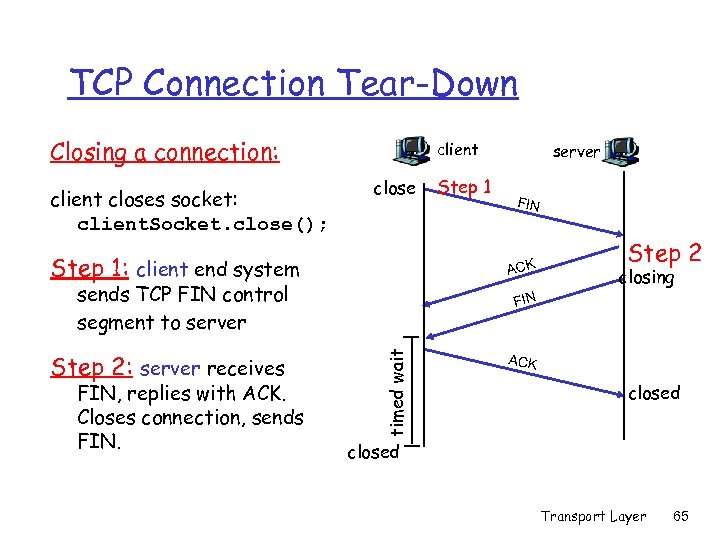

TCP Connection Tear-Down

Closing a connection: client closes socket: clientSocket.close();

- Step 1: client end system sends TCP FIN control segment to server.
- Step 2: server receives FIN, replies with ACK. Closes connection, sends FIN.

[Figure: client/server timeline. The client closes and sends FIN; the server replies with ACK, enters closing and sends its own FIN; the client ACKs and enters timed wait; both ends finish closed.]

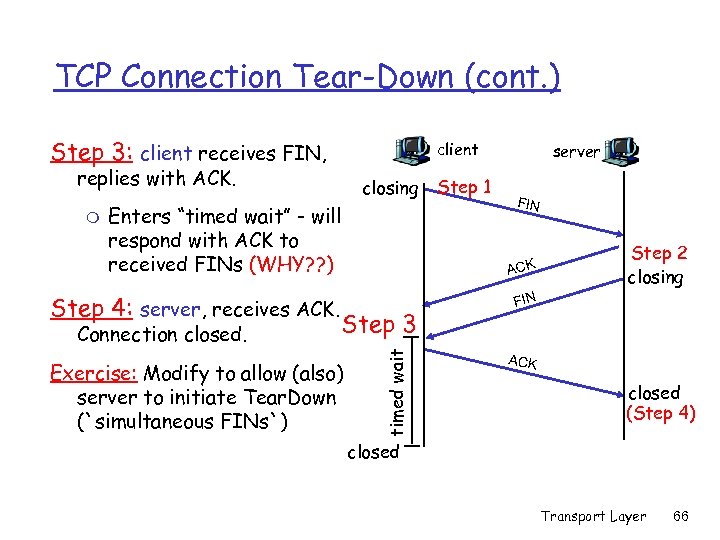

TCP Connection Tear-Down (cont.)

- Step 3: client receives FIN, replies with ACK.
  - Enters "timed wait": will respond with ACK to received FINs (WHY??)
- Step 4: server receives ACK. Connection closed.
- Exercise: modify to allow (also) the server to initiate teardown (`simultaneous FINs`)

[Figure: the same client/server timeline, with Steps 1 to 4 marked: FIN, ACK, FIN, ACK, timed wait at the client, closed at both ends.]

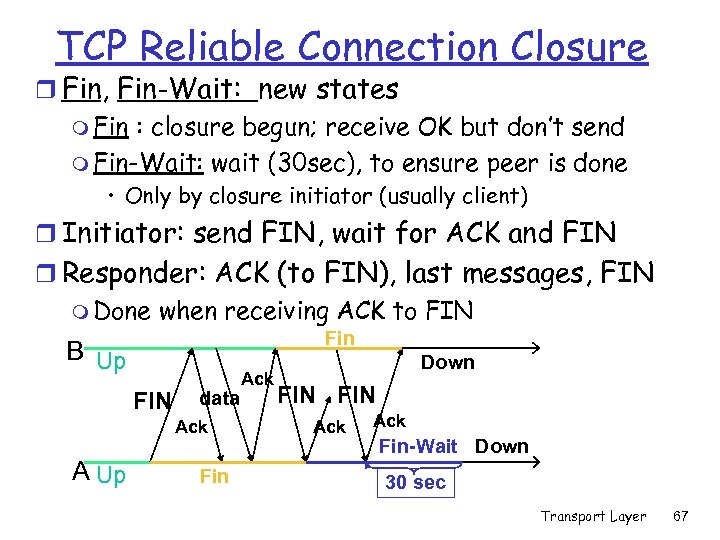

TCP Reliable Connection Closure

- Fin, Fin-Wait: new states
  - Fin: closure begun; receive OK but don't send
  - Fin-Wait: wait (30 sec), to ensure peer is done
    - Only by closure initiator (usually client)
- Initiator: send FIN, wait for ACK and FIN
- Responder: ACK (to FIN), last messages, FIN
  - Done when receiving ACK to FIN

[Figure: timeline between A and B showing FIN, Ack, data, FIN and Ack, with the states Up, Fin, Fin-Wait (30 sec) and Down.]

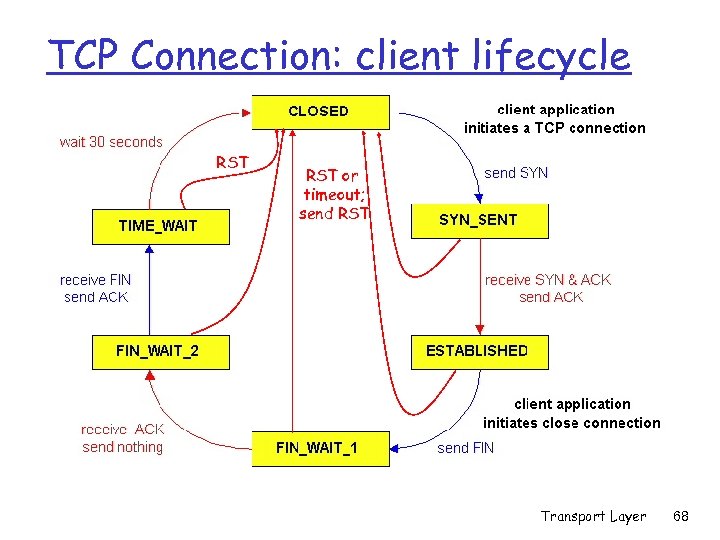

TCP Connection: client lifecycle

[Figure: client state diagram; one transition is labelled "RST or timeout; send RST".]

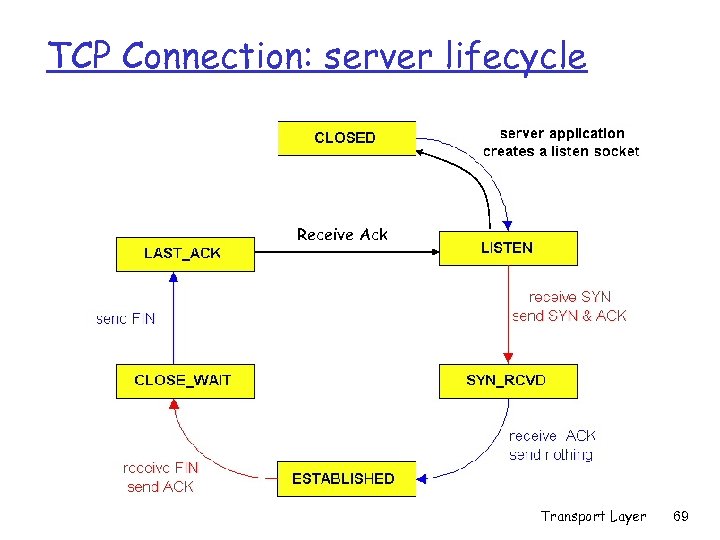

TCP Connection: server lifecycle

[Figure: server state diagram; one transition is labelled "Receive Ack".]

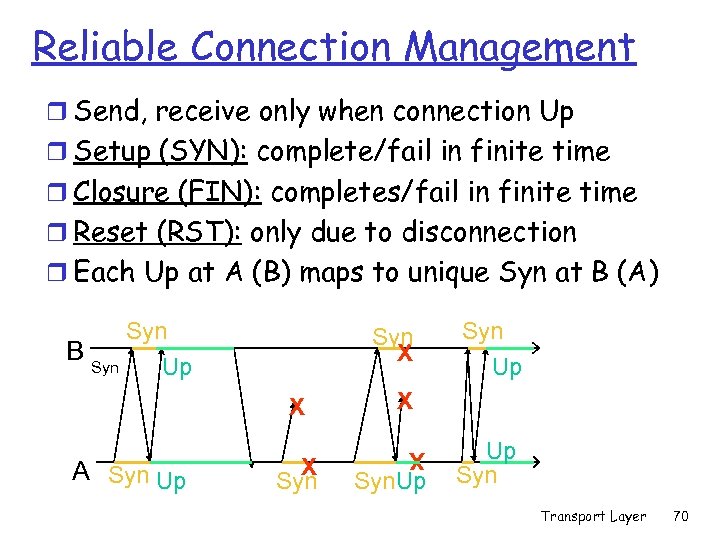

Reliable Connection Management

- Send, receive only when connection is Up
- Setup (SYN): completes or fails in finite time
- Closure (FIN): completes or fails in finite time
- Reset (RST): only due to disconnection
- Each Up at A (B) maps to a unique Syn at B (A)

[Figure: timelines of A and B alternating between Syn and Up periods; lost packets are marked X.]

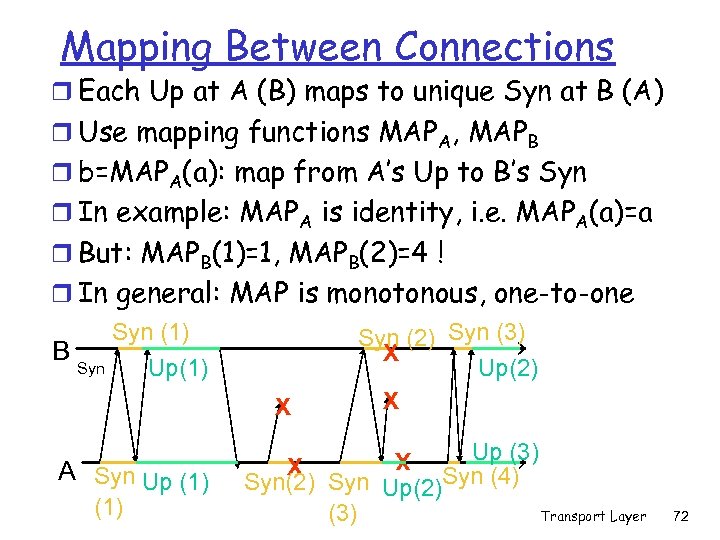

Mapping Between Connections

- Each Up at A (B) maps to a unique Syn at B (A)
- Use mapping functions MAPA, MAPB
- b = MAPA(a): map from A's Up to B's Syn
- In the example: MAPA is the identity, i.e. MAPA(a) = a
- But: MAPB(1) = 1, MAPB(2) = 4!
- In general: MAP is monotonic and one-to-one

[Figure: timelines of A and B with numbered Syn and Up periods, e.g. Syn (1), Up (1), Syn (2), Up (2); lost packets are marked X.]

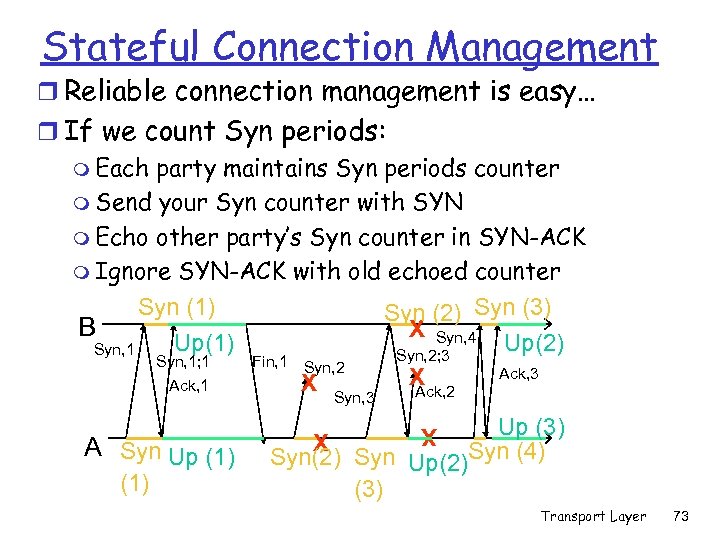

Stateful Connection Management

- Reliable connection management is easy…
- If we count Syn periods:
  - Each party maintains a Syn-period counter
  - Send your Syn counter with SYN
  - Echo the other party's Syn counter in SYN-ACK
  - Ignore a SYN-ACK with an old echoed counter

[Figure: the numbered Syn/Up timelines of A and B, with messages labelled by counters, e.g. "Syn, 1", "Syn, 1; 1", "Ack, 1"; lost packets are marked X.]

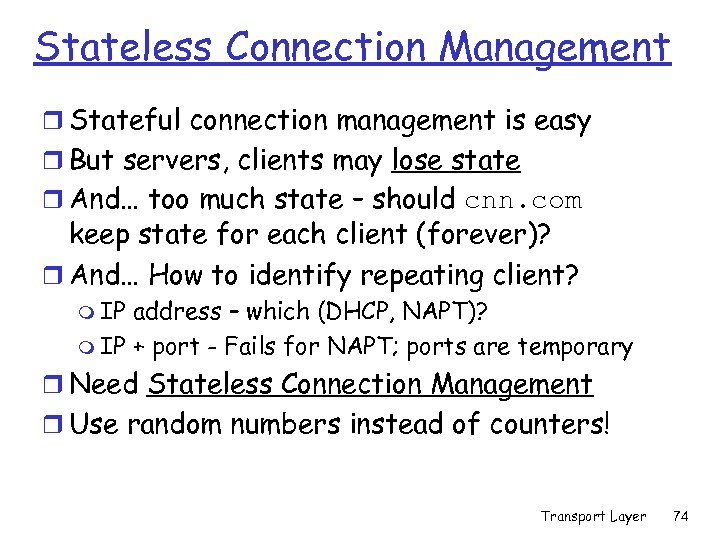

Stateless Connection Management

- Stateful connection management is easy
- But servers, clients may lose state
- And… too much state: should cnn.com keep state for each client (forever)?
- And… how to identify a repeating client?
  - IP address: which (DHCP, NAPT)?
  - IP + port: fails for NAPT; ports are temporary
- Need Stateless Connection Management
- Use random numbers instead of counters!

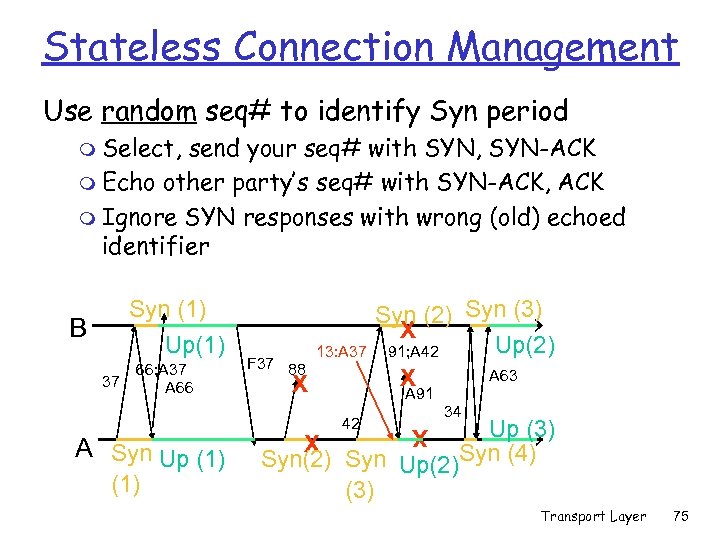

Stateless Connection Management

Use a random seq# to identify each Syn period:

- Select, send your seq# with SYN, SYN-ACK
- Echo the other party's seq# with SYN-ACK, ACK
- Ignore SYN responses with a wrong (old) echoed identifier

[Figure: the numbered Syn/Up timelines of A and B, with messages labelled by random numbers, e.g. "37", "66; A 37", "A 66"; lost packets are marked X.]

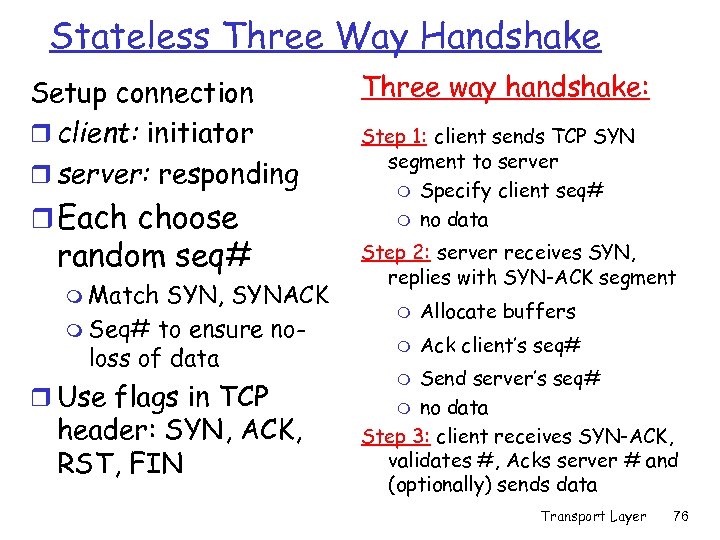

Stateless Three Way Handshake

Setup connection:

- client: initiator
- server: responding
- Each chooses a random seq#
  - Match SYN, SYN-ACK
  - Seq# to ensure no loss of data
- Use flags in TCP header: SYN, ACK, RST, FIN

Three way handshake:

- Step 1: client sends TCP SYN segment to server
  - Specify client seq#
  - no data
- Step 2: server receives SYN, replies with SYN-ACK segment
  - Allocate buffers
  - Ack client's seq#
  - Send server's seq#
  - no data
- Step 3: client receives SYN-ACK, validates #, Acks server # and (optionally) sends data
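The three steps can be sketched as message-building functions in Python. This is a simplified illustration, not a TCP implementation: segments are plain dictionaries, the function names are hypothetical, and it follows the slides' convention that the ACK echoes the peer's seq# unchanged (real TCP acknowledges the initial sequence number plus one).

```python
import secrets


def new_seq():
    """Pick a random 32-bit initial sequence number."""
    return secrets.randbits(32)


def client_syn():
    """Step 1: SYN carrying the client's random seq#, no data."""
    return {"flags": {"SYN"}, "seq": new_seq()}


def server_syn_ack(syn):
    """Step 2: SYN-ACK echoing the client's seq# and carrying the server's own."""
    return {"flags": {"SYN", "ACK"}, "seq": new_seq(), "ack": syn["seq"]}


def client_ack(my_syn, syn_ack):
    """Step 3: validate the echoed seq#; return the ACK, or None to ignore."""
    if syn_ack["flags"] != {"SYN", "ACK"} or syn_ack["ack"] != my_syn["seq"]:
        return None  # response to an old SYN (or not a SYN-ACK at all): ignore
    return {"flags": {"ACK"}, "seq": my_syn["seq"], "ack": syn_ack["seq"]}
```

Because the client only accepts a SYN-ACK that echoes the number from its current SYN, a delayed response belonging to an earlier Syn period is dropped without either side keeping per-peer counters.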

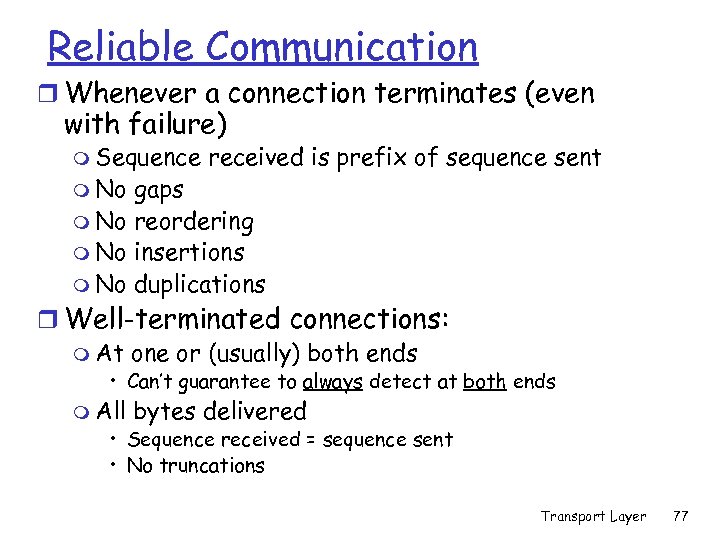

Reliable Communication

- Whenever a connection terminates (even with failure):
  - Sequence received is a prefix of the sequence sent
  - No gaps
  - No reordering
  - No insertions
  - No duplications
- Well-terminated connections:
  - At one or (usually) both ends
    - Can't guarantee to always detect at both ends
  - All bytes delivered
    - Sequence received = sequence sent
    - No truncations

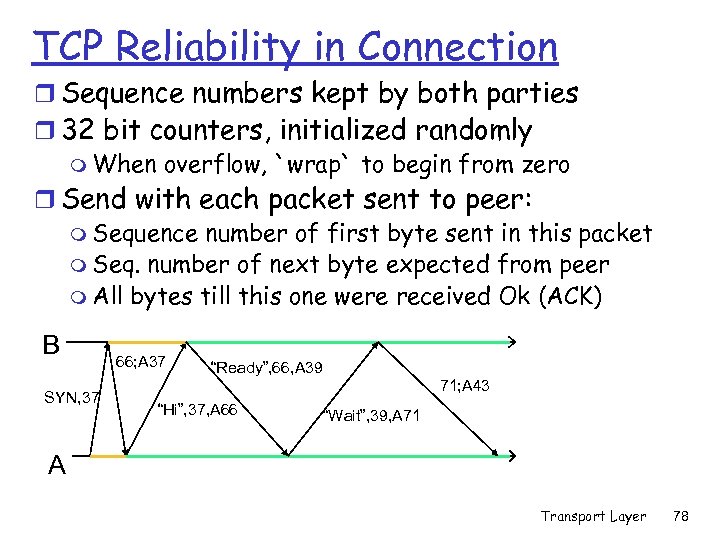

TCP Reliability in Connection

- Sequence numbers kept by both parties
- 32-bit counters, initialized randomly
  - On overflow, `wrap` to begin from zero
- Send with each packet sent to the peer:
  - Sequence number of the first byte sent in this packet
  - Seq. number of the next byte expected from the peer
  - All bytes up to this one were received OK (ACK)

Example exchange between A and B (from the figure; "A n" means "ACK n"):

1. A to B: SYN, 37
2. B to A: 66; A 37
3. A to B: "Hi", 37, A 66
4. B to A: "Ready", 66, A 39
5. A to B: "Wait", 39, A 71
6. B to A: 71; A 43
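The numbers in the example exchange follow from counting bytes modulo 2^32. A minimal Python sketch, assuming one byte per character and using the hypothetical helper name `next_seq`:

```python
SEQ_MODULUS = 2**32  # sequence numbers are 32-bit counters


def next_seq(seq, payload):
    """Sequence number of the byte that follows `payload`, with wrap-around."""
    return (seq + len(payload)) % SEQ_MODULUS


# A starts at 37: "Hi" occupies bytes 37-38, so B acknowledges 39.
a_after_hi = next_seq(37, b"Hi")
# A then sends "Wait" starting at 39, so B acknowledges 43.
a_after_wait = next_seq(a_after_hi, b"Wait")
# B starts at 66: "Ready" occupies bytes 66-70, so A acknowledges 71.
b_after_ready = next_seq(66, b"Ready")
```

The same function covers the overflow case: a two-byte payload starting at 2^32 - 1 ends with the next sequence number equal to 1.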

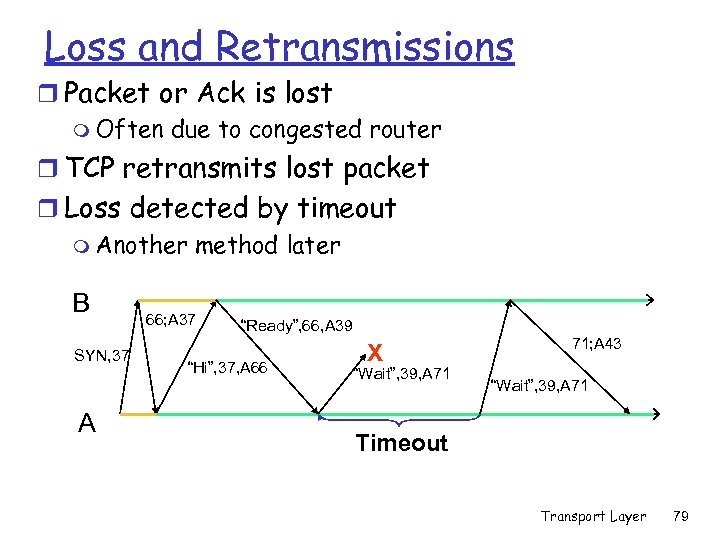

Loss and Retransmissions

- Packet or ACK is lost
  - Often due to a congested router
- TCP retransmits the lost packet
- Loss detected by timeout
  - Another method later

[Figure: the exchange SYN, 37 / 66; A 37 / "Hi", 37, A 66 / "Ready", 66, A 39 between A and B; the packet "Wait", 39, A 71 is lost (X), A times out and retransmits "Wait", 39, A 71, and B answers 71; A 43.]

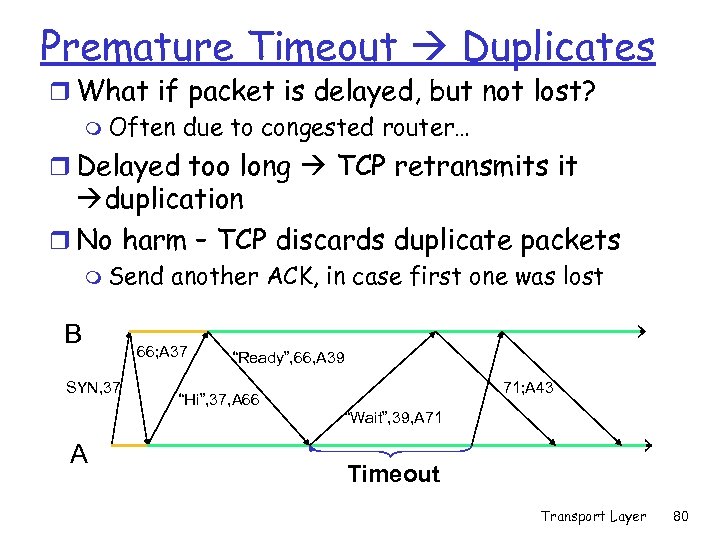

Premature Timeout → Duplicates

- What if a packet is delayed, but not lost?
  - Often due to a congested router…
- Delayed too long → TCP retransmits it → duplication
- No harm: TCP discards duplicate packets
  - Send another ACK, in case the first one was lost

[Figure: the exchange SYN, 37 / 66; A 37 / "Hi", 37, A 66 / "Ready", 66, A 39 / "Wait", 39, A 71 / 71; A 43 between A and B, with a timeout at A causing a retransmission.]

![Stop-and-wait (RDT 3.0): inefficiency](https://present5.com/presentation/4699b155894bd7a24a2f1872aaae267a/image-69.jpg)

Stop-and-wait [RDT 3.0]: inefficiency

[Figure: sender/receiver timeline.]

- Sender: first packet bit transmitted, t = 0
- Sender: last packet bit transmitted, t = L / R
- Receiver: first packet bit arrives
- Receiver: last packet bit arrives, send ACK
- Sender: ACK arrives, send next packet, t = RTT + L / R

Pipeline / Window for Efficiency

- Protocol as described is inefficient:
  - Transmission rate = 10 Mb/sec
  - Max segment size (MSS) = 1250 B = 10,000 bits
  - Round Trip Time (RTT) = 100 msec (includes transmit, propagation, queuing)
  - File size = 125 KB = 1 million bits = 100 segments
  - Time to send = 100 segments × 100 msec = 10 sec
- Idea: send the file without waiting for ACK?
  - Transmission time = (1 Mb)/(10 Mb/sec) = 100 msec
  - Total time ≈ transmission time + RTT = 200 msec
  - Send 100 segments in pipeline / window
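The arithmetic on this slide can be checked with a short Python calculation. The function names are hypothetical, and the pipelined estimate assumes that the slide's 200 msec figure means transmission time plus one RTT, which is an interpretation and not something the slide states outright.

```python
def stop_and_wait_time(file_bits, segment_bits, rtt_s):
    """One segment per round trip (the slide's RTT already includes transmission)."""
    segments = -(-file_bits // segment_bits)  # ceiling division
    return segments * rtt_s


def pipelined_time(file_bits, rate_bps, rtt_s):
    """Send everything back to back, then wait one RTT for the last ACK."""
    return file_bits / rate_bps + rtt_s


FILE_BITS = 1_000_000      # 125 KB
SEGMENT_BITS = 10_000      # MSS = 1250 B
RATE_BPS = 10_000_000      # 10 Mb/sec
RTT_S = 0.100              # 100 msec

slow = stop_and_wait_time(FILE_BITS, SEGMENT_BITS, RTT_S)   # about 10 seconds
fast = pipelined_time(FILE_BITS, RATE_BPS, RTT_S)           # about 0.2 seconds
speedup = slow / fast                                       # about 50x
```

Under these assumptions the pipelined transfer is roughly fifty times faster, because the link is busy for 100 msec of the 200 msec instead of 1 msec out of every 100 msec.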

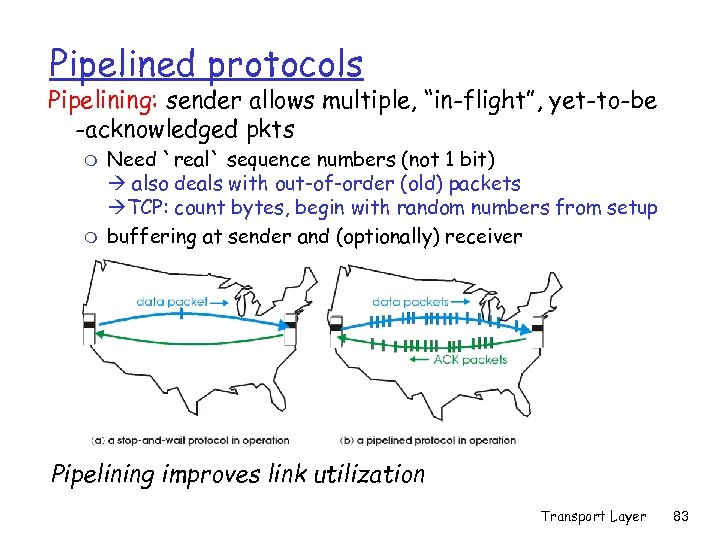

Pipelined protocols

Pipelining: sender allows multiple, "in-flight", yet-to-be-acknowledged pkts

- Need `real` sequence numbers (not 1 bit)
  - also deals with out-of-order (old) packets
  - TCP: count bytes, begin with random numbers from setup
- buffering at sender and (optionally) receiver

Pipelining improves link utilization.

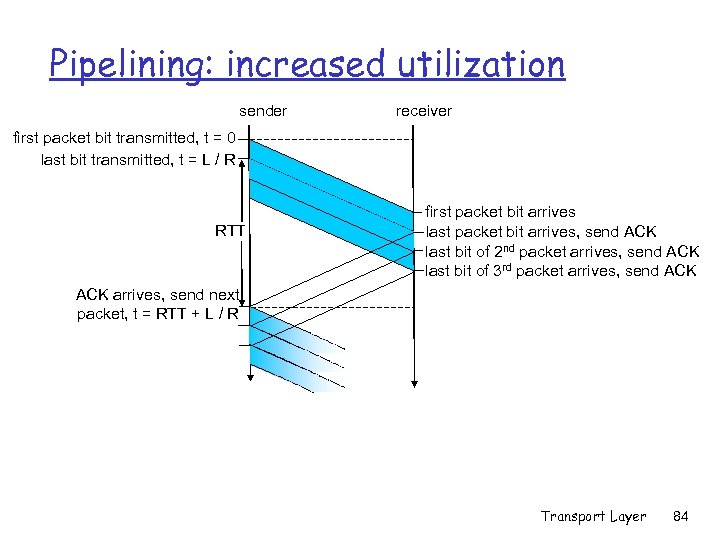

Pipelining: increased utilization

[Figure: sender/receiver timeline with three packets in flight.]

- Sender: first packet bit transmitted, t = 0
- Sender: last bit transmitted, t = L / R
- Receiver: first packet bit arrives
- Receiver: last packet bit arrives, send ACK
- Receiver: last bit of 2nd packet arrives, send ACK
- Receiver: last bit of 3rd packet arrives, send ACK
- Sender: ACK arrives, send next packet, t = RTT + L / R

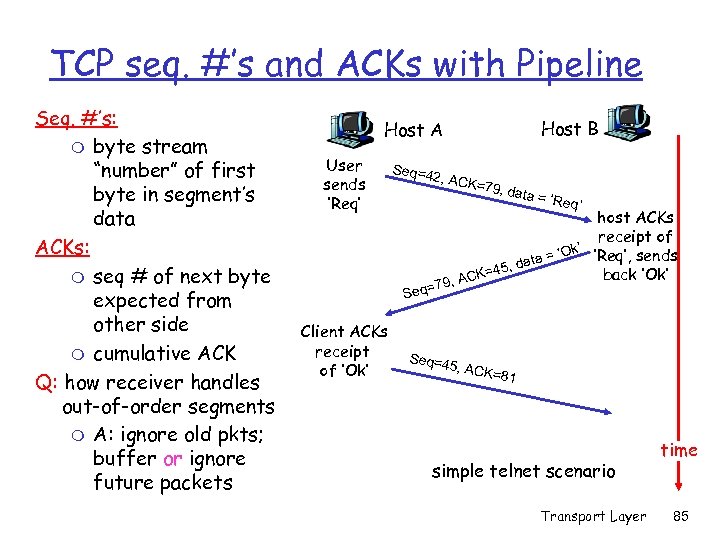

TCP seq. #'s and ACKs with Pipeline

- Seq. #'s:
  - byte stream "number" of the first byte in the segment's data
- ACKs:
  - seq # of the next byte expected from the other side
  - cumulative ACK
- Q: how does the receiver handle out-of-order segments?
  - A: ignore old pkts; buffer or ignore future packets

Simple telnet scenario (from the figure):

1. User at Host A sends 'Req': Seq=42, ACK=79, data = 'Req'
2. Host B ACKs receipt of 'Req', sends back 'Ok': Seq=79, ACK=45, data = 'Ok'
3. Host A (client) ACKs receipt of 'Ok': Seq=45, ACK=81

TCP: retransmission scenarios

- Lost ACK scenario: Host A sends Seq=92, 8 bytes data. Host B replies ACK=100, which is lost (X). The Seq=92 timer expires and A resends Seq=92, 8 bytes data. B again replies ACK=100, and SendBase = 100.
- Premature timeout: Host A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data. B replies ACK=100 and ACK=120, but the Seq=92 timer expires before the ACKs arrive, so A resends Seq=92, 8 bytes data. SendBase moves to 100 and then to 120; B answers the retransmission with ACK=120.

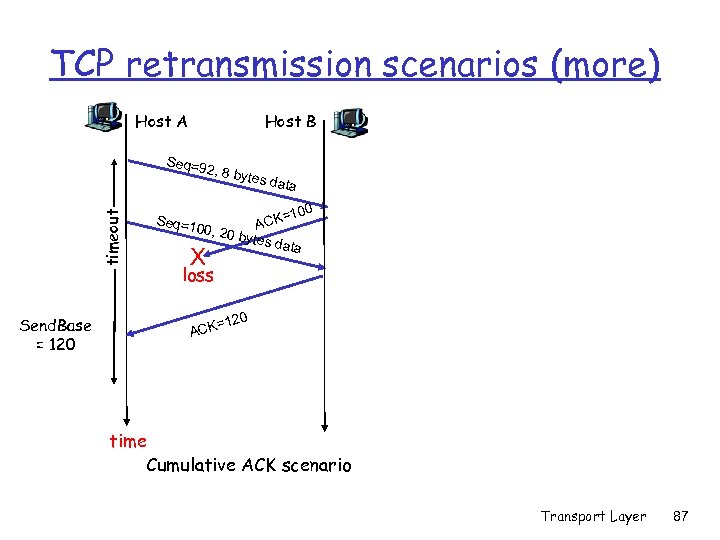

TCP retransmission scenarios (more)

- Cumulative ACK scenario: Host A sends Seq=92, 8 bytes data, then Seq=100, 20 bytes data. Host B's ACK=100 is lost (X), but ACK=120 arrives before the timeout, so SendBase = 120 and nothing is retransmitted.
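The cumulative ACK scenario can be traced with a small Python sketch of the simplified sender. It assumes no timers, no sequence-number wrap-around, and hypothetical names (`SimplifiedSender`, `on_ack`); it only shows how one later ACK covers an earlier lost one.

```python
class SimplifiedSender:
    """Tracks SendBase and the unacknowledged segments (illustrative only)."""

    def __init__(self, send_base):
        self.send_base = send_base   # oldest unacknowledged byte
        self.next_seq = send_base    # sequence number for the next new byte
        self.unacked = []            # list of (seq, data) still in flight

    def send(self, data):
        self.unacked.append((self.next_seq, data))
        self.next_seq += len(data)

    def on_ack(self, y):
        """Cumulative ACK: every byte before y has been received."""
        if y > self.send_base:
            self.send_base = y
            # Keep only segments that still contain unacknowledged bytes.
            self.unacked = [(s, d) for s, d in self.unacked if s + len(d) > y]
        # An ACK at or below SendBase carries no new information here.


sender = SimplifiedSender(send_base=92)
sender.send(b"x" * 8)     # Seq=92, 8 bytes
sender.send(b"y" * 20)    # Seq=100, 20 bytes
sender.on_ack(120)        # ACK=100 was lost; ACK=120 covers both segments
```

After the single ACK=120, `sender.send_base` is 120 and `sender.unacked` is empty, so no retransmission is needed even though ACK=100 never arrived.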

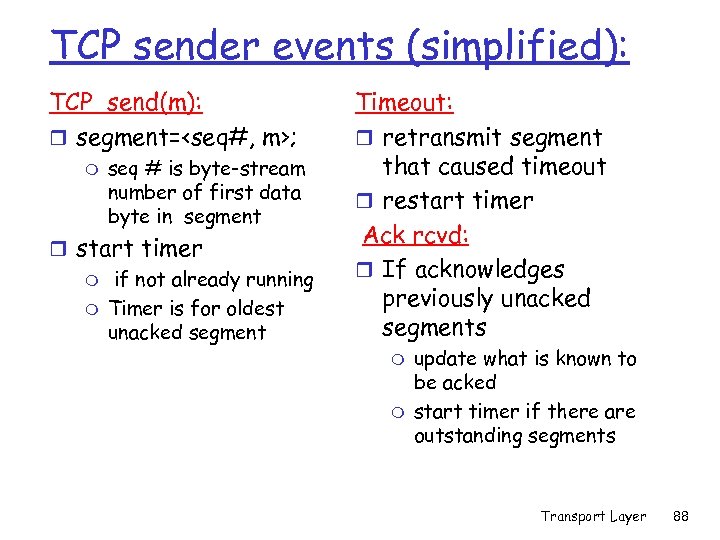

TCP sender events (simplified):

TCP_send(m): segment=

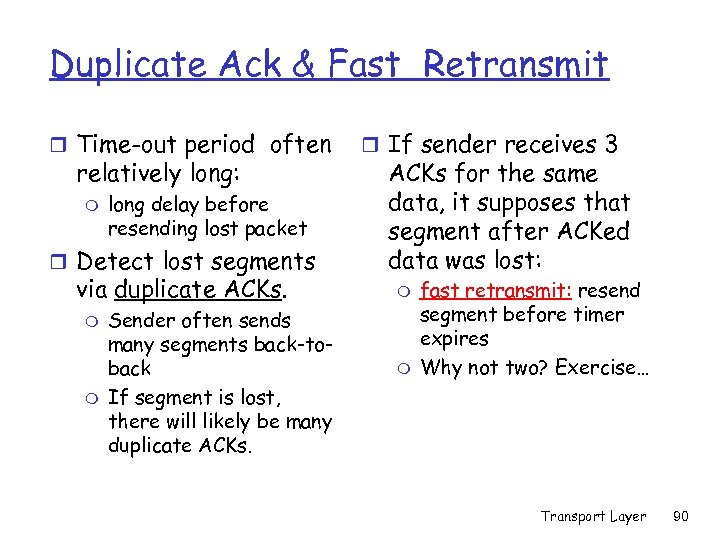

Duplicate Ack & Fast Retransmit

- Time-out period is often relatively long:
  - long delay before resending a lost packet
- Detect lost segments via duplicate ACKs.
  - Sender often sends many segments back-to-back
  - If a segment is lost, there will likely be many duplicate ACKs.
- If the sender receives 3 duplicate ACKs for the same data, it supposes that the segment after the ACKed data was lost:
  - fast retransmit: resend the segment before the timer expires
  - Why not two? Exercise…

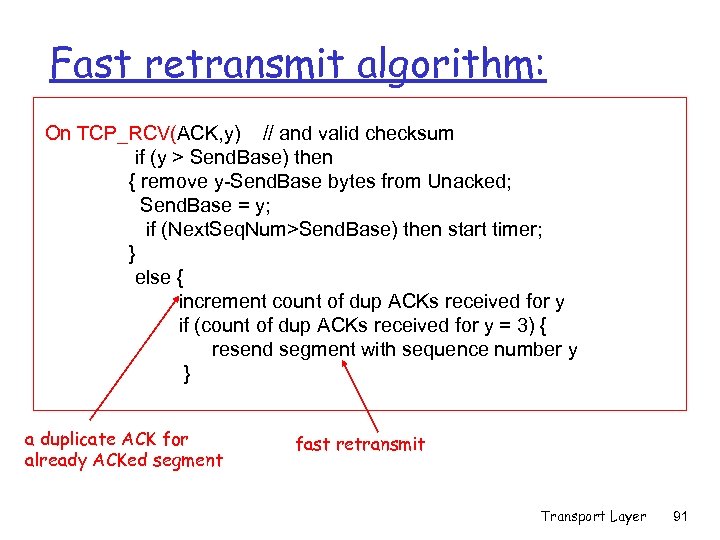

Fast retransmit algorithm:

    On TCP_RCV(ACK, y)   // and valid checksum
      if (y > SendBase) then {
        remove y - SendBase bytes from Unacked;
        SendBase = y;
        if (NextSeqNum > SendBase) then start timer;
      } else {
        // a duplicate ACK for an already ACKed segment
        increment count of dup ACKs received for y;
        if (count of dup ACKs received for y = 3) {
          // fast retransmit
          resend segment with sequence number y;
        }
      }
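The ACK-handling rule above can be turned into a runnable Python sketch. The class and attribute names are hypothetical, the retransmission timer is left out, sequence-number wrap-around is ignored, and "resend" is modelled by recording the sequence number in a list; treat it as one possible reading of the pseudo-code, not as TCP's actual implementation.

```python
class FastRetransmitSender:
    """ACK handling with a duplicate-ACK counter (timer omitted for brevity)."""

    DUP_THRESHOLD = 3

    def __init__(self, send_base, segments):
        self.send_base = send_base        # oldest unacknowledged byte
        self.segments = dict(segments)    # seq -> data, sent but not yet ACKed
        self.dup_acks = 0                 # duplicate ACKs seen for send_base
        self.retransmitted = []           # seq numbers that were fast-retransmitted

    def on_ack(self, y):
        if y > self.send_base:
            # New data acknowledged: advance SendBase and drop covered segments.
            self.send_base = y
            self.dup_acks = 0
            self.segments = {s: d for s, d in self.segments.items()
                             if s + len(d) > y}
        elif y == self.send_base:
            # Duplicate ACK: the receiver is still waiting for byte y.
            self.dup_acks += 1
            if self.dup_acks == self.DUP_THRESHOLD and y in self.segments:
                self.retransmitted.append(y)   # resend before the timer expires
```

For instance, with segments at 92, 100, 120 and 130 in flight and the segment at 100 lost, the first ACK=100 advances SendBase, and the third repeat of ACK=100 triggers a retransmission of the segment starting at 100.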

![TCP ACK generation (RFC 1122, RFC 2581)](https://present5.com/presentation/4699b155894bd7a24a2f1872aaae267a/image-79.jpg)

TCP ACK generation [RFC 1122, RFC 2581]

| Event at Receiver | TCP Receiver action |
| --- | --- |
| Arrival of in-order segment with expected seq #. All data up to expected seq # already ACKed | Delayed ACK. Wait 200 ms (up to 500 ms) for next segment. If no next segment, send ACK |
| Arrival of in-order segment with expected seq #. One other segment has ACK pending | Immediately send single cumulative ACK, ACKing both in-order segments |
| Arrival of higher-than-expected sequence number (gap) | Immediately send duplicate ACK, indicating seq. # of next expected byte; usually, buffer data |

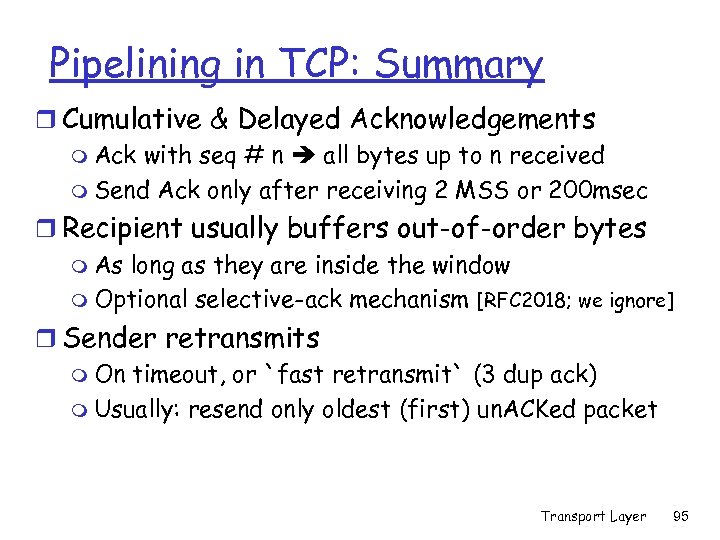

Pipelining in TCP: Summary

- Cumulative & Delayed Acknowledgements
  - ACK with seq # n ⇒ all bytes before n were received
  - Send ACK only after receiving 2 MSS or after 200 msec
- Recipient usually buffers out-of-order bytes
  - As long as they are inside the window
  - Optional selective-ack mechanism [RFC 2018; we ignore]
- Sender retransmits
  - On timeout, or `fast retransmit` (3 dup ACKs)
  - Usually: resend only the oldest (first) unACKed packet

Rest of Transport Layer…

- TCP Overview
- TCP Reliability
- TCP Flow Control
- 3.6 Principles of congestion control
- TCP Timeouts
- 3.7 TCP congestion control
- TCP Fairness

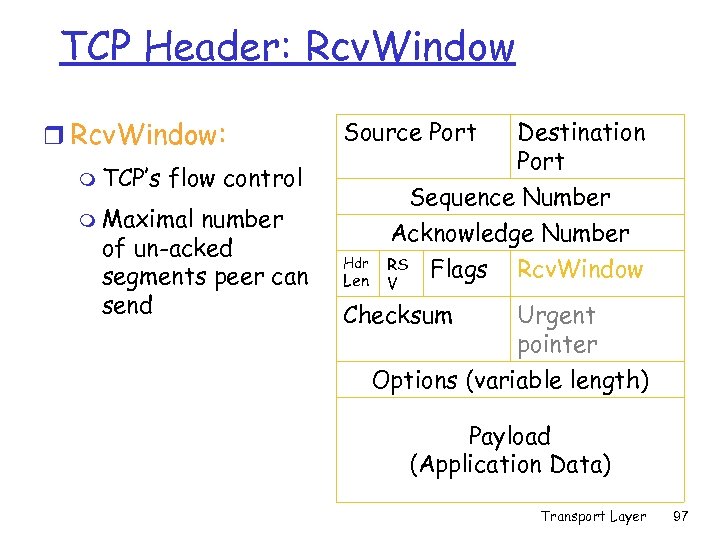

TCP Header: RcvWindow

- RcvWindow:
  - TCP's flow control
  - Maximal number of un-acked segments peer can send

Header fields (from the slide figure):

- Source Port, Destination Port
- Sequence Number
- Acknowledge Number
- Hdr Len, RSV, Flags, RcvWindow
- Checksum, Urgent pointer
- Options (variable length)
- Payload (Application Data)

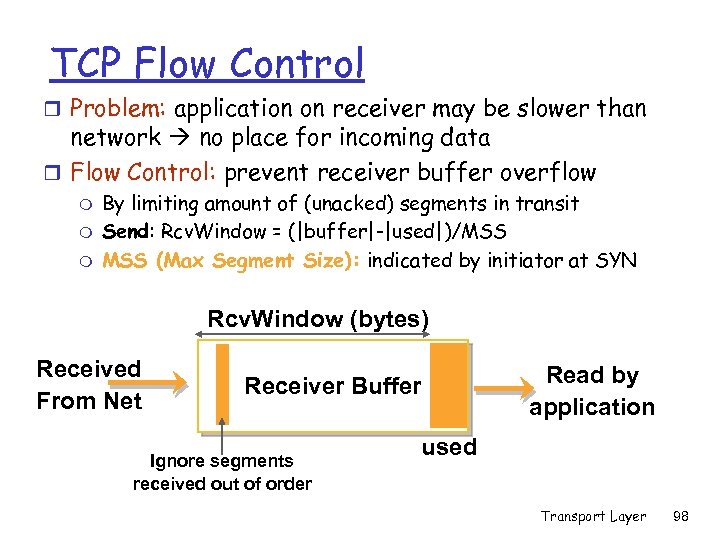

TCP Flow Control

- Problem: application on receiver may be slower than network → no place for incoming data
- Flow control: prevent receiver buffer overflow
  - By limiting the amount of (unacked) segments in transit
  - Send: RcvWindow = (|buffer| - |used|)/MSS
  - MSS (Max Segment Size): indicated by initiator at SYN
- Ignore segments received out of order

Figure labels: data received from net → receiver buffer (used part, RcvWindow in bytes) → read by application.
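The window formula on this slide can be written out directly. The sketch below follows the slide's simplified model, where the window is counted in whole segments; real TCP advertises the window in bytes. The function names and the example buffer sizes are assumptions made for illustration.

```python
def advertised_window(buffer_bytes: int, used_bytes: int, mss: int) -> int:
    """RcvWindow in whole segments: (|buffer| - |used|) / MSS, rounded down."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    free_bytes = max(buffer_bytes - used_bytes, 0)
    return free_bytes // mss  # a partial segment does not fit, so round down


def sender_may_send(unacked_segments: int, rcv_window: int) -> bool:
    """Flow control check: keep the number of unacked segments within RcvWindow."""
    return unacked_segments < rcv_window


# Example values (assumed): 64,000-byte buffer, 16,000 bytes not yet read, MSS of 1,000 bytes.
window = advertised_window(64_000, 16_000, 1_000)
print(window, sender_may_send(47, window), sender_may_send(48, window))
```

As the application reads data, `used_bytes` shrinks and the advertised window grows again.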

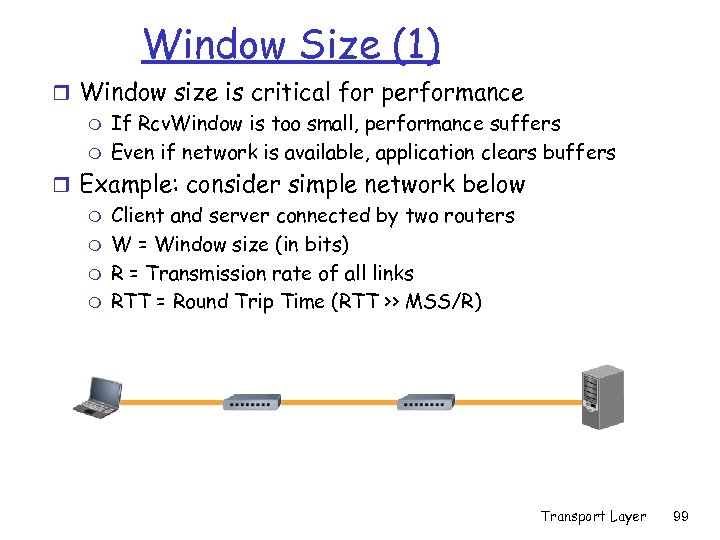

Window Size (1)

- Window size is critical for performance
  - If RcvWindow is too small, performance suffers
  - Even if the network is available and the application clears its buffers
- Example: consider simple network below
  - Client and server connected by two routers
  - W = Window size (in bits)
  - R = Transmission rate of all links
  - RTT = Round Trip Time (RTT >> MSS/R)

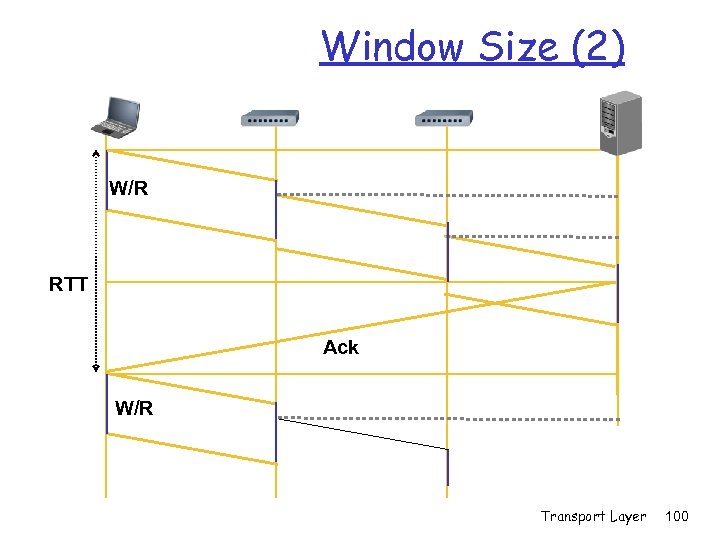

Window Size (2)

Figure (timing diagram) labels: W/R, RTT, Ack, W/R.

![Average Rate](https://present5.com/presentation/4699b155894bd7a24a2f1872aaae267a/image-86.jpg)

Average Rate

- Average Rate ≤ [R*(W/R) + 0*(RTT - W/R)]/RTT = W/RTT
- Average Rate ≤ min(R, W/RTT)

Figure labels: W/R, Ack, W/R.
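The bound can be checked numerically by following the same reasoning: the sender transmits at rate R for W/R seconds, then sits idle until the Ack returns. The function name and the example numbers below are assumptions chosen for illustration.

```python
def average_rate(window_bits: float, link_rate_bps: float, rtt_s: float) -> float:
    """Upper bound on the average rate for window W, link rate R and round-trip time RTT."""
    send_time = window_bits / link_rate_bps  # W/R: time to push one window onto the link
    if send_time >= rtt_s:
        # Acks come back before the window is exhausted: the link never idles.
        return link_rate_bps
    idle_time = rtt_s - send_time            # RTT - W/R: waiting for the Ack
    return (link_rate_bps * send_time + 0 * idle_time) / rtt_s  # equals W/RTT


# Assumed example: R = 1 Mbps, RTT = 0.5 s.
print(average_rate(100_000, 1e6, 0.5))    # small window: limited by W/RTT
print(average_rate(1_000_000, 1e6, 0.5))  # large window: limited by R
```

Both branches together give min(R, W/RTT).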

Optimal Receiver Window

- Average Rate = min{R, W/RTT}
- Flow control is not the bottleneck as long as W ≥ R * RTT
- ReceiverBuffer ≥ Bandwidth x Delay
- If the window is too small: slows down the connection
- Fat links: huge Bandwidth x Delay (e.g. satellite)
- Aggressive flow control: send RcvWindow larger than available buffers
  - By estimating drain rate; risk of buffer overflow
  - Works well when delay does not change much
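To get a feel for the bandwidth x delay requirement, the following sketch computes the smallest window that keeps flow control from being the bottleneck. The function name and the link figures are illustrative assumptions, not measurements.

```python
def min_window_bits(link_rate_bps: float, rtt_s: float) -> float:
    """Smallest W with W/RTT >= R, i.e. the bandwidth x delay product R * RTT."""
    return link_rate_bps * rtt_s


# Assumed example of a "fat" link: R = 10 Mbps, RTT = 0.5 s.
bits = min_window_bits(10e6, 0.5)
print(f"{bits:.0f} bits = {bits / 8:.0f} bytes of receiver buffer")
```

For these assumed values the receiver needs roughly 625,000 bytes of buffer per connection.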

TCP Buffers - Critical Resource

- TCP receiver buffers: critical for performance
- But large buffers limit the number of connections
  - 10,000 connections, 10 KB each → 100 MB
  - Costs and performance implications
  - Abused by SYN-flooding DoS attack (NetSecurity course)
- Servers should minimize open connections
  - Client should close connection (and wait 30 sec)
  - Many servers initiate close, but don't wait 30 sec
    - If Ack is lost, client may time out (abnormal close)
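The memory figure on this slide is simple arithmetic. The sketch below reproduces it under the assumption that 1 KB means 1,000 bytes; the function name is hypothetical.

```python
def receive_buffer_memory(connections: int, buffer_bytes: int) -> int:
    """Total memory reserved if every open connection holds its own receive buffer."""
    return connections * buffer_bytes


total = receive_buffer_memory(10_000, 10_000)  # 10,000 connections, 10 KB each
print(total / 1_000_000, "MB")
```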

Lecture 7 outline

- TCP Overview
- TCP Flow Control
- 3.6 Principles of congestion control
- TCP Timeouts
- 3.7 TCP congestion control
- TCP Fairness

Principles of Congestion Control

Congestion:

- Informally: “too many sources sending too much data too fast for network to handle”
  - Cf. flow control (limited by receiver’s buffers)
- Manifestations:
  - Lost packets (buffer overflow at routers)
  - Long delays (queueing in router buffers)
- A top-10 problem!
  - Main cause for packet loss
  - Main cause for delay and jitter (changing delay)

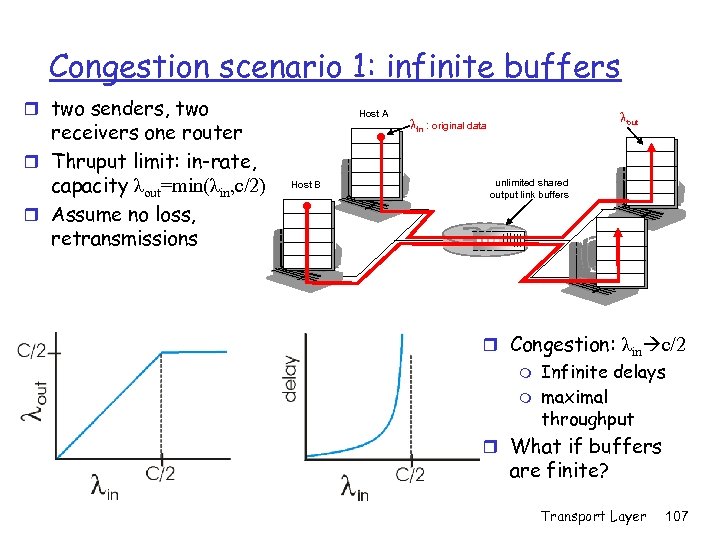

Congestion scenario 1: infinite buffers

- Two senders, two receivers, one router
- Throughput limit: in-rate, capacity: λout = min(λin, c/2)
- Assume no loss, no retransmissions
- Congestion: when λin reaches c/2
  - Infinite delays
  - Maximal throughput
- What if buffers are finite?

Figure labels: Host A, Host B; λin: original data; λout; unlimited shared output link buffers.
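The throughput limit in scenario 1 is easy to tabulate. The sketch below also adds a simple fluid-model estimate of how the queue grows when the offered load exceeds a sender's share. That queue model is an assumption introduced here for illustration, as are the function names and the numbers.

```python
def throughput(lambda_in: float, capacity: float) -> float:
    """Per-sender throughput with two senders sharing a link: min(lambda_in, c/2)."""
    return min(lambda_in, capacity / 2)


def backlog_after(lambda_in: float, capacity: float, seconds: float) -> float:
    """Per-sender queue backlog after `seconds` with unlimited buffers (fluid model, assumed)."""
    excess = max(lambda_in - capacity / 2, 0.0)  # arrival rate minus service share
    return excess * seconds


# Assumed link capacity c = 10 (arbitrary rate units).
for lam in (3.0, 5.0, 8.0):
    print(lam, throughput(lam, 10.0), backlog_after(lam, 10.0, 10.0))
```

Once the offered load passes c/2, throughput stays at its maximum while the backlog, and with it the delay, keeps growing.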

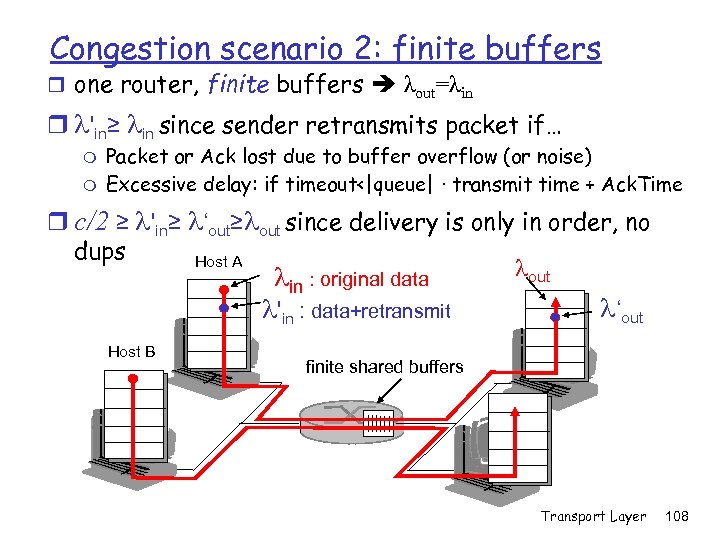

Congestion scenario 2: finite buffers

- One router, finite buffers; λout = λin
- λ'in ≥ λin, since the sender retransmits a packet if…
  - Packet or Ack lost due to buffer overflow (or noise)
  - Excessive delay: if timeout < |queue| ∙ transmit time + AckTime
- c/2 ≥ λ'in ≥ λ'out ≥ λout, since delivery is only in order, with no duplicates

Figure labels: Host A, Host B; λin: original data; λ'in: data + retransmissions; λ'out; λout; finite shared buffers.

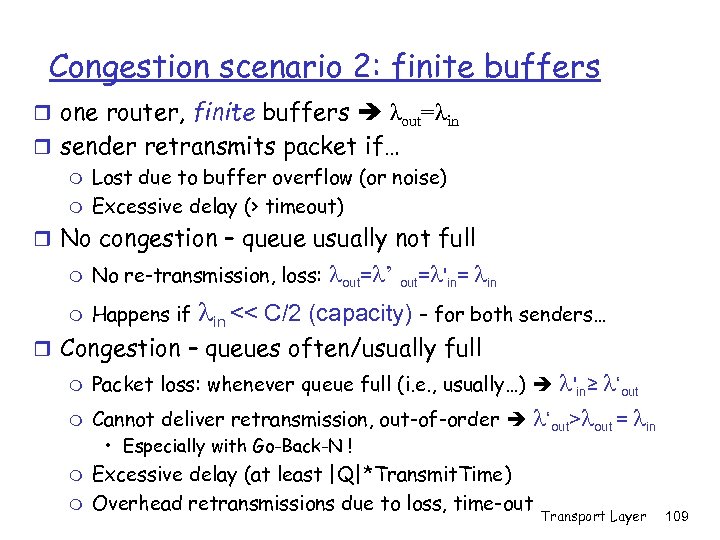

Congestion scenario 2: finite buffers

- One router, finite buffers; λout = λin
- Sender retransmits a packet if…
  - Lost due to buffer overflow (or noise)
  - Excessive delay (> timeout)
- No congestion: queue usually not full
  - No retransmission, no loss
  - Happens if λout = λ'out = λ'in = λin << C/2 (capacity), for both senders…
- Congestion: queues often/usually full
  - Packet loss whenever the queue is full (i.e., usually…): λ'in ≥ λ'out
  - Cannot deliver retransmissions or out-of-order packets: λ'out > λout = λin
    - Especially with Go-Back-N!
  - Excessive delay (at least |Q| * TransmitTime)
  - Overhead: retransmissions due to loss, time-out

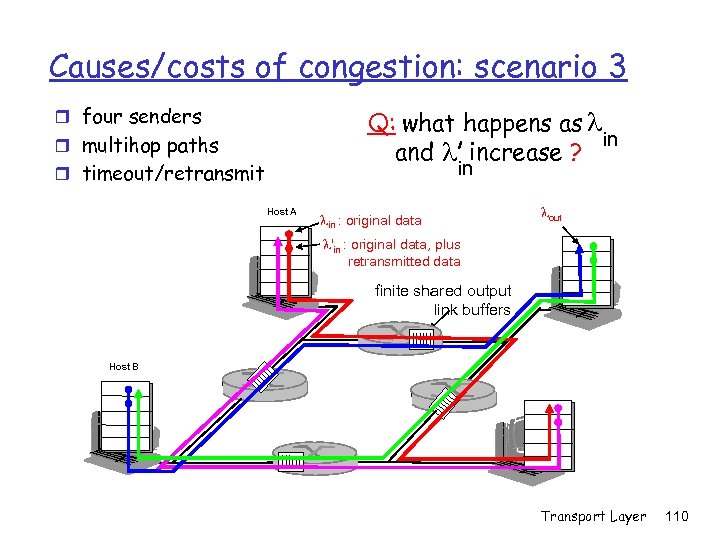

Causes/costs of congestion: scenario 3

- Four senders
- Multihop paths
- Timeout/retransmit

Q: what happens as λin and λ'in increase?

Figure labels: Host A, Host B; λin: original data; λ'in: original data, plus retransmitted data; λout; finite shared output link buffers.

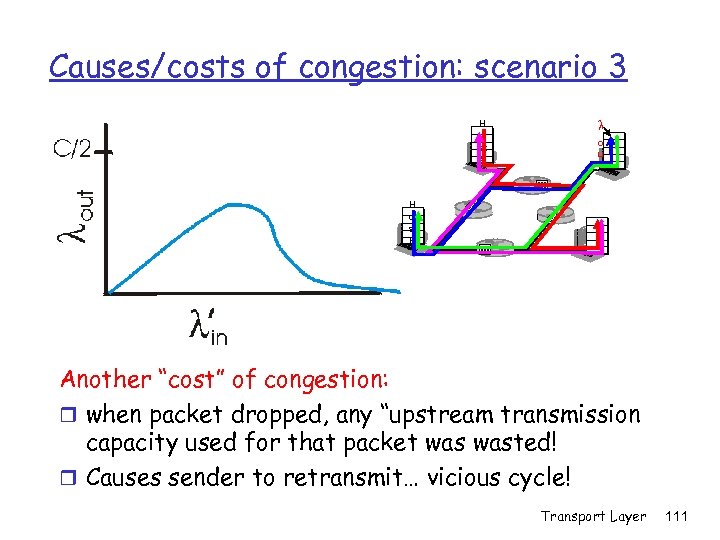

Causes/costs of congestion: scenario 3

Another “cost” of congestion:

- When a packet is dropped, any “upstream” transmission capacity used for that packet was wasted!
- Causes sender to retransmit… vicious cycle!

Figure labels: Host A, Host B, λout.

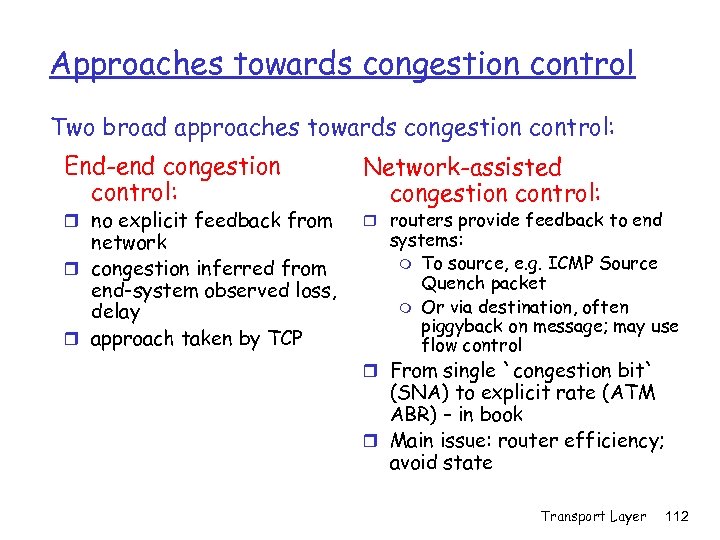

Approaches towards congestion control

Two broad approaches towards congestion control:

End-end congestion control:

- No explicit feedback from network
- Congestion inferred from end-system observed loss, delay
- Approach taken by TCP

Network-assisted congestion control:

- Routers provide feedback to end systems:
  - To source, e.g. ICMP Source Quench packet
  - Or via destination, often piggybacked on a message; may use flow control
- From single `congestion bit` (SNA) to explicit rate (ATM ABR): in book
- Main issue: router efficiency; avoid state

Lecture 7 outline

- TCP Overview
- TCP Timeouts
- TCP Flow Control
- 3.6 Principles of congestion control
- 3.7 TCP congestion control
- TCP Fairness

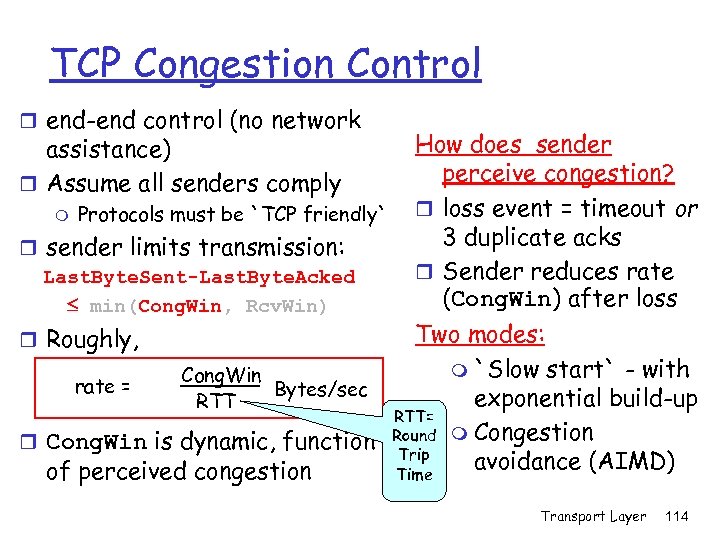

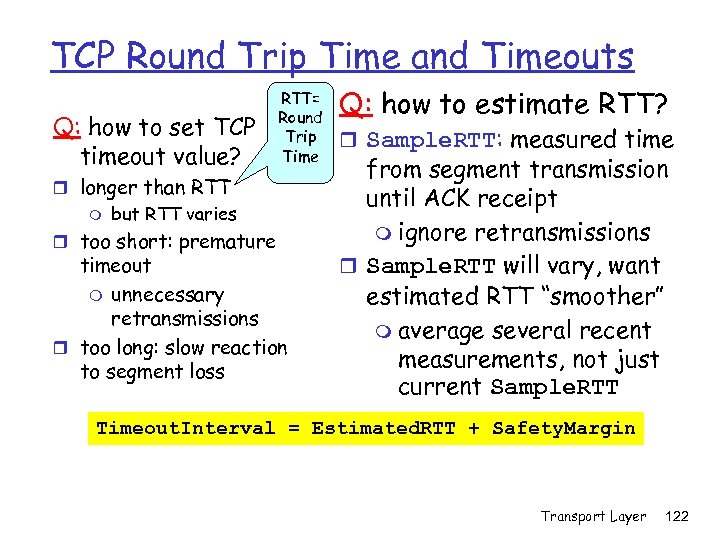

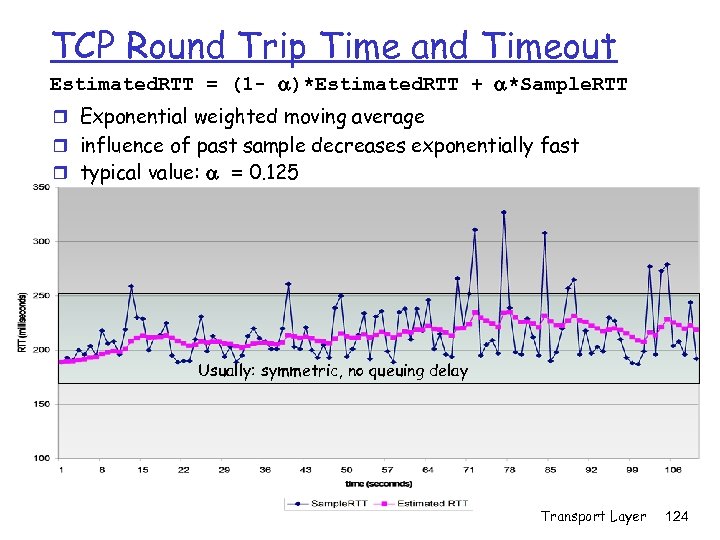

TCP Congestion Control

- End-end control (no network assistance)
- Assume all senders comply
  - Protocols must be `TCP friendly`
- Sender limits transmission: LastByteSent - LastByteAcked ≤ min(CongWin, RcvWin)
- Roughly, rate = CongWin/RTT bytes/sec (RTT = Round Trip Time)
- CongWin is dynamic, a function of perceived congestion

How does sender perceive congestion?

- Loss event = timeout or 3 duplicate ACKs
- Sender reduces rate (CongWin) after loss

Two modes:

- `Slow start`: with exponential build-up
- Congestion avoidance (AIMD)
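The sender-side limit can be sketched as a single check on the bytes in flight, together with the rough rate estimate CongWin/RTT. The function names, the extra `nbytes` argument and the example values are assumptions for illustration.

```python
def can_send(last_byte_sent: int, last_byte_acked: int,
             cong_win: int, rcv_win: int, nbytes: int) -> bool:
    """True if sending `nbytes` more keeps unacked data within min(CongWin, RcvWin)."""
    in_flight = last_byte_sent - last_byte_acked
    return in_flight + nbytes <= min(cong_win, rcv_win)


def approx_rate(cong_win_bytes: float, rtt_s: float) -> float:
    """Rough sending rate in bytes/sec: about one congestion window per round trip."""
    return cong_win_bytes / rtt_s


# Assumed values: 2,000 bytes in flight, CongWin = 4,000 bytes, RTT = 0.5 s.
print(can_send(5_000, 3_000, cong_win=4_000, rcv_win=8_000, nbytes=1_000))
print(can_send(5_000, 3_000, cong_win=4_000, rcv_win=2_500, nbytes=1_000))
print(approx_rate(4_000, 0.5))
```

Whichever window is smaller, congestion or receiver, decides whether the next segment may go out.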

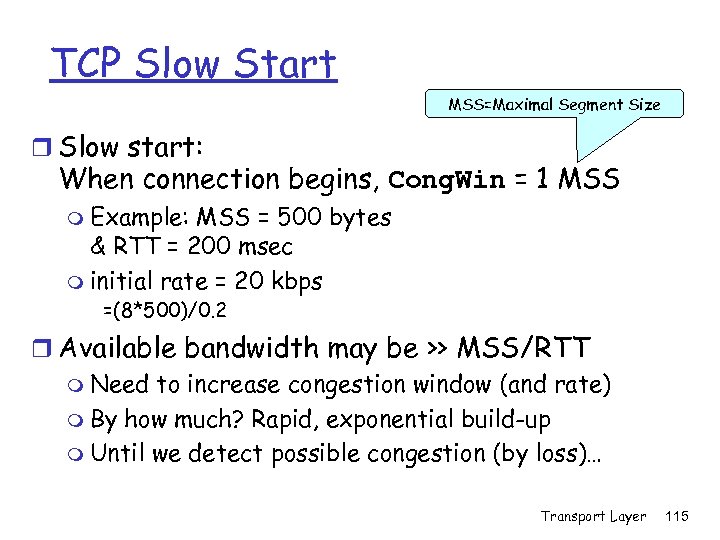

TCP Slow Start

MSS = Maximal Segment Size

- Slow start: when connection begins, CongWin = 1 MSS
  - Example: MSS = 500 bytes and RTT = 200 msec
  - Initial rate = (8*500)/0.2 = 20 kbps
- Available bandwidth may be >> MSS/RTT
  - Need to increase congestion window (and rate)
  - By how much? Rapid, exponential build-up
  - Until we detect possible congestion (by loss)…
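The initial-rate example works out as follows. The helper name and its optional `cong_win_segments` parameter are assumptions; the numbers are the ones from the slide.

```python
def initial_rate_bps(mss_bytes: int, rtt_s: float, cong_win_segments: int = 1) -> float:
    """Rate in bits/sec when cong_win_segments segments are sent per round trip."""
    return 8 * mss_bytes * cong_win_segments / rtt_s


rate = initial_rate_bps(mss_bytes=500, rtt_s=0.2)
print(f"{rate / 1000:.0f} kbps")
```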

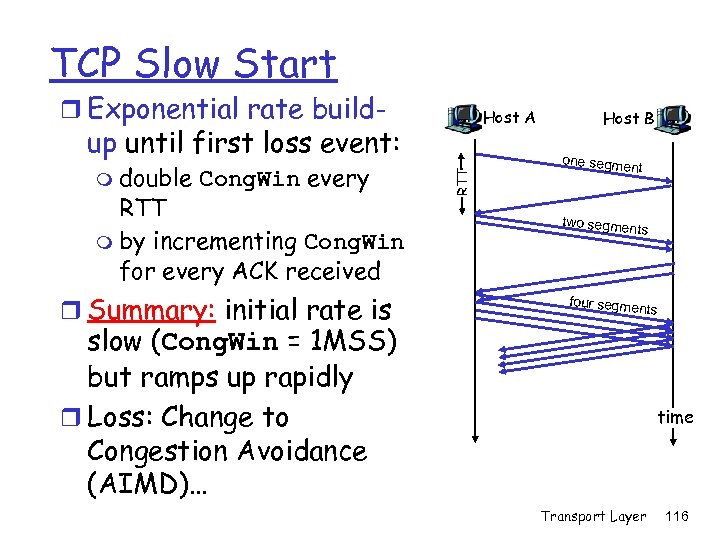

TCP Slow Start

- Exponential rate build-up until first loss event:
  - Double CongWin every RTT
  - By incrementing CongWin for every ACK received
- Summary: initial rate is slow (CongWin = 1 MSS) but ramps up rapidly
- Loss: change to Congestion Avoidance (AIMD)…

Figure (timing diagram) labels: Host A, Host B, RTT, one segment, two segments, four segments, time.
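Why does "increment CongWin for every ACK" double the window every RTT? A small simulation makes the link visible. It assumes one ACK per segment (no delayed ACKs) and no loss; the function name is hypothetical, and CongWin is counted in MSS units.

```python
def slow_start(rtts: int, mss: int = 1) -> list[int]:
    """CongWin at the start and after each loss-free RTT, growing by 1 MSS per ACK."""
    cong_win = mss
    history = [cong_win]
    for _ in range(rtts):
        acks = cong_win // mss   # assumed: every segment in the window is ACKed separately
        for _ in range(acks):
            cong_win += mss      # one MSS of growth per ACK received
        history.append(cong_win)
    return history


print(slow_start(3))
```

Each full window of ACKs adds a full window of growth, so the per-ACK increment is exponential growth per round trip.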

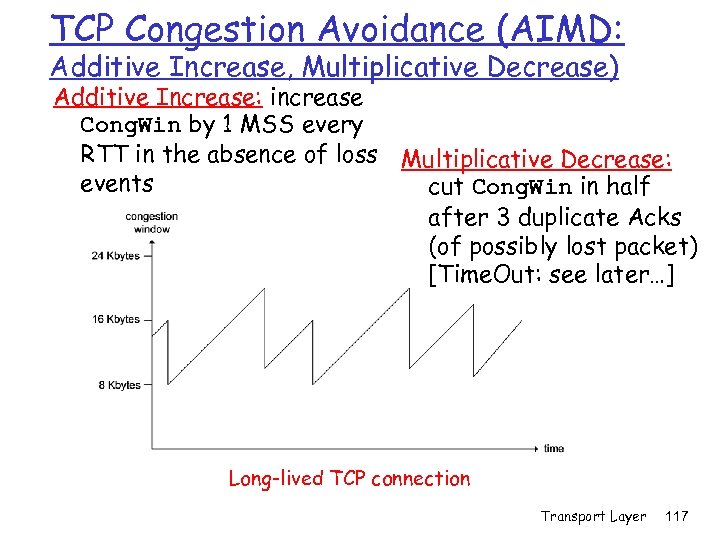

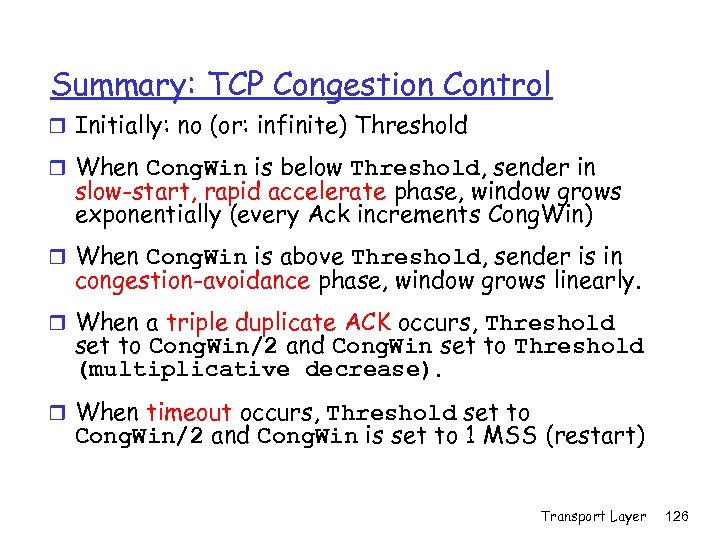

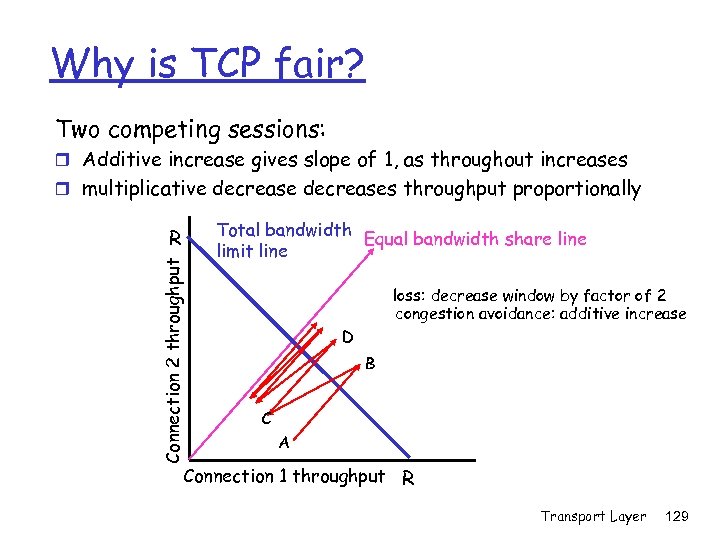

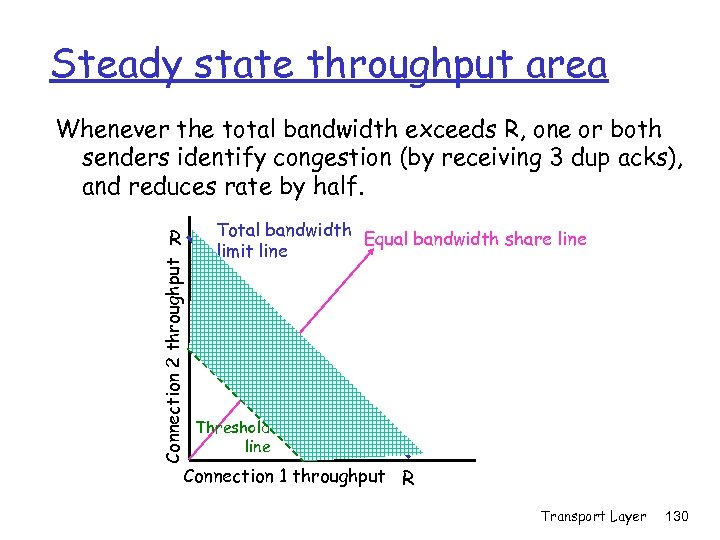

TCP Congestion Avoidance (AIMD: Additive Increase, Multiplicative Decrease)

- Additive Increase: increase CongWin by 1 MSS every RTT in the absence of loss events
- Multiplicative Decrease: cut CongWin in half after 3 duplicate Acks (of possibly lost packet) [TimeOut: see later…]

Figure: CongWin over time for a long-lived TCP connection.
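A short trace shows the sawtooth that AIMD produces. In this sketch the event names `"rtt"` and `"dup3"`, the function name, the rounding down on halving and the floor of 1 MSS are all assumptions made for illustration.

```python
def aimd(cong_win: int, events: list[str], mss: int = 1) -> list[int]:
    """CongWin after each event: 'rtt' = loss-free round trip, 'dup3' = 3 duplicate ACKs."""
    trace = []
    for event in events:
        if event == "rtt":
            cong_win += mss                     # additive increase
        elif event == "dup3":
            cong_win = max(cong_win // 2, mss)  # multiplicative decrease (rounded down, assumed)
        else:
            raise ValueError(f"unknown event: {event}")
        trace.append(cong_win)
    return trace


print(aimd(8, ["rtt", "rtt", "dup3", "rtt", "rtt"]))
```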

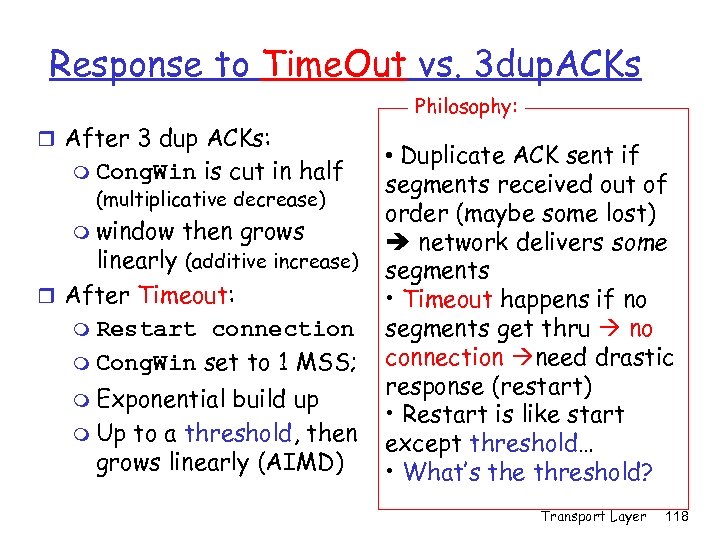

Response to TimeOut vs. 3 dup ACKs

- After 3 dup ACKs:
  - CongWin is cut in half (multiplicative decrease)
  - Window then grows linearly (additive increase)
- After TimeOut:
  - Restart connection
  - CongWin set to 1 MSS
  - Exponential build-up
  - Up to a threshold, then grows linearly (AIMD)

Philosophy:

- Duplicate ACKs are sent if segments are received out of order (maybe some were lost) → the network still delivers some segments
- TimeOut happens if no segments get through → no connection → need a drastic response (restart)
- Restart is like start, except for the threshold…
- What’s the threshold?

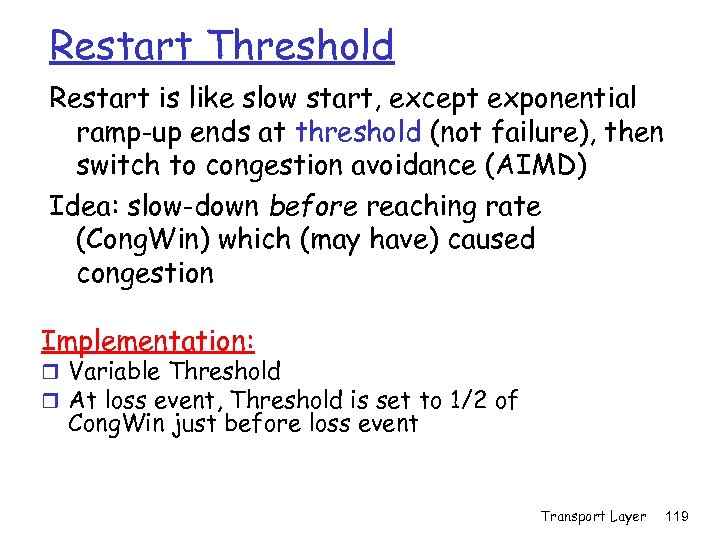

Restart Threshold

Restart is like slow start, except that the exponential ramp-up ends at a threshold (not at failure), then switches to congestion avoidance (AIMD).

Idea: slow down before reaching the rate (CongWin) which (may have) caused congestion.

Implementation:

- Variable Threshold
- At loss event, Threshold is set to 1/2 of CongWin just before loss event
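Putting slow start, AIMD and the threshold together gives a small state machine. This is a sketch under stated assumptions, not a faithful TCP implementation: CongWin is counted in MSS units and updated once per RTT, there is no threshold before the first loss, and the doubling is capped exactly at the threshold. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CongestionState:
    """Toy per-RTT model of CongWin and Threshold, both in MSS units."""
    cong_win: float = 1.0
    threshold: float = float("inf")  # assumed: no threshold until the first loss event

    def on_rtt_without_loss(self) -> None:
        if self.cong_win < self.threshold:
            # Slow start: exponential build-up, ending at the threshold.
            self.cong_win = min(self.cong_win * 2, self.threshold)
        else:
            # Congestion avoidance: additive increase of 1 MSS per RTT.
            self.cong_win += 1

    def on_triple_dup_ack(self) -> None:
        # Loss event: remember half the window, then continue from half.
        self.threshold = self.cong_win / 2
        self.cong_win = max(self.cong_win / 2, 1.0)

    def on_timeout(self) -> None:
        # Loss event: remember half the window, then restart from 1 MSS.
        self.threshold = self.cong_win / 2
        self.cong_win = 1.0


state = CongestionState()
for _ in range(4):
    state.on_rtt_without_loss()
state.on_timeout()
for _ in range(4):
    state.on_rtt_without_loss()
    print(state.cong_win, end=" ")
```

After the timeout the window climbs exponentially only up to half of its pre-loss value and grows linearly from there.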