- Number of slides: 25

What Are the High-Level Concepts with Small Semantic Gaps? CS 4763 Multimedia System, Spring 2008

Outline
- Introduction
- LCSS: a lexicon of high-level concepts with small semantic gaps
- Framework of LCSS Construction
- Methodologies
- Experimental results
- Conclusion

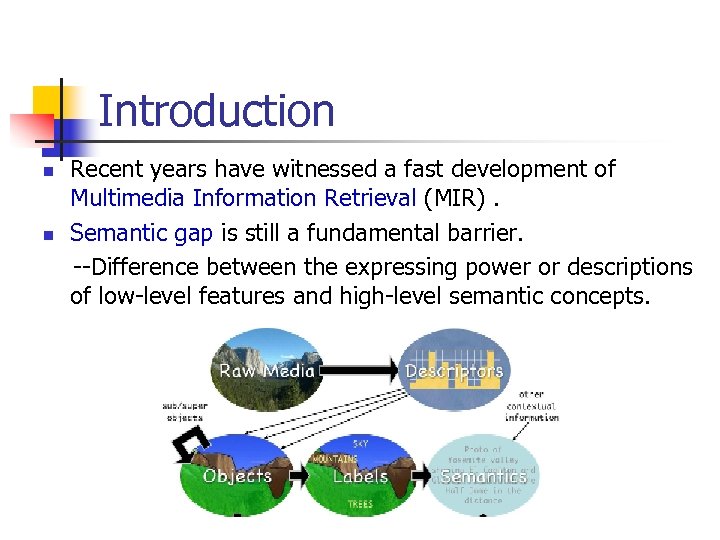

Introduction
- Recent years have witnessed rapid development in Multimedia Information Retrieval (MIR).
- The semantic gap is still a fundamental barrier.
  - It is the difference between the expressive power of low-level features and that of high-level semantic concepts.

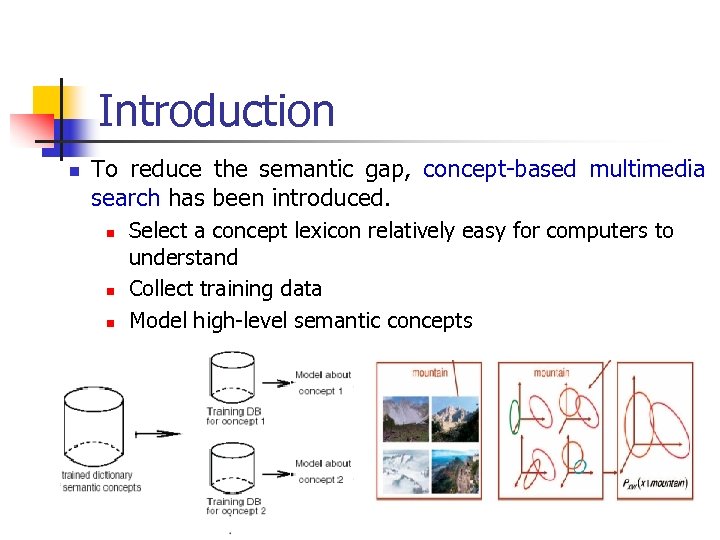

Introduction
- To reduce the semantic gap, concept-based multimedia search has been introduced:
  - Select a concept lexicon that is relatively easy for computers to understand
  - Collect training data
  - Model high-level semantic concepts

Problem
- Concept lexicon selection is usually reduced to manual selection or ignored entirely in previous work.
  - E.g., Caltech 101, Caltech 256, PASCAL: these implicitly favored relatively “easy” concepts.
  - MediaMill challenge concept data (101 terms) and Large-Scale Concept Ontology for Multimedia (LSCOM) (1,000 concepts): manually annotated concept lexica on broadcast news video from the TRECVID benchmark.
- All of these lexica ignore the differences in semantic gap among concepts.
- No automatic selection is performed.

Problem
- Concepts with smaller semantic gaps are likely to be modeled and retrieved better than concepts with larger ones.
- Very little research exists on quantitative analysis of the semantic gap.
  - What are the well-defined semantic concepts for learning?
  - How can they be found automatically?

Objective
- Automatically construct a lexicon of high-level concepts with small semantic gaps (LCSS).
- Two properties of LCSS:
  - 1) Concepts are commonly used: the words have a high occurrence frequency in the descriptions of real-world images.
  - 2) Concepts are expected to be visually and semantically consistent: images of these concepts have smaller semantic gaps and are easier to model for retrieval and annotation.

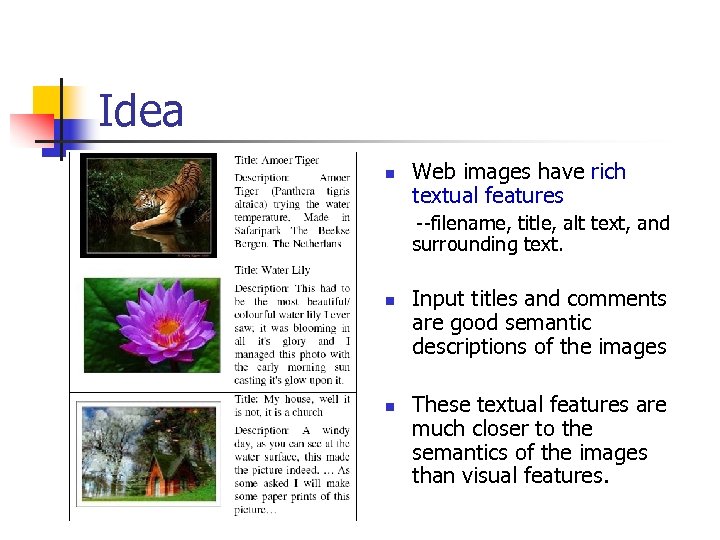

Idea
- Web images have rich textual features: filename, title, alt text, and surrounding text.
  - Input titles and comments are good semantic descriptions of the images.
  - These textual features are much closer to the semantics of the images than visual features are.

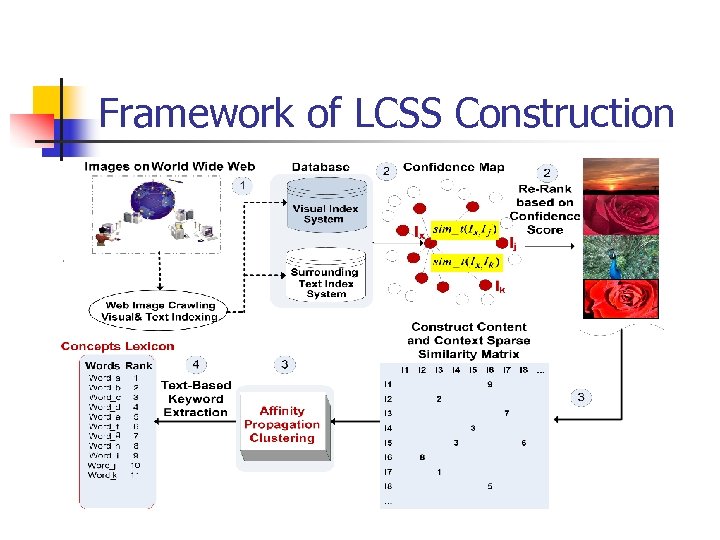

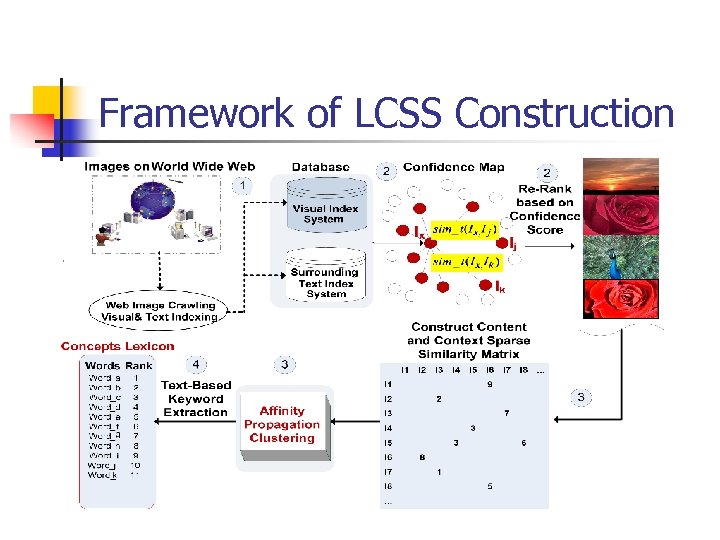

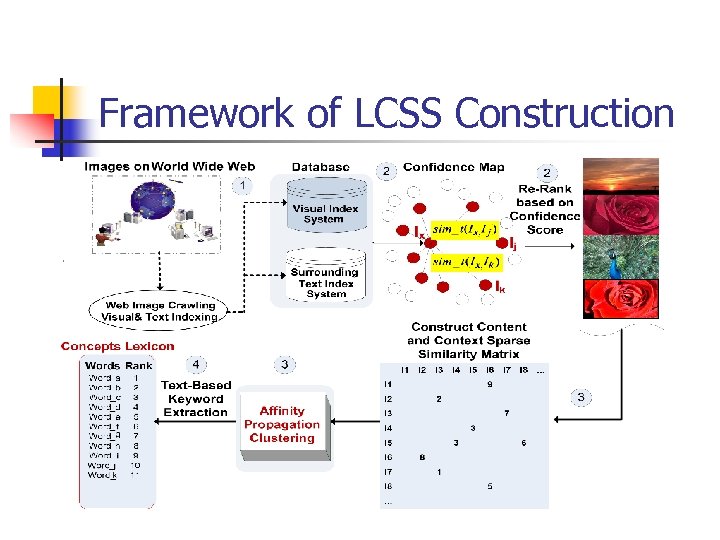

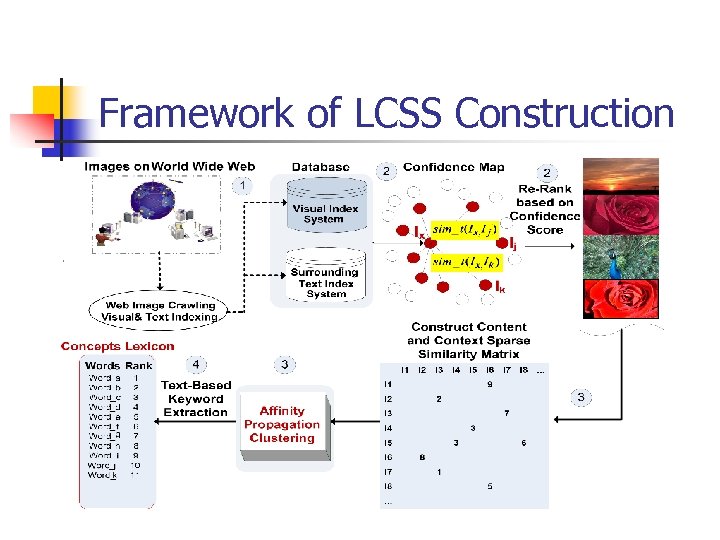

Framework of LCSS Construction

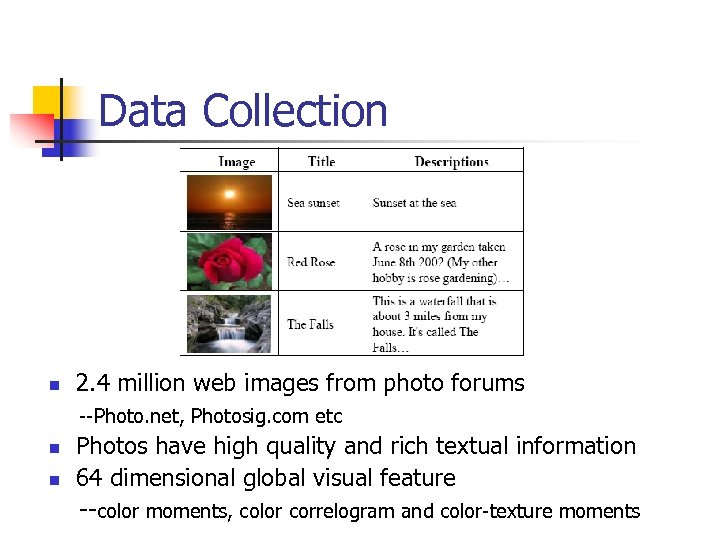

Data Collection
- 2.4 million web images from photo forums (Photo.net, Photosig.com, etc.)
  - Photos have high quality and rich textual information
  - 64-dimensional global visual feature: color moments, color correlogram, and color-texture moments
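Of the three visual features named above, color moments are the simplest to illustrate. The following is a minimal sketch, assuming the common definition (mean, standard deviation, and cube-rooted third central moment per channel). The function name `color_moments` and the pixel-tuple input are illustrative, and the slides do not say how the 64 dimensions are divided among the three features.

```python
import math

def color_moments(pixels):
    """Return [mean, std, skewness] for each of the three channels of a pixel list."""
    n = len(pixels)
    features = []
    for ch in range(3):
        values = [p[ch] for p in pixels]
        mean = sum(values) / n
        variance = sum((v - mean) ** 2 for v in values) / n
        third = sum((v - mean) ** 3 for v in values) / n
        std = math.sqrt(variance)
        # Signed cube root keeps the third moment in the same units as the channel.
        skew = math.copysign(abs(third) ** (1.0 / 3.0), third)
        features.extend([mean, std, skew])
    return features

# Three toy pixels; a real image would contribute every pixel.
pixels = [(0, 10, 20), (2, 10, 40), (4, 10, 60)]
features = color_moments(pixels)  # 9 values: 3 moments x 3 channels
```

This yields a 9-dimensional vector per image; the correlogram and color-texture moments would supply the remaining dimensions.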

Framework of LCSS Construction

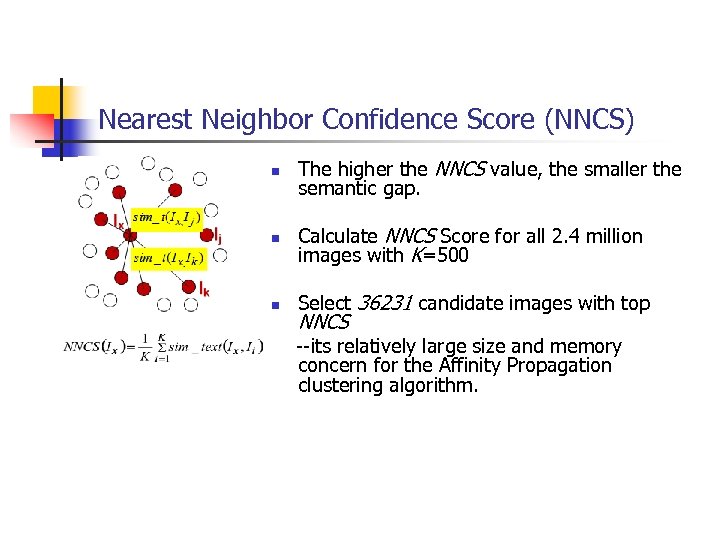

Nearest Neighbor Confidence Score (NNCS)
- The higher the NNCS value, the smaller the semantic gap.
- Calculate the NNCS for all 2.4 million images with K = 500.
- Select the 36231 candidate images with the top NNCS values; the set is kept to this size because of memory concerns for the Affinity Propagation clustering algorithm.
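The NNCS formula itself is not preserved in these slides. As an illustration only, one plausible reading is sketched below: take each image's K nearest neighbors in visual feature space and average a textual similarity over them, so that a high score means visually similar images also share similar descriptions. The Jaccard similarity, the Euclidean distance, and the names `nncs` and `jaccard` are assumptions of this sketch, not the authors' definition.

```python
import math

def jaccard(a, b):
    """Word-set overlap between two descriptions (an assumed text similarity)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def nncs(features, texts, k):
    """Average text similarity between each image and its k visual nearest neighbors."""
    scores = []
    for i, fi in enumerate(features):
        # Rank all other images by Euclidean distance in visual feature space.
        ranked = sorted((math.dist(fi, fj), j) for j, fj in enumerate(features) if j != i)
        neighbors = [j for _, j in ranked[:k]]
        scores.append(sum(jaccard(texts[i], texts[j]) for j in neighbors) / len(neighbors))
    return scores

# Toy data: two visually close pairs, only the first pair shares a word.
features = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
texts = [["sunset", "beach"], ["sunset", "sky"], ["my", "trip"], ["portrait"]]
scores = nncs(features, texts, k=1)
```

In this toy run the first two images score higher than the last two, which is the behavior the first bullet describes. The brute-force neighbor search here would not scale to 2.4 million images.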

Framework of LCSS Construction

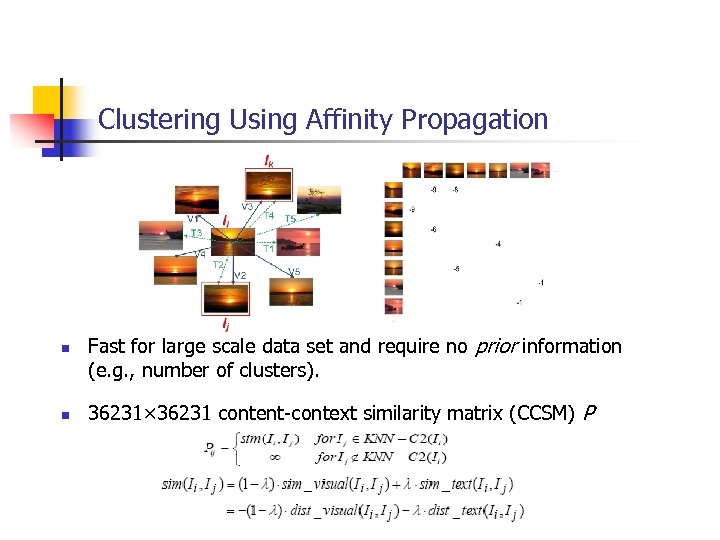

Clustering Using Affinity Propagation
- Fast for large-scale data sets and requires no prior information (e.g., the number of clusters).
- Input: the 36231 × 36231 content-context similarity matrix (CCSM) P.
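To make the clustering step concrete, here is a small pure-Python sketch of the message-passing updates from Frey and Dueck's Affinity Propagation, run on a toy similarity matrix. The negative squared distance, the preference value on the diagonal, the damping factor, and the iteration count are all assumptions chosen for the toy data; the slides do not state the settings used with the CCSM.

```python
def affinity_propagation(S, damping=0.5, iterations=200):
    """Cluster from a square similarity matrix S whose diagonal holds the preferences."""
    n = len(S)
    R = [[0.0] * n for _ in range(n)]  # responsibilities r(i, k)
    A = [[0.0] * n for _ in range(n)]  # availabilities a(i, k)
    for _ in range(iterations):
        # Responsibility: how well k suits i compared with i's best alternative.
        for i in range(n):
            total = [A[i][k] + S[i][k] for k in range(n)]
            for k in range(n):
                best_other = max(total[j] for j in range(n) if j != k)
                R[i][k] = damping * R[i][k] + (1 - damping) * (S[i][k] - best_other)
        # Availability: how much support k has collected from the other points.
        for k in range(n):
            positive = [max(0.0, R[i][k]) for i in range(n)]
            support = sum(positive) - positive[k]
            for i in range(n):
                if i == k:
                    new = support
                else:
                    new = min(0.0, R[k][k] + support - positive[i])
                A[i][k] = damping * A[i][k] + (1 - damping) * new
    exemplars = [k for k in range(n) if R[k][k] + A[k][k] > 0]
    if not exemplars:
        return [], [None] * n
    # Each exemplar labels itself; other points join their most similar exemplar.
    labels = [i if i in exemplars else max(exemplars, key=lambda k: S[i][k])
              for i in range(n)]
    return exemplars, labels

# Toy 1-D data with two well-separated groups.
points = [0, 1, 2, 50, 51, 52]
preference = -25.0  # assumed; lower values yield fewer clusters
S = [[preference if i == j else -float((x - y) ** 2) for j, y in enumerate(points)]
     for i, x in enumerate(points)]
exemplars, labels = affinity_propagation(S)
```

Note that the number of clusters is never passed in: it emerges from the preference values. The dense matrices also show why memory limits the candidate set, since storage grows with the square of the number of images.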

Framework of LCSS Construction

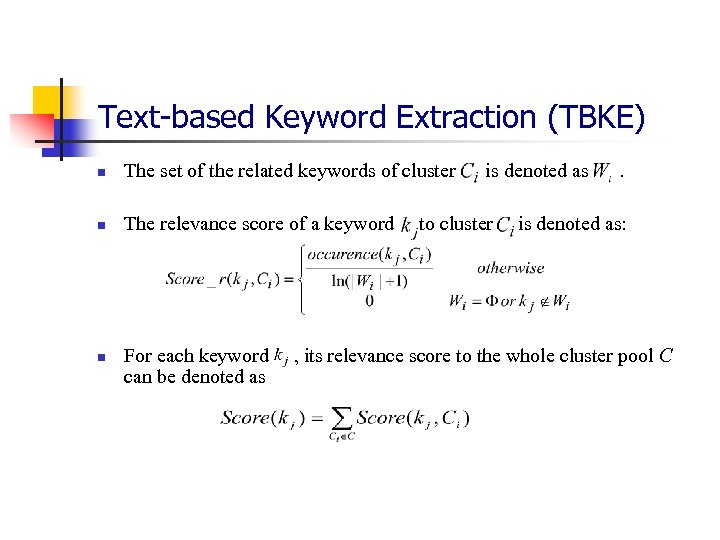

Text-based Keyword Extraction (TBKE)
- Each cluster has a set of related keywords.
- Each keyword has a relevance score to a cluster.
- For each keyword, a relevance score to the whole cluster pool C is defined.
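The scoring formulas for this step are missing from the extracted text, so the sketch below is a stand-in rather than the authors' definition. It assumes a keyword's relevance to a cluster is the fraction of that cluster's image titles containing it, and that its relevance to the pool is the size-weighted sum over clusters. The function names and toy titles are hypothetical.

```python
from collections import Counter

def cluster_keyword_scores(cluster_titles):
    """Map each keyword to the fraction of titles in one cluster that contain it."""
    counts = Counter()
    for title in cluster_titles:
        counts.update(set(title.lower().split()))
    return {word: c / len(cluster_titles) for word, c in counts.items()}

def pool_keyword_scores(clusters):
    """Sum size-weighted per-cluster scores over the whole cluster pool."""
    pool = Counter()
    for titles in clusters:
        for word, score in cluster_keyword_scores(titles).items():
            pool[word] += score * len(titles)
    return pool

# Two toy clusters of image titles.
clusters = [
    ["sunset beach", "sunset sky", "golden sunset"],
    ["red rose", "rose garden"],
]
scores = pool_keyword_scores(clusters)
top = [word for word, _ in scores.most_common(2)]
```

Ranking keywords by the pool score and keeping the top entries gives a candidate lexicon. With this particular weighting, the pool score is simply the number of clustered titles that contain the word.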

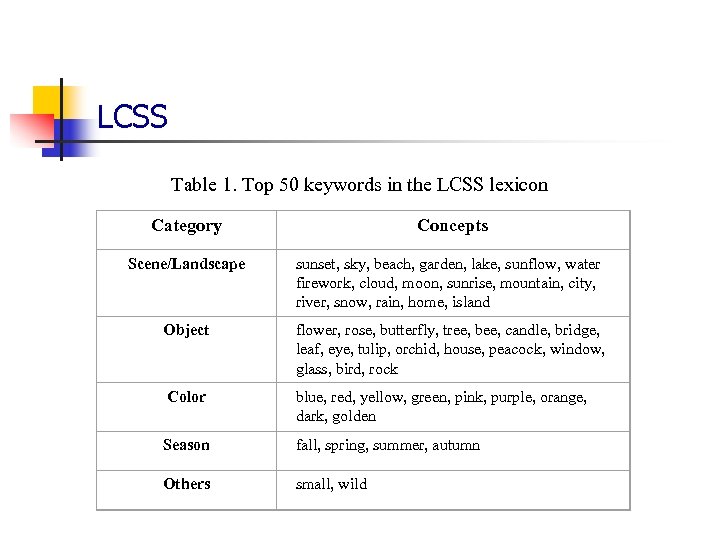

LCSS
Table 1. Top 50 keywords in the LCSS lexicon

| Category | Concepts |
| --- | --- |
| Scene/Landscape | sunset, sky, beach, garden, lake, sunflow, water, firework, cloud, moon, sunrise, mountain, city, river, snow, rain, home, island |
| Object | flower, rose, butterfly, tree, bee, candle, bridge, leaf, eye, tulip, orchid, house, peacock, window, glass, bird, rock |
| Color | blue, red, yellow, green, pink, purple, orange, dark, golden |
| Season | fall, spring, summer, autumn |
| Others | small, wild |

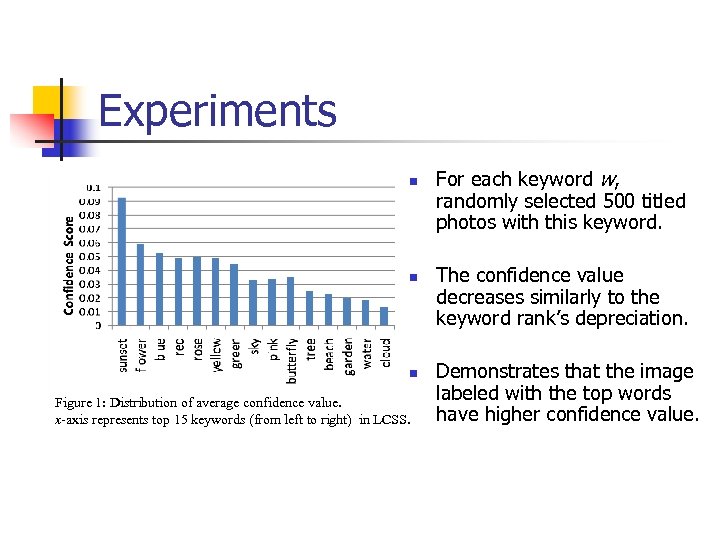

Experiments
Figure 1: Distribution of average confidence value.
- The x-axis shows the top 15 keywords in LCSS, from left to right.
- For each keyword w, 500 photos titled with this keyword were randomly selected.
- The confidence value decreases as the keyword rank decreases.
- This demonstrates that images labeled with the top words have higher confidence values.

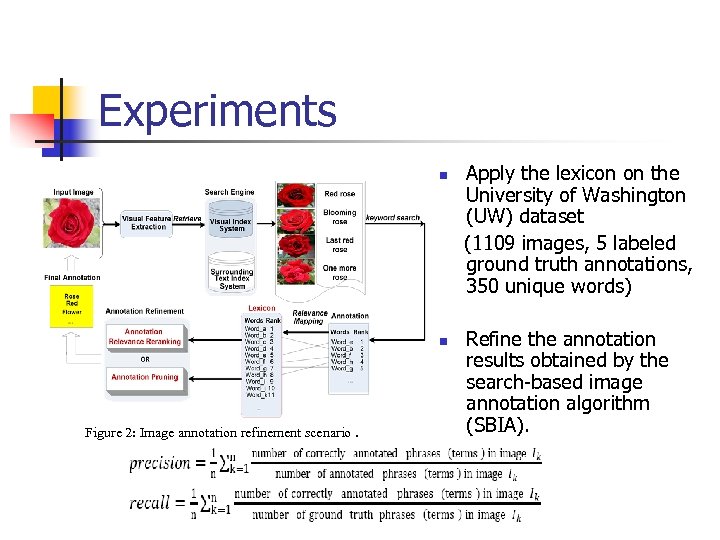

Experiments
Figure 2: Image annotation refinement scenario.
- Apply the lexicon to the University of Washington (UW) dataset (1109 images, 5 labeled ground-truth annotations, 350 unique words).
- Refine the annotation results obtained by the search-based image annotation (SBIA) algorithm.
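The refinement step can be pictured as pruning: keep only the candidate annotations that also appear in the lexicon. This is a minimal sketch under that reading. Here `m` is taken to be the number of top-ranked candidate words considered and `s` the number of lexicon entries used; the function name and toy words are illustrative.

```python
def refine_annotations(ranked_words, lexicon, m, s):
    """Keep the top-m candidate words that also appear in the top-s lexicon entries."""
    allowed = set(lexicon[:s])
    return [word for word in ranked_words[:m] if word in allowed]

# Lexicon ordered by rank; candidates ordered by the annotator's confidence.
lexicon = ["sunset", "flower", "blue", "sky", "beach"]
candidates = ["sky", "vacation", "beach", "camera", "blue"]
refined = refine_annotations(candidates, lexicon, m=4, s=5)
```

With these inputs, "vacation" and "camera" are pruned because they are not in the lexicon, and "blue" is dropped because it falls outside the top four candidates.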

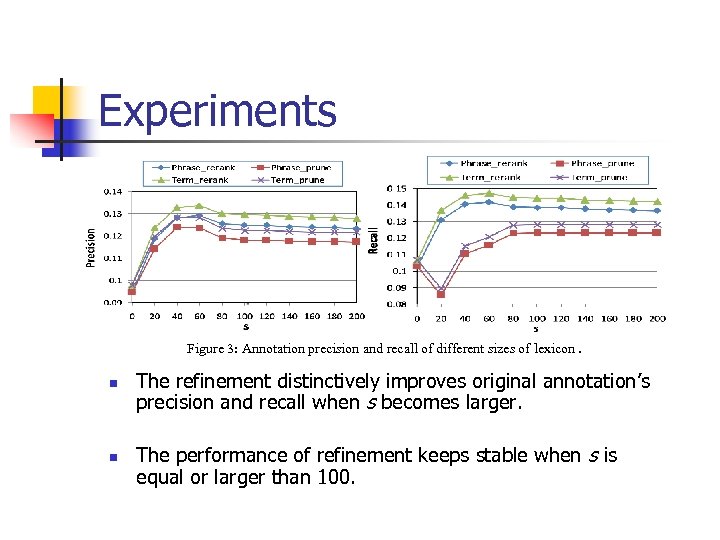

Experiments
Figure 3: Annotation precision and recall for different lexicon sizes.
- The refinement clearly improves the precision and recall of the original annotation as the lexicon size s becomes larger.
- The refinement performance remains stable when s is greater than or equal to 100.
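For reference, per-image annotation precision and recall can be computed as below. This is a sketch of the standard set-based definitions; how the slides aggregate the values across the dataset is not stated, and the toy word lists are made up for illustration.

```python
def precision_recall(predicted, ground_truth):
    """Set-based precision and recall of predicted annotation words."""
    predicted, ground_truth = set(predicted), set(ground_truth)
    correct = len(predicted & ground_truth)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(ground_truth) if ground_truth else 0.0
    return precision, recall

truth = ["sky", "beach", "tree", "water", "sand"]
original = ["sky", "vacation", "beach", "camera"]
refined = ["sky", "beach"]

p_original, r_original = precision_recall(original, truth)
p_refined, r_refined = precision_recall(refined, truth)
```

In this toy case, pruning the two wrong words raises precision from 0.5 to 1.0 while recall stays at 0.4.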

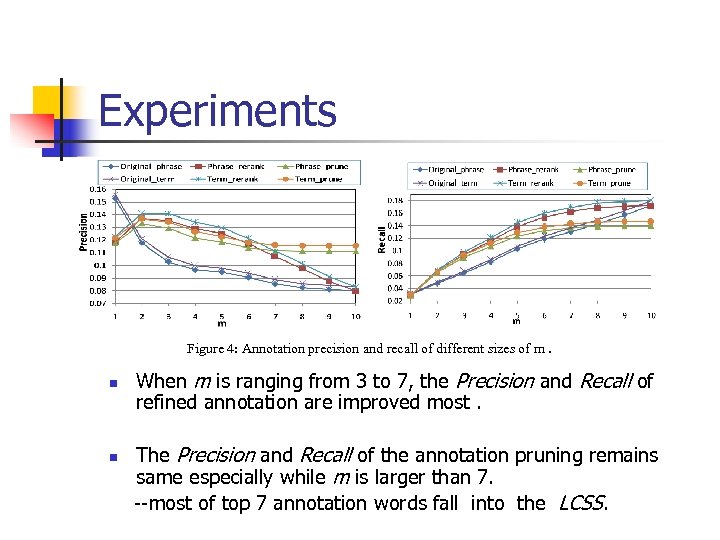

Experiments
Figure 4: Annotation precision and recall for different values of m.
- When m ranges from 3 to 7, the precision and recall of the refined annotation improve the most.
- The precision and recall of the annotation pruning remain the same when m is larger than 7, because most of the top 7 annotation words fall into the LCSS.

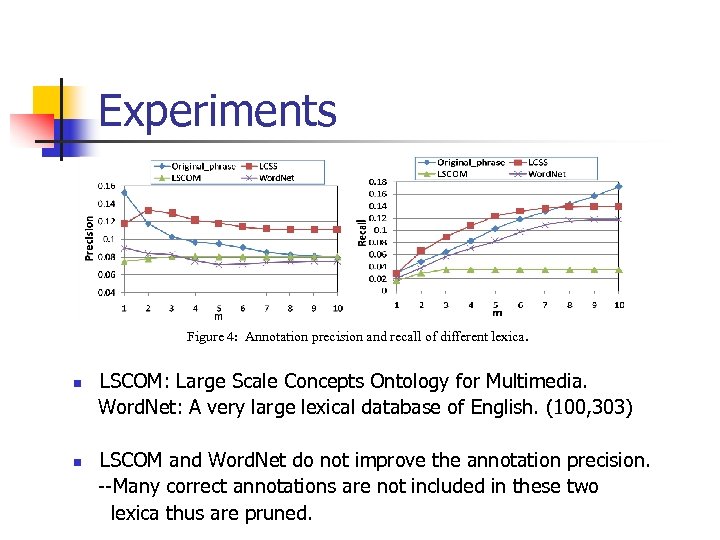

Experiments
Figure 5: Annotation precision and recall for different lexica.
- LSCOM: Large-Scale Concept Ontology for Multimedia.
- WordNet: a very large lexical database of English (100,303).
- LSCOM and WordNet do not improve the annotation precision: many correct annotations are not included in these two lexica and are therefore pruned.

Conclusion
- Quantitatively study and formulate the semantic gap problem.
- Propose a novel framework to automatically select visually and semantically consistent concepts.
- LCSS is the first lexicon of concepts with small semantic gaps.
  - A great help for data collection and concept modeling.
  - A candidate pool of semantic concepts for image annotation, annotation refinement, and rejection.
- Potential applications in query optimization and MIR.

Future Work
- Investigate different features to construct feature-based lexica
  - Texture feature
  - Shape feature
  - SIFT feature
- Open questions
  - How many semantic concepts are necessary?
  - Which features are good for image retrieval with a specific concept?
  - ……

Reference
- C. G. Snoek, M. Worring, J. C. van Gemert, J. M. Geusebroek, and A. W. Smeulders. “The challenge problem for automated detection of 101 semantic concepts in multimedia.” Proc. of ACM Multimedia, 2006.
- C. M. Naphade, J. R. Smith, J. Tesic, and S. F. Chang, et al. “Large-scale concept ontology for multimedia.” IEEE MultiMedia, 13(3): 86–91, 2006.
- B. J. Frey and D. Dueck. “Clustering by passing messages between data points.” Science, 315: 972–976, 2007.
- X. J. Wang, L. Zhang, F. Jing, and W. Y. Ma. “AnnoSearch: image auto-annotation by search.” Proc. of IEEE Conf. CVPR, New York, June 2006.
- C. Wang, F. Jing, L. Zhang, and H. J. Zhang. “Scalable search-based image annotation of personal images.” Proc. of the 8th ACM International Workshop on Multimedia Information Retrieval, Santa Barbara, CA, USA, October 2006.