MIPT Talk 11.10.2017.pptx

- Number of slides: 41

Practical Data Compression for Memory Hierarchies and Applications

Gennady Pekhimenko, Assistant Professor, Computer Systems and Networking Group (CSNG), EcoSystem Group

Performance and Energy Efficiency

Applications today are data-intensive: memory caching, databases, graphics.

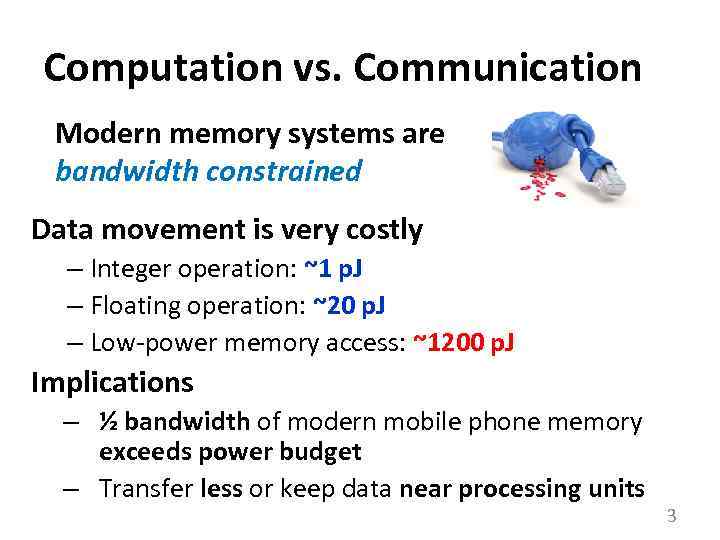

Computation vs. Communication

Modern memory systems are bandwidth constrained, and data movement is very costly:

- Integer operation: ~1 pJ
- Floating-point operation: ~20 pJ
- Low-power memory access: ~1200 pJ

Implications:

- Half the bandwidth of modern mobile phone memory exceeds the power budget
- Transfer less data or keep data near the processing units

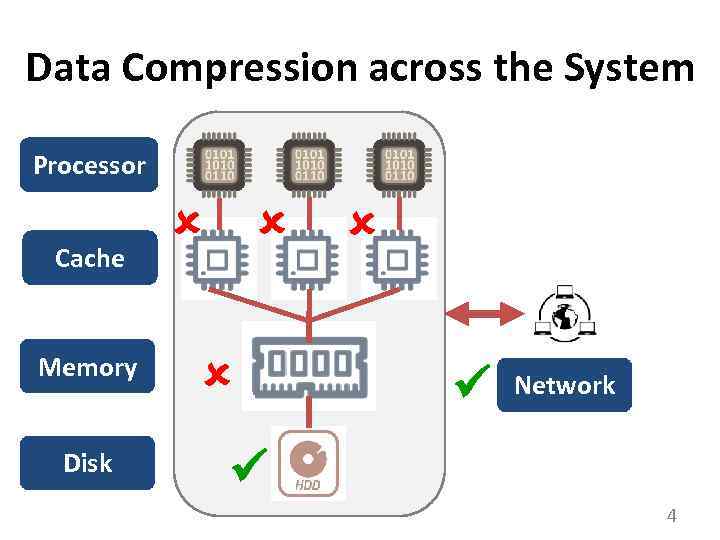

Data Compression across the System

Processor, Cache, Memory, Disk, Network

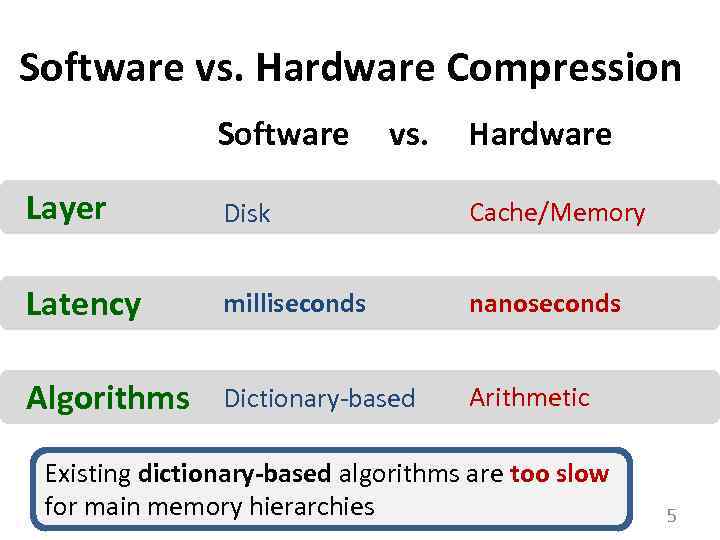

Software vs. Hardware Compression

| | Software | Hardware |
|---|---|---|
| Layer | Disk | Cache/Memory |
| Latency | milliseconds | nanoseconds |
| Algorithms | Dictionary-based | Arithmetic |

Existing dictionary-based algorithms are too slow for main memory hierarchies.

Key Challenges for Compression in Memory Hierarchy

- Fast Access Latency
- Practical Implementation and Low Cost
- High Compression Ratio

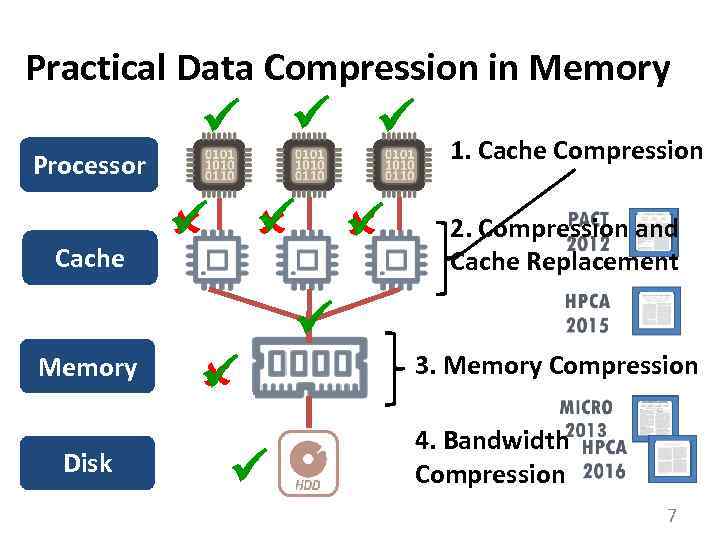

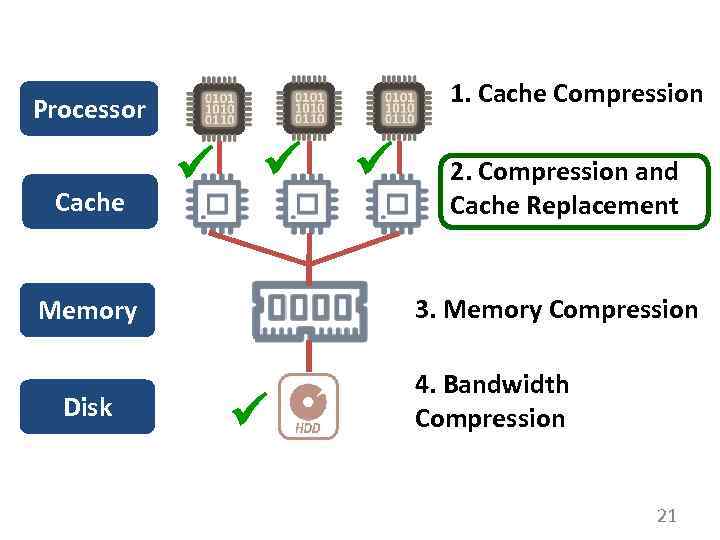

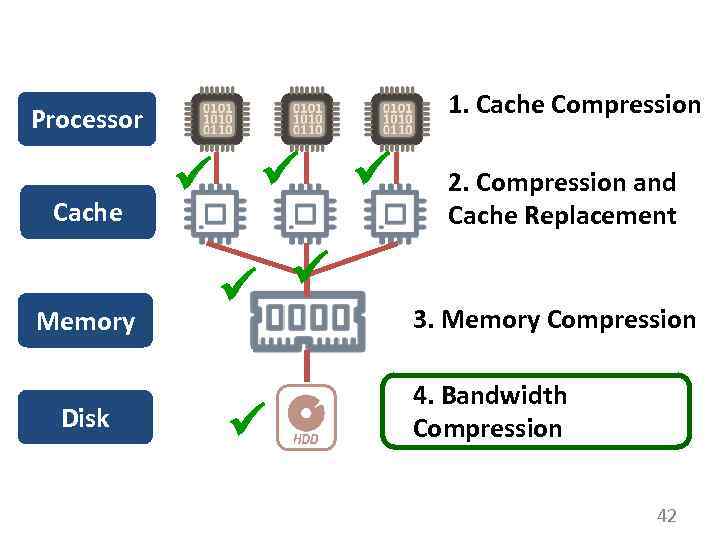

Practical Data Compression in Memory

Processor, Cache, Memory, Disk

1. Cache Compression
2. Compression and Cache Replacement
3. Memory Compression
4. Bandwidth Compression

1. Cache Compression (PACT 2012)

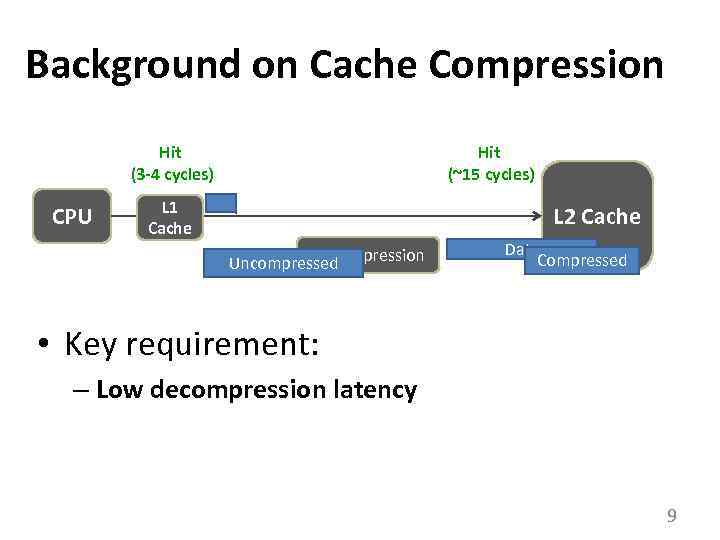

Background on Cache Compression

Figure: CPU, L1 Cache (hit: 3-4 cycles, uncompressed data), L2 Cache (hit: ~15 cycles, compressed data), with decompression on the path from the L2 cache to the L1 cache.

- Key requirement: low decompression latency

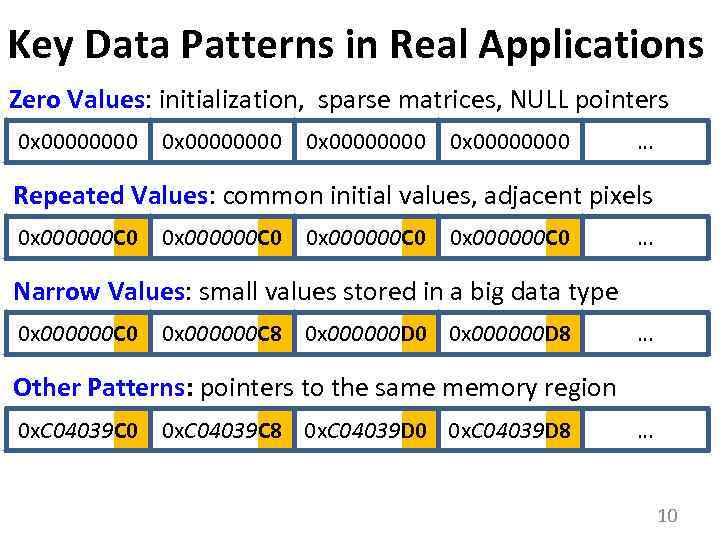

Key Data Patterns in Real Applications

- Zero Values: initialization, sparse matrices, NULL pointers. Example: 0x00000000 …
- Repeated Values: common initial values, adjacent pixels. Example: 0x000000C0 …
- Narrow Values: small values stored in a big data type. Example: 0x000000C0 0x000000C8 0x000000D0 0x000000D8 …
- Other Patterns: pointers to the same memory region. Example: 0xC04039C0 0xC04039C8 0xC04039D0 0xC04039D8 …

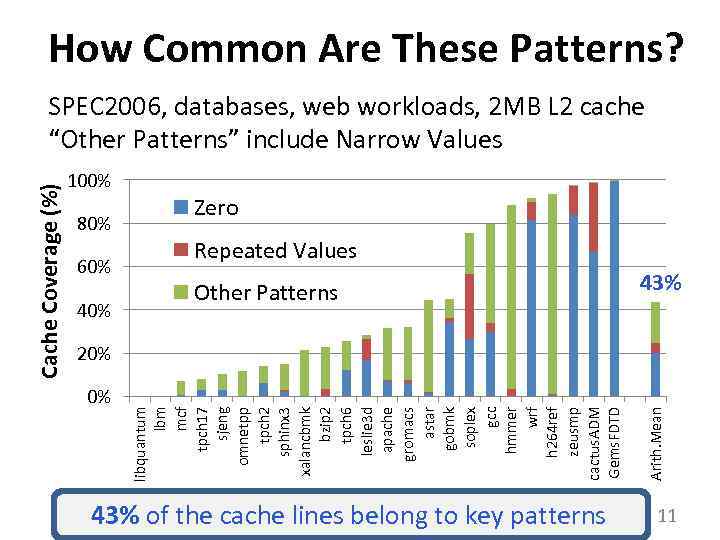
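The patterns on this slide can be checked with a few comparisons per word. The following is a minimal sketch, not the detection logic of any real design: the names `line_pattern` and `classify_line` are hypothetical, and "narrow" is assumed here to mean that every 32-bit word fits in one byte.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical labels for the patterns listed on the slide. */
enum line_pattern { PATTERN_ZERO, PATTERN_REPEATED, PATTERN_NARROW, PATTERN_OTHER };

/* Classify a cache line viewed as n 32-bit words (n >= 1). */
enum line_pattern classify_line(const uint32_t *words, size_t n)
{
    assert(words != NULL && n > 0);
    int all_zero = 1, all_same = 1, all_narrow = 1;
    for (size_t i = 0; i < n; i++) {
        if (words[i] != 0) all_zero = 0;
        if (words[i] != words[0]) all_same = 0;
        if (words[i] > 0xFF) all_narrow = 0;  /* assumption: narrow = fits in 1 byte */
    }
    if (all_zero) return PATTERN_ZERO;
    if (all_same) return PATTERN_REPEATED;
    if (all_narrow) return PATTERN_NARROW;
    return PATTERN_OTHER;
}
```

With the slide's sample data, the pointer-like words 0xC04039C0, 0xC04039C8, … fall into `PATTERN_OTHER`, which is the case that Base+Delta encoding targets.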

How Common Are These Patterns?

Figure: cache coverage (%) of Zero, Repeated Values and Other Patterns for libquantum, lbm, mcf, tpch17, sjeng, omnetpp, tpch2, sphinx3, xalancbmk, bzip2, tpch6, leslie3d, apache, gromacs, astar, gobmk, soplex, gcc, hmmer, wrf, h264ref, zeusmp, cactusADM, GemsFDTD and their arithmetic mean. Workloads: SPEC2006, databases, web workloads; 2 MB L2 cache. "Other Patterns" include Narrow Values.

43% of the cache lines belong to key patterns.

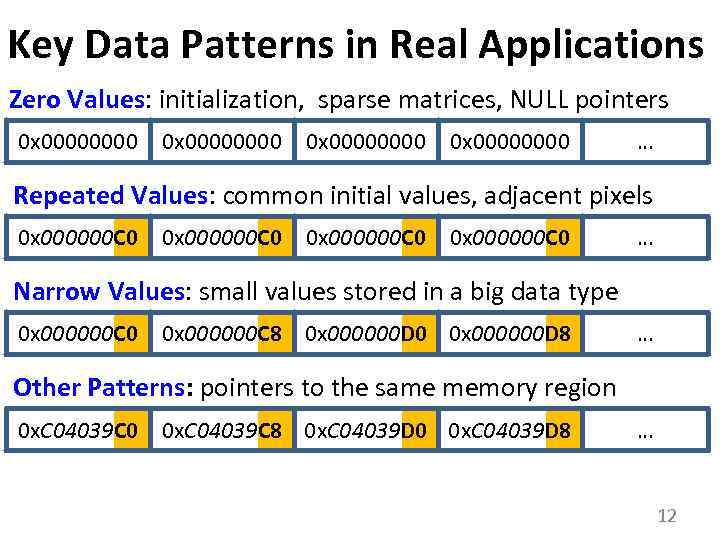


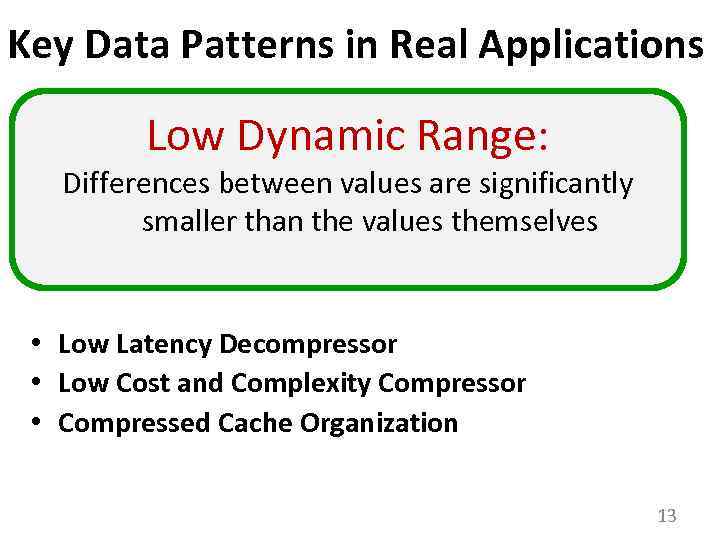

Key Data Patterns in Real Applications

Low Dynamic Range: differences between values are significantly smaller than the values themselves.

- Low Latency Decompressor
- Low Cost and Complexity Compressor
- Compressed Cache Organization

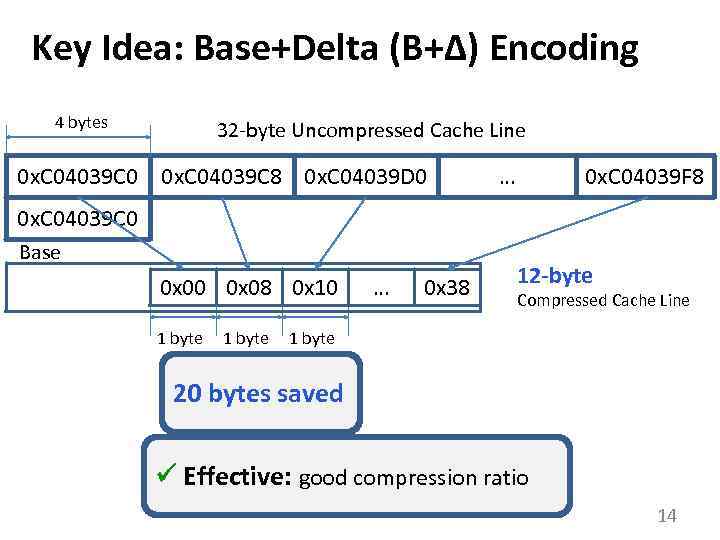

Key Idea: Base+Delta (B+Δ) Encoding

- 32-byte uncompressed cache line of 4-byte values: 0xC04039C0 0xC04039C8 0xC04039D0 … 0xC04039F8
- 12-byte compressed cache line: a 4-byte base (0xC04039C0) followed by 1-byte deltas 0x00 0x08 0x10 … 0x38
- 20 bytes saved
- Effective: good compression ratio

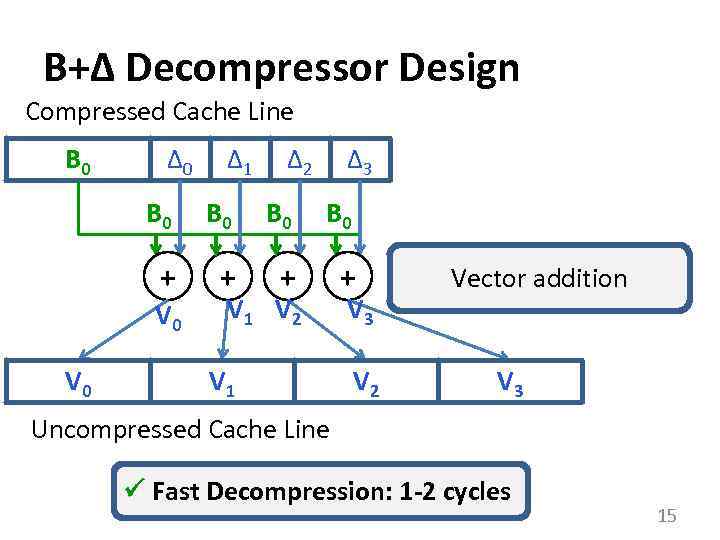
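The encoding on this slide can be sketched in a few lines of C. This is an illustration under stated assumptions, not the published hardware design: the struct `bdelta_line` and the function `bdelta_compress` are hypothetical names, the base is assumed to be the first value of the line, and deltas are assumed to be signed 1-byte values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define WORDS_PER_LINE 8  /* 32-byte line of 4-byte values, as on the slide */

/* Hypothetical layout: one 4-byte base plus eight 1-byte deltas = 12 bytes. */
struct bdelta_line {
    uint32_t base;
    int8_t delta[WORDS_PER_LINE];
};

/* Returns 1 and fills *out if every value lies within a signed 1-byte delta
 * of the first value; returns 0 and leaves *out untouched otherwise. */
int bdelta_compress(const uint32_t line[WORDS_PER_LINE], struct bdelta_line *out)
{
    assert(line != NULL && out != NULL);
    struct bdelta_line tmp;
    tmp.base = line[0];  /* assumption: the first value serves as the base */
    for (size_t i = 0; i < WORDS_PER_LINE; i++) {
        int64_t d = (int64_t)line[i] - (int64_t)tmp.base;
        if (d < INT8_MIN || d > INT8_MAX)
            return 0;    /* not compressible with 1-byte deltas */
        tmp.delta[i] = (int8_t)d;
    }
    *out = tmp;
    return 1;
}
```

For the slide's line 0xC04039C0 … 0xC04039F8 this yields the base 0xC04039C0 and the deltas 0x00, 0x08, …, 0x38, that is 12 bytes instead of 32.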

B+Δ Decompressor Design

Figure: the compressed cache line (base B0 and deltas Δ1, Δ2, Δ3, …) passes through a vector addition of B0 with each delta, which produces the values V0, V1, V2, V3 of the uncompressed cache line.

Fast decompression: 1-2 cycles

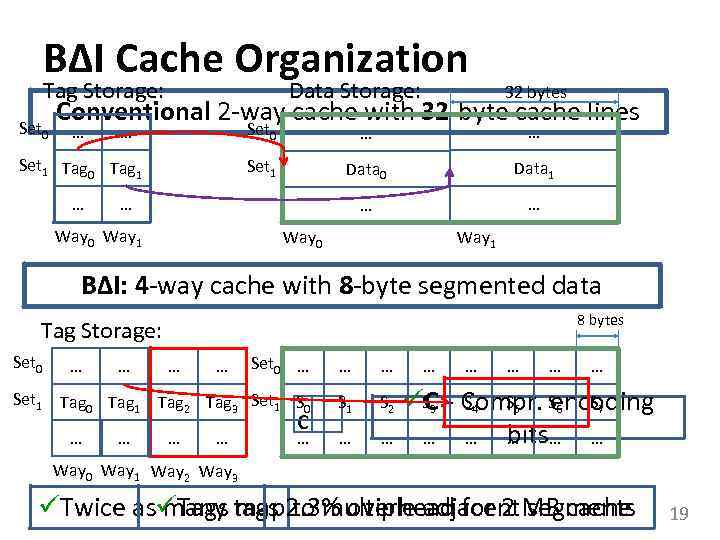
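Decompression is the reverse step: add the base to every delta. The sketch below assumes the same hypothetical 12-byte layout (a 4-byte base plus eight signed 1-byte deltas); the names `bdelta_line` and `bdelta_decompress` are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define WORDS_PER_LINE 8  /* 32-byte line of 4-byte values */

/* Hypothetical compressed layout: 4-byte base plus eight signed 1-byte deltas. */
struct bdelta_line {
    uint32_t base;
    int8_t delta[WORDS_PER_LINE];
};

/* Rebuild the uncompressed line. Each iteration is independent of the others,
 * which is why hardware can perform all additions at once as a vector add. */
void bdelta_decompress(const struct bdelta_line *in, uint32_t out[WORDS_PER_LINE])
{
    assert(in != NULL && out != NULL);
    for (size_t i = 0; i < WORDS_PER_LINE; i++) {
        /* Sign-extend the delta, then add modulo 2^32. */
        out[i] = in->base + (uint32_t)(int32_t)in->delta[i];
    }
}
```

Because no addition depends on another, the software loop maps to a single parallel step in hardware, which fits the 1-2 cycle latency quoted on the slide.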

BΔI Cache Organization

- Conventional 2-way cache with 32-byte cache lines: the tag storage holds Tag0 and Tag1 per set (Way 0, Way 1), and the data storage holds Data0 and Data1 per set, 32 bytes each
- BΔI: 4-way cache with 8-byte segmented data: the tag storage holds Tag0 to Tag3 per set (Way 0 to Way 3), each with compression encoding bits (C), and the data storage holds 8-byte segments S0 to S7 per set
- Twice as many tags
- Tags map to multiple adjacent segments
- 2.3% overhead for 2 MB cache

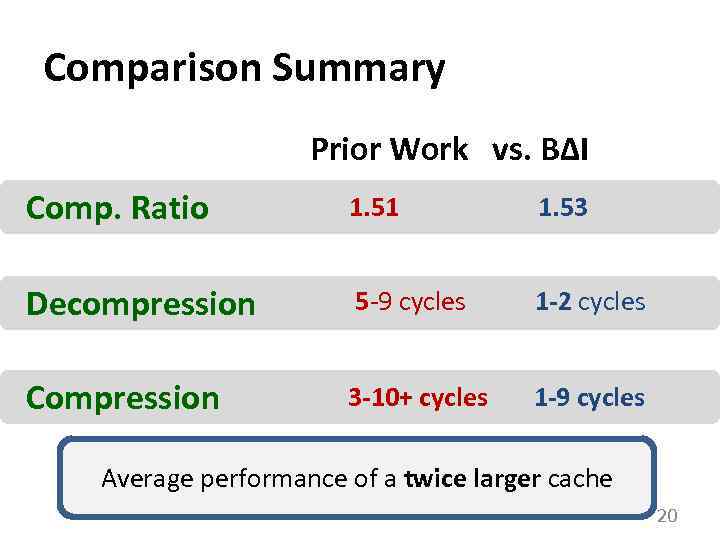
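The segmented organization can be illustrated with a little arithmetic: a compressed line occupies a whole number of 8-byte segments, and a set offers 8 segments and 4 tags. The sketch below uses those numbers from the slide; the function names `segments_needed` and `fits_in_set` are hypothetical, and placement details such as alignment and compaction are ignored.

```c
#include <assert.h>
#include <stddef.h>

#define SEGMENT_BYTES    8  /* segment size from the slide */
#define SEGMENTS_PER_SET 8  /* S0..S7 */
#define TAGS_PER_SET     4  /* Tag0..Tag3 */

/* Number of adjacent 8-byte segments a compressed line occupies. */
size_t segments_needed(size_t compressed_bytes)
{
    return (compressed_bytes + SEGMENT_BYTES - 1) / SEGMENT_BYTES;
}

/* 1 if the given lines can share one set: enough tags and enough segments. */
int fits_in_set(const size_t *compressed_bytes, size_t n_lines)
{
    assert(compressed_bytes != NULL || n_lines == 0);
    if (n_lines > TAGS_PER_SET)
        return 0;
    size_t used = 0;
    for (size_t i = 0; i < n_lines; i++)
        used += segments_needed(compressed_bytes[i]);
    return used <= SEGMENTS_PER_SET;
}
```

Four 12-byte lines need two segments each and fill the set exactly, whereas only two uncompressed 32-byte lines fit, the same as in the conventional 2-way cache.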

Comparison Summary

| | Prior Work | BΔI |
|---|---|---|
| Comp. Ratio | 1.51 | 1.53 |
| Decompression | 5-9 cycles | 1-2 cycles |
| Compression | 3-10+ cycles | 1-9 cycles |

Average performance of a cache twice as large.


2. Compression and Cache Replacement (HPCA 2015)

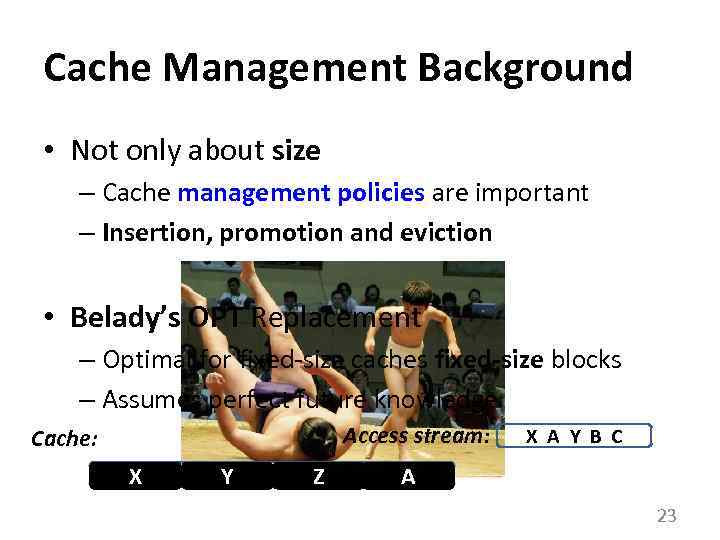

Cache Management Background

- Not only about size
  - Cache management policies are important
  - Insertion, promotion and eviction
- Belady's OPT Replacement
  - Optimal for fixed-size caches with fixed-size blocks
  - Assumes perfect future knowledge

Example: the cache holds X Y Z and the access stream is X A Y B C A.

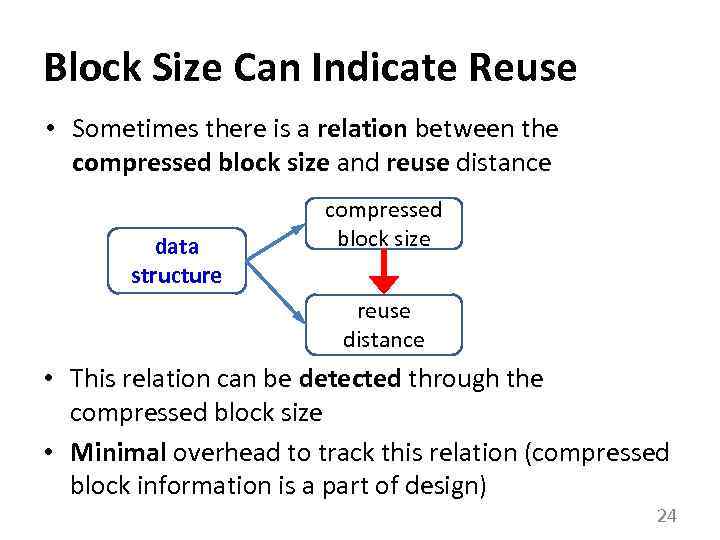
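Belady's OPT evicts the block whose next use lies furthest in the future. The sketch below shows that rule for fixed-size blocks identified by a single character, as in the slide's X, Y, Z example; the function name `opt_victim` and the tie-breaking rule (lowest index wins) are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Index of the cached block whose next use lies furthest in the future.
 * Blocks that never appear again count as furthest; ties go to the lowest index. */
size_t opt_victim(const char *cache, size_t n_cached,
                  const char *future, size_t n_future)
{
    assert(cache != NULL && n_cached > 0);
    size_t victim = 0, furthest = 0;
    for (size_t i = 0; i < n_cached; i++) {
        size_t next = n_future;  /* n_future stands for "never used again" */
        for (size_t j = 0; j < n_future; j++) {
            if (future[j] == cache[i]) { next = j; break; }
        }
        if (i == 0 || next > furthest) {
            victim = i;
            furthest = next;
        }
    }
    return victim;
}
```

The rule needs the whole future access stream, which is the perfect future knowledge the slide mentions, and it treats every block as equally expensive to keep, an assumption that no longer holds once compressed blocks differ in size.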

Block Size Can Indicate Reuse

- Sometimes there is a relation between the compressed block size and the reuse distance, because the data structure determines both
- This relation can be detected through the compressed block size
- Minimal overhead to track this relation (compressed block information is a part of the design)

![Code Example to Support Intuition](https://present5.com/presentation/263803024_453315100/image-22.jpg)

Code Example to Support Intuition

int A[N]; // small indices: compressible
double B[16]; // FP coefficients: incompressible
for (int i=0; i

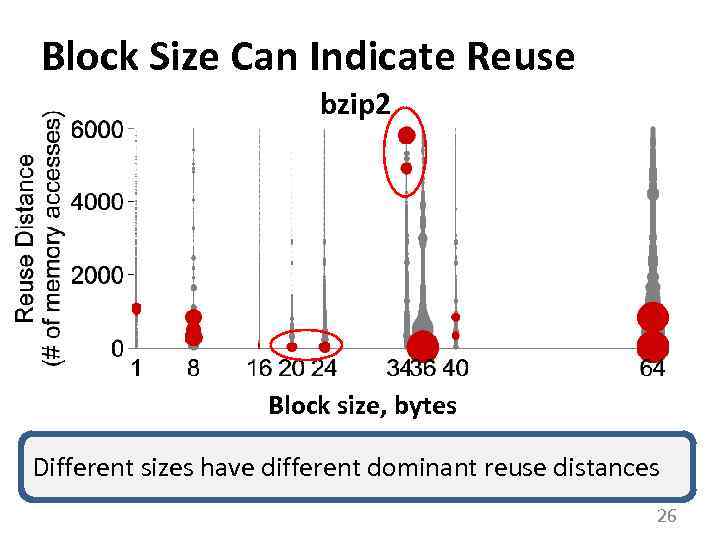

Block Size Can Indicate Reuse

Figure: bzip2, grouped by block size (bytes).

Different sizes have different dominant reuse distances.

Compression-Aware Management Policies (CAMP)

- SIP: Size-based Insertion Policy
- MVE: Minimal-Value Eviction, with $\text{Value} = \dfrac{\text{Probability of reuse}}{\text{Compressed block size}}$
- The benefits are on par with cache compression: a 2X additional increase in capacity

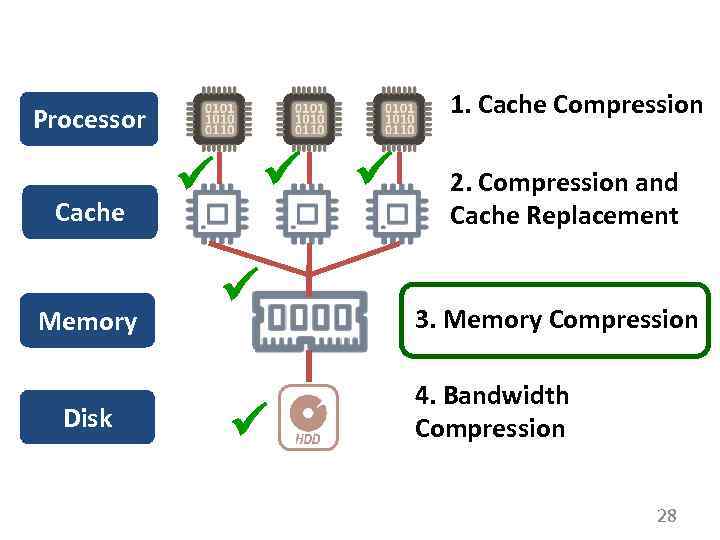
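Minimal-Value Eviction follows directly from the slide's formula: compute Value = probability of reuse / compressed block size for every candidate and evict the block with the smallest value. The sketch below is illustrative only. The struct `cached_block` and the functions `block_value` and `mve_victim` are hypothetical, and the reuse probability is taken as a given input because the slide does not say how it is estimated.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-block bookkeeping. */
struct cached_block {
    double reuse_probability;  /* assumed to be supplied by some predictor */
    size_t compressed_size;    /* bytes, must be nonzero */
};

/* Value = probability of reuse / compressed block size (formula from the slide). */
double block_value(const struct cached_block *b)
{
    assert(b != NULL && b->compressed_size > 0);
    return b->reuse_probability / (double)b->compressed_size;
}

/* Index of the block with the minimal value; ties go to the lowest index. */
size_t mve_victim(const struct cached_block *blocks, size_t n)
{
    assert(blocks != NULL && n > 0);
    size_t victim = 0;
    for (size_t i = 1; i < n; i++) {
        if (block_value(&blocks[i]) < block_value(&blocks[victim]))
            victim = i;
    }
    return victim;
}
```

Under this rule, two blocks with the same reuse probability are not equal: the larger one has the lower value and is evicted first, since it frees more space.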


3. Main Memory Compression (MICRO 2013)

Challenges in Main Memory Compression

1. Address Computation
2. Mapping and Fragmentation

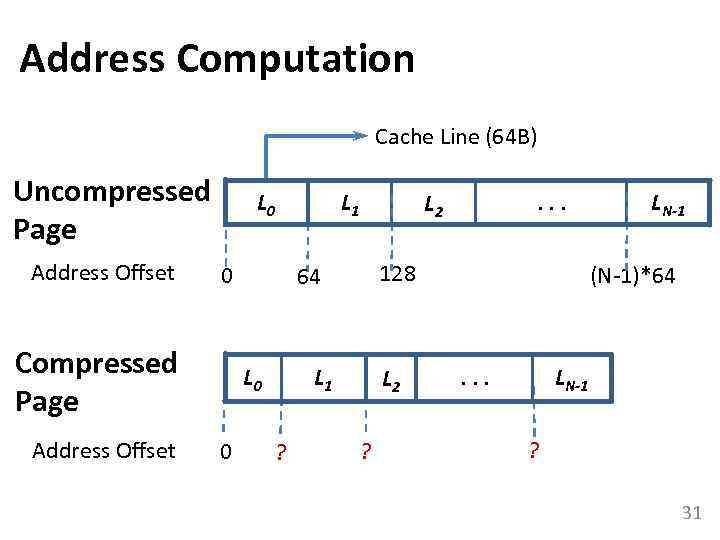

Address Computation

- Uncompressed page with 64 B cache lines L0, L1, L2, …, LN-1: the address offsets are 0, 64, 128, …, (N-1)*64
- Compressed page: L0 starts at offset 0, but the offsets of L1, L2, …, LN-1 are unknown (?)

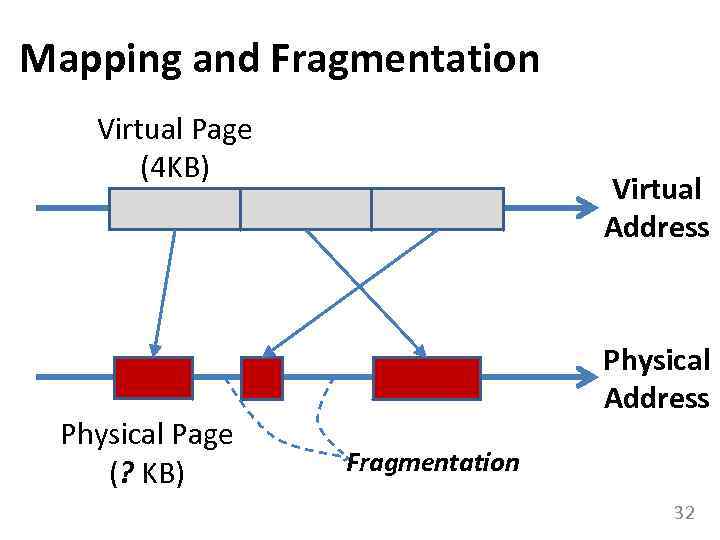

Mapping and Fragmentation

- A virtual page (4 KB) at a virtual address maps to a physical page of unknown size (? KB) at a physical address
- Fragmentation

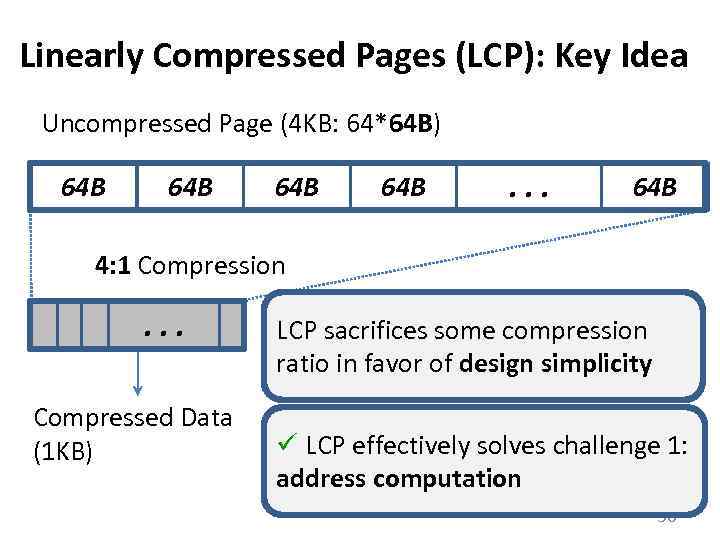

Linearly Compressed Pages (LCP): Key Idea

- Uncompressed page (4 KB: 64*64 B): 64 cache lines of 64 B each
- 4:1 compression yields 1 KB of compressed data
- LCP sacrifices some compression ratio in favor of design simplicity
- LCP effectively solves challenge 1: address computation

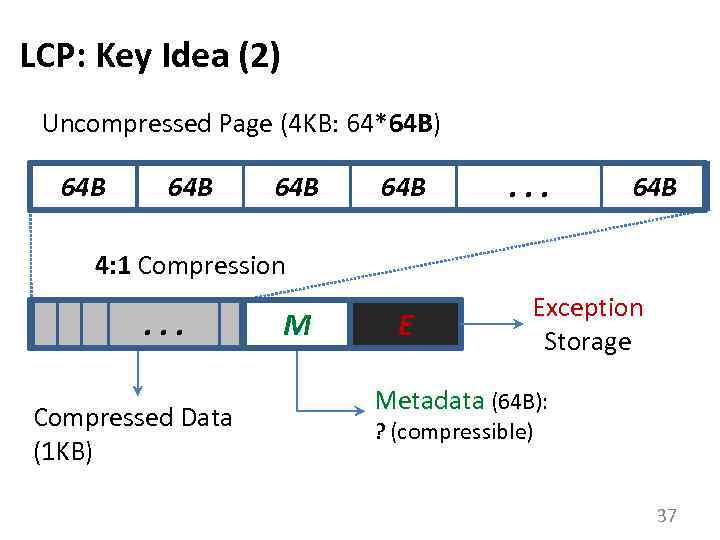
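If every cache line in a page is compressed to the same size, the address of a line is again a simple multiplication. A minimal sketch of that computation follows; the function name `lcp_line_address` and its parameters are hypothetical, and lines stored as exceptions are deliberately not handled.

```c
#include <assert.h>
#include <stdint.h>

#define LINES_PER_PAGE 64  /* 4 KB page of 64 B cache lines */

/* Address of cache line `line_index` in a page whose lines all occupy
 * `compressed_line_bytes` bytes (16 for the slide's 4:1 example). */
uint64_t lcp_line_address(uint64_t page_base, unsigned line_index,
                          unsigned compressed_line_bytes)
{
    assert(line_index < LINES_PER_PAGE);
    return page_base + (uint64_t)line_index * compressed_line_bytes;
}
```

With 16-byte compressed lines, line 3 of a page at 0x1000 sits at 0x1030. With `compressed_line_bytes` set to 64 the same function reproduces the uncompressed offsets 0, 64, 128, ….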

LCP: Key Idea (2)

- Uncompressed page (4 KB: 64*64 B) with 4:1 compression yields 1 KB of compressed data
- The compressed data is followed by metadata (M) and exception storage (E)
- Metadata (64 B): is each cache line compressible?

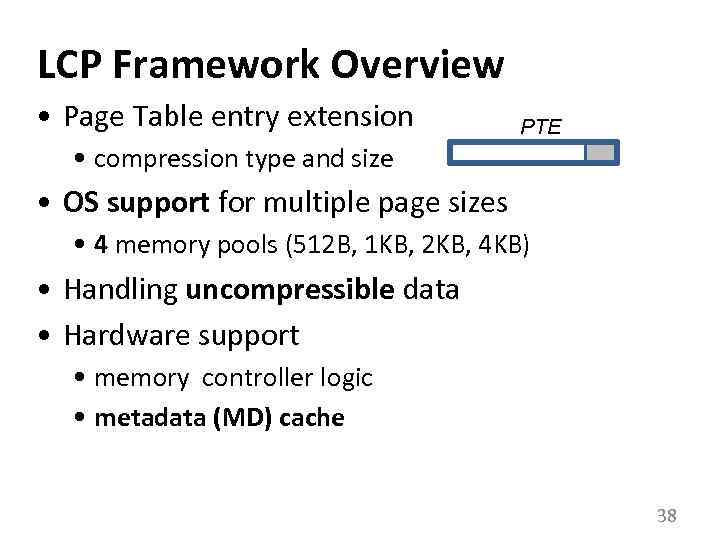

LCP Framework Overview

- Page Table entry (PTE) extension
  - compression type and size
- OS support for multiple page sizes
  - 4 memory pools (512 B, 1 KB, 2 KB, 4 KB)
- Handling uncompressible data
- Hardware support
  - memory controller logic
  - metadata (MD) cache

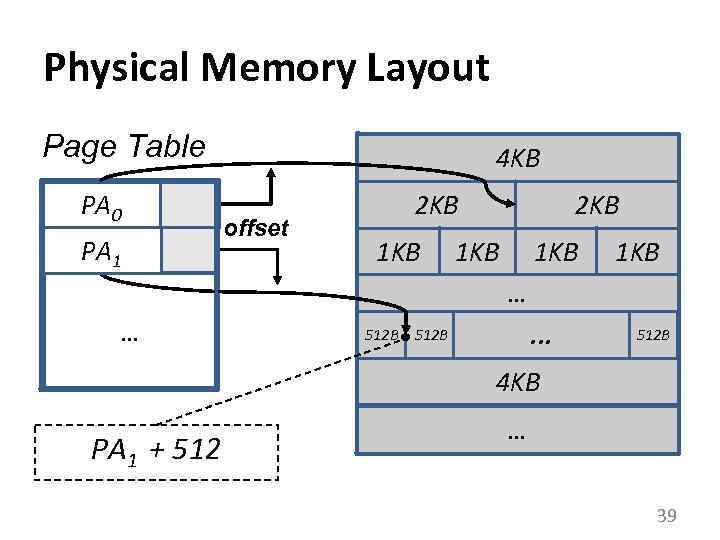
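One way to read the four memory pools is that a compressed page is placed in the smallest pool that can hold it. The sketch below shows that selection; it is an assumption about the policy rather than a description of the actual OS support, and the function name `lcp_pool_size` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Smallest pool page size (512 B, 1 KB, 2 KB or 4 KB, from the slide) that
 * holds a page occupying `page_bytes` after compression. The caller is assumed
 * to include metadata and exception storage in `page_bytes`. */
size_t lcp_pool_size(size_t page_bytes)
{
    static const size_t pools[] = {512, 1024, 2048, 4096};
    assert(page_bytes <= 4096);
    for (size_t i = 0; i < sizeof pools / sizeof pools[0]; i++) {
        if (page_bytes <= pools[i])
            return pools[i];
    }
    return 4096;  /* not reached when the assertion holds */
}
```

A page that compresses to 1025 bytes lands in the 2 KB pool, so part of the compression gain is lost to rounding; restricting the sizes to four pools is one instance of trading compression ratio for simplicity.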

Physical Memory Layout

Figure: page table entries hold physical addresses (PA0, PA1, …) and an offset. Physical memory is divided into regions of 4 KB, 2 KB, 1 KB and 512 B pages, so a compressed page is located at an address such as PA1 + 512.

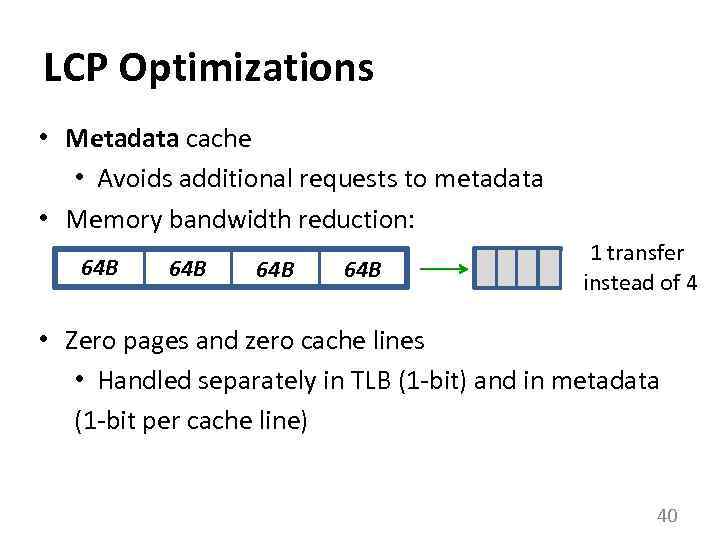

LCP Optimizations

- Metadata cache
  - Avoids additional requests to metadata
- Memory bandwidth reduction
  - Compressed 64 B cache lines: 1 transfer instead of 4
- Zero pages and zero cache lines
  - Handled separately in TLB (1 bit) and in metadata (1 bit per cache line)

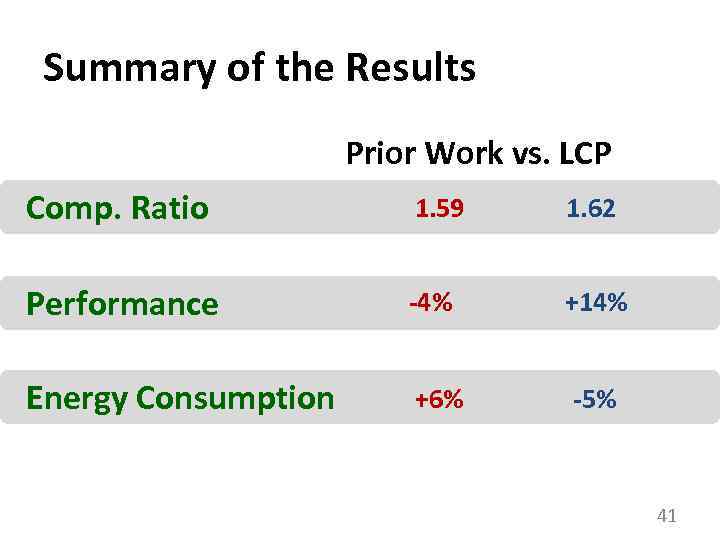

Summary of the Results

| | Prior Work | LCP |
|---|---|---|
| Comp. Ratio | 1.59 | 1.62 |
| Performance | -4% | +14% |
| Energy Consumption | +6% | -5% |


4. Energy-Efficient Bandwidth Compression (HPCA 2016, CAL 2015)

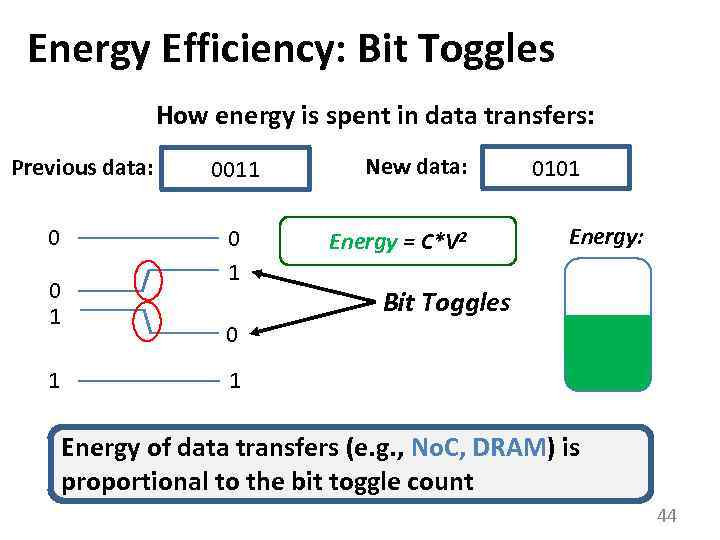

Energy Efficiency: Bit Toggles

How energy is spent in data transfers:

- Previous data: 0011
- New data: 0101
- Each bit toggle (0 to 1 or 1 to 0) costs energy: $E = C V^2$

Energy of data transfers (e.g., NoC, DRAM) is proportional to the bit toggle count.

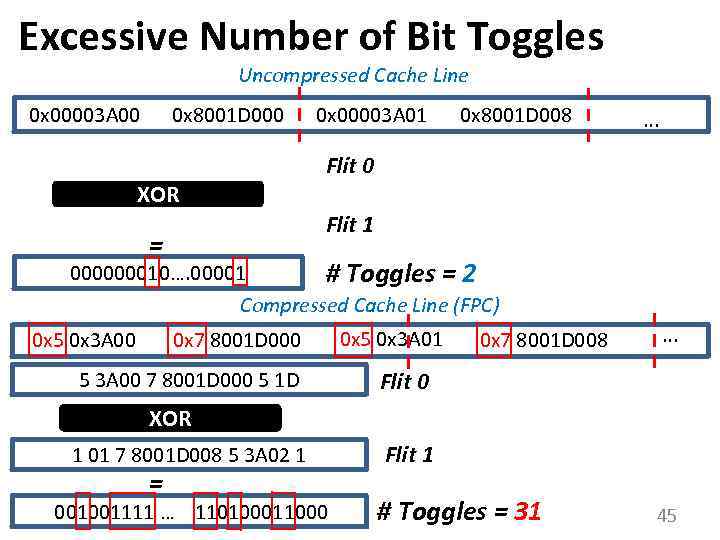
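The toggle count between two consecutive transfers is the number of set bits in their XOR. A minimal sketch, with the hypothetical function name `toggle_count` and flits represented as byte buffers of equal length:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Number of wires that change value when `next` follows `previous`
 * on the same link: the population count of previous XOR next. */
size_t toggle_count(const uint8_t *previous, const uint8_t *next, size_t n_bytes)
{
    assert(previous != NULL && next != NULL);
    size_t toggles = 0;
    for (size_t i = 0; i < n_bytes; i++) {
        uint8_t changed = (uint8_t)(previous[i] ^ next[i]);
        while (changed) {
            changed &= (uint8_t)(changed - 1);  /* clear the lowest set bit */
            toggles++;
        }
    }
    return toggles;
}
```

For the slide's example, 0011 followed by 0101 differs in two bit positions, so the function reports 2 toggles.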

Excessive Number of Bit Toggles

- Uncompressed cache line: 0x00003A00 0x8001D000 0x00003A01 0x8001D008 …
  - Flit 0 XOR Flit 1 = 000000010….00001
  - # Toggles = 2
- Compressed cache line (FPC): 0x5 0x3A00 0x7 8001D000 0x5 0x3A01 0x7 8001D008 …
  - Flit 0: 5 3A00 7 8001D000 5 1D
  - Flit 1: 1 01 7 8001D008 5 3A02 1
  - Flit 0 XOR Flit 1 = 001001111 … 110100011000
  - # Toggles = 31

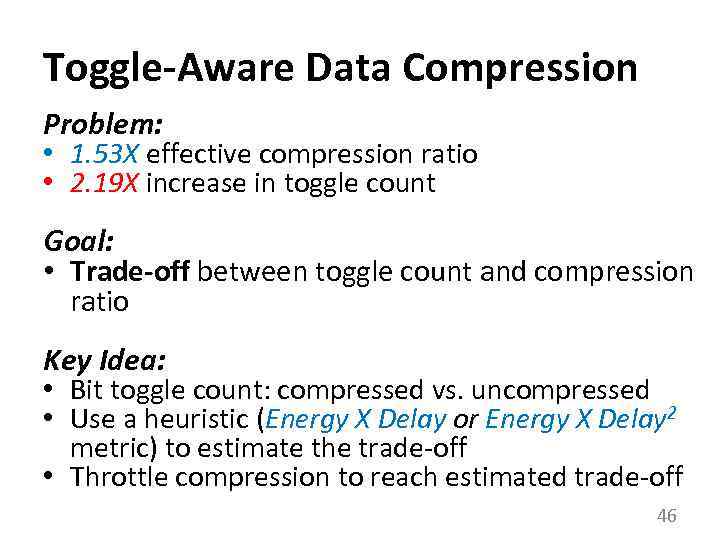

Toggle-Aware Data Compression

Problem:

- 1.53X effective compression ratio
- 2.19X increase in toggle count

Goal:

- Trade-off between toggle count and compression ratio

Key Idea:

- Bit toggle count: compressed vs. uncompressed
- Use a heuristic (Energy × Delay or Energy × Delay² metric) to estimate the trade-off
- Throttle compression to reach the estimated trade-off
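The throttling decision can be sketched as a comparison of the Energy × Delay product for the two forms of a cache line. Everything in this sketch is an assumption made for illustration: energy is taken to be proportional to the toggle count, delay to the number of flits transferred, and the names `transfer_cost`, `energy_delay` and `send_compressed` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical cost of sending one cache line over a link. */
struct transfer_cost {
    uint64_t toggles;  /* assumed proxy for energy */
    uint64_t flits;    /* assumed proxy for delay */
};

/* Energy x Delay product under the proxies above. */
uint64_t energy_delay(struct transfer_cost c)
{
    return c.toggles * c.flits;
}

/* 1 if the compressed form has the lower Energy x Delay product. */
int send_compressed(struct transfer_cost uncompressed,
                    struct transfer_cost compressed)
{
    assert(uncompressed.flits > 0 && compressed.flits > 0);
    return energy_delay(compressed) < energy_delay(uncompressed);
}
```

With these proxies, a line that needs 4 flits and 2 toggles uncompressed but 2 flits and 31 toggles compressed is sent uncompressed, while a line that drops from 4 flits to 2 with only a modest rise in toggles is sent compressed. Replacing the product with toggles × flits² would give the Energy × Delay² variant.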
