Future Lessons from 40 years of Environmental Modelling

24576-beven_rshu_2013.ppt

- Number of slides: 47

Future Lessons from 40 years of Environmental Modelling Keith Beven

My background

- Wrote first hydrological model as an undergraduate at Bristol University in 1970 (programmed in Algol, stored on cards)
- Did a PhD at University of East Anglia on distributed physically-based models, almost the first application of finite element models in hydrology. Applied using only measured parameters and failed miserably (see Beven, HESS, 2001) (programmed in Fortran, stored on cards)
- Developed Topmodel at Leeds University with Mike Kirkby (programmed in Fortran, edited on teletype)
- One of the team at Institute of Hydrology developing the SHE model (programmed in English, Danish & French Fortran, edited on screen)

My background

- Started doing Monte Carlo experiments on models at the University of Virginia in 1980 (start of GLUE and equifinality concepts)
- Used Monte Carlo in continuous simulation for flood frequency estimation
- Rewrote the Institute of Hydrology distributed model (IHDM) on return to England
- Moved to Lancaster in 1985, continued GLUE work, first publication with Andrew Binley in 1992
- Gradually learning more about the uncertainty problem…
- Work has been summarised in two books

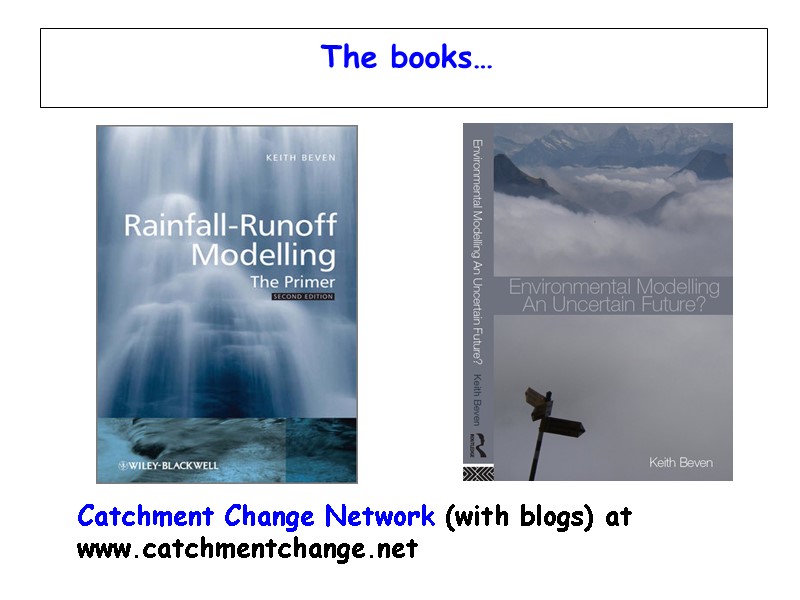

The books… Catchment Change Network (with blogs) at www.catchmentchange.net

Summary of the Lessons

1. All models are wrong, some might be useful (for either hypothesis testing or decision making)
2. Many models might be acceptable representations of limited data
3. Better process representations might still be developed
4. Decisions based on model predictions might be better if we allow for uncertainty
5. Lack of knowledge (epistemic error) is often more important than random (aleatory) errors
6. Statistical likelihoods (based on aleatory assumptions) will often over-condition model fitting when epistemic errors are important

Lesson 1: All models are wrong, some might be useful (for either hypothesis testing or decision making)

The purposes of environmental models

- Modelling as formulating understanding and hypothesis testing
- Modelling for policy / decision making
  - IPCC4 Climate Change Report
  - Stern Report on Impacts of Climate Change
  - Water Framework Directive river basin management plans
  - Managing eutrophication in the Baltic Sea
  - Predicting pollutant dispersion in rivers, groundwaters and atmosphere for effluent licensing
  - etc.

Hydrological Physics

Many years ago the concept of the physically-based model was popular…

“As scientists we are intrigued by the possibility of assembling our concepts and bits of knowledge into a neat package to show that we do, after all, understand our science and its interrelated phenomena” (M. A. Kohler, 1969)

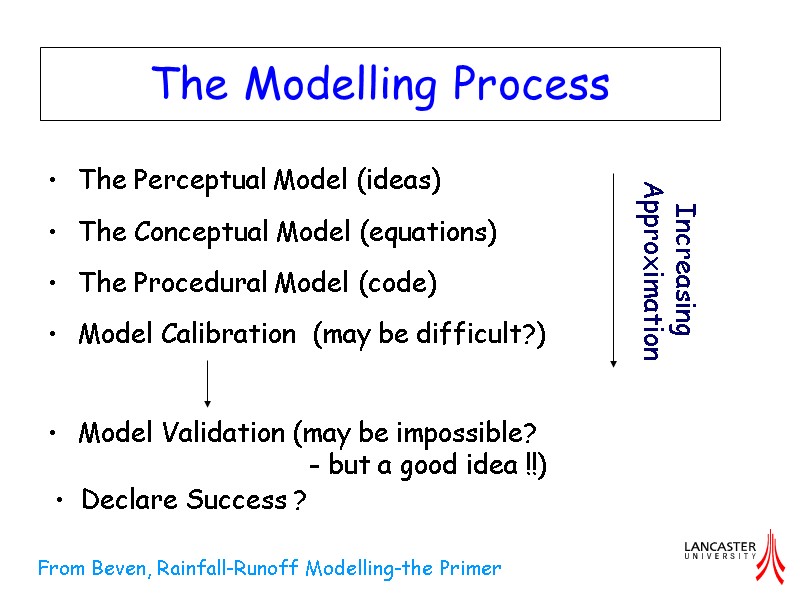

The Modelling Process

- The Perceptual Model (ideas)
- The Conceptual Model (equations)
- The Procedural Model (code)
- Model Calibration (may be difficult?)
- Model Validation (may be impossible? But a good idea!)
- Declare Success?

From Beven, Rainfall-Runoff Modelling: The Primer

Types of model

Not concerned here with the nature of the model of interest, but it may be:

- Deterministic or stochastic
- Lumped or distributed
- Linear or nonlinear

Linear, lumped, deterministic models (small numbers of parameters, analytical uncertainty propagation) will be easier to analyse than nonlinear, distributed, stochastic models (Monte Carlo experiments?)
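The contrast between analytical propagation and Monte Carlo experiments can be sketched as follows. This is a minimal illustration, not taken from the talk: the two toy models, the independent Gaussian parameter errors and all numerical values are assumptions chosen for the example.

```python
import math
import random
import statistics


def linear_model(rain, a, b):
    """Hypothetical linear model: output = a * rain + b."""
    return a * rain + b


def power_model(rain, a, b):
    """Hypothetical nonlinear model: output = a * rain ** b."""
    return a * rain ** b


def analytical_linear(rain, mean_a, sd_a, mean_b, sd_b):
    """Exact mean and standard deviation of the linear model output,
    assuming independent errors in a and b."""
    mean_q = mean_a * rain + mean_b
    sd_q = math.sqrt((rain * sd_a) ** 2 + sd_b ** 2)
    return mean_q, sd_q


def monte_carlo(model, rain, mean_a, sd_a, mean_b, sd_b, n=20000, seed=1):
    """Sample the parameters, run the model for each sample and
    summarise the resulting outputs."""
    rng = random.Random(seed)
    outputs = [
        model(rain, rng.gauss(mean_a, sd_a), rng.gauss(mean_b, sd_b))
        for _ in range(n)
    ]
    return statistics.fmean(outputs), statistics.stdev(outputs)


# Linear case: the analytical result and the sampled result should agree.
print(analytical_linear(10.0, 0.5, 0.05, 1.0, 0.2))
print(monte_carlo(linear_model, 10.0, 0.5, 0.05, 1.0, 0.2))

# Nonlinear case: no simple closed form, so sampling is the practical route.
print(monte_carlo(power_model, 10.0, 0.5, 0.05, 1.0, 0.2))
```

For the linear model the sampled statistics only confirm what the formula already gives; for the nonlinear model the sampling is doing the real work, which is why run time becomes the limiting factor for expensive distributed models.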

Hydrological Physics

“The modelling results were never published. They were simply not good enough. The model did not reproduce the stream discharges, it did not reproduce the measured water table levels, it did not reproduce the observed heterogeneity of inputs into the stream from the hillslopes (Fig. 2). It was far easier at the time to publish the results of hypothetical simulations.” (Keith Beven, HESS, 2001)

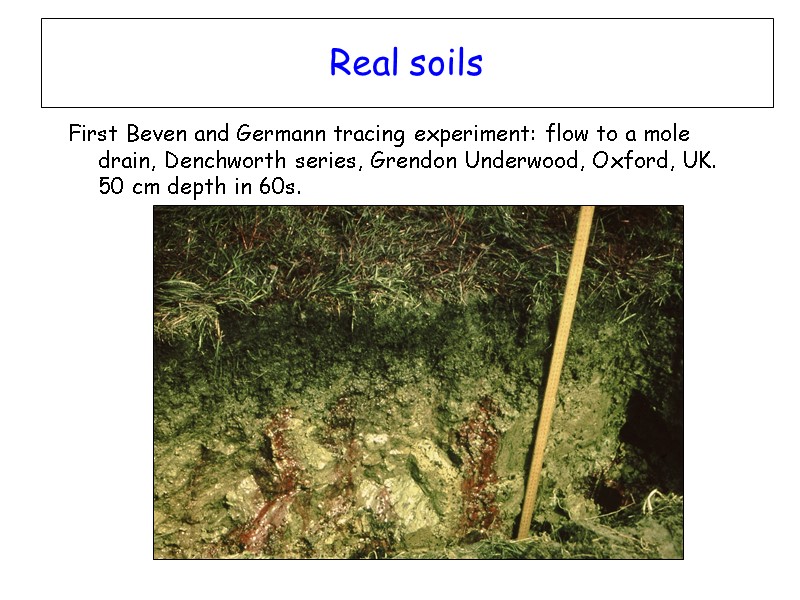

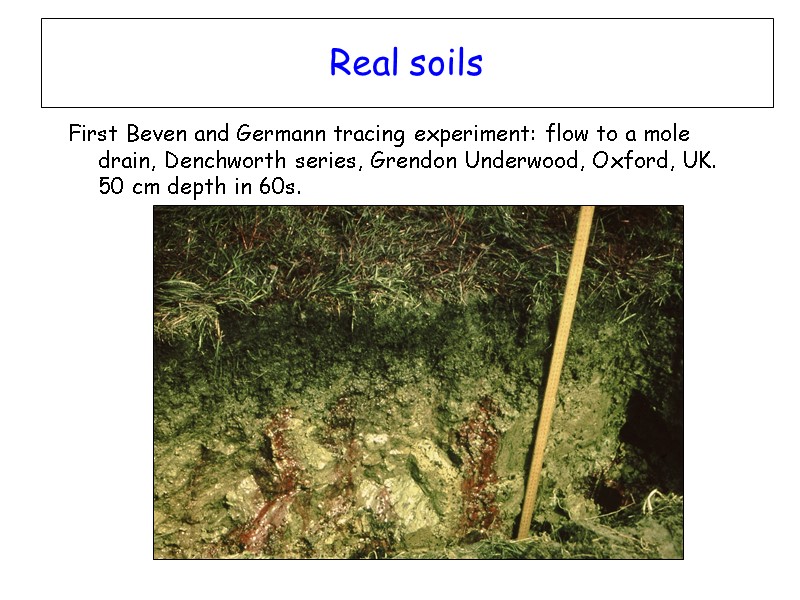

Real soils First Beven and Germann tracing experiment: flow to a mole drain, Denchworth series, Grendon Underwood, Oxford, UK. 50 cm depth in 60s.

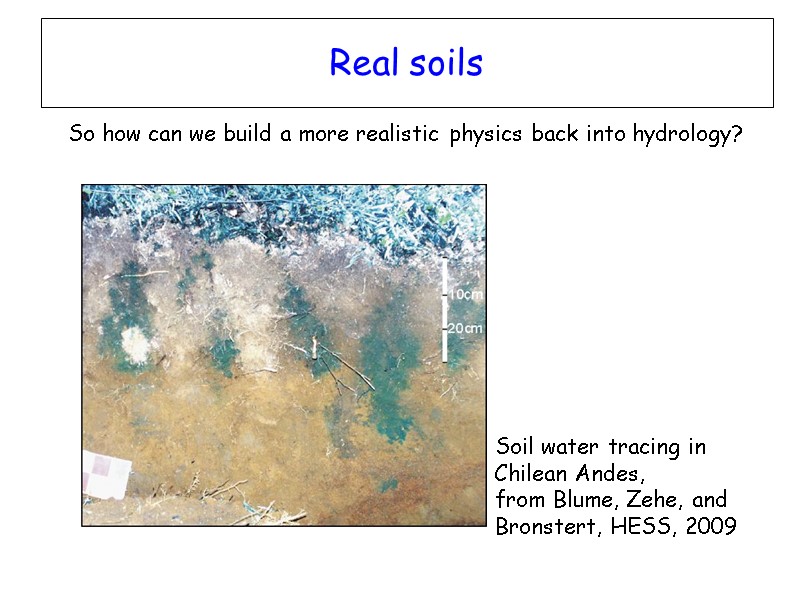

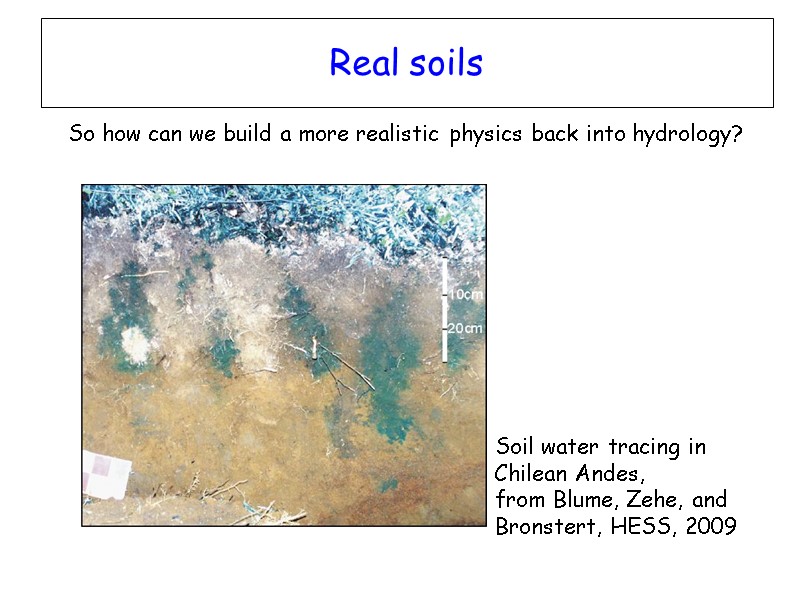

Real soils – the perceptual model

- Soil structure is important – both macropores and generation of fingering (Beven and Germann, WRR, 1982)
- Expectation that there will not be equilibrium gradients or fluxes (except perhaps after significant periods of drainage)
- Evidence for preferential flows and by-passing – including dye tracing and apparent negative conductivity values in field profile measurements
- Textbook Darcy-Buckingham-Richards theory does not hold under all conditions (even if still widely used) – still scope for developing better process representations
- At any model grid scale there should be hysteresis effects for wetting and drying that will be scale dependent

Lesson 2: Many models might be acceptable representations of limited data

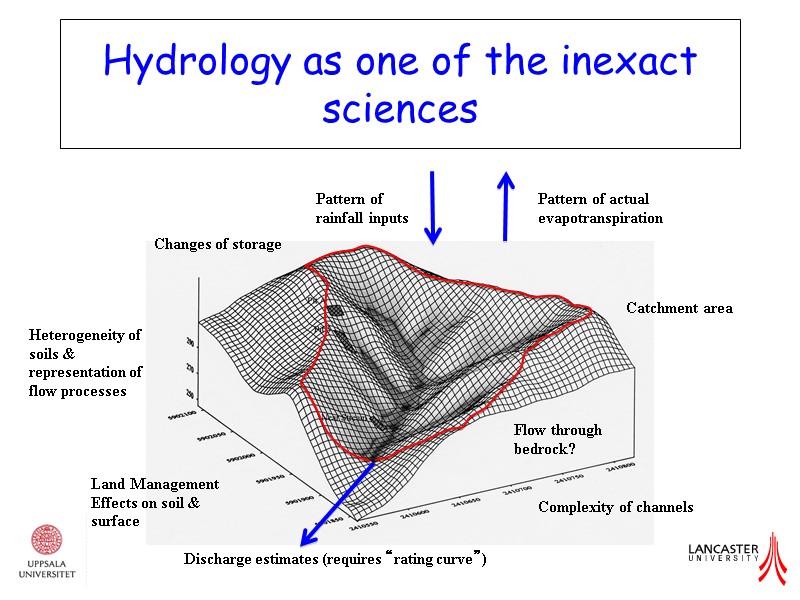

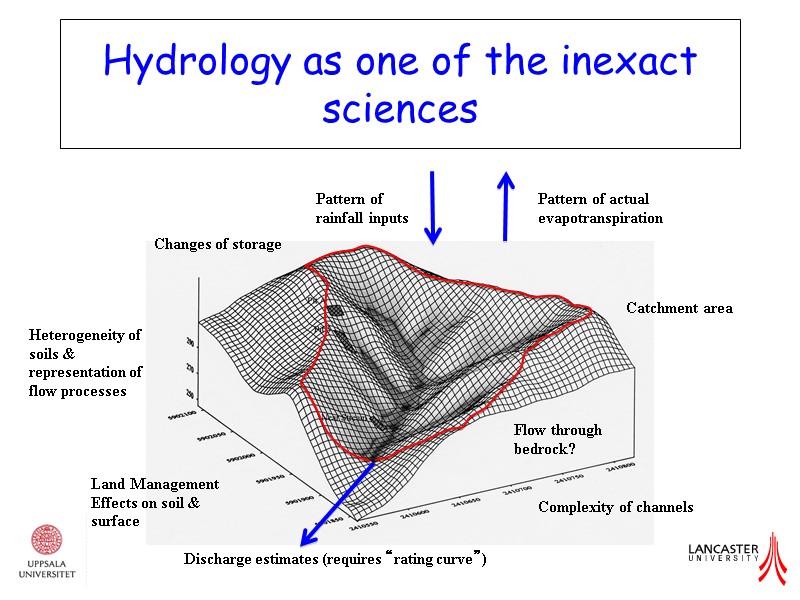

Hydrology as one of the inexact sciences

- Pattern of rainfall inputs
- Pattern of actual evapotranspiration
- Discharge estimates (requires “rating curve”)
- Catchment area
- Changes of storage
- Heterogeneity of soils & representation of flow processes
- Flow through bedrock?
- Land management effects on soil & surface
- Complexity of channels

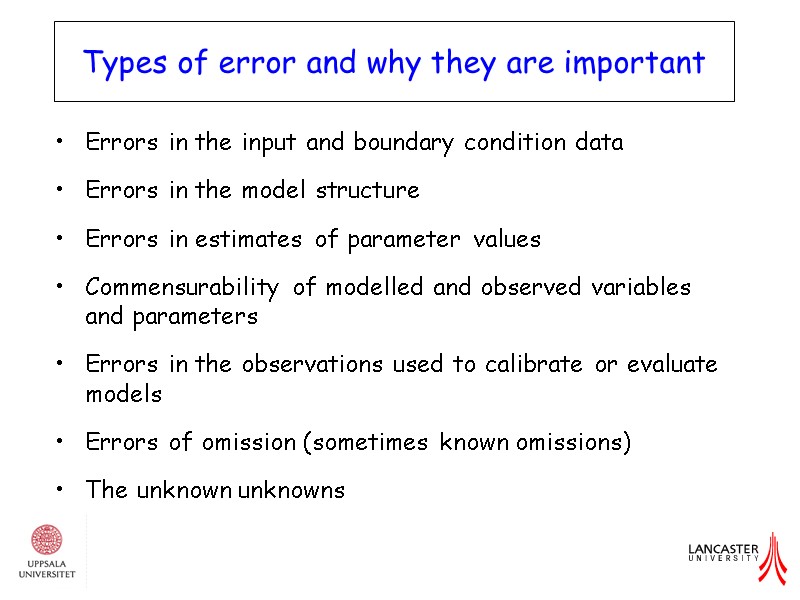

Types of error and why they are important

- Errors in the input and boundary condition data
- Errors in the model structure
- Errors in estimates of parameter values
- Commensurability of modelled and observed variables and parameters
- Errors in the observations used to calibrate or evaluate models
- Errors of omission (sometimes known omissions)
- The unknown unknowns

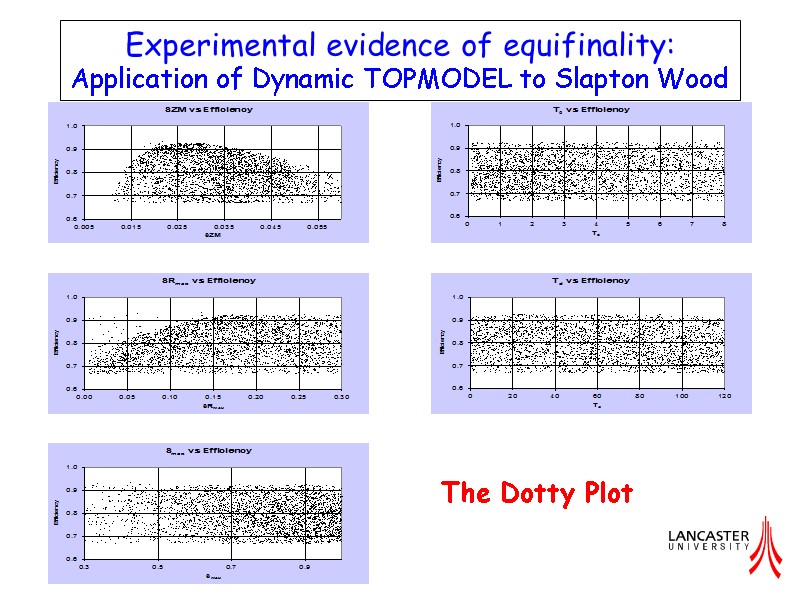

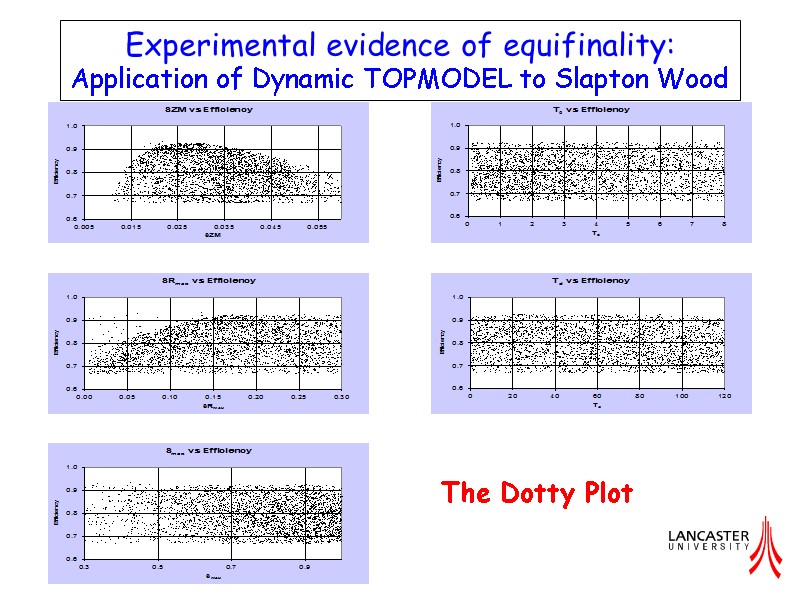

Experimental evidence of equifinality: Application of Dynamic TOPMODEL to Slapton Wood The Dotty Plot

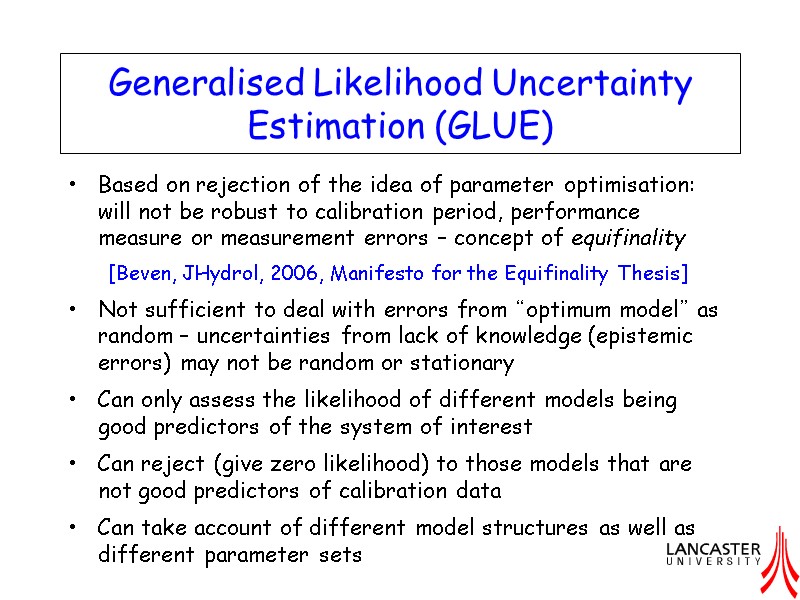

Generalised Likelihood Uncertainty Estimation (GLUE)

- Based on rejection of the idea of parameter optimisation: will not be robust to calibration period, performance measure or measurement errors – concept of equifinality [Beven, JHydrol, 2006, Manifesto for the Equifinality Thesis]
- Not sufficient to deal with errors from “optimum model” as random – uncertainties from lack of knowledge (epistemic errors) may not be random or stationary
- Can only assess the likelihood of different models being good predictors of the system of interest
- Can reject (give zero likelihood to) those models that are not good predictors of calibration data
- Can take account of different model structures as well as different parameter sets

Elements of GLUE

- Choose prior distributions for effective parameter values
- Choose sampling strategy for priors
- Choose likelihood measure(s) and limits of acceptability for acceptable (behavioural) models
- Choose way of combining likelihood measures in multicriteria evaluations or in updating over multiple calibration periods (e.g. Bayes equation or other choice)
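The elements listed above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not the GLUE software or any published application: the single linear store model, the uniform priors, the Nash-Sutcliffe efficiency as the informal likelihood measure, the 0.7 acceptability threshold and the synthetic "observations" are all hypothetical choices made for the example.

```python
import random


def linear_store(rain, k, s0):
    """Hypothetical toy model: a single store draining at rate k per step."""
    storage, flows = s0, []
    for r in rain:
        storage += r
        q = k * storage
        storage -= q
        flows.append(q)
    return flows


def nash_sutcliffe(sim, obs):
    """Nash-Sutcliffe efficiency, used here as an informal likelihood measure."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((s - o) ** 2 for s, o in zip(sim, obs))
    var = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / var


def weighted_quantile(values, weights, q):
    """Smallest value whose cumulative normalised weight reaches q."""
    pairs = sorted(zip(values, weights))
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight
        if cumulative >= q:
            return value
    return pairs[-1][0]


def glue(rain, obs, n_samples=2000, threshold=0.7, seed=42):
    rng = random.Random(seed)
    behavioural = []
    for _ in range(n_samples):
        # Sample from uniform priors (assumed ranges).
        k = rng.uniform(0.01, 0.9)
        s0 = rng.uniform(0.0, 50.0)
        sim = linear_store(rain, k, s0)
        score = nash_sutcliffe(sim, obs)
        # Limit of acceptability: non-behavioural runs get zero likelihood.
        if score > threshold:
            behavioural.append((score, k, s0, sim))
    if not behavioural:
        return [], [], []
    total = sum(run[0] for run in behavioural)
    weights = [run[0] / total for run in behavioural]
    # Likelihood-weighted 5% and 95% prediction bounds at each time step.
    bounds = []
    for t in range(len(rain)):
        values = [run[3][t] for run in behavioural]
        bounds.append((weighted_quantile(values, weights, 0.05),
                       weighted_quantile(values, weights, 0.95)))
    return behavioural, weights, bounds


# Synthetic data for demonstration only.
data_rng = random.Random(0)
rain = [data_rng.choice([0.0, 0.0, 5.0, 20.0]) for _ in range(60)]
obs = [q * data_rng.uniform(0.9, 1.1) for q in linear_store(rain, 0.3, 10.0)]

behavioural, weights, bounds = glue(rain, obs)
print(len(behavioural), "behavioural parameter sets retained")
```

Plotting the score of each sampled run against each parameter value would give the kind of dotty plot used to show equifinality: many different parameter sets reach similar performance.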

Published Applications of GLUE

- Rainfall-runoff modelling
- Modelling catchment geochemistry
- Modelling flood frequency and flood inundation
- Modelling river dispersion
- Modelling soil erosion
- Modelling land surface to atmosphere fluxes
- Modelling atmospheric deposition and critical loads
- Modelling groundwater capture zones
- Modelling groundwater recharge
- Modelling water stress cavitation and tree death
- Modelling forest fires

Lesson 3: Better process representations might still be developed

Real soils So how can we build a more realistic physics back into hydrology? Soil water tracing in Chilean Andes, from Blume, Zehe, and Bronstert, HESS, 2009

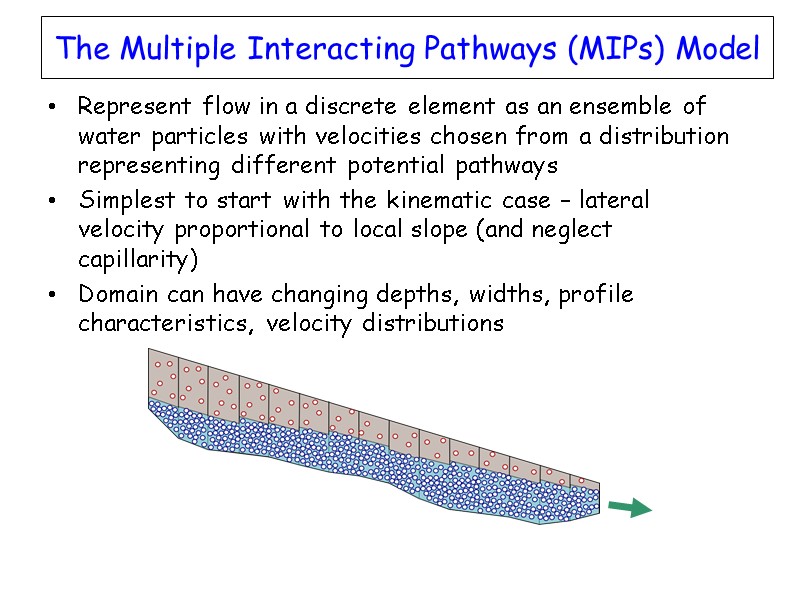

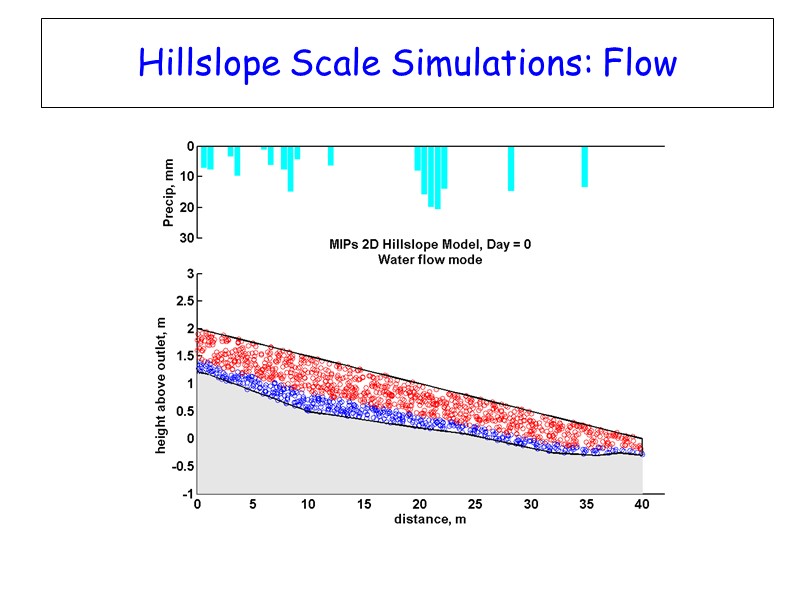

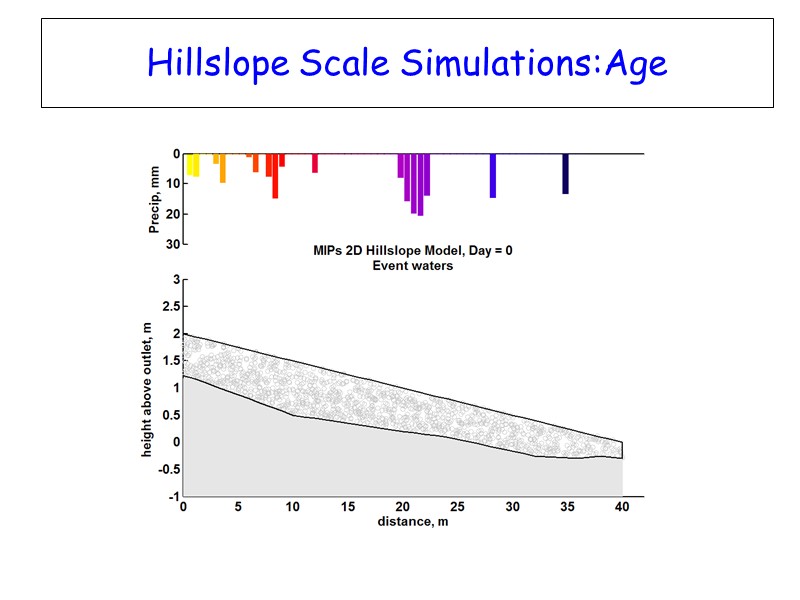

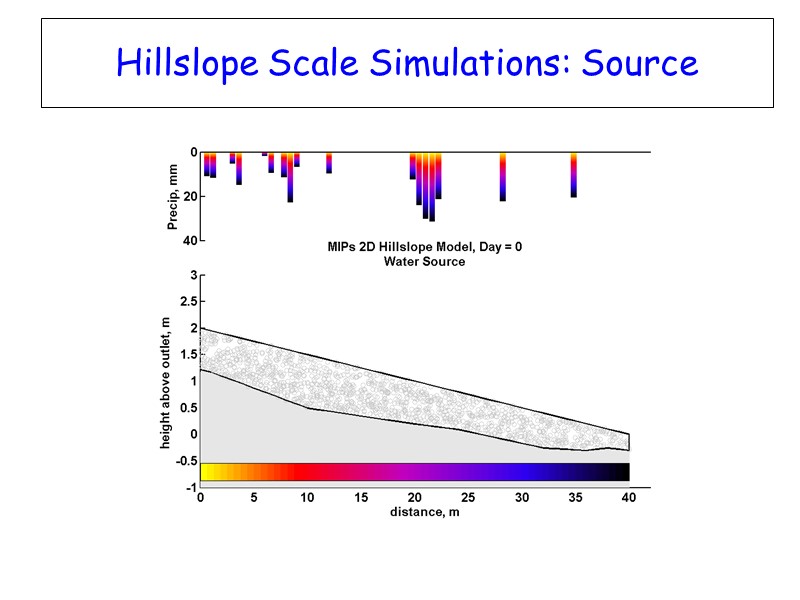

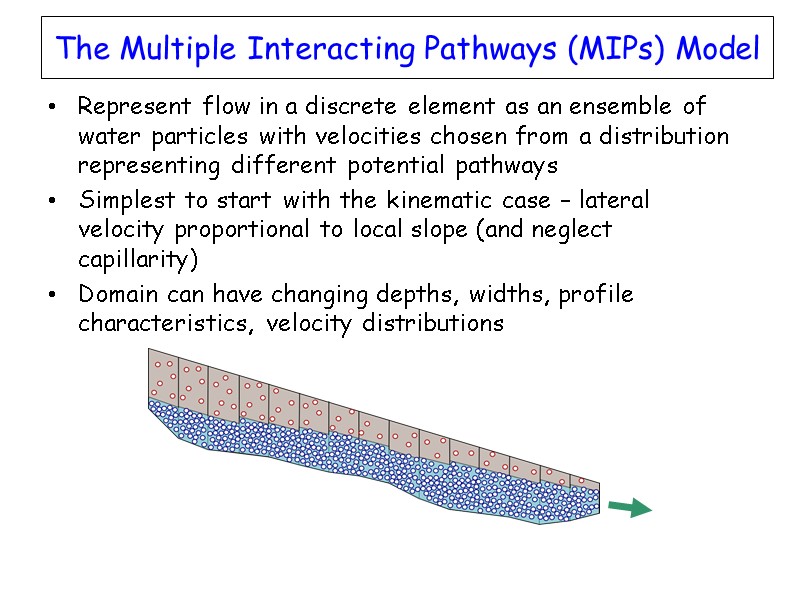

The Multiple Interacting Pathways (MIPs) Model

- Represent flow in a discrete element as an ensemble of water particles with velocities chosen from a distribution representing different potential pathways
- Simplest to start with the kinematic case – lateral velocity proportional to local slope (and neglect capillarity)
- Domain can have changing depths, widths, profile characteristics, velocity distributions
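The particle idea described above can be illustrated with a very small sketch. It is not the MIPs code of Davies et al.: the uniform initial positions, the exponential velocity distribution, the fixed slope and every parameter value are assumptions for illustration, and the exchange between pathways (the "interacting" part) is left out entirely.

```python
import random


def particle_hillslope(n_particles=2000, length=50.0, slope=0.1,
                       mean_speed=5.0, dt=1.0, n_steps=200, seed=7):
    """Move water particles downslope and count arrivals at the outlet.

    Each particle keeps one velocity for the whole run, drawn from an
    exponential distribution and scaled by the local slope (kinematic case).
    """
    rng = random.Random(seed)
    positions = [rng.uniform(0.0, length) for _ in range(n_particles)]
    velocities = [slope * rng.expovariate(1.0 / mean_speed)
                  for _ in range(n_particles)]
    active = list(range(n_particles))
    outflow = []  # number of particles leaving the hillslope per time step
    for _ in range(n_steps):
        still_active = []
        exited = 0
        for i in active:
            positions[i] += velocities[i] * dt
            if positions[i] >= length:
                exited += 1
            else:
                still_active.append(i)
        active = still_active
        outflow.append(exited)
    return outflow, len(active)


outflow, remaining = particle_hillslope()
print("particles out:", sum(outflow), "still in the hillslope:", remaining)
```

Because each particle carries its own identity, the same bookkeeping can record the entry time and origin of every particle, which is what makes age and source distributions available alongside the flow.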

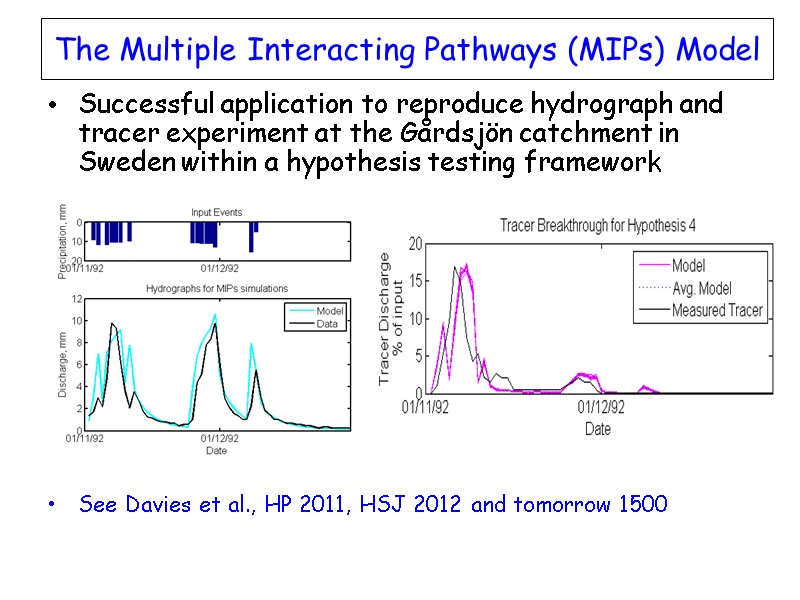

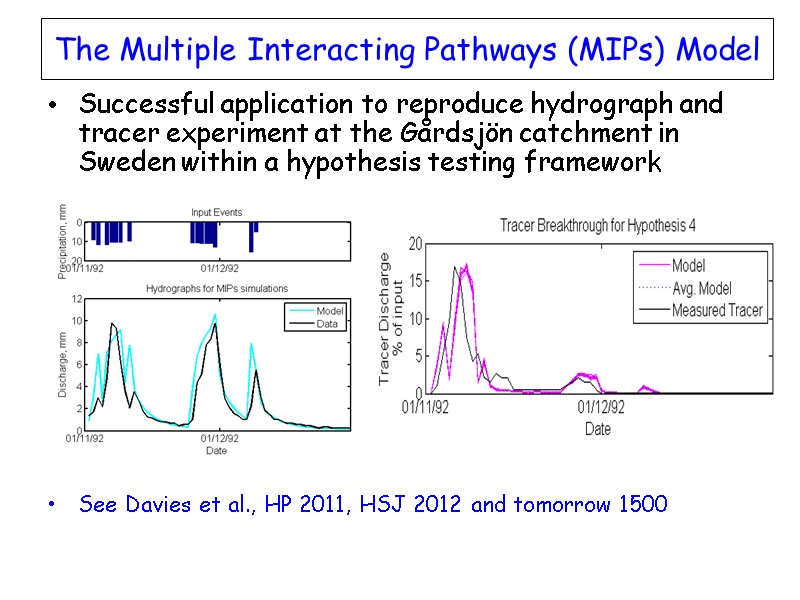

The Multiple Interacting Pathways (MIPs) Model Successful application to reproduce hydrograph and tracer experiment at the Gårdsjön catchment in Sweden within a hypothesis testing framework See Davies et al., HP 2011, HSJ 2012 and tomorrow 1500

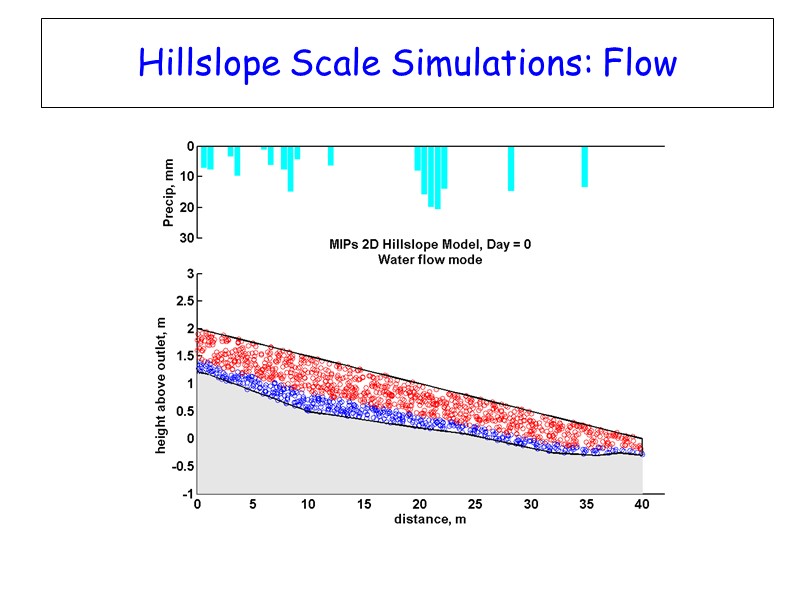

Hillslope Scale Simulations: Flow

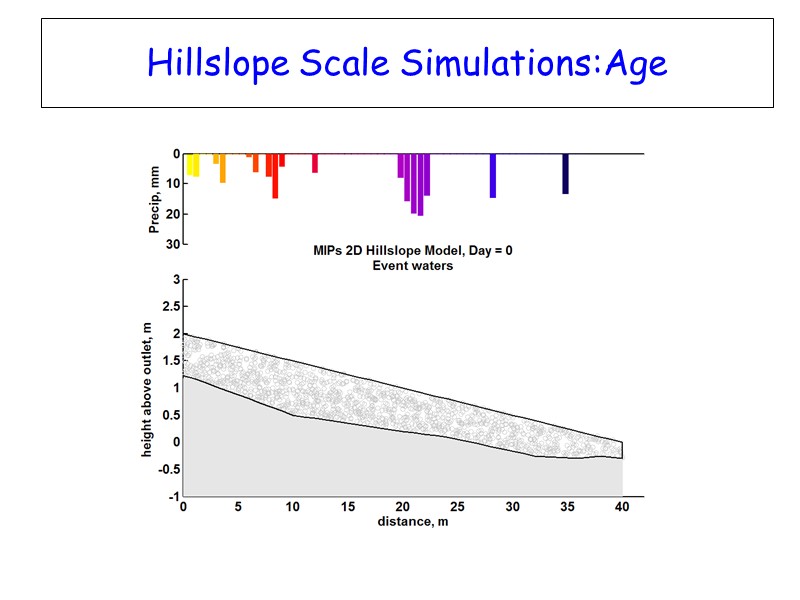

Hillslope Scale Simulations: Age

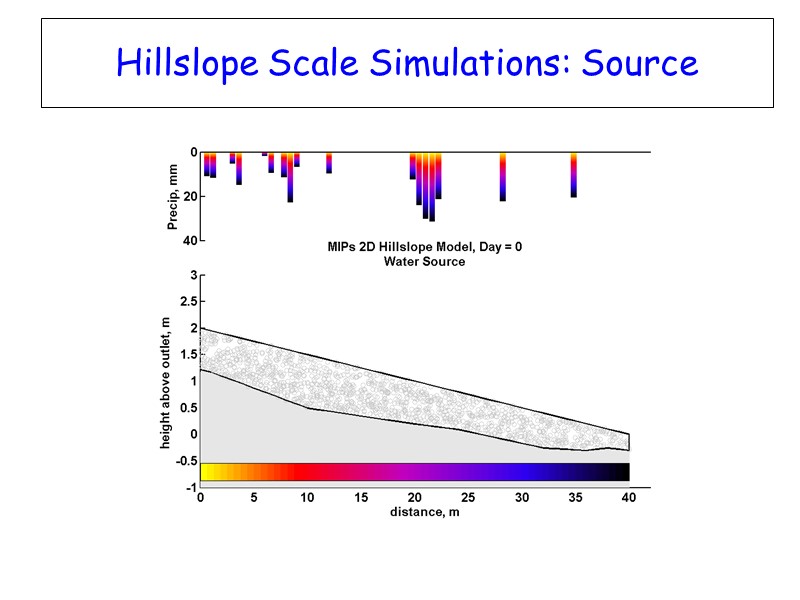

Hillslope Scale Simulations: Source

Lesson 4: Decisions based on model predictions might be better if we allow for uncertainty

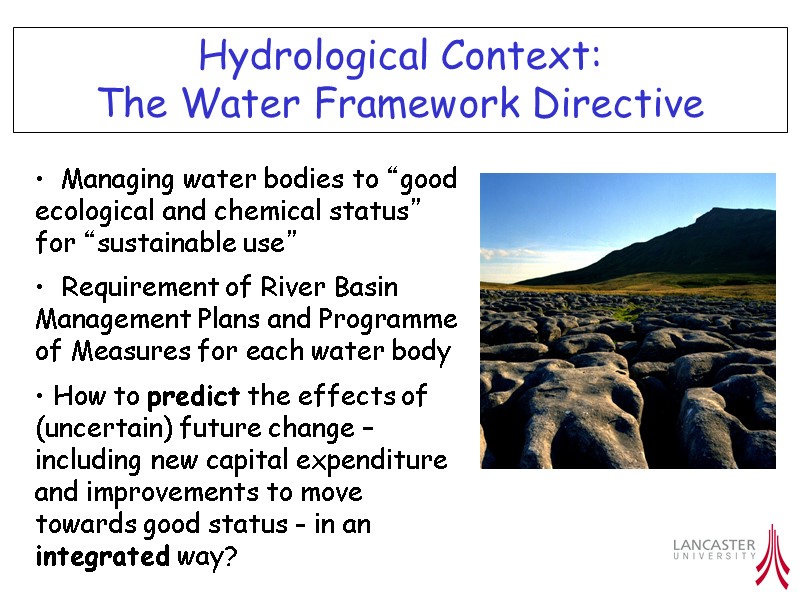

Hydrological Context: The Water Framework Directive

- Managing water bodies to “good ecological and chemical status” for “sustainable use”
- Requirement of River Basin Management Plans and Programme of Measures for each water body
- How to predict the effects of (uncertain) future change, including new capital expenditure and improvements to move towards good status, in an integrated way?

Uncertainty in Environmental Modelling: so why have we ignored uncertainties before?

- Estimating uncertainties requires many more measurements / time / money
- Measurement techniques would be improved (but some things might still be unknowable)
- Model structures would be improved (GCMs, but still difficult to justify sub-grid parameterisations)
- Computer power would increase (many runs needed for uncertainty estimation, but for a nonlinear, distributed, stochastic model even achieving one run within the time scale of a project may still be a challenge)
- Experience would mean that parameter values would become more easily estimated (remains unproven in most domains because of uniqueness of place)

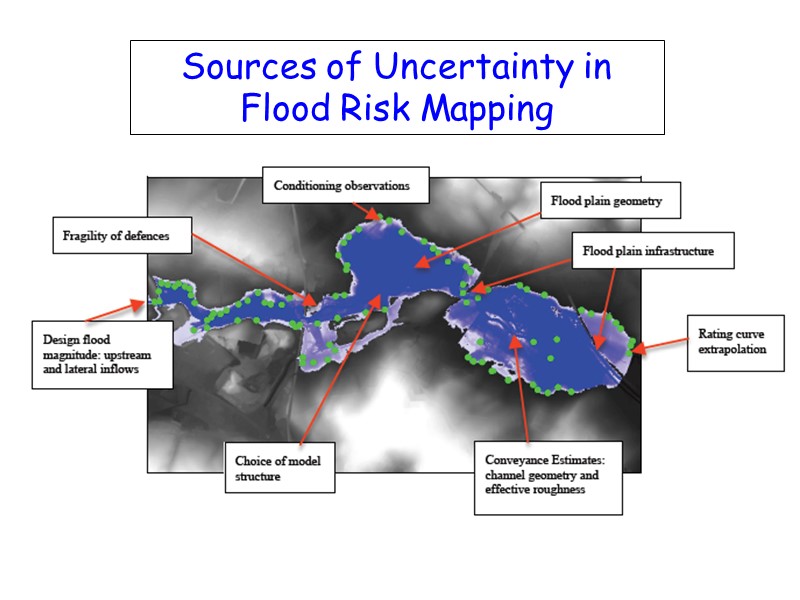

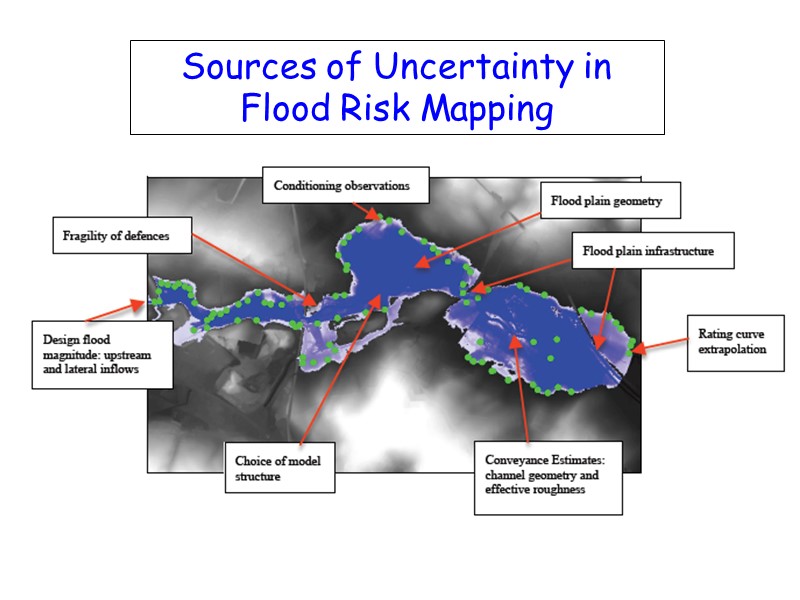

Sources of Uncertainty in Flood Risk Mapping

Mexborough: Summer 2007 Mapped maximum inundation and model predicted flow depths for Summer 2007 floods at Mexborough, Yorkshire using 2D JFLOW model

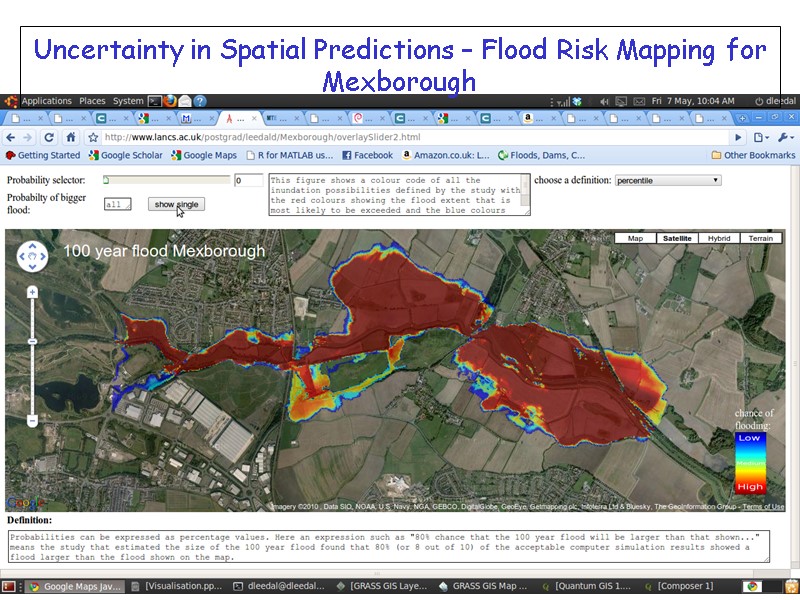

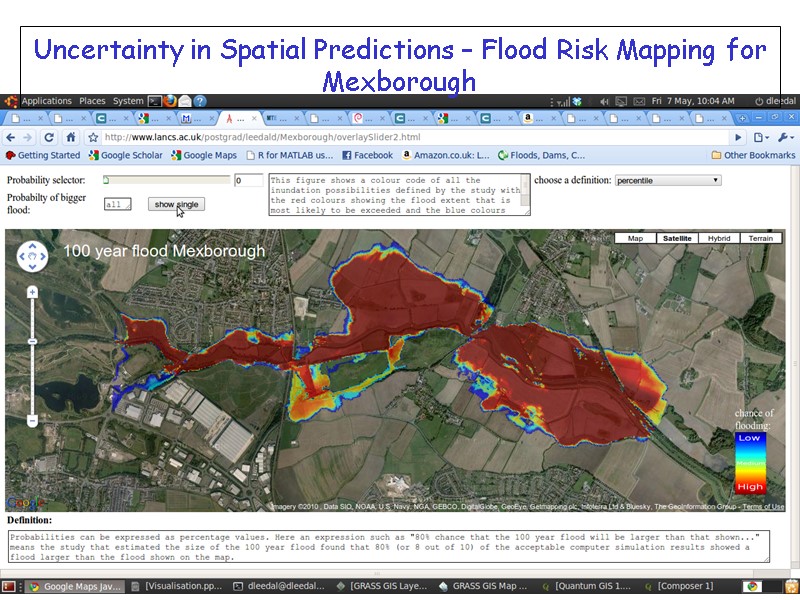

Uncertainty in Spatial Predictions – Flood Risk Mapping for Mexborough

Lesson 5: Lack of knowledge (epistemic error) is often more important than random (aleatory) errors

Types of error and why they are important

- Formal statistical approach to likelihoods (generally) assumes that the (transformed) errors are additive and random (aleatory error), conditional on the model being correct
- But in environmental modelling, many sources of error (in model structure, input data, parameter values, …) are a result of lack of knowledge (epistemic error), which will result in nonstationarity of error characteristics
- In extreme cases, data available for calibration might even be disinformative

Hydrology as one of the inexact sciences

The Water Balance Equation

Q = R – Ea – ΔS

All of the terms are subject to both epistemic and aleatory uncertainties… and there may be other inputs and outputs impossible to measure
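One simple way to see how uncertainty in each term carries through the water balance is to work with bounds rather than probability distributions, which avoids assuming that the errors are random. The sketch below is an assumption-laden illustration: the function name and the annual totals in millimetres are hypothetical, and unmeasured inputs or outputs are not represented.

```python
def water_balance_bounds(rain, evap, d_storage):
    """Bounds on Q = R - Ea - dS.

    Each argument is a (low, high) pair in mm. The lowest discharge pairs
    the lowest rainfall with the highest losses, and vice versa.
    """
    q_low = rain[0] - evap[1] - d_storage[1]
    q_high = rain[1] - evap[0] - d_storage[0]
    return q_low, q_high


# Hypothetical annual totals in mm, for illustration only.
print(water_balance_bounds((900.0, 1100.0), (400.0, 500.0), (-20.0, 20.0)))
# -> (380.0, 720.0)
```

Even modest ranges on each term leave a wide range for the discharge, before any model structure has been introduced.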

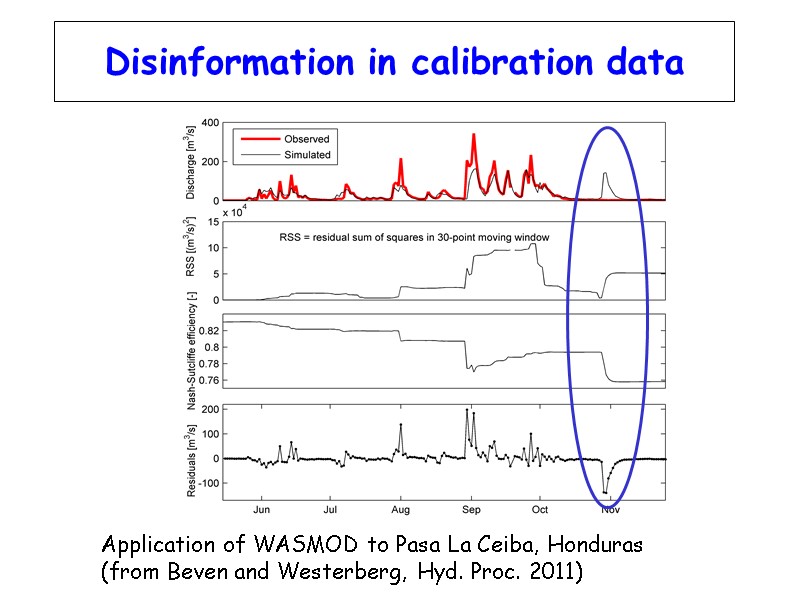

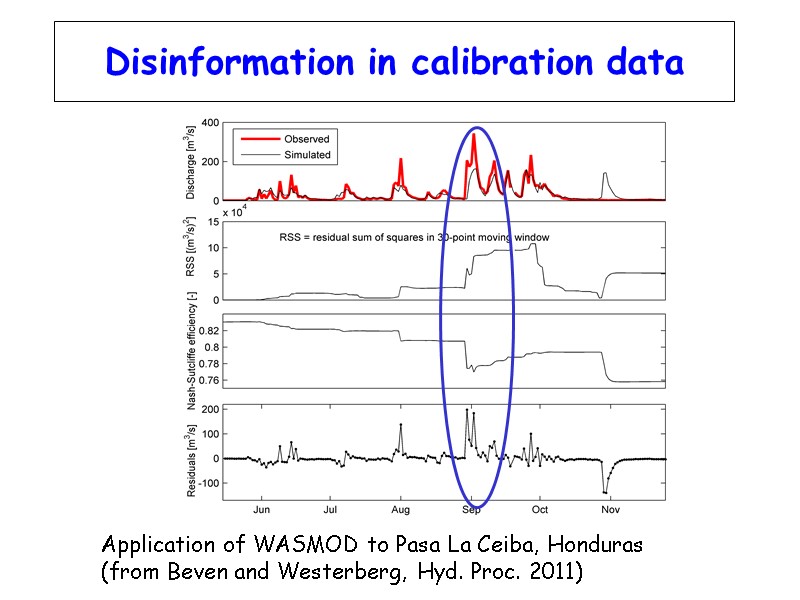

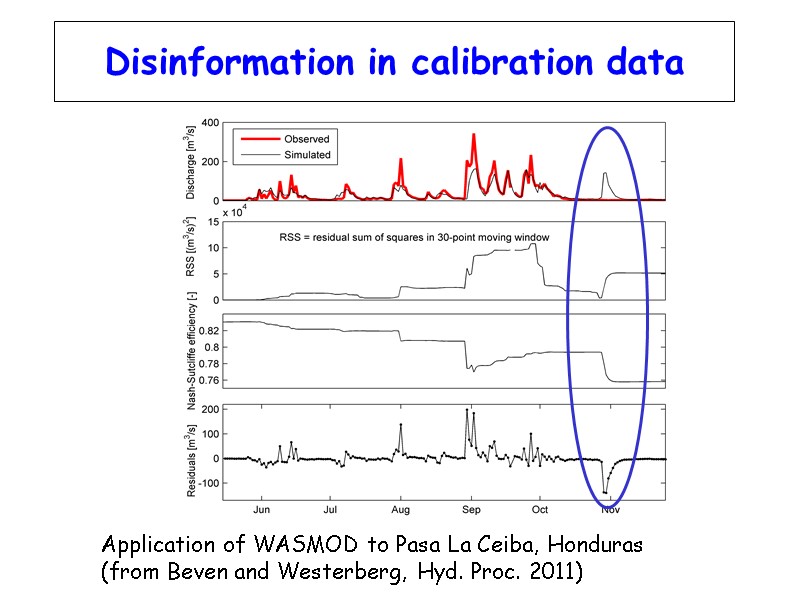

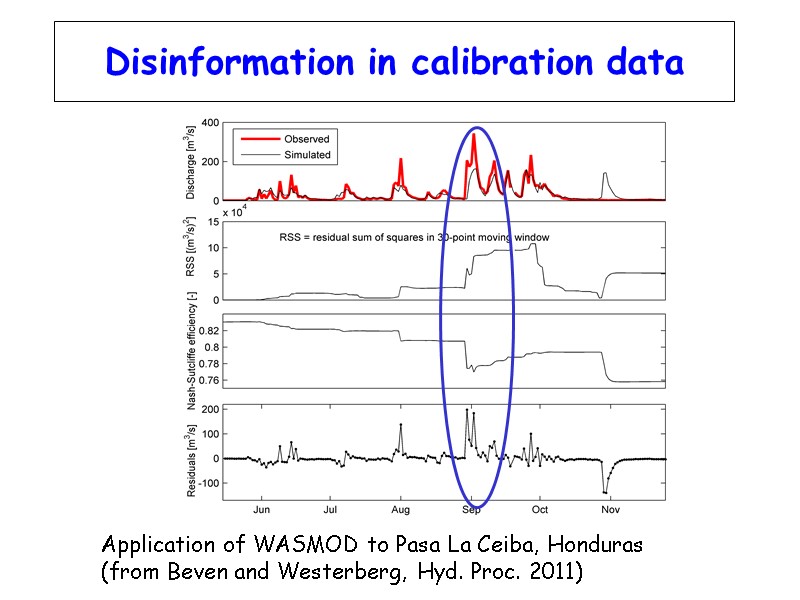

Disinformation in calibration data Application of WASMOD to Paso La Ceiba, Honduras (from Beven and Westerberg, Hyd. Proc. 2011)

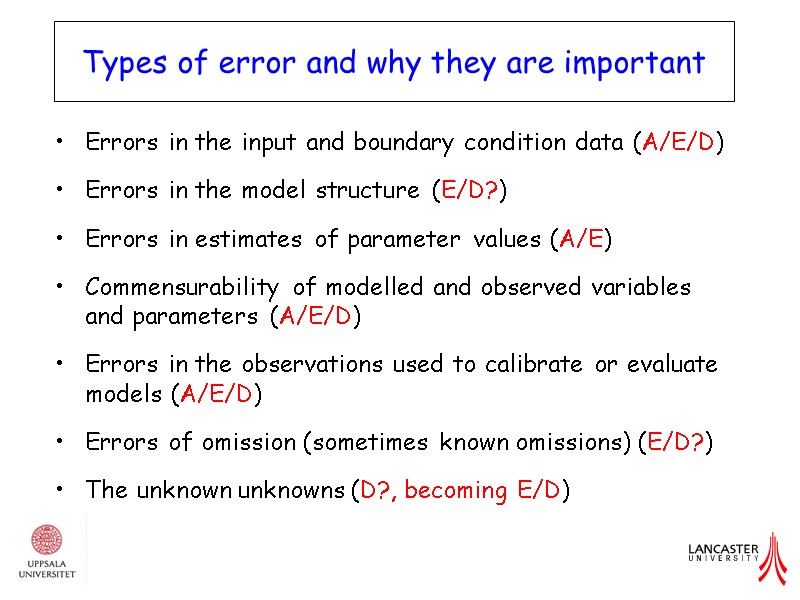

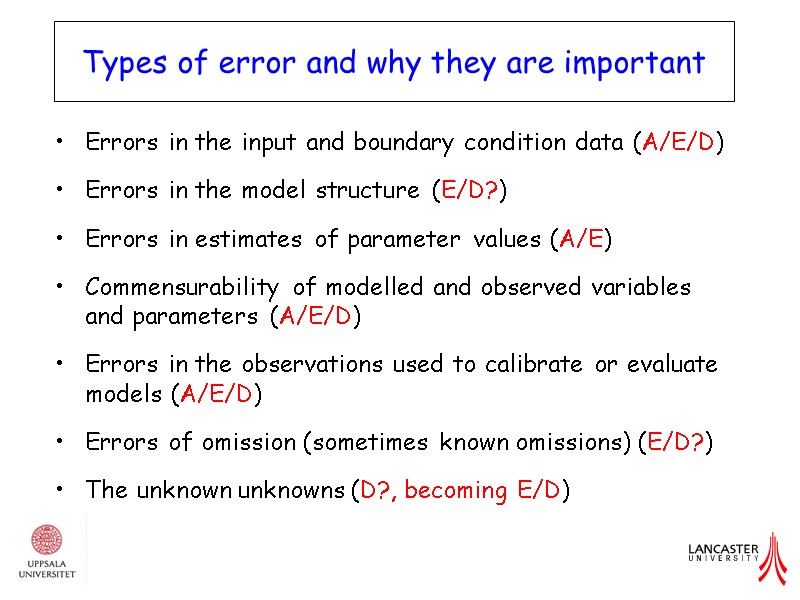

Types of error and why they are important

(A = aleatory, E = epistemic, D = disinformative)

- Errors in the input and boundary condition data (A/E/D)
- Errors in the model structure (E/D?)
- Errors in estimates of parameter values (A/E)
- Commensurability of modelled and observed variables and parameters (A/E/D)
- Errors in the observations used to calibrate or evaluate models (A/E/D)
- Errors of omission (sometimes known omissions) (E/D?)
- The unknown unknowns (D?, becoming E/D)

Lesson 6: Statistical likelihoods (based on aleatory assumptions) will often over-condition model fitting when epistemic errors are important

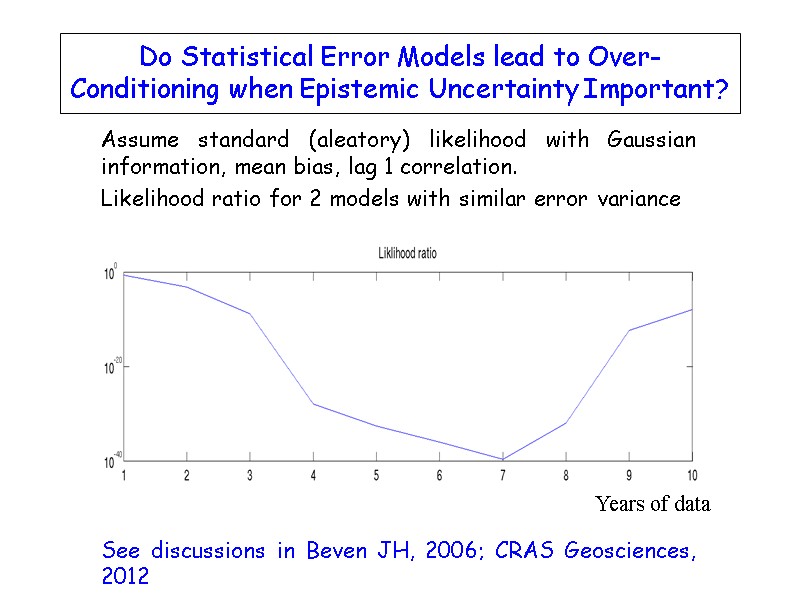

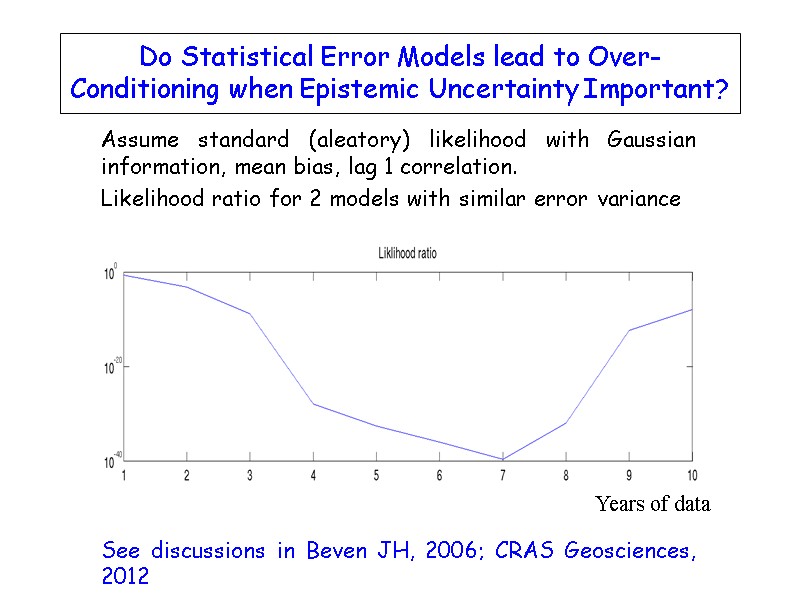

Do Statistical Error Models lead to Over-Conditioning when Epistemic Uncertainty is Important?

- Assume standard (aleatory) likelihood with Gaussian information, mean bias, lag 1 correlation
- Likelihood ratio for 2 models with similar error variance, plotted against years of data
- See discussions in Beven, JH, 2006; CRAS Geosciences, 2012
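The growth of the likelihood ratio with record length can be reproduced with a short calculation. This is a simplified sketch rather than the calculation behind the slide: it assumes independent Gaussian errors with the variance fitted by maximum likelihood, and it ignores the bias and lag 1 correlation terms. Under those assumptions the log-likelihood ratio between two models is N ln(σ2/σ1), where N is the number of observations and σ1, σ2 are the residual standard deviations. The 5% difference in σ and the daily time step are hypothetical values.

```python
import math


def log_likelihood_ratio(sigma_1, sigma_2, n_obs):
    """ln(L1 / L2) for two Gaussian error models with ML-fitted variances.

    With sigma_i^2 = SSE_i / N the exponential terms cancel, leaving
    N * ln(sigma_2 / sigma_1).
    """
    return n_obs * math.log(sigma_2 / sigma_1)


# Two models whose residual standard deviations differ by only 5%.
for years in (1, 2, 5, 10):
    n_obs = 365 * years
    ratio = log_likelihood_ratio(1.0, 1.05, n_obs)
    print(f"{years:2d} years of daily data: ln(L1/L2) = {ratio:.1f}")
```

After a single year the better model is already favoured by a factor of more than 10^7, and the factor keeps growing with every added year, even though the two models are almost indistinguishable in practical terms. Allowing for autocorrelation in the errors would slow this growth but not stop it.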

Revisiting information content of events

What will increase the relative information content of an event?

- Relative accuracy of estimation of the inputs driving the model
- Relative accuracy of estimates of the observations with which model outputs will be compared (including commensurability issues)
- Unusualness of an event (extremes, rarity of initial conditions, …)

Revisiting information content of events

What will decrease the relative information content of an event?

- Repetition (multiple examples of similar conditions)
- Inconsistency of the input and output data
- Relative uncertainty of observations (e.g. highly uncertain overbank flood discharges would reduce information content of an extreme event; discharges for catchments with ill-defined rating curves might be less informative than in catchments with well-defined curves)
- A preceding disinformative / less informative event over the dynamic response time scale of the catchment

Revisiting information content of events

Framework for likelihood assessment

- Identify disinformative events independent of model
- Classify remaining events based on input characteristics
- Calculate storm-based performance measure for each model run, pooled by event class, with discounting of events following disinformative events
- Empirical summary of model errors in each class (informative and disinformative events separately)
- Prediction of new events as assigned to calibration classes
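The first and third steps of the framework can be sketched as a weighting of events before any model is run. The criterion used here is an assumption made for illustration and is not stated on the slide: an event is treated as disinformative when its runoff coefficient (event runoff divided by event rainfall) exceeds 1, meaning more water appears to leave than arrived. The discount factor of 0.5 for the following event is likewise hypothetical.

```python
def event_weights(events, rc_max=1.0, discount=0.5):
    """Assign a weight to each event from (rain_mm, runoff_mm) totals.

    Disinformative events get weight 0, the event directly after a
    disinformative one is discounted, and all others get weight 1.
    """
    weights = []
    previous_disinformative = False
    for rain_mm, runoff_mm in events:
        # Model-independent check: no rain, or more runoff than rain.
        disinformative = rain_mm <= 0.0 or runoff_mm / rain_mm > rc_max
        if disinformative:
            weights.append(0.0)
        elif previous_disinformative:
            weights.append(discount)
        else:
            weights.append(1.0)
        previous_disinformative = disinformative
    return weights


# Hypothetical event totals (rainfall, runoff) in mm.
events = [(20.0, 5.0), (10.0, 14.0), (30.0, 9.0), (25.0, 10.0)]
print(event_weights(events))
# -> [1.0, 0.0, 0.5, 1.0]
```

These weights would then multiply the storm-based performance measure of each model run, so that an inconsistent event cannot dominate the evaluation.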

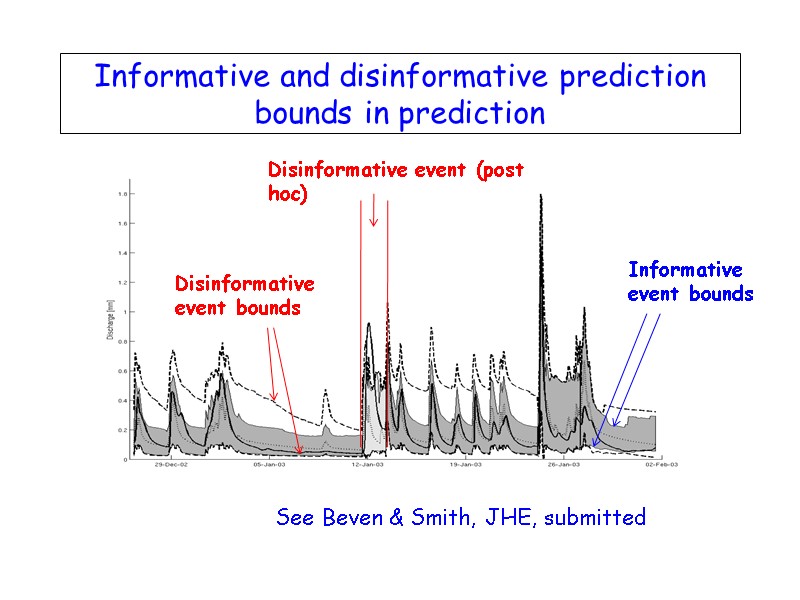

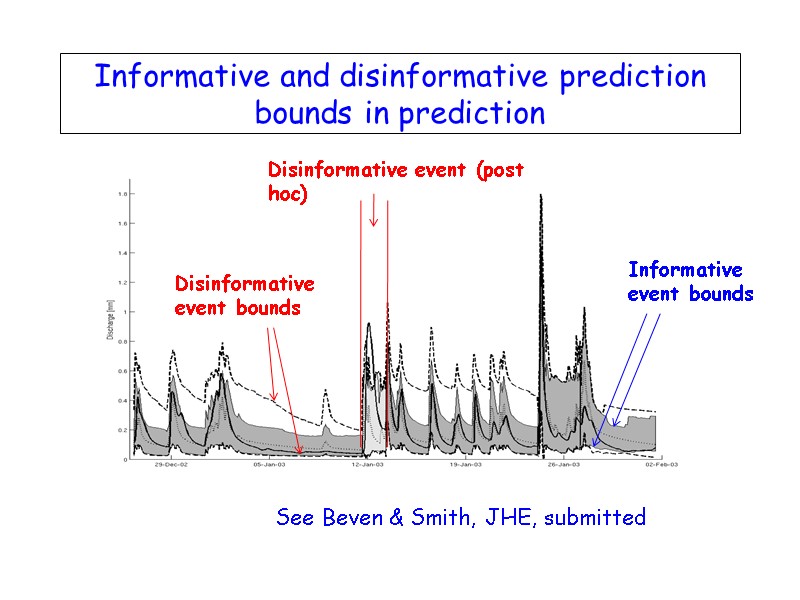

Informative and disinformative prediction bounds in prediction

Figure legend: disinformative event (post hoc); informative event bounds; disinformative event bounds.

See Beven & Smith, JHE, submitted

Summary of the Lessons

1. All models are wrong, some might be useful (for either hypothesis testing or decision making)
2. Many models might be acceptable representations of limited data
3. Better process representations might still be developed
4. Decisions based on model predictions might be better if we allow for uncertainty
5. Lack of knowledge (epistemic error) is often more important than random (aleatory) errors
6. Statistical likelihoods (based on aleatory assumptions) will often over-condition model fitting when epistemic errors are important

Still to be done

- Reducing uncertainty depends more on better observation techniques than better model structures
- Better model structures might still be achieved – need more tests for both flow and transport
- Will still have to accept that models will have limited accuracy – so uncertainty estimation will remain important (including the element of surprise)
- Must look much more closely at the information content of data and formulate likelihoods that are robust to epistemic error
- Need to communicate the meaning of predictions (and uncertainties) to decision makers – it may make a difference to the decision process